This content originally appeared on TimKadlec.com on Web Performance Consulting | TimKadlec.com and was authored by TimKadlec.com on Web Performance Consulting | TimKadlec.com

When I work with companies on improving their performance, we focus more and more on their long-tail of performance data.

We look at histograms instead of any single slice of the pie to get a good composite picture of what the current state of affairs is. And for specific goals and budgets, we turn to the 90th or 95th percentile.

For a long time, the average or median metrics were the default ones our industry zeroed in on, but they provide a distorted view of reality.

Here’s a simplified example (for something more in-depth, Ilya did a fantastic job of breaking this down).

Let’s say we’re looking at five different page load times:

- 2.3 seconds

- 4.5 seconds

- 3.1 seconds

- 2.9 seconds

- 5.4 seconds

To find the average, we add them all up and divide by the length of the data set (in this case, five). So our average load time is 3.64 seconds. You’ll notice that there isn’t a single page load time that matches our average exactly. It’s a representation of the data, but not an exact sample. The average, as Ilya points out, is a myth. It doesn’t exist.

Figuring out the median requires a little less math than calculating the average. We order the data set from fastest to slowest and then grab the middle (median) value of the data set. In this case, that’s 3.1 seconds. The median does, at least, exist. But both it and the average suffer from the same problem: they ignore interesting and essential information.
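To make the arithmetic concrete, here is a minimal sketch using Python's standard `statistics` module and the five load times from the example. The variable names are only illustrative.

```python
from statistics import mean, median

# Page load times from the example above, in seconds.
load_times = [2.3, 4.5, 3.1, 2.9, 5.4]

average = mean(load_times)   # sum of the values divided by how many there are
middle = median(load_times)  # middle value once the list is sorted

print(f"average: {average:.2f}s")
print(f"median:  {middle:.1f}s")

# The median is one of the real samples; the average is not.
print("median was observed: ", middle in load_times)
print("average was observed:", average in load_times)
```

The last two lines show the point about the average being a representation rather than a sample: no page load in the data set actually took that long.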

Why are some users experiencing slower page load times? What went wrong that caused our page to load 45% and 74% slower than the median?
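Those two percentages come from comparing the two slowest loads (4.5 and 5.4 seconds) against the 3.1 second median. A rough sketch of that comparison, where `percent_slower` is a helper name made up for illustration:

```python
from statistics import median

# Page load times from the example above, in seconds.
load_times = [2.3, 4.5, 3.1, 2.9, 5.4]


def percent_slower(value: float, baseline: float) -> float:
    """How much slower `value` is than `baseline`, as a percentage."""
    return (value - baseline) / baseline * 100


baseline = median(load_times)

# Only the loads slower than the median are interesting here.
slower_loads = [t for t in sorted(load_times) if t > baseline]

for t in slower_loads:
    print(f"{t}s is {percent_slower(t, baseline):.0f}% slower than the {baseline}s median")
```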

Focusing on the median or average is the equivalent of walking around with a pair of blinders on. We’re limiting our perspective and, in the process, missing out on so much crucial detail. By definition, when we make improving the median or average our goal, we are focusing on optimizing for only about half of our sessions.

Even worse, it’s often the half that has the lowest potential to have a sizeable impact on business metrics.

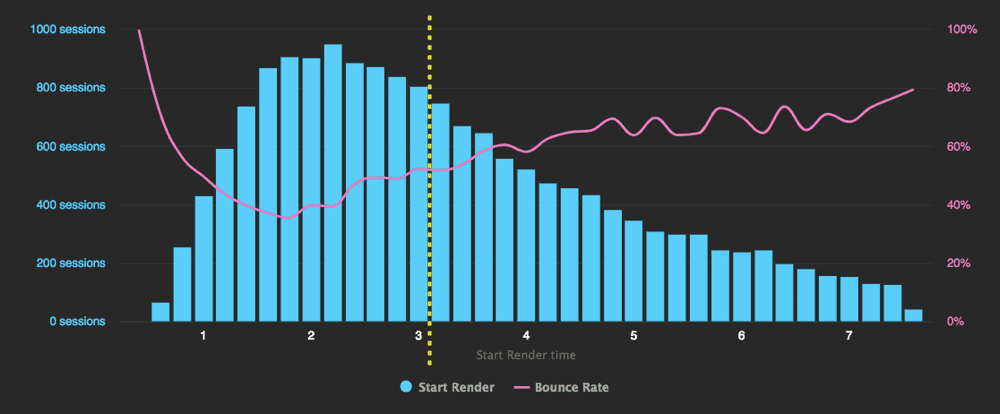

Below is a chart from SpeedCurve showing the relationship between start render time and bounce rate for a company I’m working with. I added a yellow line to indicate where the median metric falls.

This graph shows the relationship between start render time and bounce rate. The yellow dotted line at 3.1 seconds indicates the median start render time.

A few things jump out. The first is just how long that long-tail is. That’s a lot of people running into some pretty slow experiences. We want that long-tail to be a short-tail. The smaller the gap between the median and the 90th percentile, the better. Far more often than not, when we close that gap we also end up moving that median to the left in the process.
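The gap between the median and the 90th percentile is easy to track as a number. The sketch below uses the nearest-rank percentile method, which is only one of several common definitions, and a hypothetical set of start render times; neither comes from the SpeedCurve data.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of the data at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical start render times in seconds (made up for illustration).
start_render = [1.2, 1.8, 2.4, 2.9, 3.1, 3.3, 4.0, 5.6, 8.2, 12.5]

p50 = percentile(start_render, 50)
p90 = percentile(start_render, 90)
gap = p90 - p50

print(f"p50: {p50}s, p90: {p90}s, gap: {gap:.1f}s")
```

Watching that gap over time is one way to tell whether the long-tail is getting shorter. Note that with an even number of samples, nearest-rank picks the lower of the two middle values for the 50th percentile, so it can differ slightly from a median that averages them.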

The second thing that jumps out is the obvious connection between bounce rate and start render time. As start render time increases, so does the bounce rate. In other words, our long-tail is composed of people who wanted to use the site but ended up ditching it because it was just too slow. If we’re going to improve the bounce rate for this site, that long-tail offers loads of potential.

It’s tempting to write these slower experiences off, and often we do. We tend to frame the long-tail of performance as being full of the edge cases and oddities. There’s some truth in that because the long-tail is where you tend to run into interesting challenges and technology you haven’t prioritized.

But it’s also a dangerous way of viewing these experiences.

The long-tail doesn’t invent new issues; it highlights the weaknesses that are lurking in your codebase. These weaknesses are likely to impact all of us at some point or another. That’s the thing about the long-tail: it isn’t a bucket for a subset of users. It’s a gradient of experiences that we’ll all find ourselves in sooner or later. When our device is over-taxed, when our connectivity is spotty, when something goes wrong, we find ourselves pushed into that same long-tail.

Shifting our focus to the long-tail ensures that when things go wrong, people still get a usable experience. By homing in on the 90th percentile (or the 95th, or similar), we ensure those weaknesses don’t get ignored. Our goal is to optimize the performance of our site for the majority of our users, not just a small subset of them.
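One way to turn a percentile goal into something checkable is to ask whether a given share of sessions comes in at or under a budget. This is a sketch only: the `meets_budget` name, the sample values, and the budgets are all hypothetical.

```python
def meets_budget(samples, budget_seconds, pct):
    """True if at least pct% of the samples are at or under the budget."""
    if not samples:
        raise ValueError("need at least one sample")
    within = sum(1 for s in samples if s <= budget_seconds)
    # Integer comparison avoids floating-point rounding at the boundary.
    return within * 100 >= pct * len(samples)


# Hypothetical start render times in seconds (made up for illustration).
start_render = [1.2, 1.8, 2.4, 2.9, 3.1, 3.3, 4.0, 5.6, 8.2, 12.5]

# The median session is comfortably inside a 4 second budget...
print(meets_budget(start_render, 4.0, 50))
# ...but the 90th percentile is not.
print(meets_budget(start_render, 4.0, 90))
```

With this sample, a goal set at the median would pass while three in ten sessions still miss the budget, which is exactly the detail a median-only view hides.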

As we identify and address each underlying weakness that impacts our long-tail of experiences, we inevitably make our site more resilient and performant for everyone.


TimKadlec.com on Web Performance Consulting | TimKadlec.com | Sciencx (2018-06-07T15:31:34+00:00) Prioritizing the Long-Tail of Performance. Retrieved from https://www.scien.cx/2018/06/07/prioritizing-the-long-tail-of-performance/
