This content originally appeared on DEV Community and was authored by Jacob Cohen

Do you ever have those moments where you know you’re thinking faster than the app you’re using? You click something and have time to think “what’s taking so long?” It’s frustrating to say the least, but it’s an all-too-common problem in modern applications. A driving factor of this delay is latency, caused by offloading processing from the app to an external server. More often than not, that external server is a monolithic database residing in a single cloud region. This article will dig into some of the existing architectures that cause this issue and offer ways to resolve it.

Latency Defined

Before we get ahead of ourselves, let’s define “latency.” In a general sense, latency measures the duration between an action and a response. In user-facing applications, that can be narrowed down to the delay between when a user makes a request and when the application responds to that request. As a user, I don’t really care what is causing the delay that results in a poor user experience; I just want it to go away. In a typical cloud application architecture, much of that delay comes from the time it takes requests to travel back and forth between the user’s device and the cloud over the Internet, referred to as Internet latency. There is also processing time to consider: the time it takes to actually execute the request, which is referred to as operational latency. This article will focus on Internet latency with a hint of operational latency. If you’re interested in other types of latency, TechTarget has a good deep dive into specifics of the term.
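To make the two terms concrete, here is a minimal sketch that treats the delay a user feels as the sum of Internet latency and operational latency. The class name and the millisecond figures are hypothetical, chosen only to illustrate the split; they are not measurements.

```python
from dataclasses import dataclass


@dataclass
class RequestTiming:
    """One request, split into the two kinds of latency defined above."""
    network_ms: float     # Internet latency: round trip between device and server
    processing_ms: float  # operational latency: time spent executing the request

    @property
    def total_ms(self) -> float:
        # What the user experiences is the sum of both parts.
        return self.network_ms + self.processing_ms

    @property
    def network_share(self) -> float:
        # Fraction of the total delay spent in transit rather than processing.
        return self.network_ms / self.total_ms


# Hypothetical figures: the same query served from a distant region
# and from a nearby one. Processing time is identical in both cases.
far = RequestTiming(network_ms=250.0, processing_ms=20.0)
near = RequestTiming(network_ms=15.0, processing_ms=20.0)

print(f"far:  {far.total_ms:.0f} ms total, {far.network_share:.0%} in transit")
print(f"near: {near.total_ms:.0f} ms total, {near.network_share:.0%} in transit")
```

With these assumed numbers the database does the same amount of work in both cases, yet the distant request is dominated by time spent on the wire.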

Modern applications have reached a point where end-user performance is critical. However, in practice, most application architectures have not fully caught up to support globally consumed applications. I’ve personally run into cases over and over again where I hear that the application has been distributed around the world, but the database is stuck back in a single cloud geography. We’ve reached a point in technology where static assets are easy to distribute, but persistent data storage is not. Content delivery networks (CDNs) have effectively solved the problem of latency in delivering static content. Movies can be streamed across the globe effortlessly because they are static: they don’t change. I can stream Goldfinger (the best Bond movie, by the way) all over the world because it’s hosted across the globe via a CDN. That’s an incredible feat, but what about the metadata associated with that streaming app? What happens when the app needs to remember where I paused the film, when I rate the film (5/5 of course), or if I want to add it to my list of favorites? That data needs to be recorded in a database somewhere for future access. Based on my experience with modern application architecture, that database is most likely centralized in a single cloud region. Depending on where I am in the world, this can result in excess latency for simple application features like clicking around the streaming app. Metadata has to be queried and returned through the pipes of the Internet, potentially across the world, creating a poor user experience on basic application features even when my movie is streaming crystal clear.

The Problem: A Centralized Database

Why is data storage such a bottleneck? Databases are complex: they need to process large volumes of transactions and stay responsive at all times. The most popular and flexible databases on the market, both SQL and NoSQL, have proven to be incredibly difficult to distribute. As such, application architects typically choose to leave them in a single geographic region and scale vertically to handle increasing usage requirements. This works for a while, but as the application grows, so does the demand on the database, eventually causing ballooning costs and increased latency for users who are not physically near the database’s region. I’ve seen Internet latency range anywhere from 200 milliseconds to a few seconds.

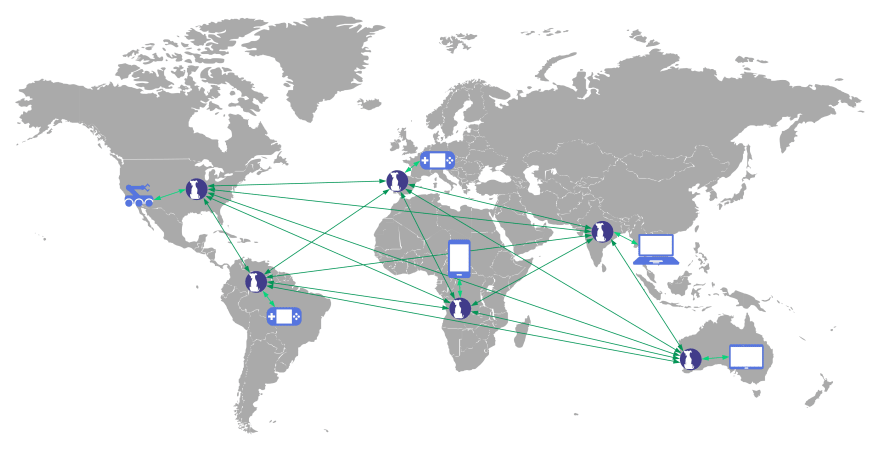

Internet Latency with a Centralized Database

Real World Examples

Before jumping into the solution, let’s take a quick look at some latency-sensitive applications where high latency quickly leads to poor user experience.

Gaming

I don’t pretend to be an expert gamer, but I’ve dabbled in the past. Nothing was more infuriating than my character getting crashed/killed/destroyed because of lag. In my case it didn’t really matter, I’d yell and scream at my TV for a few seconds, like the mature high schooler that I was, and go about my day hoping for redemption (and no lag) in the next match. That said, gaming has turned into a major business with huge competitions, each with millions of dollars in prize money on the line. Imagine losing a million-dollar prize because of a latency issue. Talk about a poor user experience.

Home Internet of Things (IoT)

As soon as I moved into my new house I went a little crazy with the smart home things. I’ve got cameras, alarm sensors, smart plugs, smart speakers, a connected thermostat, and some more gadgets I’m forgetting. They’re pretty cool, but the one thing that drives me absolutely crazy is just how long it takes for the apps to respond. My smart alarm system is not quite as smart as I’d hoped when I installed it. Someone could break into my house and get to the second floor before the alarm detects the break-in. Why? Because the processing takes place in the cloud, somewhere that’s far away from my house. Now, I understand that when it comes to speech processing for my smart speakers, but you’re telling me that a contact sensor needs to go all the way to a major data center just to tell me that the door is open? That’s a blatant latency issue. Keep in mind, the cloud is still an important factor here because that activity needs to be logged and recorded. However, reducing that latency from multiple seconds down to a few milliseconds would certainly give me more peace of mind.

Autonomous Vehicles

It wouldn’t be a proper blog by yours truly if I didn’t sneak in some sort of car reference. Autonomous vehicles are the future. Yes, I’m going to cling to the steering wheel as long as I can, but that’s because I enjoy driving. That said, if the car next to me is autonomous, I want it to be able to detect any mistakes I make as quickly as possible. This is why most of the processing is done on board the vehicle itself. Personally, I see a later phase of vehicles being connected and communicating with each other, which will require some sort of data orchestration between vehicles. This is a place where high latency is not an option. Imagine a connected car needing a few seconds to alert a connected semi-truck behind it that there’s a traffic jam and it should exit first. Those few seconds may be the difference between the truck rerouting or being stuck in traffic. It’s another case where latency is the difference between success and failure.

Warehouse Robotics

Beyond cars, I’m also a big robot guy, so this is another fun example for me. There is all sorts of innovation going on within warehouse robotics and distribution facilities. In many of these cases control decisions are still made in the cloud, which makes sense, because you need serious computing power to control a swarm of robots. Latency can seriously affect output. If a robot has to stop what it’s doing while waiting for a response from the cloud, even just for a second, that can lead to hours of lost productivity across the swarm of robots over the course of a day. Sure, it’s automated, but you might as well have your robots operating as efficiently as possible.
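The "hours of lost productivity" claim is easy to check with back-of-the-envelope arithmetic. The sketch below assumes a hypothetical fleet of 100 robots, each pausing 10 times per hour to wait on the cloud, running around the clock; every one of those figures is an illustrative assumption rather than data from a real warehouse.

```python
def lost_hours_per_day(
    robots: int,
    stalls_per_robot_per_hour: int,
    stall_seconds: float,
    operating_hours: float = 24.0,
) -> float:
    """Total robot-hours spent idle per day while waiting on remote responses."""
    total_stalls = robots * stalls_per_robot_per_hour * operating_hours
    return total_stalls * stall_seconds / 3600.0  # convert seconds to hours


# Hypothetical fleet: 100 robots, 10 cloud round trips each per hour.
cloud = lost_hours_per_day(robots=100, stalls_per_robot_per_hour=10, stall_seconds=1.0)
nearby = lost_hours_per_day(robots=100, stalls_per_robot_per_hour=10, stall_seconds=0.01)

print(f"1 s per stall:   {cloud:.2f} robot-hours lost per day")
print(f"10 ms per stall: {nearby * 60:.1f} robot-minutes lost per day")
```

Under these assumptions a one-second wait adds up to more than six robot-hours per day, while a ten-millisecond wait costs about four robot-minutes.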

The Solution: Decentralization

If you’ve made it this far, I’d imagine this issue resonates with you, so let’s get into the solution. To solve these latency challenges, you need two very important things: distributed data centers and a database technology that can be distributed. Effectively, decentralization. Some of you may have seen this coming based on the title… Let’s dig into each of them separately.

Distributed Data Centers

You can’t geographically distribute any software without the physical hardware to deploy it on. There are all sorts of options for geographically distributing software, but I prefer edge data centers because they bring the computing power physically closer to the end user. That means a shorter distance for network traffic to travel and typically fewer hops, resulting in faster response times based purely on physics. Alternatively, you could choose a multi-cloud approach using a combination of cloud providers and/or private data centers to achieve a more distributed solution free from single cloud provider lock-in (something that will certainly make your CFO happy). Realistically, I see a hybrid of both as the solution, which allows you to capitalize on the best of both worlds.
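The "purely on physics" point can be sketched as a lower bound on round-trip time. Light in optical fiber travels at roughly two thirds of its speed in a vacuum, about 200,000 km/s; that rounded constant and the example distances are assumptions for illustration. Real routes are longer than a straight line and add switching and queuing delay, so measured latency will be higher than this floor.

```python
# Approximate signal speed in optical fiber (about 2/3 the speed of light
# in a vacuum). This is a rounded figure used only for estimation.
FIBER_SPEED_KM_PER_S = 200_000.0


def min_round_trip_ms(distance_km: float) -> float:
    """Best-case round trip over fiber, ignoring hops, routing, and processing."""
    # Out and back is twice the distance; multiply by 1000 to get milliseconds.
    return 2.0 * distance_km * 1000.0 / FIBER_SPEED_KM_PER_S


# Hypothetical distances between a user and the server answering the request.
for label, km in [("edge site, 100 km", 100.0),
                  ("same continent, 4,000 km", 4_000.0),
                  ("other side of the world, 15,000 km", 15_000.0)]:
    print(f"{label}: at least {min_round_trip_ms(km):.0f} ms per round trip")
```

No amount of server tuning can get below this floor, which is why moving the hardware closer to the user is the first half of the solution.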

Distributed Database

This is the tricky part. Like I said earlier, CDN technology is established, but distributing data effectively is a whole different beast. Enter HarperDB, a distributed database solution that can be installed anywhere while presenting a single interface across a range of clouds, with the backend ability to keep data synchronized everywhere. What makes HarperDB different from other, more traditional database solutions? Critically, it’s not a cloud-exclusive product like DynamoDB (exclusive to AWS), Cosmos DB (exclusive to Azure), or Firebase (exclusive to GCP). These are all strong products, but they are exclusive to a single cloud provider and as a result can only be distributed in those respective data center locations. In practice, they’re also difficult to distribute in general, as I discussed earlier. That’s not the case with HarperDB. Instead, HarperDB is cloud agnostic, meaning it can run anywhere, whether you’re installing from npm, running a Docker container, or choosing a managed service. It’s so flexible that I have a HarperDB instance running on my laptop. Once installed, HarperDB’s clustering and replication can be configured to automatically synchronize data between nodes, regardless of where they’re installed, oftentimes faster than an external request would execute. I should mention that HarperDB is fast on its own, which helps to reduce the operational latency that I explained above. Finally, HarperDB provides a simple and elegant single-endpoint API, which provides a consistent interface for consumers.
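As a rough sketch of what a single-endpoint API looks like from the client side, the snippet below builds (but does not send) an HTTP request whose JSON body names the operation to run. The `operation` and `sql` fields follow my understanding of HarperDB's operations API, but treat the exact body shape as an assumption and check the official documentation for your version. The URL, port, credentials, and the `dev.watch_progress` table are hypothetical placeholders.

```python
import base64
import json
import urllib.request


def build_sql_request(base_url: str, username: str, password: str,
                      sql: str) -> urllib.request.Request:
    """Build a POST to a single endpoint; the JSON body selects the operation."""
    # Assumed body shape: one "operation" field plus operation-specific fields.
    body = json.dumps({"operation": "sql", "sql": sql}).encode("utf-8")
    # HTTP Basic authentication header built from the supplied credentials.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        base_url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


# Hypothetical local instance, credentials, and table name.
req = build_sql_request(
    "http://localhost:9925",
    "example_user",
    "example_password",
    "SELECT * FROM dev.watch_progress",
)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Because every node exposes the same endpoint, pointing the client at a closer node is only a change to `base_url`; the request itself stays the same. To actually send it, pass `req` to `urllib.request.urlopen` against a running instance.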

Reduced Internet Latency with Geographically Distributed HarperDB

With the power of HarperDB, we can extend the concept of CDN to geographically distributed data centers, providing end users with a fast and consistent solution and an improved user experience. Gamers can stop throwing their controllers at the TV, autonomous vehicles can route more effectively, home security can be more responsive, and warehouse robots can be more time efficient. Many organizations have come to accept cloud latency as a given, but it doesn’t have to be. With the power of a geographically distributed database, we can empower innovators to create faster and smoother applications by reducing latency caused by long distance routing. Thus, latency is solved!

Jacob Cohen | Sciencx (2021-03-02T15:53:02+00:00) Reducing Data Latency with Geographically Distributed Databases. Retrieved from https://www.scien.cx/2021/03/02/reducing-data-latency-with-geographically-distributed-databases/
