This content originally appeared on DEV Community and was authored by Marko Anastasov

Jupyter notebook files have been one of the fastest-growing content types on GitHub in recent years. They provide a simple interface for iterating on visual tasks, whether you are analyzing datasets or writing code-heavy documentation.

Their popularity comes with problems, though: large numbers of .ipynb files accumulate in repos, many of them in a broken state. As a result, it is difficult for people to re-run, or even understand, your notebooks.

This tutorial describes how you can use the pytest plugin nbmake to automate end-to-end testing of notebooks.

Prerequisites

This guide builds on fundamental skills in testing Python projects which are described in Python Continuous Integration and Deployment From Scratch.

Before proceeding, please ensure you have covered these basics and have your Python 3 toolchain of choice (such as pip + virtualenv) installed in your development environment.

Demo Application

It’s common for Python projects to contain a directory of notebook files (identified by the .ipynb extension), which may contain any of the following:

- Proof-of-concept modeling code

- Example API usage docs

- Lengthy scientific tutorials

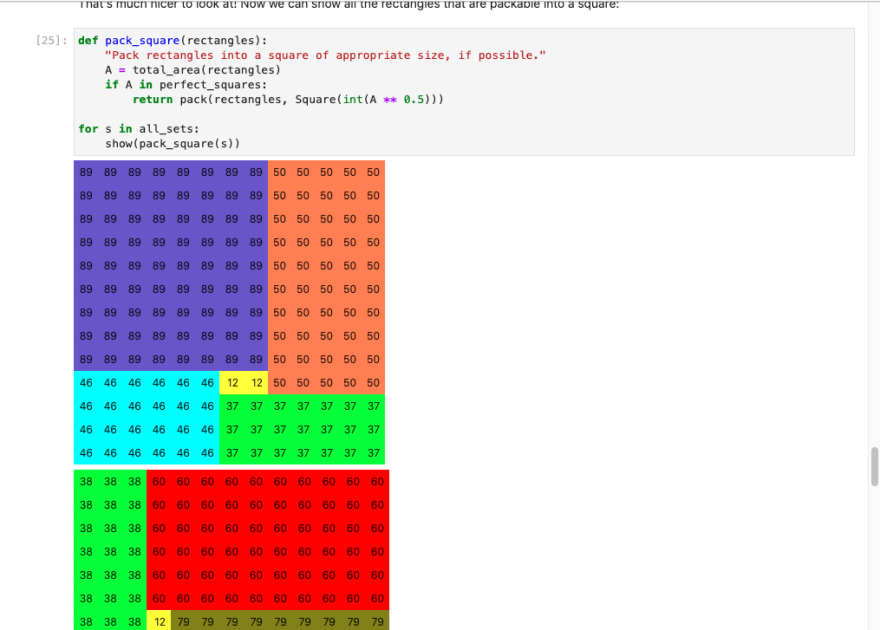

For the purpose of this tutorial, we are going to learn how to automate simple end-to-end tests on some notebooks containing Python exercises. Thanks to pytudes for providing this example material.

Fork and clone the example project on GitHub.

Inside this repo, you will find a directory named ipynb containing notebooks. Install the dependencies listed in the requirements.txt file, optionally creating a virtual environment first.

Before proceeding to the next step, see if you can run these notebooks in your editor of choice.

Testing Notebooks Locally

It’s likely that, up to this point, your test process has involved manually running notebooks through the JupyterLab UI or a similar client. This is both time-consuming and error-prone.

Let’s take a first step toward automating this process by using nbmake in your development environment.

Nbmake is a Python package that serves as a pytest plugin for testing notebooks. It is developed by the author of this guide and is used by well-known scientific organizations such as Dask, Quansight, and Kitware. You can install it using pip or a package manager of your choice:

pip install nbmake==0.5

If you did not already have pytest installed, it will be installed for you as a dependency of nbmake.

Before testing your notebooks for the first time, let’s check that everything is set up correctly by instructing pytest to collect (but not run) all notebook test cases.

➜ pytest --collect-only --nbmake "./ipynb"

================================ test session starts =================================

platform darwin -- Python 3.8.2, pytest-6.2.4, py-1.10.0, pluggy-0.13.1

rootdir: /Users/a/git/alex-treebeard/semaphore-demo-python-jupyter-notebooks

plugins: nbmake-0.5

collected 3 items

<NotebookFile ipynb/Boggle.ipynb>

<NotebookItem >

<NotebookFile ipynb/Cheryl-and-Eve.ipynb>

<NotebookItem >

<NotebookFile ipynb/Differentiation.ipynb>

<NotebookItem >

============================= 3 tests collected in 0.01s =============================

As you can see, pytest has collected some notebook items using the nbmake plugin.

Note: If you receive the message "unrecognized arguments: --nbmake", the nbmake plugin is not installed in the environment pytest is running from. This may happen if your CLI is invoking a pytest binary outside of your current virtual environment. To confirm this, check where your pytest binary is located with which pytest.
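To dig a little deeper than which pytest, you could run a short check with the same Python interpreter you use for your project (for example, inside your activated virtual environment). This is a minimal sketch, not part of nbmake: it reports the running interpreter, the first pytest executable on your PATH, and whether pytest and nbmake can be imported from that interpreter. The helper name plugin_diagnostics is our own.

```python
import importlib.util
import shutil
import sys

def plugin_diagnostics(plugin="nbmake"):
    """Report the running interpreter and whether pytest and a plugin are visible to it."""
    return {
        # The Python interpreter executing this check
        "python": sys.executable,
        # First "pytest" executable on PATH; it may belong to a different environment
        "pytest_on_path": shutil.which("pytest"),
        # Whether this interpreter can import pytest and the plugin package
        "pytest_importable": importlib.util.find_spec("pytest") is not None,
        "plugin_importable": importlib.util.find_spec(plugin) is not None,
    }

# Example usage:
# for key, value in plugin_diagnostics().items():
#     print(f"{key}: {value}")
```

If plugin_importable is False, nbmake is not installed for this interpreter. If pytest_on_path points outside your virtual environment, running python -m pytest --nbmake "./ipynb" makes pytest run under the same interpreter as the check.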

Now that we have validated that nbmake and pytest are working together and can see your notebooks, let’s run them for real.

➜ pytest --nbmake "./ipynb"

================================ test session starts =================================

platform darwin -- Python 3.8.2, pytest-6.2.4, py-1.10.0, pluggy-0.13.1

rootdir: /Users/a/git/alex-treebeard/semaphore-demo-python-jupyter-notebooks

plugins: nbmake-0.5

collected 3 items

ipynb/Boggle.ipynb . [ 33%]

ipynb/Cheryl-and-Eve.ipynb . [ 66%]

ipynb/Differentiation.ipynb . [100%]

================================= 3 passed in 37.65s =================================

Great, they have passed. These are simple notebooks in a demo project, though. It is unlikely that all of your own notebooks will pass on the first attempt, so let’s go through some approaches for getting complex projects running.

Speed Up Execution with pytest-xdist

Large projects may have many notebooks, each taking a long time to install packages, pull data from the network, and perform analyses.

If your tests take more than a few seconds, it’s worth checking how parallelizing the execution affects the runtime. We can do this with another pytest plugin, pytest-xdist, as follows:

First, install the pytest-xdist package. Like nbmake, it is a pytest plugin and will add new command-line options.

pip install pytest-xdist

Then run with the number of worker processes set to auto using the following command:

pytest --nbmake -n=auto "./ipynb"
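How much will -n=auto help? A rough way to reason about it: if notebooks are independent, total runtime can never drop below the duration of your slowest notebook, however many workers you add. The sketch below is a simplified model under that assumption, not pytest-xdist's actual scheduler: it hands the longest remaining notebook to whichever worker is least busy. The durations in the example are made up.

```python
import heapq

def estimate_wall_time(durations, workers):
    """Estimate total runtime when independent notebooks run on `workers` processes.

    Simplified greedy model (longest notebook first, to the least-busy worker);
    pytest-xdist's real scheduling differs in detail.
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    # Min-heap of accumulated busy time per worker; all workers start idle.
    loads = [0.0] * workers
    for duration in sorted(durations, reverse=True):
        least_busy = heapq.heappop(loads)
        heapq.heappush(loads, least_busy + duration)
    return max(loads)

# Hypothetical per-notebook durations in seconds.
durations = [20, 10, 5]
print(estimate_wall_time(durations, workers=1))  # 35.0
print(estimate_wall_time(durations, workers=2))  # 20.0
```

In this made-up case a second worker saves 15 seconds, but a third would not help at all, because the 20-second notebook dominates.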

Ignore Expected Errors in a Notebook

Not all notebooks will be easy to test automatically. Some will contain cells that need user input or will raise uncaught exceptions to illustrate some functionality.

Fortunately, we can put directives in our notebook metadata to tell nbmake to ignore errors and continue executing after they are raised. You may not have used notebook metadata before, so it's a good time to mention that notebooks are just JSON files, despite their .ipynb extension. You can add custom fields to extend their behavior.
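Because a notebook is plain JSON, you can inspect one with nothing more than Python's standard library. The function below is an illustrative helper (summarize_notebook is our own name, not part of nbmake or Jupyter); it assumes the nbformat 4 layout, where the top-level keys include cells and metadata.

```python
import json
from collections import Counter

def summarize_notebook(path):
    """Load an .ipynb file as ordinary JSON and summarize its structure."""
    with open(path, encoding="utf-8") as f:
        nb = json.load(f)
    return {
        "nbformat": nb.get("nbformat"),
        # Top-level metadata fields, e.g. "kernelspec" or a custom "execution" object
        "metadata_keys": sorted(nb.get("metadata", {})),
        # How many cells of each type ("code", "markdown", "raw") the notebook has
        "cell_types": dict(Counter(cell["cell_type"] for cell in nb.get("cells", []))),
    }

# Example usage (the path is illustrative):
# print(summarize_notebook("ipynb/Boggle.ipynb"))
```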

Your development environment may provide a UI for adding metadata to your notebook file, but let's do it in a raw text editor for educational purposes:

- Open your .ipynb file in a simple text editor such as Sublime.

- Locate your kernelspec field inside the notebook's metadata.

- Add an execution object as a sibling to the kernelspec.

This should leave you with something like this:

{

"cells": [ ... ],

"metadata": {

"kernelspec": { ... },

"execution": {

"allow_errors": true,

"timeout": 300

}

}

}

Now you can re-run the notebook to check that the JSON is valid and errors are ignored.
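If you need to apply the same change to many notebooks, editing the JSON by hand gets tedious. Here is a minimal sketch that sets the execution object from the example above using only the standard library; set_execution_options is a hypothetical helper name, and its defaults simply mirror the allow_errors and timeout values shown above. Jupyter normally saves notebooks with one-space indentation, so writing back with indent=1 should keep diffs small, though your files may be formatted differently.

```python
import json

def set_execution_options(path, allow_errors=True, timeout=300):
    """Add or update metadata.execution in a notebook, leaving other fields untouched."""
    with open(path, encoding="utf-8") as f:
        nb = json.load(f)
    # Create the "metadata" and "execution" objects if they don't exist yet
    execution = nb.setdefault("metadata", {}).setdefault("execution", {})
    execution["allow_errors"] = allow_errors
    execution["timeout"] = timeout
    with open(path, "w", encoding="utf-8") as f:
        # One-space indentation, roughly matching how Jupyter saves notebooks
        json.dump(nb, f, indent=1, ensure_ascii=False)
        f.write("\n")

# Example usage (the path is illustrative):
# set_execution_options("ipynb/Boggle.ipynb")
```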

Write Executed Notebooks Back to the Repo

By default, tests are read-only: your .ipynb file remains unchanged on disk. This is usually a good default because you may have the notebook open in your editor while the tests are running.

In some situations, however, you may want to persist the executed notebooks to disk, for example if you need to:

- Debug failing notebooks by viewing outputs

- Create a commit to your repository with notebooks in a reproducible state

- Build executed notebooks into a doc site using nbsphinx or Jupyter Book

We can direct nbmake to persist the executed notebooks to disk using the overwrite flag:

pytest --nbmake --overwrite "./ipynb"
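After running with --overwrite, you might want a quick check that a notebook was actually executed before you commit it. One rough heuristic, sketched below, is to look for code cells without an execution_count. The helper name is hypothetical, and we skip empty code cells on the assumption that the executor leaves them untouched.

```python
import json

def unexecuted_code_cells(path):
    """Return indices of non-empty code cells that have no execution_count."""
    with open(path, encoding="utf-8") as f:
        nb = json.load(f)
    missing = []
    for index, cell in enumerate(nb.get("cells", [])):
        if cell.get("cell_type") != "code":
            continue
        source = cell.get("source", "")
        # In .ipynb files, "source" may be a single string or a list of lines
        if isinstance(source, list):
            source = "".join(source)
        # Empty cells are assumed to be skipped by the executor, so ignore them
        if source.strip() and cell.get("execution_count") is None:
            missing.append(index)
    return missing
```

An empty list suggests the notebook ran end to end; a non-empty list points at cells worth inspecting.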

Exclude Certain Notebooks from Test Runs

Some notebooks may be difficult to test automatically, due to requiring user inputs via stdin or taking a long time to run.

Use pytest’s --ignore flag to deselect them:

pytest --nbmake docs --overwrite --ignore=docs/landing-page.ipynb

Note: this will not work if you are selecting all notebooks using a glob pattern such as "*ipynb", which overrides pytest's ignore flags.
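If you do want pattern-based selection, one workaround is to build the list of notebooks yourself, leaving out the ones you want to skip, before invoking pytest. The sketch below assumes your notebooks live under docs, as in the command above; select_notebooks and pytest_command are illustrative helpers, not part of pytest or nbmake. It also skips Jupyter's .ipynb_checkpoints folders, which would otherwise match *.ipynb.

```python
import fnmatch
import sys
from pathlib import Path

# Jupyter autosave copies live in .ipynb_checkpoints folders; never test them.
CHECKPOINT_PATTERN = "*/.ipynb_checkpoints/*"

def select_notebooks(root, exclude=()):
    """Return POSIX-style paths of notebooks under root, minus excluded patterns."""
    patterns = (CHECKPOINT_PATTERN, *exclude)
    selected = []
    for path in sorted(Path(root).rglob("*.ipynb")):
        candidate = path.as_posix()
        if any(fnmatch.fnmatch(candidate, pattern) for pattern in patterns):
            continue
        selected.append(candidate)
    return selected

def pytest_command(notebooks):
    """Build an argument list that runs pytest with nbmake under this interpreter."""
    return [sys.executable, "-m", "pytest", "--nbmake", *notebooks]

# Example usage:
# import subprocess
# notebooks = select_notebooks("docs", exclude=["docs/landing-page.ipynb"])
# if notebooks:
#     subprocess.run(pytest_command(notebooks), check=False)
```

The guard around subprocess.run matters: with an empty list, pytest would fall back to collecting from the current directory.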

Automate Notebook Tests on Semaphore CI

Using the techniques above, it is possible to create an automated testing process with Semaphore continuous integration (CI). Pragmatism is key: finding a way to test most of your notebooks and ignoring difficult ones is a good first step to improving quality.

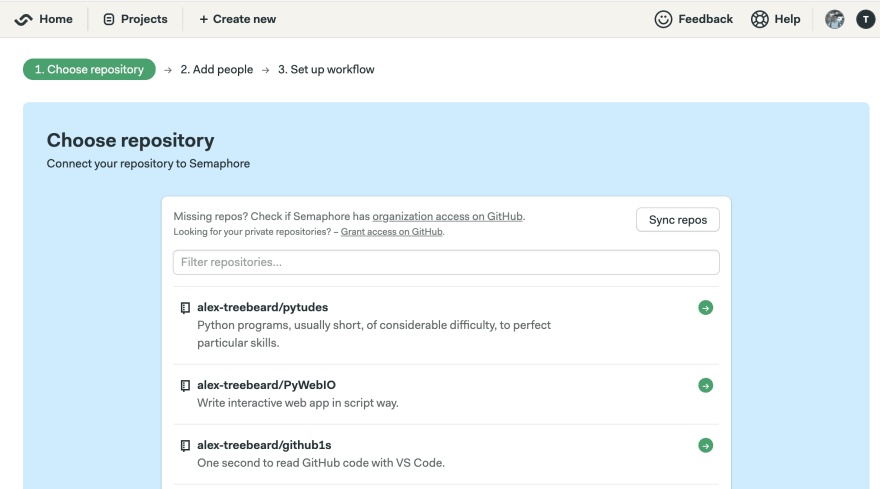

Start by creating a project for the repo containing your notebooks. Select "Choose repository".

Next, connect Semaphore to the repo containing your notebooks.

Skip past "Add People" for now, so we can set up our workflow from scratch (even if you are using the demo repo).

Semaphore gives us some starter configuration, which we are going to customize before running it.

Create a simple single-block workflow with the following details:

- For Name of the Block we will use Test

- For Name of the Job we will use Test Notebooks

- For Commands we will use the following:

checkout

cache restore

pip install -r requirements.txt

pip install nbmake pytest-xdist

cache store

pytest --nbmake -n=auto ./ipynb

- For Prologue use the following to configure Python 3:

sem-version python 3.8

Now we can run the workflow. If it fails, don't worry; we'll address common problems next.

Troubleshooting Some Common Errors

Add Missing Jupyter Kernel to Your CI Environment

If you are using a kernel name other than the default ‘python3’, you will see an error message like this when executing your notebooks in a fresh CI environment: Error - No such kernel: 'mycustomkernel'

Use ipykernel to install your custom kernel if you are using Python.

python -m ipykernel install --user --name mycustomkernel

If you are using another language, such as C++, in your notebooks, you may need a different process to install your kernel.
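Before a CI run, it can help to know which kernels your notebooks expect. Each notebook records this under metadata.kernelspec.name, so a short standard-library script can list them. required_kernels is a hypothetical helper, and "<none>" is simply our placeholder for notebooks without a kernelspec.

```python
import json
from pathlib import Path

def required_kernels(root):
    """Map each kernel name found in notebook metadata to the notebooks using it."""
    root = Path(root)
    kernels = {}
    for path in sorted(root.rglob("*.ipynb")):
        # Skip Jupyter's autosave copies
        if ".ipynb_checkpoints" in path.parts:
            continue
        with open(path, encoding="utf-8") as f:
            nb = json.load(f)
        name = nb.get("metadata", {}).get("kernelspec", {}).get("name", "<none>")
        kernels.setdefault(name, []).append(path.relative_to(root).as_posix())
    return kernels

# Example usage:
# print(required_kernels("ipynb"))
```

Any name other than python3 in the output is likely a kernel you will need to install in CI, for Python kernels with the ipykernel command above.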

Add Missing Secrets to Your CI Environment

In some cases, your notebook will fetch data from APIs that require an API token or an authenticated CLI. Anything that works in your development environment should work on Semaphore, so first check this post to see how to set up secrets.

Once you have installed secrets into Semaphore, you may need to configure the notebook to read the secrets from an environment variable available in CI environments.
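Reading the secret from an environment variable keeps it out of the notebook file itself. A minimal sketch follows. MY_API_TOKEN is a hypothetical variable name, so use whatever name you configured in Semaphore, and get_secret is our own helper. Failing with a clear message makes a missing secret easier to spot in CI logs than an opaque authentication error later on.

```python
import os

def get_secret(name):
    """Read a secret from the environment, failing with a clear message if it is missing."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(
            f"Environment variable {name} is not set; "
            "configure it as a secret in your CI project or export it locally."
        )
    return value

# In a notebook cell (MY_API_TOKEN is a hypothetical variable name):
# api_token = get_secret("MY_API_TOKEN")
```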

Add Missing Dependencies to Your CI Environment

The Python data science stack often requires native libraries to be installed. If you are using conda, they will likely be covered by your normal installation process. This is slightly less likely if you are using standard Python packages.
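One way to catch a missing native library early is a small pre-flight check that runs before the notebooks. The sketch below uses ctypes.util.find_library from the standard library; the library names in the example are placeholders for whatever your notebooks depend on. find_library's search strategy differs between Linux, macOS, and Windows, so treat a miss as a hint rather than proof.

```python
from ctypes.util import find_library

def missing_native_libraries(names):
    """Return the names that ctypes.util.find_library cannot locate on this system."""
    # find_library expects the bare name without "lib" prefix or extension,
    # e.g. "z" for libz
    return [name for name in names if find_library(name) is None]

# Example usage (hypothetical dependency list):
# missing = missing_native_libraries(["z", "hdf5"])
# if missing:
#     raise SystemExit(f"Missing native libraries: {', '.join(missing)}")
```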

If you are struggling to install the libraries you need, have a look at creating a CI Docker image. It will be easier to test locally and more stable over time than Semaphore’s default environments.

Please remember the advice on pragmatism; you can often achieve 90% of the value in 10% of the time. This may involve tradeoffs like ignoring notebooks that run ML models requiring a GPU.
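pytest can also skip notebooks conditionally through a conftest.py file: it reads a module-level collect_ignore_glob list of patterns to leave out of collection. The sketch below assumes a hypothetical layout where GPU notebooks live in ipynb/gpu/, and uses the absence of the nvidia-smi tool as a rough stand-in for "no GPU available"; adjust both to your project.

```python
# conftest.py (placed in the repository root)
import shutil

# Hypothetical layout: notebooks that need a GPU live in ipynb/gpu/.
# pytest reads collect_ignore_glob from conftest.py; relative patterns are
# resolved against this file's directory.
collect_ignore_glob = []

# Heuristic: treat a missing nvidia-smi executable as "no GPU available".
if shutil.which("nvidia-smi") is None:
    collect_ignore_glob.append("ipynb/gpu/*.ipynb")
```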

Conclusion

This guide demonstrated how you can automate testing of Jupyter Notebooks so you can maintain their reproducibility.

Notebooks have proven to be an effective tool for constructing technical narratives. Due to their novelty, however, some software teams rule out their usage until clearer processes emerge to test, review, and reuse their contents.

There is still some way to go before notebook technologies fit into software projects seamlessly, but now is a good time to get a head start. You may want to investigate some of the following work items to further improve your operations:

- Use pre-commit and nbstripout to remove bulky notebook output data before committing changes

- Use Jupyter Book to compile your notebooks into a beautiful documentation site

- Use ReviewNB to review notebooks in pull requests.

Thanks for reading, and please share any tips for working with notebooks with us!

This article was contributed by Alex Remedios.


Marko Anastasov | Sciencx (2021-07-08T16:03:06+00:00) How to Test Jupyter Notebooks with Pytest and Nbmake. Retrieved from https://www.scien.cx/2021/07/08/how-to-test-jupyter-notebooks-with-pytest-and-nbmake/
