This content originally appeared on DEV Community and was authored by Swapnil Pawar

When it comes to managed Kubernetes services, Google Kubernetes Engine (GKE) is a great choice if you are looking for a container orchestration platform that offers advanced scalability and configuration flexibility. GKE gives you complete control over every aspect of container orchestration, from networking to storage, to how you set up observability—in addition to supporting stateful application use cases.

However, if your application does not need that level of cluster configuration and monitoring, fully managed Cloud Run might be the right solution for you.

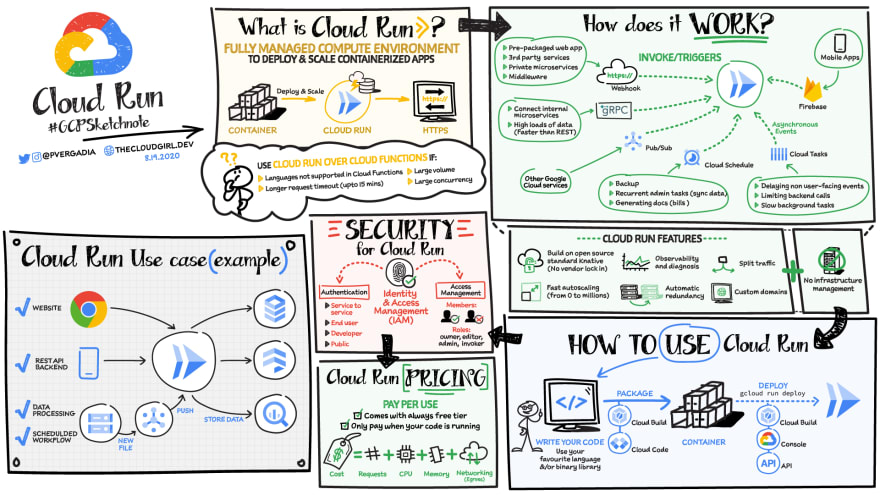

Cloud Run is a fully-managed compute environment for deploying and scaling serverless containerized microservices.

Fully managed Cloud Run is an ideal serverless platform for stateless containerized microservices that don’t require Kubernetes features like namespaces, co-location of containers in pods (sidecars), or node allocation and management.

You might be wondering: why Cloud Run?

Cloud Run is a fully managed compute environment for deploying and scaling serverless HTTP containers without worrying about provisioning machines, configuring clusters, or autoscaling.
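To make "serverless HTTP container" a little more concrete, here is a minimal sketch of the kind of process such a container might run, using only the Python standard library. It assumes the platform passes the listening port in the `PORT` environment variable, with 8080 as a fallback; the handler name, helper names, and response text are made up for illustration.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Stateless handler (hypothetical name): nothing is kept between requests."""

    def do_GET(self):
        body = b"Hello from a container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def resolve_port(environ=os.environ) -> int:
    """Read the listening port from PORT, falling back to 8080 (assumed default)."""
    return int(environ.get("PORT", "8080"))


def main() -> None:
    """Bind to all interfaces so traffic from outside the container can reach us."""
    server = HTTPServer(("0.0.0.0", resolve_port()), HelloHandler)
    server.serve_forever()
```

Calling `main()` starts the server. A container image would typically use that call as its entry point.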

The managed serverless compute platform Cloud Run provides a number of features and benefits:

Easy deployment of microservices. A containerized microservice can be deployed with a single command without requiring any additional service-specific configuration.

Simple and unified developer experience. Each microservice is implemented as a Docker image, Cloud Run’s unit of deployment.

Scalable serverless execution. A microservice deployed into managed Cloud Run scales automatically based on the number of incoming requests, without having to configure or manage a full-fledged Kubernetes cluster. Managed Cloud Run scales to zero if there are no requests, i.e., uses no resources.

Support for code written in any language. Cloud Run is based on containers, so you can write code in any language, using any binary and framework.

No vendor lock-in. Because Cloud Run takes standard OCI containers and implements the standard Knative Serving API, you can easily port over your applications to on-premises or any other cloud environment.

Split traffic. Cloud Run enables you to split traffic between multiple revisions, so you can perform gradual rollouts such as canary deployments or blue/green deployments.

Automatic redundancy. Cloud Run offers automatic redundancy so you don’t have to worry about creating multiple instances for high availability.
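The traffic-splitting idea from the list above can be sketched as a small simulation. This is not how Cloud Run routes requests internally; it is only a toy model of percentage-based routing, and the revision names `stable` and `canary` are hypothetical.

```python
import random
from collections import Counter


def pick_revision(split: dict[str, int], rng: random.Random) -> str:
    """Choose a revision name with probability proportional to its percentage."""
    if sum(split.values()) != 100:
        raise ValueError("traffic percentages must add up to 100")
    names = list(split)
    weights = [split[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


def simulate(split: dict[str, int], requests: int, seed: int = 0) -> Counter:
    """Route a number of simulated requests and count hits per revision."""
    rng = random.Random(seed)
    return Counter(pick_revision(split, rng) for _ in range(requests))


# A canary rollout: most traffic stays on the old revision (hypothetical names).
canary_split = {"stable": 90, "canary": 10}
```

Running `simulate(canary_split, 10_000)` should send roughly nine out of ten requests to `stable`. Moving the weights to `{"stable": 0, "canary": 100}` models the final step of the rollout.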

Cloud Run is available in two configurations:

Fully managed Google Cloud Service.

Cloud Run for Anthos (this option deploys Cloud Run into an Anthos GKE cluster).

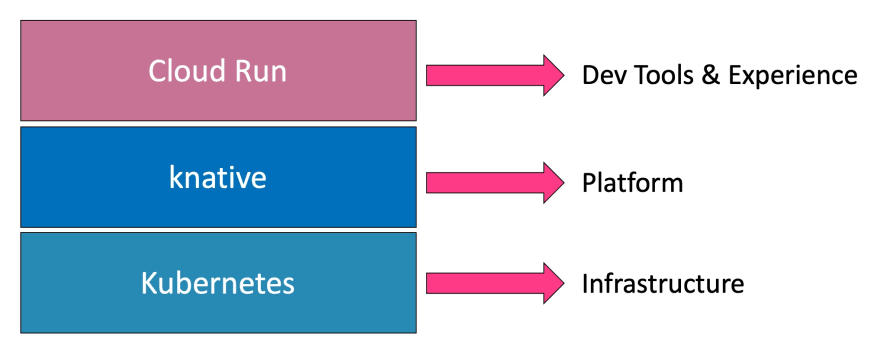

Cloud Run is a layer that Google built on top of Knative to simplify deploying serverless applications on the Google Cloud Platform.

Google is one of the first public cloud providers to deliver a commercial service based on the open-source Knative project. Just as it offered a managed Kubernetes service before any other provider, Google moved fast in exposing Knative to developers through Cloud Run.

Knative has a set of building blocks for building a serverless platform on Kubernetes. But dealing with it directly doesn’t make developers efficient or productive. While it acts as the meta-platform running on the core Kubernetes infrastructure, the developer tooling and workflow are left to the platform providers.

How does Cloud Run work?

A Cloud Run service can be invoked in the following ways:

HTTPS: You can send HTTPS requests to trigger a Cloud Run-hosted service. Note that all Cloud Run services have a stable HTTPS URL. Some use cases include:

Custom RESTful web API

Private microservice

HTTP middleware or reverse proxy for your web applications

Prepackaged web application
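For the private microservice case, the caller usually has to prove its identity. A minimal sketch, assuming the caller already holds an identity token and passes it as a bearer token in the `Authorization` header; the function name is illustrative, and obtaining the token is left out.

```python
import urllib.request


def build_private_request(service_url: str, id_token: str) -> urllib.request.Request:
    """Prepare a GET request that carries an identity token as a bearer token."""
    request = urllib.request.Request(service_url, method="GET")
    request.add_header("Authorization", f"Bearer {id_token}")
    return request
```

The prepared request can then be sent with `urllib.request.urlopen(request)`. A public service would not need the extra header.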

gRPC: You can use gRPC to connect Cloud Run services with other services—for example, to provide simple, high-performance communication between internal microservices. gRPC is a good option when you:

Want to communicate between internal microservices

Need to support high data loads (gRPC uses protocol buffers, which are up to seven times faster than REST calls)

Need only a simple service definition and don't want to write a full client library

Want to use streaming gRPCs in your gRPC server to build more responsive applications and APIs

WebSockets: WebSockets applications are supported on Cloud Run with no additional configuration required. Potential use cases include any application that requires a streaming service, such as a chat application.

Trigger from Pub/Sub: You can use Pub/Sub to push messages to the endpoint of your Cloud Run service, where the messages are subsequently delivered to containers as HTTP requests. Possible use cases include:

Transforming data after receiving an event upon a file upload to a Cloud Storage bucket

Processing your Google Cloud operations suite logs with Cloud Run by exporting them to Pub/Sub

Publishing and processing your own custom events from your Cloud Run services
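On the receiving side, a push delivery arrives as an ordinary HTTP POST whose JSON body wraps the message. Here is a rough sketch of unpacking that envelope, assuming the usual shape with a `message` object carrying base64-encoded `data`, optional `attributes`, and a `messageId`. The function name and the returned dictionary layout are my own.

```python
import base64
import json


def decode_push_message(body: bytes) -> dict:
    """Unpack a Pub/Sub push envelope into plain Python values."""
    envelope = json.loads(body)
    message = envelope["message"]
    # The payload is base64-encoded; an absent payload decodes to an empty string.
    data = base64.b64decode(message.get("data", "")).decode("utf-8")
    return {
        "data": data,
        "attributes": message.get("attributes", {}),
        "message_id": message.get("messageId"),
    }
```

A handler would call this on the request body, do its work, and reply with a success status so that the message is acknowledged.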

Running services on a schedule: You can use Cloud Scheduler to securely trigger a Cloud Run service on a schedule. This is similar to using cron jobs.

Possible use cases include:

Performing backups on a regular basis

Performing recurrent administration tasks, such as regenerating a sitemap or deleting old data, content, configurations, synchronizations, or revisions

Generating bills or other documents

Executing asynchronous tasks: You can use Cloud Tasks to securely enqueue a task to be asynchronously processed by a Cloud Run service.

Typical use cases include:

Handling requests through unexpected production incidents

Smoothing traffic spikes by delaying work that is not user-facing

Reducing user response time by delegating slow background operations, such as database updates or batch processing, to be handled by another service

Limiting the call rate to backend services like databases and third-party APIs
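The spike-smoothing and rate-limiting use cases come down to simple arithmetic. The sketch below is a deliberately simplified model of a queue with a fixed maximum dispatch rate, not Cloud Tasks' actual scheduling logic. The function name and parameters are hypothetical.

```python
def dispatch_times(task_count: int, max_per_second: float) -> list[float]:
    """Seconds after the spike at which each queued task would be dispatched."""
    if max_per_second <= 0:
        raise ValueError("max_per_second must be positive")
    # With a fixed rate, the i-th task leaves the queue i / rate seconds in.
    return [index / max_per_second for index in range(task_count)]
```

For example, four tasks enqueued at once with a limit of two dispatches per second would leave the queue at 0, 0.5, 1, and 1.5 seconds, so the backend never sees more than two calls per second.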

Events from Eventarc: You can trigger Cloud Run with events from more than 60 Google Cloud sources. For example:

Use a Cloud Storage event (via Cloud Audit Logs) to trigger a data processing pipeline

Use a BigQuery event (via Cloud Audit Logs) to initiate downstream processing in Cloud Run each time a job is completed
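These events reach the service as HTTP requests in the CloudEvents format. In the binary content mode of the CloudEvents HTTP binding, the event attributes travel as `ce-`-prefixed headers. Here is a small sketch of collecting them, assuming that mode is in use; the function name is illustrative.

```python
def cloudevent_attributes(headers: dict[str, str]) -> dict[str, str]:
    """Collect CloudEvents attributes from `ce-` prefixed HTTP headers."""
    attributes = {}
    for name, value in headers.items():
        lowered = name.lower()  # header names are case-insensitive
        if lowered.startswith("ce-"):
            attributes[lowered[3:]] = value
    return attributes
```

A service could then branch on `attributes["type"]` to decide whether the event should start a data processing pipeline.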

How is Cloud Run different from Cloud Functions?

Cloud Run and Cloud Functions are both fully managed services that run on Google Cloud’s serverless infrastructure, auto-scale, and handle HTTP requests or events. They do, however, have some important differences:

Cloud Functions lets you deploy snippets of code (functions) written in a limited set of programming languages, while Cloud Run lets you deploy container images using the programming language of your choice.

Cloud Run also supports the use of any tool or system library from your application; Cloud Functions does not let you use custom executables.

Cloud Run offers a longer request timeout of up to 60 minutes, while with Cloud Functions the request timeout can be set no higher than 9 minutes.

Cloud Functions only sends one request at a time to each function instance, while by default Cloud Run is configured to send multiple concurrent requests to each container instance. This helps improve latency and reduce costs if you're expecting large volumes of traffic.
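A back-of-the-envelope calculation shows why concurrency matters for cost. The sketch below estimates how many instances a steady load would need, assuming requests are spread evenly and ignoring cold starts and CPU limits. The function name and the numbers are illustrative only.

```python
import math


def instances_needed(requests_per_second: float,
                     avg_latency_seconds: float,
                     concurrency: int) -> int:
    """Estimate instance count from load, latency, and per-instance concurrency."""
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    # Average number of requests in flight at any moment (Little's law).
    in_flight = requests_per_second * avg_latency_seconds
    return math.ceil(in_flight / concurrency)


# 100 requests per second at 0.5 s each means 50 requests in flight on average.
one_at_a_time = instances_needed(100, 0.5, concurrency=1)
shared = instances_needed(100, 0.5, concurrency=80)  # 80 is an example value
```

With one request per instance, this model needs 50 instances. With 80 concurrent requests per instance, a single instance can absorb the same load.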

If you enjoyed this article, you might also like:

3 cool Cloud Run features that developers love—and that you will too | Google Cloud Blog

Cloud Run: Container to production in seconds | Google Cloud

Swapnil Pawar | Sciencx (2021-09-01T13:34:26+00:00) Google Cloud Run Combines Serverless with Containers. Retrieved from https://www.scien.cx/2021/09/01/google-cloud-run-combines-serverless-with-containers/