This content originally appeared on DEV Community and was authored by Haytham Mostafa

1. Challenges with using Oracle databases

Amazon started facing a number of challenges with using Oracle databases to scale its services.

1.1 Complex database engineering required to scale

• Hundreds of hours spent each year trying to scale the Oracle databases horizontally.

• Database shards were used to handle additional service throughput and manage growing data volumes, but they increased the database administration workload.

1.2 Complex, expensive, and error-prone database administration

Hundreds of hours were spent each month monitoring database performance, performing upgrades and backups, and patching the operating system for each instance and shard.

1.3 Inefficient and complex hardware provisioning

• The database and infrastructure teams expended substantial time forecasting demand and planning hardware capacity to meet it.

• After forecasting, hundreds of hours were spent purchasing, installing, and testing the hardware.

• Additionally, teams had to maintain a sufficiently large pool of spare infrastructure to fix any hardware issues and perform preventive maintenance.

• These challenges and the high licensing costs were among the compelling reasons for the Amazon consumer and digital business to migrate the persistence layer of all its services to AWS.

2. AWS Services

An overview of the key AWS database services.

2.1 Purpose-built databases

• Amazon expects all its services to be globally available, operate with microsecond to millisecond latency, handle millions of requests per second, operate with near zero downtime, cost only what is needed, and be managed efficiently. AWS offers a range of purpose-built databases to meet these requirements.

Amazon used three key database services to host the persistence layer of its services:

Amazon DynamoDB

Amazon Aurora

Amazon Relational Database Service (Amazon RDS) for MySQL or PostgreSQL

2.2 Other AWS Services used in implementation

• Amazon Simple Storage Service (Amazon S3)

• AWS Database Migration Service

• Amazon Elastic Compute Cloud (Amazon EC2)

• Amazon EMR

• AWS Glue

2.3 Picking the right database

• Each team picked the most appropriate database based on the scale, complexity, and features of its service.

• Business units running services that use relatively static schemas, perform complex table lookups, and experience high service throughputs picked Amazon Aurora.

• Business units using operational data stores that had moderate read and write traffic, and relied on the features of relational databases selected Amazon RDS for PostgreSQL or MySQL.

3. Challenges during migration

The key challenges faced by Amazon during the transformation journey.

3.1 Diverse application architectures inherited

• Amazon has been defined by a culture of decentralized ownership that offered engineers the freedom to make design decisions that would deliver value to their customers. This freedom proliferated a wide range of design patterns and frameworks across teams. Another source of diversity was infrastructure management and its impact on service architectures.

3.2 Distributed and geographically dispersed teams

• Amazon operates in a range of customer business segments in multiple geographies which operate independently.

• Managing the migration program across this distributed workforce posed challenges, including effectively communicating the program vision and mission, driving goal alignment with business and technical leaders across these businesses, defining and setting acceptable yet ambitious goals for each business unit, and dealing with conflicts.

3.3 Interconnected and highly interdependent services

Amazon operates a vast set of microservices that are interconnected and use common databases. Migrating interdependent and interconnected services and their underlying databases required finely coordinated movement between teams.

3.4 Gap in skills

As Amazon engineers used Oracle databases, they developed expertise over the years in operating, maintaining, and optimizing them. Most service teams shared databases that were managed by a shared pool of database engineers and the migration to AWS was a paradigm shift for them.

3.5 Competing initiatives

Lastly, each business unit was grappling with competing initiatives. In certain situations, competing priorities created resource conflicts that required intervention from the senior leadership.

4. People, processes, and tools

The following three sections discuss how three levers were engaged to drive the project forward.

4.1 People

One of the pillars of success was founding the Center of Excellence (CoE).

The CoE was staffed with experienced enterprise program managers.

The leadership team ensured that these program managers had a combination of technical knowledge and program management capabilities.

4.2 Processes and mechanisms

This section elaborates on the processes and mechanisms established by the CoE and their impact on the outcome of the project.

Goal setting and leadership review

It became clear early in the project that the migration would require attention from senior leaders. Review meetings with these leaders were used to highlight systemic risks, recurrent issues, and progress.

Establishing a hub-and-spoke model

It would be arduous to individually track the status of each migration. Therefore, they established a hub-and-spoke model where service teams nominated a team member, typically a technical program manager, who acted as the spoke and the CoE program managers were the hub.

Training and guidance

A key objective for the CoE was to ensure that Amazon engineers were comfortable moving their services to AWS. To achieve this, it was essential to train these teams on open source and AWS native databases, and cloud-based design patterns.

Establishing product feedback cycles with AWS

This feedback mechanism was instrumental in helping AWS rapidly test and release features to support internet scale workloads. This feedback mechanism also enabled AWS to launch product features essential for its other customers operating similar sized workloads.

Establishing positive reinforcement

To ensure that teams make regular progress towards goals, it is important to promote and reinforce positive behaviors, recognize teams, and celebrate their progress. The CoE established multiple mechanisms to achieve this.

Risk management and issue tracking

Enterprise scale projects involving large numbers of teams across geographies are bound to face issues and setbacks.

4.3 Tools

Due to the complexity of the project management process, the CoE decided to invest in tools that would automate the project management and tracking.

5. Common migration patterns and strategies

The following section describes the migration of four systems used in Amazon from Oracle to AWS.

5.1 Migrating to Amazon DynamoDB – FLASH

Overview of FLASH

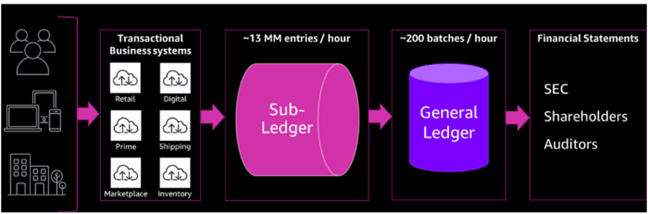

• FLASH, the Financial Ledger and Accounting Systems Hub, is a set of critical services.

• It enables various business entities to post financial transactions to Amazon’s sub-ledger.

• It supports four categories of transactions compliant with Generally Accepted Accounting Principles (GAAP): accounts receivable, accounts payable, remittances, and payments.

• FLASH aggregates these sub-ledger transactions and populates them to Amazon’s general ledger for financial reporting, auditing, and analytics.

>>>>>>>>>>>>>> Data flow diagram of FLASH <<<<<<<<<<<<<<

Challenges with operating FLASH services on Oracle

FLASH is a high-throughput, complex, and critical system at Amazon. It experienced many challenges while operating on Oracle databases.

a. Poor latency

Service latency remained poor despite extensive database optimization.

b. Escalating database costs

Each year, the database hosting costs were growing by at least 10%, and the FLASH team was unable to circumvent the excessive database administration overhead associated with this growth.

c. Difficult to achieve scale

As FLASH used a monolithic Oracle database service, the interdependencies between the various components of the FLASH system were preventing efficient scaling of the system.

Reasons to choose Amazon DynamoDB as the persistence layer

a. Easier to scale

b. Easier change management

c. Speed of transactions

d. Easier database management

Challenges and design considerations during refactoring

The FLASH team faced the following challenges during the re-design of its services on DynamoDB:

a. Time stamping transactions and indexed ordering

After a timestamp was assigned, these transactions were logged in an S3 bucket for durable backup. DynamoDB Streams, together with the Amazon Kinesis Client Library, was used to ensure exactly-once, ordered indexing of records. When enabled, DynamoDB Streams captures a time-ordered sequence of item-level modifications in a DynamoDB table and durably stores the information for up to 24 hours. Applications can access a series of stream records, each of which contains an item change, from a DynamoDB stream in near real time.

After a transaction appears on the DynamoDB stream, it is routed to a Kinesis stream and indexed.
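
The ordered, deduplicated indexing described above can be illustrated with a small in-memory sketch. It makes no AWS calls: records are plain dictionaries, and the field names `tx_id` and `sequence_number` are assumptions chosen for illustration, not the actual FLASH schema or the Kinesis Client Library API.

```python
from dataclasses import dataclass, field


@dataclass
class LedgerIndex:
    """In-memory stand-in for the index that stream records are written to."""
    entries: list = field(default_factory=list)  # indexed records, in order
    seen: set = field(default_factory=set)       # transaction ids already indexed
    last_sequence: int = -1                      # checkpoint: highest sequence applied

    def apply(self, record: dict) -> bool:
        """Index one record; return False if it is a duplicate or a replay."""
        if record["tx_id"] in self.seen or record["sequence_number"] <= self.last_sequence:
            return False
        self.entries.append(record)
        self.seen.add(record["tx_id"])
        self.last_sequence = record["sequence_number"]
        return True


def index_batch(index: LedgerIndex, batch: list) -> int:
    """Apply a batch in sequence order and return how many records were new."""
    applied = 0
    for record in sorted(batch, key=lambda r: r["sequence_number"]):
        if index.apply(record):
            applied += 1
    return applied
```

Redelivering the same batch leaves the index unchanged, which is the property that makes at-least-once delivery behave like exactly-once indexing in this sketch.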

b. Providing data to downstream services

• Enable financial analytics.

• FLASH switched the model to an event-sourcing model where an S3 backup of commit logs was created continuously.

• The use of unstructured and disparate tables was eliminated for analytics and data processing.

• The team created a single source of truth and converged all the data models to the core event log/journal, to ensure deterministic data processing.

• Amazon S3 was used as an audit trail of all changes to the DynamoDB journal table.

• Amazon SNS was used to publish these commit logs in batches for downstream consumption.

• The artifact creation was coordinated using Amazon SQS. The entire system is SOX compliant.

• These data batches were delivered to the general ledger for financial reporting and analysis.
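
A minimal sketch of the event-sourcing idea in the list above, assuming a journal of simple dictionaries: state is never stored directly but is derived by replaying the append-only journal, and the same journal is cut into batches for downstream consumers. The field names `account` and `amount_cents` and the batch size are hypothetical.

```python
from collections import defaultdict


def replay(journal):
    """Rebuild account balances by replaying the append-only journal in order."""
    balances = defaultdict(int)
    for event in journal:
        # Amounts are integer cents to avoid floating-point rounding.
        balances[event["account"]] += event["amount_cents"]
    return dict(balances)


def batches(journal, size):
    """Split the journal into fixed-size batches for downstream consumers."""
    return [journal[i:i + size] for i in range(0, len(journal), size)]
```

Because every consumer derives its view from the same journal, replaying the concatenated batches yields the same balances as replaying the journal itself, which is what makes the processing deterministic.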

c. Archiving historical data

FLASH used a common data model and columnar format for ease of access and migrated historical data to Amazon S3 buckets that are accessible by Amazon Athena. Amazon Athena was ideal because it allows a query-as-you-go model, which works well as this data is queried on average once every two years. Also, because Amazon Athena is serverless, there is no infrastructure to manage.

Performing data backfill

AWS DMS was used to ensure reliable and secure data transfer. It is also SOX compliant from source to target and provided the team with granular insights during the process.

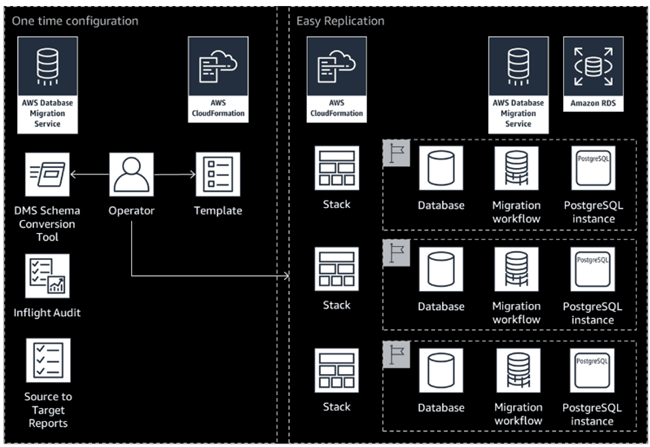

>>>>>>>>>> Lift and shift using AWS DMS and RDS <<<<<<<<<<

Benefits

• Rearchitecting the FLASH system to work on AWS database services improved its performance.

• Although FLASH provisioned more compute and larger storage, the database operating costs have remained flat or reduced despite processing higher throughputs.

• The migration reduced administrative overhead enabling focus on optimizing the application.

• Automatic scaling has also allowed the FLASH team to reduce costs.

5.2 Migration to Amazon DynamoDB – Items and Offers

Overview of Items and Offers

• The Items and Offers system manages three components associated with an item: item information, offer information, and relationship information.

• A key service within the Items and Offers system is the Item Service which updates the item information.

Challenges faced when operating Item Service on Oracle databases

The Item Service team was facing many challenges when operating on Oracle databases.

Challenging to administer partitions

The Item data was partitioned using hashing, and partition maps were used to route requests to the correct partition. These partitioned databases were becoming difficult to scale and manage.
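
To make the routing scheme concrete, here is a small sketch of hash partitioning with a partition map. The bucket count, the SHA-256 hash, and the database names are assumptions for illustration; the source does not describe the actual hashing function or map layout.

```python
import hashlib

NUM_BUCKETS = 1024  # assumed number of logical hash buckets


def bucket_for(item_id: str) -> int:
    """Map an item id to a stable logical bucket using a deterministic hash."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_BUCKETS


def build_partition_map(databases: list) -> dict:
    """Assign every logical bucket to a physical database, round-robin."""
    return {bucket: databases[bucket % len(databases)] for bucket in range(NUM_BUCKETS)}


def route(item_id: str, partition_map: dict) -> str:
    """Return the database that owns the given item."""
    return partition_map[bucket_for(item_id)]
```

In a scheme like this, adding capacity means editing the map and physically moving the affected buckets, which hints at why such partitions become hard to scale and manage.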

Difficult to achieve high availability

To optimize space utilization by the databases, all tables were partitioned and stored across 24 databases.

Reaching scaling limits

Due to the preceding challenges of operating the Items and Offers system on Oracle databases, the team was not able to support the growing service throughputs.

>>>>>>>>>>>>>>> Scale of the Item Service <<<<<<<<<<<<<<<

Reasons for choosing Amazon DynamoDB

Amazon DynamoDB was the best-suited persistence layer for the Item Service (IMS). It offered an ideal combination of features for easily operating a highly available, large-scale distributed system like IMS.

a. Automated database management

b. Automatic scaling

c. Cost effective and secure

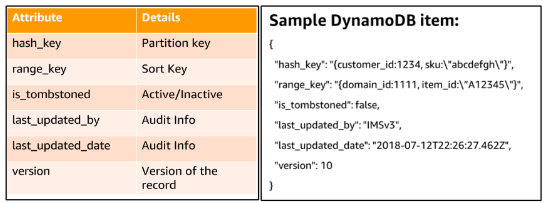

The following figure displays one of the index tables on Oracle that stored SKU to ASIN mappings.

>>>>>>>>>> Table structure of Item Service on Oracle <<<<<<<<<<

The following figure shows the equivalent table represented in DynamoDB. All other Item Service schemas were redesigned using similar principles.

>>>>>>>>> Table structure of Item Service on DynamoDB <<<<<<<<<

Execution

After building the new data model, the next challenge was performing the migration. The Item Service team devised a two-phased approach to achieve the migration: live migration and backfill.

i. Live migration

- Transition the main store from Oracle to DynamoDB without any failures and actively migrate all the data being processed by the application.

- The Item Service team used three stages to achieve the goal:

a. The copy mode: Validate the correctness, scale, and performance of DynamoDB.

b. The compatibility mode: Allowed the Item Service team to pause the migration should issues arise.

c. The move mode: After the move mode, the Item Service team began the backfill phase of migration that would make DynamoDB the single main database and deprecate Oracle.
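
The three modes can be pictured as a switch on a store wrapper. The sketch below is one plausible reading, not the team's implementation: the exact read and write rules per mode are assumptions, and both stores are plain dictionaries standing in for Oracle and DynamoDB.

```python
from enum import Enum


class Mode(Enum):
    COPY = "copy"                    # legacy store is primary; new store receives copies
    COMPATIBILITY = "compatibility"  # new store is primary; legacy store still written
    MOVE = "move"                    # new store only


class MigratingStore:
    def __init__(self, legacy: dict, new: dict, mode: Mode = Mode.COPY):
        self.legacy, self.new, self.mode = legacy, new, mode

    def put(self, key, value):
        """Write to both stores until move mode, then to the new store only."""
        if self.mode is not Mode.MOVE:
            self.legacy[key] = value
        self.new[key] = value

    def get(self, key):
        """Read from the legacy store in copy mode, otherwise from the new store."""
        primary = self.legacy if self.mode is Mode.COPY else self.new
        return primary.get(key)

    def mismatches(self):
        """Keys whose values differ between the stores."""
        keys = self.legacy.keys() | self.new.keys()
        return sorted(k for k in keys if self.legacy.get(k) != self.new.get(k))
```

Keys reported by `mismatches()` that exist only in the legacy store are records the application never rewrote during live migration, which is the gap a backfill has to close.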

ii. Backfill

• AWS DMS was used to backfill records that were not migrated by the application write logic.

• Oracle source tables were partitioned across 24 databases and the destination store on DynamoDB was elastically scalable.

• The migration was scaled by running multiple AWS DMS replication instances per table, each configured with parallel loads.

• The team automated the handling of AWS DMS replication errors by building a library on the AWS DMS SDK.

• Finally, the team fine-tuned the configurations of AWS DMS and Amazon DynamoDB to maximize throughput and minimize cost.
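
As a rough illustration of spreading a backfill over several workers per table, the sketch below assigns each source database to one of a table's workers. It does not use or model AWS DMS configuration; the table names, worker naming scheme, and round-robin assignment are all hypothetical.

```python
from itertools import product


def plan_backfill(databases, tables, instances_per_table):
    """Assign each (table, source database) pair to one replication worker.

    Workers are named "<table>-<n>"; the sources of each table are spread
    round-robin across that table's workers.
    """
    plan = {f"{t}-{n}": [] for t in tables for n in range(instances_per_table)}
    for table, (i, db) in product(tables, enumerate(databases)):
        plan[f"{table}-{i % instances_per_table}"].append(db)
    return plan
```

With 24 source databases and four workers per table, each worker handles six sources, so adding workers shortens the backfill as long as the destination can absorb the write rate.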

>>>>>>>>>>>>>>> Backfill process of IMS <<<<<<<<<<<<<<<

Benefits

After the migration, the availability of the Item Service improved, its performance became consistent, and the operational workload for the team dropped significantly. The team also used the point-in-time recovery feature to simplify backup and restore operations. These benefits came at a lower overall cost than before, thanks to the automatic capacity scaling feature.

5.3 Migrating to Aurora for PostgreSQL – Amazon Fulfillment Technologies (AFT)

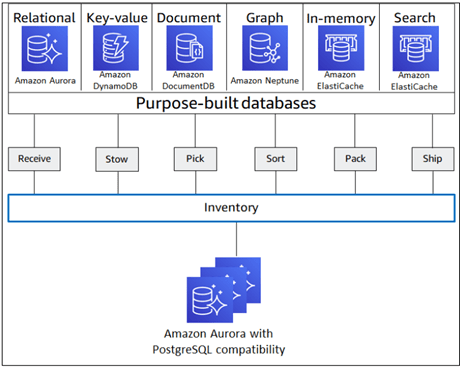

Overview of AFT

The Amazon Fulfillment Technologies (AFT) business unit builds and maintains the dozens of services that facilitate all fulfillment activities. A set of services called the Inventory Management Services facilitates inventory movement and is used by all other major services to perform critical functions within the fulfillment center (FC).

Challenges faced operating AFT on Oracle databases

The AFT team faced many challenges operating its services on Oracle databases in the past.

a. Difficult to scale

All the services were becoming difficult to scale and were facing availability issues during peak throughputs due to both hardware and software limitations.

b. Complex hardware management

Hardware management was also becoming a growing concern due to the custom hardware requirements of these Oracle clusters.

>>>>>>>>>>>>> Databases services used by AFT <<<<<<<<<<<<<

Reasons for choosing Amazon Aurora for PostgreSQL

The AFT team picked Amazon Aurora for three primary reasons.

a. Static schemas and relational lookups.

b. Ease of scaling and feature parity.

c. Automated administration.

Before the migration, the team decided to re-platform the services rather than rearchitect them. Re-platforming accelerated the migration by preserving the existing architecture while minimizing service disruptions.

Migration strategy and challenges:

The migration to Aurora was performed in three phases:

a. Preparation phase

• Separate production and non-production accounts to ensure secure and reliable deployment.

• Aurora offers up to fifteen near real-time read replicas while a central node manages all writes.

• Aurora uses SSL (AES-256) to secure connections between the database and the application.

Important differences to note are:

i. Oracle and PostgreSQL treat time zones differently.

ii. Oracle and PostgreSQL 9.6 have different partitioning strategies and implementations.

b. Migration phase

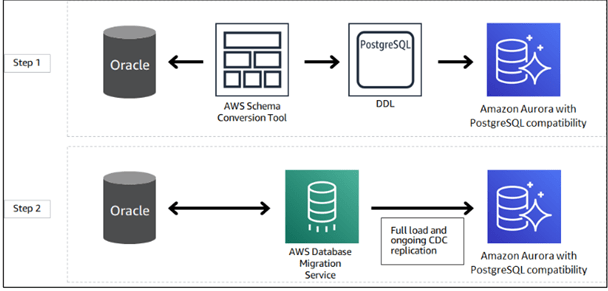

AWS SCT was used to convert the schemas from Oracle to PostgreSQL. Subsequently DMS performed a full load and ongoing Change Data Capture (CDC) replication to move real-time transactional data.

>>> Steps in the migration of schemas using AWS SCT and AWS DMS <<<

The maxFileSize parameter specifies the maximum size (in KB) of any CSV file used to transfer data to PostgreSQL. The team observed that setting maxFileSize to 1.1 GB significantly improved migration speed. Since version 2.x, AWS DMS has allowed this parameter to be increased to 30 GB.
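
Because the parameter is expressed in KB, the 1.1 GB figure has to be converted before it is used. The sketch below does the arithmetic and builds a settings fragment; it assumes binary units (1 GB = 1024 × 1024 KB), and the `MaxFileSize` key mirrors the parameter name, so the exact shape should be checked against the AWS DMS endpoint settings reference.

```python
def gib_to_kb(gib: float) -> int:
    """Convert GiB to KB (1 GiB = 1024 * 1024 KB), rounded to a whole KB."""
    return round(gib * 1024 * 1024)


def postgres_target_settings(max_file_size_gib: float) -> dict:
    """Build a settings fragment for a PostgreSQL target endpoint."""
    return {"MaxFileSize": gib_to_kb(max_file_size_gib)}
```

Under these assumptions, `postgres_target_settings(1.1)` produces a `MaxFileSize` of 1153434 KB.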

c. Post-migration phase

Monitoring the health of the database becomes paramount in this phase. One important activity that must occur in PostgreSQL is vacuuming. Aurora PostgreSQL sets auto-vacuum settings according to instance size by default, but one size does not fit all workloads, so it is important to verify that auto-vacuum is working as expected.
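
One way to check whether auto-vacuum is keeping up is to compare each table's dead tuples with the trigger point PostgreSQL uses: a base threshold plus a scale factor times the table's tuple count. The sketch below assumes rows shaped like `pg_stat_user_tables` (`relname`, `n_live_tup`, `n_dead_tup`), uses `n_live_tup` as an approximation of the tuple count, and defaults to the community PostgreSQL values of 50 and 0.2; the values in effect on a given Aurora instance may differ.

```python
def vacuum_threshold(live_tuples, base_threshold=50, scale_factor=0.2):
    """Dead-tuple count at which autovacuum considers a table due."""
    return base_threshold + scale_factor * live_tuples


def tables_needing_attention(stats, base_threshold=50, scale_factor=0.2):
    """Return names of tables whose dead tuples exceed the autovacuum trigger."""
    return [
        row["relname"]
        for row in stats
        if row["n_dead_tup"] > vacuum_threshold(row["n_live_tup"], base_threshold, scale_factor)
    ]
```

A table that stays on this list across several checks suggests that auto-vacuum is falling behind for that workload and that its per-table settings deserve a closer look.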

Benefits

• After migrating to Amazon Aurora, provisioning additional capacity takes a few simple mouse clicks or API calls, reducing the scaling effort by as much as 95%.

• High availability is another key benefit of Amazon Aurora.

• The business unit is no longer limited by the input/output operations.

5.4 Migrating to Amazon Aurora – buyer fraud detection

Overview

Amazon retail websites operate a set of services called Transaction Risk Management Services (TRMS) to protect brands, sellers, and consumers from transaction fraud by actively detecting and preventing it. The Buyer Fraud Service applies machine learning algorithms over real-time and historical data to detect and prevent fraudulent activity.

Challenges of operating on Oracle

The Buyer Fraud Service team faced three challenges operating its services using on-premises Oracle databases.

a. Complex error-prone database administration

The Buyer Fraud Service business unit shared an Oracle cluster of more than one hundred databases with other fraud detection services at Amazon.

b. Poor latency

To maintain performance at scale, Oracle databases were horizontally partitioned. As application code required new database shards to handle the additional throughput, each shard added incremental workload on the infrastructure in terms of backups, patching, and performance.

c. Complicated hardware provisioning

After capacity planning, the hardware business unit coordinated suppliers, vendors, and Amazon finance business units to purchase the hardware and prepare for installation and testing.

Application design and migration strategy

The Buyer Fraud Service business unit decided to migrate its databases from Oracle to Amazon Aurora. The team chose to re-factor the service to accelerate the migration and minimize service disruption. The migration was accomplished in two phases:

i. Preparation phase

• Amazon Aurora clusters were launched to replicate the existing Oracle databases.

• A shim layer was built to perform simultaneous read and write operations against both database engines.

• The business unit migrated the initial data, and used AWS DMS to establish active replication from Oracle to Aurora.

• Once the migration was complete, AWS DMS was used to perform a row-by-row validation and a sum count to ensure that the replication was accurate.
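
The validation step in the list above can be sketched as a count comparison plus a row-by-row digest comparison. This is a generic illustration over in-memory dictionaries keyed by primary key, not a description of how AWS DMS performs validation internally; the column names in any sample data are hypothetical.

```python
import hashlib


def row_digest(row: dict) -> str:
    """Stable digest of a row, independent of column order."""
    canonical = "|".join(f"{k}={row[k]!r}" for k in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def validate(source: dict, target: dict) -> dict:
    """Compare two tables keyed by primary key; report counts and differing keys."""
    missing = sorted(source.keys() - target.keys())
    extra = sorted(target.keys() - source.keys())
    changed = sorted(
        k for k in source.keys() & target.keys()
        if row_digest(source[k]) != row_digest(target[k])
    )
    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing": missing,
        "extra": extra,
        "changed": changed,
    }
```

Matching counts alone can hide offsetting errors, so the per-row comparison is what actually confirms that the replicated data is identical.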

>>>> Dual write mode of the Buyer Fraud Service using SHIM layer <<<<

ii. Execution phase

Buyer Fraud Service began load testing the Amazon Aurora databases to evaluate read/write latencies and simulate peak throughput events such as Prime Day. Results from these load tests indicated that Amazon Aurora could handle twice the throughput of the legacy infrastructure.

Benefits

• The migration delivered benefits in performance, scalability, availability, hardware management, cloud-based automation, and cost.

• AWS now manages patching, maintenance, backups, and upgrades, and application performance has improved.

• The migration has also lowered the cost of delivering the same performance as before.

• The improved performance of Amazon Aurora has allowed the service to handle high throughput.

• The Buyer Fraud Service was able to scale its largest workloads and support strict latency requirements with no impact to snapshot backups.

• Hardware management has become far easier, with new hardware commissioned in minutes instead of months.

6. Organization-wide benefits

• Services that migrated to DynamoDB saw significant performance improvements, such as a 40% drop in 99th percentile latency. OS patching, database maintenance, and software upgrades are now managed by AWS.

• Additionally, the elastic capacity of preconfigured database hosts on AWS has eliminated administrative overhead to scale by allowing for capacity provisioning.

7. Post-migration operating model

This section discusses key changes in the operating model for service teams and its benefits.

7.1 Distributed ownership of databases

• The migration transformed the operating model to one focused on distributed ownership.

• Individual teams now control every aspect of their infrastructure including capacity provisioning, forecasting and cost allocation.

• Each team also had the option to launch Reserved or On-Demand Instances to optimize costs based on the nature of demand.

• The CoE developed heuristics to identify the optimal ratio of On-Demand to Reserved Instances based on service growth, cyclicality, and price discounts.

• Teams can focus on innovating on behalf of customers.
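
The On-Demand versus Reserved trade-off mentioned in the list above can be sketched as a small cost minimization. This is a hypothetical heuristic, not the CoE's actual method: it assumes an hourly demand profile in instance counts, a flat hourly price for each option, and that reserved instances are paid for whether or not they are used.

```python
def hourly_cost(reserved, demand, on_demand_price, reserved_price):
    """Total cost when `reserved` instances are always paid for and any
    demand above that is served On-Demand."""
    return sum(
        reserved * reserved_price + max(0, d - reserved) * on_demand_price
        for d in demand
    )


def best_reserved_count(demand, on_demand_price, reserved_price):
    """Brute-force the reserved count that minimizes total cost."""
    candidates = range(0, max(demand) + 1)
    return min(candidates, key=lambda r: hourly_cost(r, demand, on_demand_price, reserved_price))
```

The intuition is that reserving one more instance pays off only if demand exceeds the current reserved count in a large enough share of hours, so steady workloads lean toward Reserved Instances and spiky ones toward On-Demand.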

7.2 Career growth

The migration presented an excellent opportunity to advance the career paths of scores of database engineers. These engineers who exclusively managed Oracle databases in data centers were offered new avenues of growth and development in the rapidly growing field of cloud services, NoSQL databases, and open-source databases.

Haytham Mostafa | Sciencx (2021-09-30T08:40:20+00:00) Modernizing Amazon database infrastructure – migrating from Oracle to AWS | AWS White Paper Summary. Retrieved from https://www.scien.cx/2021/09/30/modernizing-amazon-database-infrastructure-migrating-from-oracle-to-aws-aws-white-paper-summary/
