This content originally appeared on TPGi and was authored by Ricky Onsman

Artificial Intelligence (AI) is a term used to describe the principle that features of human intelligence can be simulated by machines to produce outcomes that resemble those of human insight. This is achieved by aggregating, analyzing and comparing massive amounts of data and then interpreting and applying the results mechanically.

In practice, AI is an umbrella term for a set of technologies including:

- Machine Learning, which trains machines how and what to learn in order to make decisions and predictions.

- Neural Networks, which are series of algorithms imitating the way the human brain receives data and makes decisions.

- Deep Learning, which is used to train neural networks, enabling computers to undertake tasks like speech recognition and facial recognition.

- Natural Language Processing, which uses machine learning to recognize human languages, enabling computers to undertake tasks like translation and captioning.

- Data Visualization, which summarizes information gained through machine learning into visual displays of data patterns, trends, and relationships.

Relatively recent and very large increases in both data storage and computing power have made the application of AI practical. That’s particularly evident in the use of AI on the web, where networked computers can handle billions of data points to mechanically produce functionality that resembles what human intelligence produces.

While the web is one environment where both the advantages and shortcomings of using AI are evident, AI also underpins key technologies that produce and refine autonomous vehicles, computer vision, quantum computing, travel concierges, customer support, complex financial transactions, user recommendations, and robotics. It also plays a role in accessibility-enhancing tools such as Be My Eyes, Aira and Seeing AI, as well as automated remediation tools such as overlays.

The focus of this article is on the impact of various types of AI on testing for digital accessibility. As more and more websites make use of AI to enhance user experience, we need to be aware of the role of AI in potentially inhibiting web accessibility for people with disabilities.

In practical terms, that means when we test a website for conformance to the requirements of standards like WCAG and provide remediation guidance, we should be aware of the role of AI in both creating and addressing accessibility issues. On the web, AI can solve accessibility problems and it can create accessibility problems, sometimes simultaneously.

Impact on accessibility testing

Currently, the major issue with AI in relation to web accessibility is that the way specific AI-based functionalities are implemented can exclude people with certain disabilities. For example, speech recognition benefits people with motor disabilities because they don’t have to type in passwords, but it can make web content inaccessible to people who can’t speak clearly because of a disability.

In almost all of those situations, we can apply the same principles that are the basis of WCAG: ensure the functionalities make content perceivable, operable, understandable and robust. Often this means providing alternate ways of perceiving and understanding content and operating related functionality, and ensuring that this is possible across multiple technologies and platforms, including assistive technology.

A deeper problem can be that the data that AI is based on can fail to include a wide enough representation of people with disabilities, creating inbuilt inclusivity issues and biases. If facial recognition functionality is based on an “average” face, it can fail to work for people with disabilities whose facial features are not average, such as people with Down syndrome.

While the use of AI can raise ethical concerns, our focus is on the practical implications of its effect on web accessibility.

In practice, we can usually apply the same principles and methods we use to test all web content.

Implementation

As we go about testing websites for accessibility and providing remediation guidance, here are some AI-driven features to look out for. Over time, we intend to break these sections out into separate articles with more detailed explanations, WCAG references and best practice design patterns.

Alternate text for images

The idea that machine learning can be used to automatically supply images on the web with alternate text without the need for human intervention, sometimes called auto-tagging, is an attractive one. In practice, there are a number of issues, some of which may be resolved as the technology improves and some that may never be resolved.

- it may not distinguish between informative and decorative images

- it may not be specific enough

- it may be slightly to extremely inaccurate

- it may not identify key aspects in context

- it may be subject to identification bias

Given that inadequate, inaccurate or misleading alt text can have dire consequences in settings such as medical, legal and financial reports, product owners cannot rely on auto-generated alt text alone. It must be checked and confirmed as accurate in context, and it must be editable. All of these caveats are even more emphatic when auto-tagging is applied to complex images such as maps, charts and graphs.
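The "checked and confirmed" requirement above can be sketched as a simple review queue. This is a minimal illustration only: the `AuditedImage` shape, its field names and the `needsAltReview` function are assumptions made up for this example, not the output format of any real testing tool.

```typescript
// Hypothetical record for an image found during an audit. The field names are
// assumptions for illustration, not part of any real tool's output.
interface AuditedImage {
  src: string;
  alt: string | null;          // null means the alt attribute is missing
  altSource: "human" | "auto"; // who or what wrote the alt text
  humanReviewed: boolean;      // has a person confirmed it in context?
}

// Return the images whose alt text still needs a human decision:
// either the alt attribute is missing, or the text was machine-generated
// and nobody has reviewed it yet. A human-written empty alt ("") is left
// alone because it is a valid way to mark a decorative image.
function needsAltReview(images: AuditedImage[]): AuditedImage[] {
  return images.filter(
    (img) =>
      img.alt === null || (img.altSource === "auto" && !img.humanReviewed)
  );
}
```

A queue like this does not judge whether the alt text is good; it only ensures that no auto-generated description reaches users without a person having looked at it.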

Captions

Automatically supplying videos with machine-generated captions addresses another issue we frequently encounter on the web, eliminating the need for manual addition of captions that make synchronized video and audio content accessible to people who are deaf or hard of hearing. The features used by providers such as YouTube and Vimeo can extend to providing closed captions for ambient noise and sound effects as well as speech, but the underlying speech and sound recognition technology often produces significant inaccuracies. It can also fail to distinguish between speakers, affecting how the meaning of the audio content is conveyed.

While auto-captions for recorded video can often be edited post-production (and this capability should be available), auto-captioning live video can be particularly tricky as it can’t be edited until collected into a text transcript (another capability that should be available). Context may determine how severely this should be assessed: in some cases, imperfect captions might be acceptable up to a point, in other cases not.

Speech recognition

Speech recognition allows users to exercise voice control over some website functionality such as logging in and site search. It can make web content and functionality available to users who can’t operate a mouse or keyboard, including people with motor impairments and people who are blind or have low vision. It often requires an application to learn the nuances of a specific user’s voice and even then can fail to distinguish certain languages, accents and pronunciations.

Web features that rely on speech recognition may be completely inaccessible to people with speech impediments as a result of disabilities such as dysarthria or deafness. For that reason, it should never be the only way a user can operate key functions such as logins and user authentication – an alternative means must be provided.
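The rule that a biometric method should never be the only way to authenticate can be written down as a small check. The sketch below is an assumption-laden illustration: the `AuthMethod` labels and the choice of which ones count as biometric are hypothetical and would need to match the methods a real site offers.

```typescript
// Hypothetical labels for the sign-in methods a site offers.
type AuthMethod = "voice" | "face" | "password" | "emailLink";

// Methods that depend on a specific physical characteristic of the user.
// This grouping is an assumption made for the example.
const BIOMETRIC: ReadonlySet<AuthMethod> = new Set<AuthMethod>(["voice", "face"]);

// True when at least one offered method does not rely on voice or face,
// so that a user who cannot use those methods still has a way in.
function hasNonBiometricAlternative(offered: AuthMethod[]): boolean {
  return offered.some((method) => !BIOMETRIC.has(method));
}
```

For example, `hasNonBiometricAlternative(["voice", "password"])` is true, while a login that offers only `["voice", "face"]` fails the check even though it provides two methods.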

Facial recognition

Facial recognition is most commonly used in web situations in two ways. It can use a device’s camera to recognize and identify a specific user as a way of user authentication, and it can also be used to identify and describe images of people’s faces. Both applications are subject to the same caveats as speech recognition.

As a method of user authentication, it can fail to correctly identify faces with features it has not learned to recognize. This is, at this stage, a consequence of the limited representation in its databases of people with certain facial features, including people with disabilities such as Down syndrome and other intellectual disabilities, and people whose facial features have been affected by a stroke. Facial recognition should never be the only method of user authentication.

When facial recognition is used to identify faces in images for the purpose of, for example, generating alternate text or image captions, the level of accuracy is often very poor, particularly for faces that are not well represented in its databases. Because of this identification bias, its text output must always be editable so that errors can be corrected.

Image recognition

Machine vision is an AI technology that drives web features such as QR code and credit card scanning. Users may be asked to align a device camera with an image on a web page to identify themselves, or to scan and submit a credit card to complete a financial transaction. Both tasks can be very difficult for users with some kinds of disability. Image recognition should not be relied on as the only method for these kinds of user interaction, and alternate methods should be made available.

Text recognition

Text recognition is used on the web in a number of ways, most prominently in language translation services such as Google Translate. These services can introduce accessibility issues of their own, such as the notification that page translation is available not being keyboard accessible, or not being visible when screen magnification is used.

Text recognition can also be used to analyze large sections of complex text, simplifying the language and shortening the text to make it more readable for people with reading difficulties. The simplified output should be checked to confirm that it correctly reflects the meaning of the original text.

Optical Character Recognition (OCR), which uses text recognition and machine vision to translate images of text into text that can be read and manipulated by humans and machines, is an example of a technology that is rarely acknowledged as AI, yet it is machine learning that has driven its refinement in recognizing a multitude of characters, fonts and languages. JAWS uses OCR to announce text in PDF documents and other text in images.

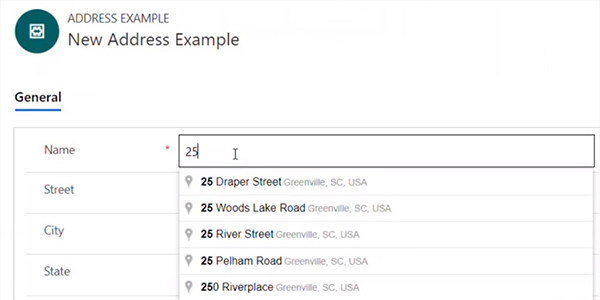

Autocomplete

When form controls use autocomplete functionality, a filtered list of choices is presented to the user based on their initial search string. The user can then choose an item from the list without having to enter the remaining characters. Common accessibility failings of such controls include that the revealed listing of filtered choices is not conveyed to assistive technologies and that items in the list are not reachable when using the keyboard or touch devices.

When users complete forms that include inputs tagged with the autocomplete attribute plus a valid value, the data entered by the user is stored on their local machine and becomes available to use when they next encounter a form that includes inputs tagged the same way. This places less cognitive demand on users who may have memory recall issues as a result of a disability and reduces the risk of incorrect data being entered.
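As a rough illustration of checking for "the autocomplete attribute plus a valid value", the sketch below flags form fields whose `autocomplete` value is missing or not recognized. It makes several assumptions: the `FieldInfo` shape and function name are hypothetical, `KNOWN_TOKENS` is only a small subset of the field names defined in the HTML specification, and multi-token values (for example a `shipping` prefix) are not handled.

```typescript
// A small subset of the autocomplete field names defined by the HTML
// specification. A real check would need the full list.
const KNOWN_TOKENS: ReadonlySet<string> = new Set([
  "name", "given-name", "family-name", "email", "tel",
  "street-address", "postal-code", "country", "username",
  "current-password", "new-password",
]);

// Hypothetical description of a form input collected during an audit.
interface FieldInfo {
  name: string;
  autocomplete?: string; // raw attribute value, if the attribute is present
}

// Return the names of fields whose autocomplete value is missing or is not
// one of the known single tokens above.
function fieldsWithoutKnownToken(fields: FieldInfo[]): string[] {
  return fields
    .filter((f) => !KNOWN_TOKENS.has((f.autocomplete ?? "").trim().toLowerCase()))
    .map((f) => f.name);
}
```

A field flagged here is not automatically a failure, since not every input collects personal data that has a defined token; the list is a prompt for manual review.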

Chatbots

Automated or partially automated web facilities that interact with a user with text or speech are increasingly common on the web as a convenient method of customer service, allowing product owners to present a means of communication that simulates human interaction. Chatbots are prime examples of AI-driven technology that can be made accessible but often is not. Things to look out for include keyboard access (and no keyboard traps), not relying on voice alone, the effects of screen magnification (reflow, resize, text spacing, line height), orientation, color contrast and the appropriate use of landmarks.

Overlays

Accessibility overlays are third-party products that typically use JavaScript to detect accessibility issues and suggest or apply code-based fixes to improve a website’s accessibility. Some fully automated overlays claim their AI-driven technologies are so complete that they can by themselves provide complete accessibility remediation to conform to standards like WCAG without any human intervention. When we test websites that have overlays installed, it is essential to test the sites both with the overlays present and activated, and with them uninstalled or deactivated. Overlays rarely, if ever, are found to provide complete remediation and they can create additional or different accessibility issues, without the product owner being aware.
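Testing with the overlay on and off produces two sets of findings, and comparing them shows what the overlay actually changed. The sketch below assumes each finding has been reduced to a stable string identifier (for example a rule name plus an element selector); that identifier scheme, the `OverlayComparison` shape and the function name are all hypothetical.

```typescript
// Result of comparing findings from two test runs of the same page.
interface OverlayComparison {
  fixedByOverlay: string[];      // present without the overlay, gone with it
  introducedByOverlay: string[]; // absent without the overlay, present with it
  unchanged: string[];           // present in both runs
}

// Compare issue identifiers from a run with the overlay deactivated against
// a run with it activated. Identifiers are assumed to be stable across runs.
function compareOverlayRuns(
  withoutOverlay: string[],
  withOverlay: string[]
): OverlayComparison {
  const before = new Set(withoutOverlay);
  const after = new Set(withOverlay);
  return {
    fixedByOverlay: withoutOverlay.filter((id) => !after.has(id)),
    introducedByOverlay: withOverlay.filter((id) => !before.has(id)),
    unchanged: withoutOverlay.filter((id) => after.has(id)),
  };
}
```

The `introducedByOverlay` and `unchanged` lists are the ones most worth reporting to a product owner, since they are the issues the overlay's claims would otherwise hide.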

Notes

The existing uses of AI-driven technologies on websites will continue to grow and will expand into new areas. Some of the existing uses, such as the recognition tools, will become great enhancements for web accessibility once they are refined and their datasets grow to overcome biases against people with disabilities. Others, such as fully automated overlays, seem less likely to prosper if they don’t overcome their inherent shortcomings.

We can also expect new applications of AI to potentially improve web accessibility, such as automatically creating accessible names for content structures like tables, detecting and explaining form errors, and translating sign language into text. In most cases, however, there will remain the need to apply human intelligence to ensure that web content is truly accessible.

The post Introduction to A.I. and Accessibility Testing appeared first on TPGi.

Ricky Onsman | Sciencx (2021-11-08T17:42:58+00:00) Introduction to A.I. and Accessibility Testing. Retrieved from https://www.scien.cx/2021/11/08/introduction-to-a-i-and-accessibility-testing/
