This content originally appeared on DEV Community and was authored by Arpit Mishra

Ever since the Clubhouse app rose to fame, drop-in audio-only rooms have grown sharply in popularity and have been adopted by several platforms such as Slack, Twitter, and Discord. These rooms are great for hosting Q&A sessions, panel discussions, and a lot more.

This content was originally published - HERE

Earlier this year, Discord introduced Stages, an audio-only channel to engage with your Discord community with separation between speakers and audience. In this blog, we’ll learn how to build a similar platform painlessly with 100ms.

What We’ll Be Building

Using the 100ms React SDK, we’ll build a custom audio room application that mimics these features from Discord Stages:

Allow the user to join as a speaker, listener, or a moderator.

Speakers and moderators will have the permission to mute or unmute themselves.

Listeners will only be able to listen to the conversation, raise their hand to become a speaker, or leave the room.

Moderators will be allowed to mute anyone and change the role of a person to speaker or listener.

By the end of this blog, you can expect to build an application like this with Next.js (a React framework) and 100ms SDK:

The only prerequisite for building this project is a fundamental understanding of Next.js and React hooks. The Next.js documentation is a great place to start reading about how Next.js works, but you can still follow along if you’ve only used React in the past.

Familiarity with Flux-based architecture is a bonus but not a necessity, and no prior knowledge of WebRTC is required. How wonderful is that!

Setting up the Project

Before diving right into the code, create a 100ms account from the 100ms Dashboard to get your token_endpoint and room_id. We’ll be needing these credentials in the later stages of building the application.

Once you’ve created an account, follow the steps given below to create your application and set it up on the 100ms dashboard:

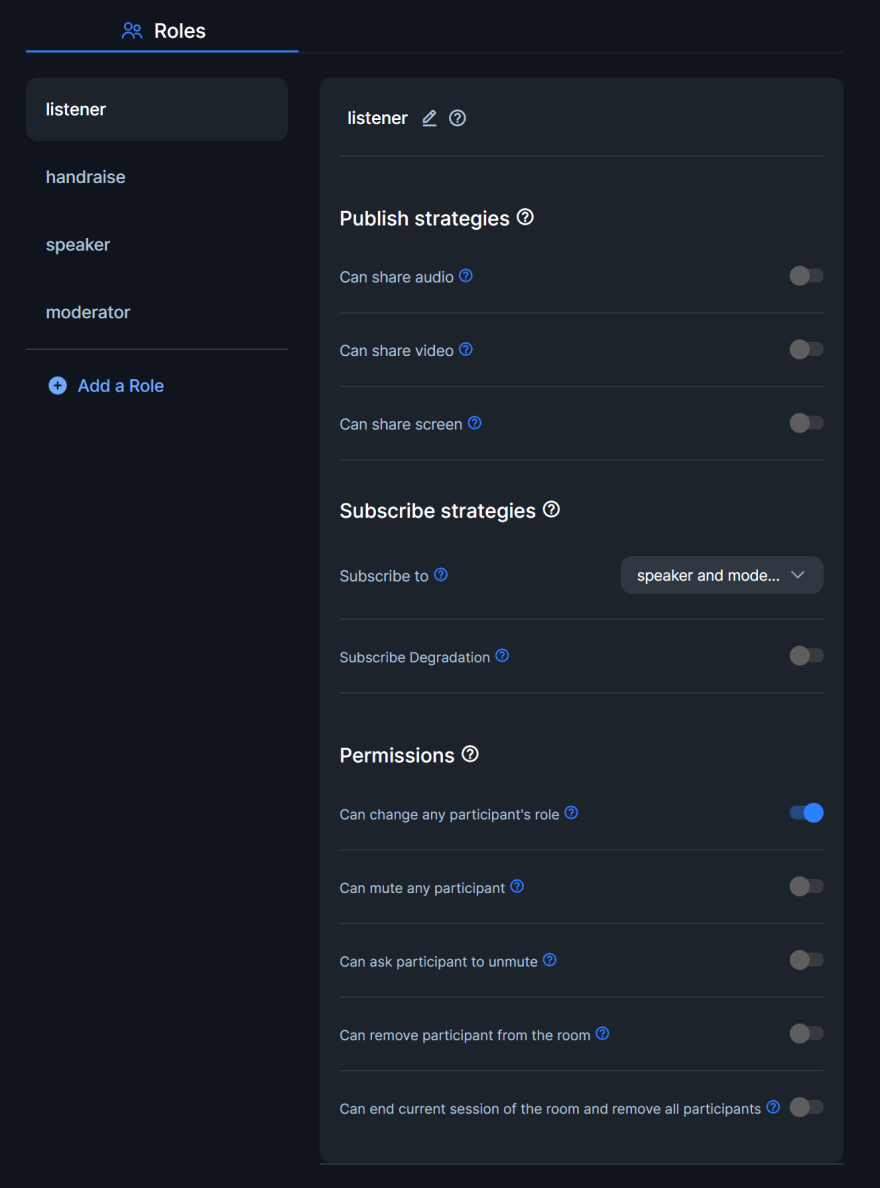

Defining Roles

We’ll have four roles in our application: listener, speaker, handraise, and moderator. Let’s set up permissions for each of these roles, starting with the listener role.

For the listener role, we can turn off all the publish strategies as we don’t want listeners to share their audio, video, or screen. Listeners will still be able to listen to others’ audio.

Inside the permissions section, uncheck all the options except for Can change any participant's role permission.

For the handraise role, we can again turn off all the publish strategies and just keep the Can change any participant's role permission turned on. This permission will allow us to switch the user from the listener role to the handraise role and vice versa, which helps us implement the hand-raise functionality.

When a listener wants to become a speaker, they can click on the hand-raise button that will change their role to handraise. When the user's role is handraise, we'll display a small badge next to their avatar to notify the moderator.

Now for the speaker role, since we’re building an audio-only room, we can just check the Can share audio publish strategy and leave the rest of them unchecked. We can leave all the permissions turned off for the speaker role.

Finally, for the moderator role, we can check the Can share audio publish strategy and move on towards the permissions. In the permissions section, turn on the Can change any participant's role permission and the Can mute any participant permission.

For all the roles, set the subscribe strategies to speaker and moderator. And with that, we’re ready to move on and get the required credentials from the 100ms Dashboard.
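As a quick recap, the dashboard setup described above can be summarized as a plain JavaScript object. This is only an illustrative sketch: `ROLE_CONFIG`, `canPublishAudio`, and `canModerate` are hypothetical names made up for this summary and are not part of the 100ms SDK. The real configuration lives in the dashboard.

```javascript
// Hypothetical summary of the roles configured in the 100ms dashboard.
// The dashboard remains the source of truth; this object only mirrors it.
const ROLE_CONFIG = {
  listener: { publishAudio: false, canChangeRole: true, canMuteOthers: false },
  handraise: { publishAudio: false, canChangeRole: true, canMuteOthers: false },
  speaker: { publishAudio: true, canChangeRole: false, canMuteOthers: false },
  moderator: { publishAudio: true, canChangeRole: true, canMuteOthers: true },
};

// Returns true when the given role is allowed to share audio.
function canPublishAudio(roleName) {
  const role = ROLE_CONFIG[roleName];
  return Boolean(role && role.publishAudio);
}

// Returns true when the role can both mute others and change roles.
function canModerate(roleName) {
  const role = ROLE_CONFIG[roleName];
  return Boolean(role && role.canMuteOthers && role.canChangeRole);
}

console.log(canPublishAudio('speaker'));
console.log(canModerate('listener'));
```

Writing the roles down like this makes it easy to double-check that only speakers and moderators can publish audio before moving on.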

Getting the token_endpoint

Once you’re done creating your custom application and setting up the roles, head on over to the Developers tab to get your token endpoint URL. Keep this URL handy. We’ll store this URL inside an environment variable shortly in the upcoming sections.

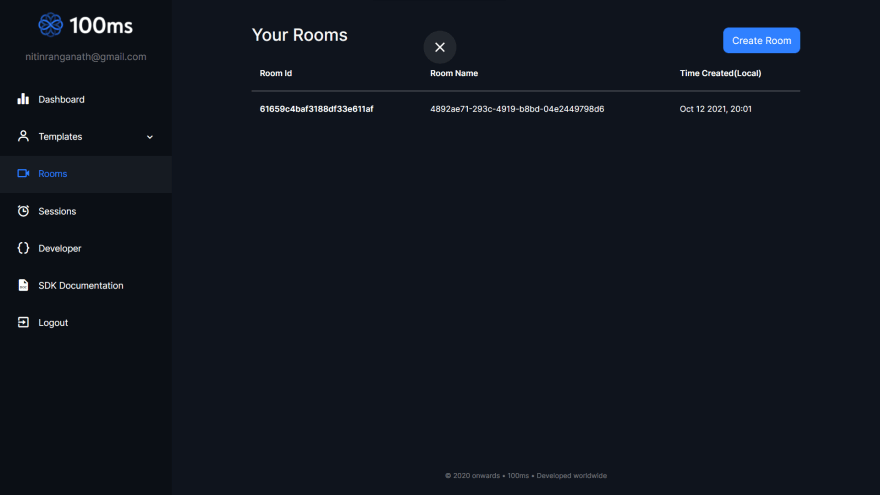

Getting the room_id

To obtain the room ID, head over to the Rooms tab on the 100ms Dashboard. If you don’t have an existing room, you can go ahead and create one to get its ID. Otherwise, copy the room ID of an existing room and paste it somewhere for now.

Understanding the Terminologies

I know you’re excited to start coding, but let’s take a moment to understand the key terminologies associated with the 100ms SDK so that we’re on the same page.

Room — A room is the basic object that 100ms SDKs return on successful connection. This contains references to peers, tracks and everything you need to render a live audio/video app.

Peer — A peer is the object returned by 100ms SDKs that contains all information about a user — name, role, video track etc.

Track — A track represents either the audio or video that a peer is publishing.

Role — A role defines who a peer can see/hear, the quality at which they publish their video, whether they have permissions to publish video/screenshare, mute someone, change someone’s role.
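For orientation, here is a simplified mock of the peer fields this tutorial reads later on (`id`, `name`, `roleName`, and `audioTrack`). The values are made up, `describePeer` is a hypothetical helper, and the real peer object returned by the SDK may carry more fields or differ between SDK versions.

```javascript
// Made-up example data; only the field names come from the code used later in this post.
const examplePeer = {
  id: 'peer-1',
  name: 'Ada',
  roleName: 'speaker',
  audioTrack: 'track-1', // assumed here to be the ID of the peer's audio track
};

// Hypothetical helper that formats a peer for display or logging.
function describePeer(peer) {
  return `${peer.name} (${peer.roleName})`;
}

console.log(describePeer(examplePeer));
```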

An Overview of the Starter Code

To ease the development process, you can grab the starter code with prebuilt components and styling by cloning the template branch of this repo with this command:

git clone -b template https://github.com/itsnitinr/discord-stages-clone-100ms.git

This starter code is built using the Create Next App CLI tool with the Tailwind CSS template. All the dependencies required for building this project, such as the @100mslive/hms-video and @100mslive/hms-video-react SDKs, have already been added to the package.json file.

Therefore, make sure to run npm install or yarn install to install these dependencies locally before moving forward.

Remember the token endpoint URL and room ID we had saved earlier? It’s time to transfer them to an environment variable file. The starter code comes with an .env.local.example file.

Run this command to copy this example env file and create an actual one:

cp .env.local.example .env.local

Now, add the token endpoint URL and room ID to this .env.local file:

# .env.local
TOKEN_ENDPOINT=<YOUR-TOKEN-ENDPOINT-URL>
ROOM_ID=<YOUR-ROOM-ID>
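Since a missing variable only shows up later as a failed token request, it can help to check both values early. The following is a minimal sketch, assuming a hypothetical helper named `readRoomConfig` that is not part of the starter code. It takes an env-like object so it can be tried without a real `.env.local` file.

```javascript
// Hypothetical helper: reads the two variables from an env-like object
// and fails early with a clear message if either one is missing.
function readRoomConfig(env) {
  const missing = ['TOKEN_ENDPOINT', 'ROOM_ID'].filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return { tokenEndpoint: env.TOKEN_ENDPOINT, roomId: env.ROOM_ID };
}

// Usage with a stand-in object; in the app you would pass process.env.
const config = readRoomConfig({
  TOKEN_ENDPOINT: 'https://example.com/', // placeholder, not a real endpoint
  ROOM_ID: 'room-123',
});
console.log(config.roomId);
```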

To start the Next.js development server, run the dev script in this manner:

npm run dev
# or
yarn dev

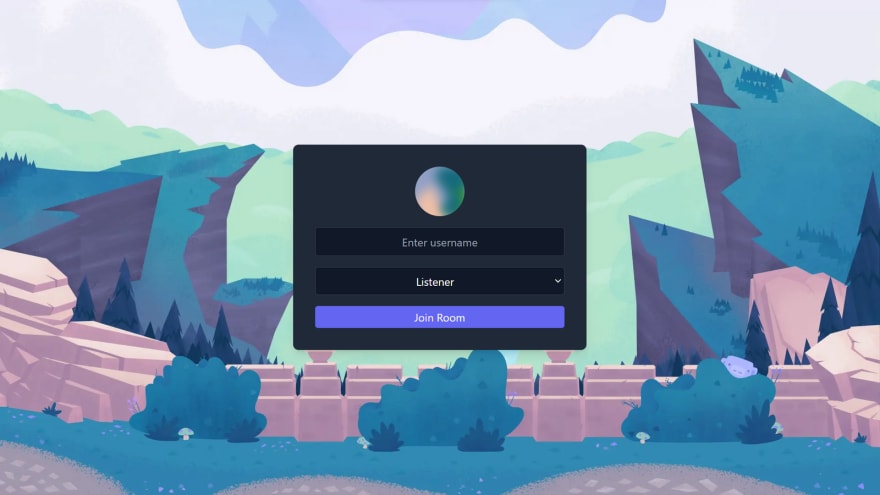

Visit http://localhost:3000 on your browser and you’ll be greeted with this screen if everything goes well:

Fantastic! Let’s start implementing the features one by one in the upcoming sections.

Building the project

Before we can start using the hooks, selectors, or store from the 100ms React SDK, we will need to wrap our entire application with the <HMSRoomProvider /> component from the @100mslive/hms-video-react package.

Here’s what your code should look like once you’ve completed this step:

// pages/index.js
import { HMSRoomProvider } from '@100mslive/hms-video-react';
import Head from 'next/head';
import Join from '../components/Join';
import Room from '../components/Room';

const StagesApp = () => {
  const isConnected = false;
  return isConnected ? <Room /> : <Join />;
};

const App = () => {
  return (
    <HMSRoomProvider>
      <Head>
        <title>Discord Stages Clone</title>
      </Head>
      <StagesApp />
    </HMSRoomProvider>
  );
};

export default App;

Joining a Room

Right now, we’re conditionally rendering either the <Room /> component or the <Join /> component based on the isConnected variable. However, its value has been hardcoded to be false for now.

To check if the user is connected to a room or not, we can use the selectIsConnectedToRoom selector and useHMSStore hook like this:

// pages/index.js
import {
  HMSRoomProvider,
  useHMSStore,
  selectIsConnectedToRoom,
} from '@100mslive/hms-video-react';
import Head from 'next/head';
import Join from '../components/Join';
import Room from '../components/Room';

const StagesApp = () => {
  const isConnected = useHMSStore(selectIsConnectedToRoom);
  return isConnected ? <Room /> : <Join />;
};

const App = () => {
  return (
    <HMSRoomProvider>
      <Head>
        <title>Discord Stages Clone</title>
      </Head>
      <StagesApp />
    </HMSRoomProvider>
  );
};

export default App;

By default, the user will not be connected to any room, and hence, the <Join /> component will be rendered. Let’s implement the functionality to join a room inside the components/Join.jsx file.

To join a room, we can use the join() method on the hmsActions object returned by the useHMSActions() hook.

This join() method takes an object containing the userName, authToken and an optional settings object as the parameter.

We can get the userName from the local name state variable created using the useState() hook from React. However, to obtain the authToken, we will need to make a network request to our custom Next.js API route along with the role we want to join the room with.

We’re also tracking the role the user has selected using the local role state variable, similar to name.

You can find the API route inside the pages/api/token.js file. Here’s what it looks like:

// pages/api/token.js
import { v4 } from 'uuid';

export default async function getAuthToken(req, res) {
  try {
    const { role } = JSON.parse(req.body);

    const response = await fetch(`${process.env.TOKEN_ENDPOINT}api/token`, {
      method: 'POST',
      body: JSON.stringify({
        user_id: v4(),
        room_id: process.env.ROOM_ID,
        role,
      }),
    });

    const { token } = await response.json();
    res.status(200).json({ token });
  } catch (error) {
    console.log('error', error);
    res.status(500).json({ error });
  }
}

Essentially, this API route makes a POST request to our 100ms token endpoint URL, which is stored inside the environment variables, along with a unique user_id, role, and the room_id, which is also stored inside the environment variables.
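Note that the route builds its URL by appending `api/token` directly to `TOKEN_ENDPOINT`, so the stored value needs to end with a slash. The sketch below shows one way to make that robust. `buildTokenRequest` is a hypothetical helper, not part of the starter code or the 100ms SDK, and the URL and IDs are placeholders.

```javascript
// Hypothetical helper that mirrors the request made in pages/api/token.js.
function buildTokenRequest({ tokenEndpoint, roomId, role, userId }) {
  // Ensure exactly one slash between the base URL and the path.
  const base = tokenEndpoint.endsWith('/') ? tokenEndpoint : `${tokenEndpoint}/`;
  return {
    url: `${base}api/token`,
    options: {
      method: 'POST',
      body: JSON.stringify({ user_id: userId, room_id: roomId, role }),
    },
  };
}

const request = buildTokenRequest({
  tokenEndpoint: 'https://example.com', // placeholder, not a real endpoint
  roomId: 'room-123',
  role: 'listener',
  userId: 'user-1',
});
console.log(request.url);
```

The returned `url` and `options` could then be passed to `fetch(request.url, request.options)` inside the API route.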

If successful, our Next.js API route will return the authToken. Using this authToken, we can join the room. Since we don’t want the user to join with their mic turned on, we can set isAudioMuted to true inside the optional settings object.

// components/Join.jsx
import Image from 'next/image';
import { useState } from 'react';
import Avatar from 'boring-avatars';
import { useHMSActions } from '@100mslive/hms-video-react';
import NameInput from './Join/NameInput';
import RoleSelect from './Join/RoleSelect';
import JoinButton from './Join/JoinButton';

const Join = () => {
  const hmsActions = useHMSActions();
  const [name, setName] = useState('');
  const [role, setRole] = useState('listener');

  const joinRoom = async () => {
    try {
      const response = await fetch('/api/token', {
        method: 'POST',
        body: JSON.stringify({ role }),
      });
      const { token } = await response.json();

      hmsActions.join({
        userName: name || 'Anonymous',
        authToken: token,
        settings: {
          isAudioMuted: true,
        },
      });
    } catch (error) {
      console.error(error);
    }
  };

  return (
    <>
      <Image
        src="https://imgur.com/27iLD4R.png"
        alt="Login background"
        className="w-screen h-screen object-cover relative"
        layout="fill"
      />
      <div className="bg-gray-800 rounded-lg w-11/12 md:w-1/2 lg:w-1/3 absolute top-1/2 left-1/2 -translate-x-1/2 -translate-y-1/2 p-8 text-white shadow-lg space-y-4 flex flex-col items-center max-w-md">
        <Avatar name={name} variant="marble" size="72" />
        <NameInput name={name} setName={setName} />
        <RoleSelect role={role} setRole={setRole} />
        <JoinButton joinRoom={joinRoom} />
      </div>
    </>
  );
};

export default Join;

And with just a few lines of code, we have implemented the functionality to join a room and render the <Room /> component. Now, let’s move forward and render the peers connected to our room.
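The object passed to `join()` can also be assembled in a small pure function, which makes the fallback name and the muted-by-default setting easy to verify. This is a sketch under stated assumptions: `buildJoinConfig` is a hypothetical helper, and trimming the name is an addition that the component above does not do.

```javascript
// Hypothetical helper that builds the object passed to hmsActions.join().
function buildJoinConfig(name, token) {
  // Treat a blank or whitespace-only name the same as no name at all.
  const trimmed = (name || '').trim();
  return {
    userName: trimmed || 'Anonymous',
    authToken: token,
    settings: {
      isAudioMuted: true, // join with the mic turned off
    },
  };
}

console.log(buildJoinConfig('   ', 'placeholder-token').userName);
```

Inside `joinRoom`, the call would then become `hmsActions.join(buildJoinConfig(name, token))`.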

Rendering The Peers

Right now, if you view the <Room /> component inside the components/Room.jsx file, you can see that we have hardcoded the value of peers to an empty array. Let’s make this dynamic.

To do that, we can use the selectPeers selector combined with the useHMSStore() hook to get an array of all the peers connected to the room in the form of objects. Each of these peer objects will contain information such as their name and roleName that we can use to render their tiles accordingly.

Once we get an array of all the peers, we can use the filter() JavaScript array method to separate them into listenersAndHandraised and speakersAndModerators using the roleName property on each peer object. This will help us render the appropriate tile based on the user’s role.

If the role of the user is a listener or handraise, we will render the <ListenerTile /> component. Else, we will render the <SpeakerTile /> component. While rendering these tiles, pass the peer object as a prop in order to display the peer’s information inside the tiles.

// components/Room.jsx
import { selectPeers, useHMSStore } from '@100mslive/hms-video-react';
import RoomInfo from './Room/RoomInfo';
import Controls from './Room/Controls';
import ListenerTile from './User/ListenerTile';
import SpeakerTile from './User/SpeakerTile';

const Room = () => {
  const peers = useHMSStore(selectPeers);

  const speakersAndModerators = peers.filter(
    (peer) => peer.roleName === 'speaker' || peer.roleName === 'moderator'
  );
  const listenersAndHandraised = peers.filter(
    (peer) => peer.roleName === 'listener' || peer.roleName === 'handraise'
  );

  return (
    <div className="flex flex-col bg-main text-white min-h-screen p-6">
      <RoomInfo count={peers.length} />
      <div className="flex-1 py-8">
        <h5 className="uppercase text-sm text-gray-300 font-bold mb-8">
          Speakers - {speakersAndModerators.length}
        </h5>
        <div className="flex space-x-6 flex-wrap">
          {speakersAndModerators.map((speaker) => (
            <SpeakerTile key={speaker.id} peer={speaker} />
          ))}
        </div>
        <h5 className="uppercase text-sm text-gray-300 font-bold my-8">
          Listeners - {listenersAndHandraised.length}
        </h5>
        <div className="flex space-x-8 flex-wrap">
          {listenersAndHandraised.map((listener) => (
            <ListenerTile key={listener.id} peer={listener} />
          ))}
        </div>
      </div>
      <Controls />
    </div>
  );
};

export default Room;

The <RoomInfo /> component takes a count prop with the total number of peers connected to the room as its value. For the speakers and listeners headings, we can access the length property of the speakersAndModerators and listenersAndHandraised arrays, respectively, to get their counts.
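If you prefer a single pass over the peers instead of two filter() calls, the grouping can be pulled into a small helper. This is a sketch, not the starter code's approach: `groupPeers` and `STAGE_ROLES` are hypothetical names, and unlike the two filters above, any role that is not speaker or moderator ends up in the listeners group.

```javascript
// Roles that are rendered in the "Speakers" section.
const STAGE_ROLES = ['speaker', 'moderator'];

// Hypothetical helper: splits peers into the two groups in one pass.
function groupPeers(peers) {
  const groups = { speakersAndModerators: [], listenersAndHandraised: [] };
  for (const peer of peers) {
    if (STAGE_ROLES.includes(peer.roleName)) {
      groups.speakersAndModerators.push(peer);
    } else {
      groups.listenersAndHandraised.push(peer);
    }
  }
  return groups;
}

// Made-up peers for illustration.
const grouped = groupPeers([
  { id: '1', name: 'Ada', roleName: 'moderator' },
  { id: '2', name: 'Bo', roleName: 'listener' },
  { id: '3', name: 'Cy', roleName: 'handraise' },
  { id: '4', name: 'Di', roleName: 'speaker' },
]);
console.log(grouped.speakersAndModerators.length, grouped.listenersAndHandraised.length);
```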

Adding Functionalities To The Controls

Let’s go to the <Controls /> component inside components/Room/Controls.jsx. We will have three controls: one to toggle our mic on or off, one to toggle hand-raise, and one to leave the room. We'll cover the hand-raise functionality later in this blog post.

The <MicButton /> component, which toggles the mic, will only be displayed to speakers and moderators, whereas the <ExitButton /> component will be displayed to all roles.

We need to check our role and whether our mic is turned on to render the buttons accordingly. To do this, use the selectIsLocalAudioEnabled selector to get the status of our mic, and the selectLocalPeer selector to get our local peer object.

// components/Room/Controls.jsx
import {
  useHMSStore,
  selectIsLocalAudioEnabled,
  selectLocalPeer,
} from '@100mslive/hms-video-react';
import MicButton from './MicButton';
import ExitButton from './ExitButton';
import HandRaiseButton from './HandRaiseButton';

const Controls = () => {
  const isMicOn = useHMSStore(selectIsLocalAudioEnabled);
  const peer = useHMSStore(selectLocalPeer);

  const isListenerOrHandraised =
    peer.roleName === 'listener' || peer.roleName === 'handraise';

  return (
    <div className="flex justify-center space-x-4">
      {!isListenerOrHandraised && (
        <MicButton isMicOn={isMicOn} toggleMic={() => {}} />
      )}
      {isListenerOrHandraised && (
        <HandRaiseButton
          isHandRaised={peer.roleName === 'handraise'}
          toggleHandRaise={() => {}}
        />
      )}
      <ExitButton exitRoom={() => {}} />
    </div>
  );
};

export default Controls;
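The visibility rules in this component boil down to a small role-to-buttons mapping. The sketch below expresses them as a pure function so they can be checked without rendering anything. `getVisibleControls` is a hypothetical helper and is not part of the starter code.

```javascript
// Hypothetical helper: decides which control buttons a role should see.
function getVisibleControls(roleName) {
  const isListenerOrHandraised =
    roleName === 'listener' || roleName === 'handraise';
  return {
    mic: !isListenerOrHandraised, // speakers and moderators only
    handRaise: isListenerOrHandraised, // listeners and handraised peers only
    exit: true, // everyone can leave the room
  };
}

console.log(getVisibleControls('speaker'));
console.log(getVisibleControls('listener'));
```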

Now, to add the functionalities, start by calling the useHMSActions() hook and storing the returned object in hmsActions.

Call the setLocalAudioEnabled() method on the hmsActions object inside the toggleMic prop of the <MicButton /> component.

This method takes a boolean value: true for turning on the mic and false for turning it off. Since we want to toggle, we can pass the opposite of current status using the ! operator.

To exit the room, we can simply call the leave() method on the hmsActions object.

// components/Room/Controls.jsx
import {
  useHMSStore,
  useHMSActions,
  selectIsLocalAudioEnabled,
  selectLocalPeer,
} from '@100mslive/hms-video-react';
import MicButton from './MicButton';
import ExitButton from './ExitButton';
import HandRaiseButton from './HandRaiseButton';

const Controls = () => {
  const hmsActions = useHMSActions();
  const isMicOn = useHMSStore(selectIsLocalAudioEnabled);
  const peer = useHMSStore(selectLocalPeer);

  const isListenerOrHandraised =
    peer.roleName === 'listener' || peer.roleName === 'handraise';

  return (
    <div className="flex justify-center space-x-4">
      {!isListenerOrHandraised && (
        <MicButton
          isMicOn={isMicOn}
          toggleMic={() => hmsActions.setLocalAudioEnabled(!isMicOn)}
        />
      )}
      {isListenerOrHandraised && (
        <HandRaiseButton
          isHandRaised={peer.roleName === 'handraise'}
          toggleHandRaise={() => {}}
        />
      )}
      <ExitButton exitRoom={() => hmsActions.leave()} />
    </div>
  );
};

export default Controls;

Displaying Audio Level and Mic Status

When a user is speaking, we want to display a green ring just outside the user’s avatar to indicate the same. This will require us to know the audio level of the speaker, but how can we find that out? With 100ms React SDK, it is as simple as using the selectPeerAudioByID selector.

This selector function takes the peer’s ID as the parameter and returns an integer to represent the audio level. We can assign it to a variable and check if it is greater than 0 to check if the user is speaking.

Similarly, to check if a user’s mic is turned on or not, we can use the selectIsPeerAudioEnabled selector, which also takes the peer’s ID as the parameter and returns a boolean value to indicate the mic status.

Using these two selectors, we can render the UI accordingly by adding a ring using Tailwind CSS classes and displaying the appropriate icon. Go to the <SpeakerTile /> component inside components/User/SpeakerTile.jsx and make the following changes:

// components/User/SpeakerTile.jsx
import Avatar from 'boring-avatars';
import { FiMic, FiMicOff } from 'react-icons/fi';
import {
  useHMSStore,
  selectPeerAudioByID,
  selectIsPeerAudioEnabled,
} from '@100mslive/hms-video-react';
import PermissionsMenu from './PermissionsMenu';

const SpeakerTile = ({ peer }) => {
  const isSpeaking = useHMSStore(selectPeerAudioByID(peer.id)) > 0;
  const isMicOn = useHMSStore(selectIsPeerAudioEnabled(peer.id));

  return (
    <div className="relative bg-secondary px-12 py-6 rounded-lg border border-purple-500">
      <PermissionsMenu id={peer.id} audioTrack={peer.audioTrack} />
      <div className="flex flex-col gap-y-4 justify-center items-center">
        <div
          className={
            isSpeaking
              ? 'ring rounded-full transition ring-3 ring-green-600 p-0.5'
              : 'p-0.5'
          }
        >
          <Avatar name={peer.name} size="60" />
        </div>
        <p className="flex items-center gap-x-2">
          {peer.name}
          {isMicOn ? (
            <FiMic className="h-3 w-3" />
          ) : (
            <FiMicOff className="h-3 w-3" />
          )}
        </p>
      </div>
    </div>
  );
};

export default SpeakerTile;
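The class-name decision in this tile can be isolated in a pure function, which also makes it easy to experiment with a threshold above 0 if the ring flickers on background noise. This is a sketch under assumptions: `avatarRingClass` and its `threshold` parameter are hypothetical and not part of the SDK or the starter code. The class strings are copied from the component above.

```javascript
// Tailwind classes copied from the SpeakerTile component.
const SPEAKING_RING = 'ring rounded-full transition ring-3 ring-green-600 p-0.5';
const IDLE_RING = 'p-0.5';

// Hypothetical helper: picks the avatar wrapper classes from an audio level.
// A level of undefined (no audio data yet) is treated as not speaking.
function avatarRingClass(audioLevel, threshold = 0) {
  return audioLevel > threshold ? SPEAKING_RING : IDLE_RING;
}

console.log(avatarRingClass(42));
console.log(avatarRingClass(0));
```

With the default threshold of 0, this behaves the same as the `isSpeaking` check in the component.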

The Permissions Menu

Time to add some functionality to the <PermissionsMenu /> component inside the components/User/PermissionsMenu.jsx file. We want to display this menu only if we have the moderator role.

To get our localPeer object, we can use the selectLocalPeer selector function. This will return an object with the roleName property that we can check to get our role.

Alternatively, you can also choose to use the selectLocalPeerRole selector and access the name property of the returned object.

To check if we are a moderator, use the === equality operator to check if our roleName equates to moderator.

Accordingly, we can either render this component, or null if we’re not a moderator.

The permissions menu has three options: Mute, Make Listener, and Make Speaker. To implement them, call the useHMSActions() hook to get access to all the required methods.

For muting a peer, call the setRemoteTrackEnabled() method on hmsActions with the peer’s audio track (that we’re getting from the props) and false as parameters.

To change the role of a peer, call the changeRole() method on hmsActions with the peer’s ID, the new role, and a force boolean value that changes their role without asking them or giving them a chance to accept or reject.

// components/User/PermissionsMenu.jsx
import { useState } from 'react';
import { AiOutlineMenu } from 'react-icons/ai';
import {
  useHMSStore,
  useHMSActions,
  selectLocalPeer,
} from '@100mslive/hms-video-react';

const PermissionsMenu = ({ audioTrack, id }) => {
  const hmsActions = useHMSActions();

  const mutePeer = () => {
    hmsActions.setRemoteTrackEnabled(audioTrack, false);
  };

  const changeRole = (role) => {
    hmsActions.changeRole(id, role, true);
  };

  const localPeer = useHMSStore(selectLocalPeer);
  const [showMenu, setShowMenu] = useState(false);

  const btnClass = 'w-full text-sm font-semibold hover:text-purple-800 p-1.5';
  const isModerator = localPeer.roleName === 'moderator';

  if (isModerator) {
    return (
      <div className="absolute right-1 top-1 z-50">
        <AiOutlineMenu
          className="ml-auto"
          onClick={() => setShowMenu(!showMenu)}
        />
        {showMenu && (
          <div className="mt-2 bg-white text-black py-2 rounded-md">
            <button className={btnClass} onClick={() => mutePeer()}>
              Mute
            </button>
            <button className={btnClass} onClick={() => changeRole('listener')}>
              Make Listener
            </button>
            <button className={btnClass} onClick={() => changeRole('speaker')}>
              Make Speaker
            </button>
          </div>
        )}
      </div>
    );
  } else {
    return null;
  }
};

export default PermissionsMenu;
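One possible refinement is to hide the option that matches the target peer's current role, so a moderator is not offered Make Speaker for someone who is already a speaker. The sketch below is an assumption-laden illustration: `getMenuOptions` is a hypothetical helper, and the component above would need the target peer's roleName passed in as an extra prop to use it.

```javascript
// Hypothetical helper: lists the menu labels a user should see for a target peer.
function getMenuOptions(localRoleName, targetRoleName) {
  // Only moderators get a permissions menu at all.
  if (localRoleName !== 'moderator') return [];

  const options = ['Mute'];
  if (targetRoleName !== 'listener') options.push('Make Listener');
  if (targetRoleName !== 'speaker') options.push('Make Speaker');
  return options;
}

console.log(getMenuOptions('moderator', 'speaker'));
console.log(getMenuOptions('listener', 'speaker'));
```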

Adding Hand-Raise Functionality

Finally, let’s add the last bit of functionality to our application: hand-raise. As a listener, you might also want a chance to become a speaker at times. To notify the moderator, we can build a simple hand-raise button that will display a badge next to your avatar to show that you're interested in speaking.

Therefore, start by building the functionality to change our role from listener to handraise on clicking the <HandRaiseButton /> component.

To do this, go back to the <Controls /> component inside components/Room/Controls.jsx.

Over here, you’ll notice the <HandRaiseButton /> component with two props: an isHandRaised boolean prop to check whether your hand is currently raised and a toggleHandRaise function to toggle it. Also, we'll display this button only if we have the listener or handraise role.

For the isHandRaised prop, we simply need to get our role by accessing the roleName property of our local peer and check if it equates to the handraise role.

For the toggle functionality, we can use the changeRole() method available on the hmsActions object like we did for the <PermissionsMenu /> component.

This changeRole() method takes our local peer's ID, the new role to set, and a force boolean parameter. For the new role, if we are currently a listener, we need to pass handraise as the parameter. If we already have the role of handraise, we need to set it back to listener.

Here’s what your code should look like:

// components/Room/Controls.jsx
import {
  useHMSStore,
  useHMSActions,
  selectIsLocalAudioEnabled,
  selectLocalPeer,
} from '@100mslive/hms-video-react';
import MicButton from './MicButton';
import ExitButton from './ExitButton';
import HandRaiseButton from './HandRaiseButton';

const Controls = () => {
  const hmsActions = useHMSActions();
  const isMicOn = useHMSStore(selectIsLocalAudioEnabled);
  const peer = useHMSStore(selectLocalPeer);

  const isListenerOrHandraised =
    peer.roleName === 'listener' || peer.roleName === 'handraise';

  return (
    <div className="flex justify-center space-x-4">
      {!isListenerOrHandraised && (
        <MicButton
          isMicOn={isMicOn}
          toggleMic={() => hmsActions.setLocalAudioEnabled(!isMicOn)}
        />
      )}
      {isListenerOrHandraised && (
        <HandRaiseButton
          isHandRaised={peer.roleName === 'handraise'}
          // Switch between the listener and handraise roles on each click
          toggleHandRaise={() =>
            hmsActions.changeRole(
              peer.id,
              peer.roleName === 'listener' ? 'handraise' : 'listener',
              true
            )
          }
        />
      )}
      <ExitButton exitRoom={() => hmsActions.leave()} />
    </div>
  );
};

export default Controls;

The starter code already contains the code to display a hand-raise badge in the <ListenerTile /> component. Inside this component, we just need to check if the peer's role is set to handraise and then conditionally render the <HandRaiseBadge /> accordingly.
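The role toggle and the badge check can both be written as tiny pure functions, sketched below. `nextHandRaiseRole` and `shouldShowHandRaiseBadge` are hypothetical names and are not taken from the starter code. Unlike the inline ternary in the component, this version leaves speakers and moderators unchanged, although the button is never shown to them anyway.

```javascript
// Hypothetical helper: returns the role to switch to when the button is clicked.
function nextHandRaiseRole(roleName) {
  if (roleName === 'listener') return 'handraise';
  if (roleName === 'handraise') return 'listener';
  return roleName; // speakers and moderators are left unchanged
}

// Hypothetical helper: decides whether a tile should show the hand-raise badge.
function shouldShowHandRaiseBadge(peer) {
  return peer.roleName === 'handraise';
}

console.log(nextHandRaiseRole('listener'));
console.log(shouldShowHandRaiseBadge({ roleName: 'handraise' }));
```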

And That’s a Wrap!

Building a real-time audio application with the 100ms SDK is as simple as that. I hope you enjoyed building this app, and make sure to drop by the 100ms Discord Server if you have any questions.

We can’t wait to see all the marvelous projects you build with 100ms. Till then, happy coding!

Check 100ms Now -> https://www.100ms.live/blog/build-discord-stage-channel-clone-hms


Arpit Mishra | Sciencx (2021-11-16T07:26:30+00:00) Build a Discord stage channel clone with 100ms and Next.js. Retrieved from https://www.scien.cx/2021/11/16/build-a-discord-stage-channel-clone-with-100ms-and-next-js/
