This content originally appeared on DEV Community and was authored by Shai Almog

Yesterday we got the first PR through and now I'm on the second PR.

We almost have an open source project. Technically we already have a repository with a few lines of code, but it still isn't exactly a "project", at least not in the sense that it does something useful. It does compile, run unit tests and even reach 80% code coverage. That last one was painful. I'm not a fan of arbitrary metrics as a measure of code quality, and the 80% code coverage requirement is a good example of this.

Case in point, this code. Currently, the source code looks like this:

MonitoredSession monitoredSession = connectSession.create(System.getProperty("java.home"), "-Dhello=true", HelloWorld.class.getName(), "*");

But originally it looked like:

MonitoredSession monitoredSession = connectSession.create(null, null, HelloWorld.class.getName(), null);

Changing this increased code coverage noticeably because the code further down has special-case conditions for variables that aren't null. For good test coverage, I would also want to test the null situation, but that wouldn't affect the coverage number. Now obviously, you don't write tests just to satisfy a code coverage tool... But if we rely on metrics like that as a benchmark of code quality and reliability, we should probably stop.
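To make that concrete, here is a minimal sketch. `LaunchCommand` and its `build` method are hypothetical stand-ins, not the project's actual code; they only mimic the idea of optional arguments that trigger extra code when they aren't null.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for code that treats null arguments as "use the default".
class LaunchCommand {
    static List<String> build(String javaHome, String jvmArg, String mainClass) {
        List<String> command = new ArrayList<>();
        String executable = "java";
        if (javaHome != null) {
            // Only runs when a Java home is supplied
            executable = javaHome + "/bin/java";
        }
        command.add(executable);
        if (jvmArg != null) {
            // Only runs when a JVM argument is supplied
            command.add(jvmArg);
        }
        command.add(mainClass);
        return command;
    }

    public static void main(String[] args) {
        // This single call executes every line of build()
        System.out.println(build("/opt/jdk", "-Dhello=true", "HelloWorld"));
        // This call checks the default path but adds no newly covered lines
        System.out.println(build(null, null, "HelloWorld"));
    }
}
```

The first call alone is enough to mark every line as covered, even though the null path, which is arguably the more interesting one to verify, is never exercised by it.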

Another problem I have with this approach is the amount of work required to get that last 10-20% of code coverage to pass the 80% requirement. That's insanely hard. If you work on a project with 100% code coverage requirement, you have my condolences. I think an arbitrary percentage of code for coverage is a problematic metric. I like the statement coverage stats better, but they still suffer from similar problems.

On the plus side, I love the code coverage report; I feel it gives some sense of where the project stands and helps make sense of it all. In that sense, Sonar Cloud is pretty great.

Keen observers of the code will notice I didn't add any coverage to the common code. It has very little business logic at the moment and in fact I removed even that piece of code in the upcoming PR.

Writing Tests

I spent a lot of time writing tests and mocking, which is good preparation for building a tool that will generate mocked unit tests. As a result, I feel the secondary goal of generating tests based on a code coverage report is more interesting than ever. I can't wait to dogfood the code to see if I can bring up the statistics easily.

One thing I find very painful in tests is the error log. For the life of me, I do not know why the Maven test target shows, by default, a stack trace that points at the line in the test instead of the full stack trace. IntelliJ/IDEA improves on this, but it's still not ideal, especially when combing through CI logs.

Right now, all of my tests are unit tests because I don't have full integration in place yet. But this will change soon. I'm still not sure what form I will pick for integration tests since the initial version of the application won't have a web front-end. I've yet to test the CLI.

I felt a bit conflicted when I started writing this code. As I mentioned before, integrating the Java debug API into the Spring Boot backend was a pretty big shift in direction for me. But it makes sense and solves a lot of problems. The main point of contention for me was supporting other languages/platforms. But I hope I'll be able to integrate with the respective platforms' native debug APIs as we add them. This will make the debugging process for other platforms similar to the Java debug sessions. I'll try to write the code in a modular way so we can inject support for additional platforms.

I'm pretty sure program execution for Python, Node, etc. will allow capabilities similar to JDI on Java SE. So if the code is modular enough, I could just add packages to match every supported platform. At least, that's the plan right now. If that doesn't work, we can always refactor or just add an agent option for those platforms. Our requirements are relatively simple: we don't need expression evaluation and the like, only the current stack frame data and simple step over results.

When I thought about using a debugger session, I thought about setting breakpoints for every method. This is obviously problematic since there are limits on the number of breakpoints we can set. Instead, I'm using method entry callbacks to track the application. Within every method, I use step over to review the elements within. This isn't implemented yet mostly because it's pretty darn hard to test this from unit tests.
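As a rough illustration of that idea, the sketch below registers a method entry request through JDI and issues a step over when an entry event arrives. The JDI types and calls are from the standard `com.sun.jdi` packages, but the class name `MethodEntryTracker`, its methods and the way the events are handled are assumptions made for illustration; this is not the project's implementation, which doesn't exist yet at this point.

```java
import com.sun.jdi.ThreadReference;
import com.sun.jdi.VirtualMachine;
import com.sun.jdi.event.Event;
import com.sun.jdi.event.EventSet;
import com.sun.jdi.event.MethodEntryEvent;
import com.sun.jdi.event.StepEvent;
import com.sun.jdi.event.VMDeathEvent;
import com.sun.jdi.event.VMDisconnectEvent;
import com.sun.jdi.request.EventRequest;
import com.sun.jdi.request.EventRequestManager;
import com.sun.jdi.request.MethodEntryRequest;
import com.sun.jdi.request.StepRequest;
import java.util.List;

// Hypothetical helper; assumes a VirtualMachine that is already connected.
class MethodEntryTracker {
    // One request covers every method of the matching classes, no breakpoints needed.
    static MethodEntryRequest trackMethodEntries(VirtualMachine vm, String classPattern) {
        EventRequestManager manager = vm.eventRequestManager();
        MethodEntryRequest request = manager.createMethodEntryRequest();
        request.addClassFilter(classPattern); // e.g. "com.example.*"
        request.setSuspendPolicy(EventRequest.SUSPEND_EVENT_THREAD);
        request.enable();
        return request;
    }

    // Asks the given thread to step over a single source line.
    static StepRequest stepOver(VirtualMachine vm, ThreadReference thread) {
        EventRequestManager manager = vm.eventRequestManager();
        // JDI permits only one step request per thread, so drop any older one first
        for (StepRequest old : List.copyOf(manager.stepRequests())) {
            if (old.thread().equals(thread)) {
                manager.deleteEventRequest(old);
            }
        }
        StepRequest step = manager.createStepRequest(
                thread, StepRequest.STEP_LINE, StepRequest.STEP_OVER);
        step.addCountFilter(1); // report the next step only
        step.setSuspendPolicy(EventRequest.SUSPEND_EVENT_THREAD);
        step.enable();
        return step;
    }

    // Minimal event loop: log method entries and the line reached after each step.
    static void eventLoop(VirtualMachine vm) throws InterruptedException {
        while (true) {
            EventSet events = vm.eventQueue().remove();
            for (Event event : events) {
                if (event instanceof MethodEntryEvent) {
                    MethodEntryEvent entry = (MethodEntryEvent) event;
                    System.out.println("Entered " + entry.method());
                    stepOver(vm, entry.thread());
                } else if (event instanceof StepEvent) {
                    // A real tracker would read the stack frame here and decide
                    // whether to request another step
                    System.out.println("Stepped to " + ((StepEvent) event).location());
                } else if (event instanceof VMDeathEvent || event instanceof VMDisconnectEvent) {
                    return;
                }
            }
            events.resume();
        }
    }
}
```

Code like this only does something meaningful against a live target VM, which fits the point above about it being hard to cover from unit tests.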

That's why I paused the work on the debugger session and moved down the stack to the CLI. I want to use the actual product to debug this functionality.

Integrating CLI through Web Service

I created a CLI project and defined the dependencies, but until now I hadn't really written any CLI code. The plan is that the CLI will communicate with the backend code via REST. Initially, I thought of using Swagger (OpenAPI), which is pretty great. If you're unfamiliar with it, it essentially generates the documentation and even the application code for all the networking you need, just by inspecting your API. Pretty sweet!

However, it generates a lot of boilerplate that's sometimes verbose and less intuitive. It also blocks some code reuse. If I had an extensive project or a public facing API, I might have used it. But for something intended for internal use, it seems like overkill. So I ended up using Gson, which I used in the past, and the new Java 11 HttpClient API. The API is pretty nice and pretty easy to work with.
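For readers who haven't used the Java 11 HttpClient, a call from the CLI to the backend might look roughly like the sketch below. The class name `BackendClient`, the base URL and the `run` path are placeholders, not the project's actual endpoints. To keep the sketch free of third-party dependencies, the JSON body is passed in as a plain string; in the setup described above it would presumably come from Gson's `toJson`.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical CLI-side helper for talking to the backend over REST.
class BackendClient {
    // Placeholder address; the real backend location is an assumption
    private static final String BASE_URL = "http://localhost:8080/";

    private final HttpClient client = HttpClient.newHttpClient();

    // Builds a JSON POST request without sending it.
    static HttpRequest jsonPost(String path, String jsonBody) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + path))
                .timeout(Duration.ofSeconds(10))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }

    // Sends the request synchronously and returns the response body as text.
    String send(HttpRequest request) throws IOException, InterruptedException {
        HttpResponse<String> response =
                client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }
}
```

A caller would build a request with something like `BackendClient.jsonPost("run", json)` and pass it to `send`, then hand the returned string to Gson to turn it back into an object.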

Over Eager PicoCLI

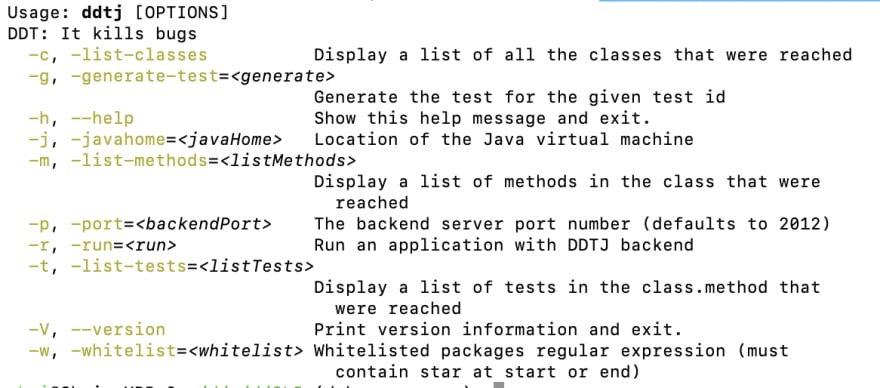

I really like PicoCLI. If you look at this source file, you can easily see why. It makes coding a command line app trivial. You get gorgeous CLI APIs with highlighting, completion, smart grouping and so much more. It even has an annotation processor, which makes it easy to compile it with GraalVM.

Unfortunately, I ran into a use case where PicoCLI was probably a bit too "clever".

My initial design was to provide a command like this:

java -jar ddtj.jar -run [-javahome:<path-to-java-home>] [-whitelist:regex-whitelist classes] [-arg=<app argument>...] mainClass

Notice that -arg would essentially allow us to pass any argument we want to the target JVM. E.g. if we want to pass a system property to the JVM we can do something like:

-arg "-Denv=x"

The problem is that PicoCLI thinks that -D is another argument and tries to parse it. I spent a lot of time trying to find a way to do this. I don't think it's possible with PicoCLI, but I might have missed something. The problem is, I don't want to replicate all the JVM arguments with PicoCLI arguments, e.g. classpath parameters, etc. Unfortunately, this is the only option I can see right now.

I'll have to re-think this approach after I get the MVP off the ground, but for now I changed the CLI specification to this:

java -jar ddtj.jar -run mainClass [-javahome:<path-to-java-home>] [-whitelist:regex-whitelist classes] [-classpath...] [-jar...]

In fact, here's what the help command passed to PicoCLI generated for that code:

Completely Redid the Common

When I implemented the common project, I did so based on guesses, which were totally wrong. I had to move every source file from my original implementation into the backend project. Then I had to reimplement classes for the common code.

The reason for this is the web interface. It made me realize I stuck the data model in common instead of the data that needs to move to the client (which is far smaller).

Implementing the web interface was trivial. I just went over the commands I listed in the documentation and added a web service method for each command. Then I added a DTO (Data Transfer Object) for each one. The DTOs are all in the common project, which is how it should be.
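The difference between the backend data model and what actually travels to the client can be sketched like this. All of the names here (`InvocationRecord`, `MethodSummaryDTO`, `DtoMapper`) are invented for illustration and don't come from the project; the point is only that the DTO carries a small summary while the full model stays in the backend.

```java
import java.util.List;

// Backend-only model (hypothetical): everything captured for one invocation.
class InvocationRecord {
    final String methodName;
    final Object[] arguments;
    final Object result;

    InvocationRecord(String methodName, Object[] arguments, Object result) {
        this.methodName = methodName;
        this.arguments = arguments;
        this.result = result;
    }
}

// DTO for the common project (hypothetical): only what the client needs to show.
class MethodSummaryDTO {
    private String methodName;
    private int invocationCount;

    // No-arg constructor, which many JSON libraries expect
    MethodSummaryDTO() {
    }

    MethodSummaryDTO(String methodName, int invocationCount) {
        this.methodName = methodName;
        this.invocationCount = invocationCount;
    }

    String getMethodName() {
        return methodName;
    }

    int getInvocationCount() {
        return invocationCount;
    }
}

// Lives in the backend and converts the heavy model into the light DTO.
class DtoMapper {
    static MethodSummaryDTO summarize(String methodName, List<InvocationRecord> records) {
        int count = 0;
        for (InvocationRecord record : records) {
            if (record.methodName.equals(methodName)) {
                count++;
            }
        }
        return new MethodSummaryDTO(methodName, count);
    }
}
```

With this split, only `MethodSummaryDTO` needs to be visible to the CLI, so the common project stays small.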

Initially, I thought I'd just write all the logic within the web service REST class, but I eventually separated the logic into a generic service class. I'd like to add a web UI in the future, so it makes sense to have common logic in a service class.

Handling State

State is a tough call for this type of application. I don't want a database and everything is "in memory". But even then, do we use session management?

What if we have multiple apps running on the backend?

So currently I just punted on this problem for the MVP. If you run more than one app, it will fail. I just store data in one local field on the session object. I don't need static variables here since Spring beans default to singleton scope and there's no clustering involved.
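In plain Java, that approach looks roughly like the sketch below. `SessionService` and its methods are hypothetical names, and the Spring annotation that would make it a bean is left out so the sketch stays self-contained; with singleton scope there would be exactly one instance, so its field acts as application-wide state. Failing with an exception on a second app is an assumption about what "it will fail" could look like.

```java
// Hypothetical service holding the state of the single supported session.
class SessionService {
    // One instance per application, so this field is effectively global state
    private String activeMainClass;

    // Starts tracking an app; a second concurrent app is rejected.
    synchronized void start(String mainClass) {
        if (activeMainClass != null) {
            throw new IllegalStateException(
                    "A session for " + activeMainClass + " is already running");
        }
        activeMainClass = mainClass;
    }

    synchronized String active() {
        return activeMainClass;
    }

    // Drops the stored state so the memory can be reclaimed.
    synchronized void flush() {
        activeMainClass = null;
    }
}
```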

After the MVP, we should look into running multiple applications. This might require changing the CLI to determine the app we're working on right now. I think we'll still keep state in a field, but we should have a way to flush the state to reduce RAM usage.

Snyk Integration

Yesterday I had some issues with Snyk. If you're unfamiliar with it, it's a tool that seamlessly scans your code for vulnerabilities and helps you fix them. Pretty cool. The integration was super easy to do, and I was pretty pleased with it. Then I tried to add a badge to the project...

Apparently, Snyk badges don't work well with a mono-repo. They expect the pom file to be at the top level of the project. So I gave up on the badge for that. The scanning worked, so it's weird to me that the badge would fail.

I'll try to monitor that one and see if there's a solution for that. I discussed this with their support, which was reasonably responsive but didn't seem to have a decent answer for that.

It's a shame. I like the idea of a badge that attests to the security status of the project.

Tomorrow

This has been a busy day, but it felt unproductive in the end because of all the time I wasted on the CLI and on trying to get things like the Snyk badge working. This is something to be wary of. Always look for the shortcut when doing an MVP, and if you can't get something working, just cut it out for now. We can always get back to that later.

Tomorrow I hope to get the current PR up to function coverage standards and start wiring in the command line API so I can start debugging the debugger process in real-world conditions. There are still some commented out code fragments and some smells I need to improve.

Shai Almog | Sciencx (2021-12-22T12:18:49+00:00) Code Coverage, Java Debugger API and Full Integration in Building DDJT – Day 3. Retrieved from https://www.scien.cx/2021/12/22/code-coverage-java-debugger-api-and-full-integration-in-building-ddjt-day-3/
