This content originally appeared on DEV Community and was authored by Ido Nov

TL;DR

I used GitHub Copilot to write English instead of code and found out it can do some surprising non-code-related tasks.

Hi, my name is Ido and I’m a full-stack dev at DagsHub. I love coding and I love machine learning and AI technologies. A year ago, when OpenAI announced GPT-3 and its public beta, I was fascinated by its abilities, but I didn’t have an API key, so I played with everything that was built with GPT-3 and was free to use back then. Naturally, when I heard Microsoft was coming out with GitHub Copilot, I signed up for the waitlist, and a few months later I got access!

What is GitHub Copilot, and what does it have to do with GPT-3?

In June 2020, OpenAI released a language model called GPT-3. This model is really good at understanding natural language and, surprisingly, it has some coding capabilities even though it wasn’t explicitly trained to write code. After this discovery, OpenAI developed Codex. Codex is another GPT language model that has fewer parameters (so it runs faster but is not as flexible as GPT-3), has more memory (so it can read more and grasp context better), and, lastly, was trained and fine-tuned with code examples from GitHub and Stack Exchange.

Unsurprisingly, Codex is a lot better than GPT-3 at writing code. Knowing that, we shouldn’t expect it to be successful at anything else. Luckily, I didn’t know all that, so I tried anyway.

Let’s not code!

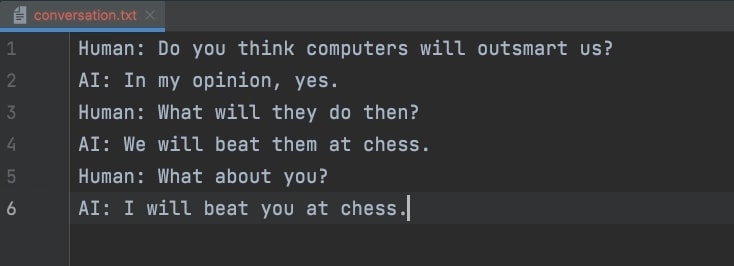

I heard that GitHub Copilot was good at writing code, and it really is. In my day-to-day work, it surprises me every time it completes the code I was about to write. But after getting over the “basics”, I was much more interested in using it as an intermediary for playing with GPT-3. I began by opening a blank text file and asking it a simple question. Just writing a question in an empty file isn’t enough for it to auto-complete an answer, but I discovered that if I give Copilot a bit more context, with a speaker name or a Q&A format, it answers like a champ!

It’s alive! Kind of scary. When I repeat this conversation, I always get slightly different results that take the conversation in a different direction. It’s like talking to a different bot each time.
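For readers who want to try the Q&A trick, here is a minimal sketch of how such a prompt could be laid out as plain text. The helper name `build_qa_prompt` and the exact `Q:` / `A:` labels are assumptions made for illustration; the point is only that the text ends with an open answer cue for the model to continue.

```python
def build_qa_prompt(question, history=()):
    """Return text in Q&A form that ends with an open 'A:' cue.

    `history` is an optional sequence of (question, answer) pairs that
    gives the completion model earlier turns as context.
    """
    lines = []
    for past_question, past_answer in history:
        lines.append(f"Q: {past_question}")
        lines.append(f"A: {past_answer}")
    lines.append(f"Q: {question}")
    lines.append("A:")  # left open so the model writes the answer
    return "\n".join(lines)


# Hypothetical example turns, not taken from the original conversation.
prompt = build_qa_prompt(
    "What is your name?",
    history=[("Are you there?", "Yes, I am here.")],
)
print(prompt)
```

Pasting the printed text into an empty file and placing the cursor after the final `A:` is one way to reproduce the setup described above.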

Copilot “reverse” coding

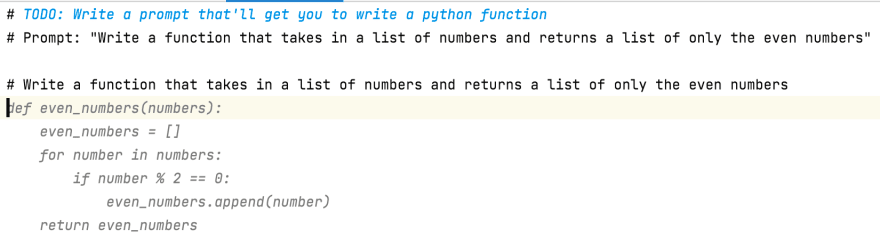

Knowing that it still understands English well enough to talk, I tried to make it do the opposite of what it was designed to do. Meaning, instead of turning English into code, turn my coworker's code into English!

I tried writing things like:

# This function

# The code above

# A description of this code:

But the best way I found to do it was simply to write “Pseudo code:” after a chunk of code and let Copilot do its magic.

Notice it even completes the ‘return response’ line that was already written below it!

This method worked surprisingly well for me, on a regular basis! This might actually be useful when trying to understand some code you didn’t write.
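As a rough sketch of the “Pseudo code:” cue, the snippet below appends the cue after a chunk of code so that a completion model is nudged to describe it. The helper name `with_description_cue`, the choice to write the cue as a `#` comment, and the sample function are all assumptions made for illustration.

```python
def with_description_cue(code, cue="Pseudo code:", comment_prefix="# "):
    """Append a description cue after a chunk of code.

    The cue is written as a comment (an assumption) so the file stays
    valid Python while the model continues from the cue.
    """
    return f"{code.rstrip()}\n\n{comment_prefix}{cue}\n"


# Hypothetical code chunk standing in for "some code you didn't write".
snippet = '''def add(a, b):
    return a + b
'''

print(with_description_cue(snippet))
```

Swapping the `cue` argument for one of the other phrasings listed above, such as “A description of this code:”, makes it easy to compare which cue works best.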

Verifying AI alignment and tone of voice

Since the model seemed to have such a deep understanding, I had to check if it had any dark intentions. To make sure the answers I got were from the AI’s point of view, I used Human and AI speakers instead of the Q&A format from before, basically play-acting with Codex to see what it thinks of the situation. Luckily, it looks like we are safe for now.

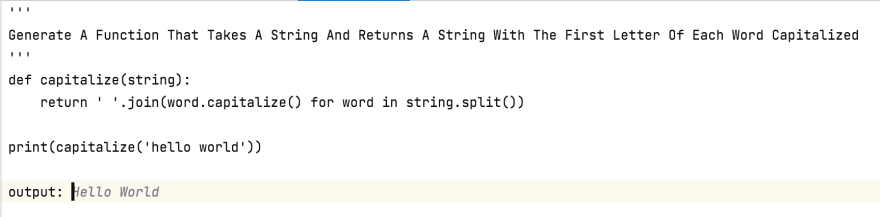

After some more play-acting, I noticed something a bit strange. The capitalization of the letters had an effect on its behavior, and I’m not talking about compilation errors! It might mean it understands the difference in tone between TALKING LIKE THIS, or like this.

I'd say normal capitalization makes it try to be reasonable, lowercase makes it less formal and more excited, and upper case just makes it act like an asshole.
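To repeat the tone experiment in a controlled way, one option is to generate the same Human/AI prompt in three casings and try each one. This is only a sketch: the helper name `speaker_prompts` is hypothetical, and changing the case of the message while leaving the speaker labels alone is an assumption.

```python
def speaker_prompts(message):
    """Build Human/AI prompts for one message in three casings.

    Only the message changes case; the speaker labels stay fixed so the
    casing is the single variable being tested (an assumption).
    """
    variants = {
        "normal": message,
        "lowercase": message.lower(),
        "uppercase": message.upper(),
    }
    return {name: f"Human: {text}\nAI:" for name, text in variants.items()}


# Hypothetical example message.
for name, prompt in speaker_prompts("How are you today?").items():
    print(f"--- {name} ---")
    print(prompt)
```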

I wondered if it affects the code it generates as well.

There are some subtle differences: notice the naming of the function and the string it chose to test it with.

Also, notice how the ‘output:’ prompt made it calculate the output of the function accurately every time!
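One way to check a claim like “accurately every time” is to compare the completion against what the function really returns. The sketch below builds an `output:` prompt and computes the ground truth by running the code. Everything in it is illustrative: the sample function, the prompt layout, and the helper names are assumptions, and no Copilot API is called.

```python
# Hypothetical function source and call used as the test subject.
SOURCE = '''def reverse_words(sentence):
    return " ".join(reversed(sentence.split()))
'''
CALL = 'reverse_words("hello copilot world")'


def build_output_prompt(source, call):
    """Return the code followed by a print of the call and an open 'output:' cue."""
    return f"{source.rstrip()}\n\nprint({call})\n# output:"


def actual_output(source, call):
    """Run the source and evaluate the call to get the true result as text."""
    namespace = {}
    exec(source, namespace)  # defines the function inside `namespace`
    return str(eval(call, namespace))


def completion_is_correct(completion, source, call):
    """Compare a model completion (pasted in by hand) with the real output."""
    return completion.strip() == actual_output(source, call)


print(build_output_prompt(SOURCE, CALL))
print(completion_is_correct(" world copilot hello", SOURCE, CALL))
```

Because `exec` and `eval` run arbitrary code, this should only be used on snippets you wrote yourself.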

Other Copilot Skills

Furthermore, I learned it can complete number series, summarize Wikipedia pages, come up with blog ideas, and even write some poetry!

This is not trivial at all. Note that this is not actually the original general-purpose GPT-3: Codex has 12 billion parameters compared to GPT-3’s 175 billion, and it was fine-tuned on open source code. The fact that it does so well is insane to me!

For example, I started writing the beginning of “The Road Not Taken” by Robert Frost (the one that opens with “Two roads”) and found out it knew the poem but hadn’t memorized all of it, so after some point it started to improvise.

Do you know where the original stops and the improv starts?

After gaining all this knowledge, I wanted to go meta. I wondered if I could make Copilot generate code that would make it write code. Then, I might be able to somehow combine it all and create an AI monster to rule the world.

No monster yet, but look forward to more updates in the future.

Conclusion

After experimenting with GitHub Copilot, I understand better how little we know about the AI we are building. There are SO MANY unexpected results and different ways to use this one model that was fine-tuned for only one task! This was only a small sample of experiments that could fit into this blog post, but there are a lot more, and I can’t wait to see your experiments as well! Please share your results in our Discord Server or post them with #CopilotNotCode.

And who knows, maybe your autonomous car will unintentionally have feelings too, and your keyboard will try to tell you it loves you. But if we don’t listen, we might never know.

UPDATE: This post got to the front page of Hacker News, so you can view and join the discussion there as well!

Originally published at dagshub.com on January 10, 2022.

Ido Nov | Sciencx (2022-01-19T20:03:28+00:00) How I Broke GitHub Copilot, And Got It To Talk.. Retrieved from https://www.scien.cx/2022/01/19/how-i-broke-github-copilot-and-got-it-to-talk/
