This content originally appeared on DEV Community and was authored by Florian Flock

Introduction

I recently evaluated some of the typical Python web frameworks for a small REST API project. Previously I worked with Flask, but something caught me to try another framework this time. To put it very briefly - this time, I decided to use FastAPI, a modern and high-speed framework for use with REST APIs. FastAPI also has other advantages. For example, the documentation of the endpoints is already automatically generated in OpenAPI format and output as a Swagger interface. Smart!

Many voices and experts also see FastAPI as an emerging framework. So it's time to give it a try.

Frameworks to know if you code in Python 👇

1: Flask

2: Django

3: FastApi (Getting Popular!)

Use these to build your APIs! 💯

Which python framework do you like the most?06:12 AM - 22 Jan 2022

Since many of my provided services are already running in the Google Cloud, it was evident for this project to fall back on the hosting services there. New framework and new infrastructure would feel overwhelming.

My project, in this case, processes huge loads of financial data, and accordingly, I wanted to make sure that the logging of activities is clean and traceable. Sometimes I need to reproduce step by step the processors to understand where adaptions to the business logic are required.

Google Cloud Logging

Google Cloud offers a product called Google Cloud Logging for logging and tracing. All log entries are collected centrally and retrieved using a custom query language.

With most hosting options in the Google Cloud, the log entries are collected and processed via Fluentd. Google provides documentation for a JSON object of the optimal log entry. One of our goals would be to meet the requirements of this object.

In addition, clients are provided by google for the most common programming languages that convert log entries into the format shown above.

pip install google-cloud-logging

In the case of Python, the Django and Flask frameworks are natively supported by the client. For both frameworks, the client converts a lot of data into the proper format, especially the severity level and the trace of the request. Django and Flask benefit from built-in framework recognition by the google client. Have a look on the specific code piece.

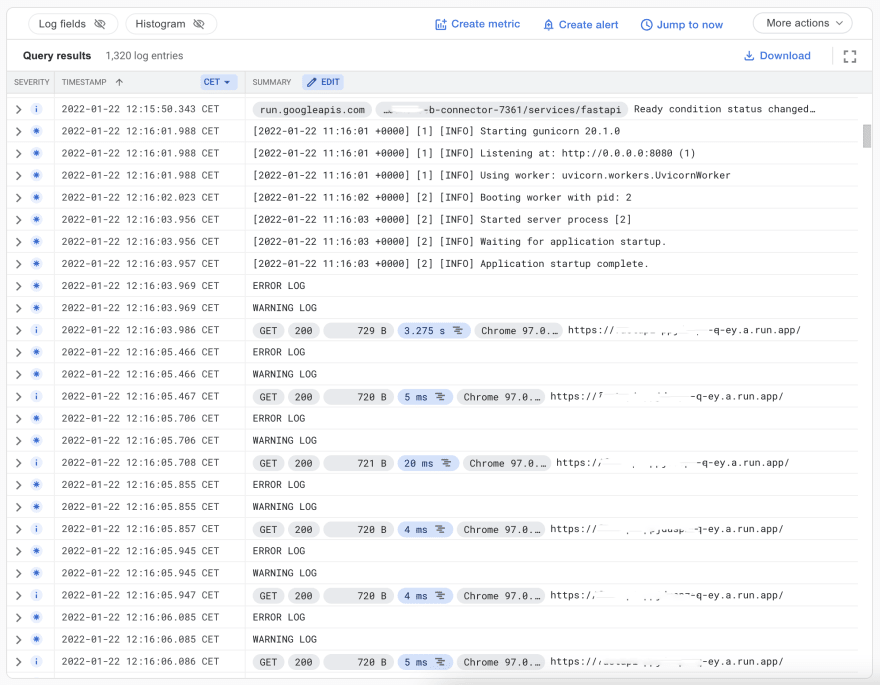

However, FastAPI is a pretty new framework and does not have any built-in support from the logging client. The log entries are loosely coupled, and Google Cloud Logging does not correctly show even the severity of the log entries:

I tried to modify my logging accordingly to ensure better traceability of the log entries.

Enable Log Tracing

One of the main objectives was to include the so-called trace ID for each log entry. This ID enables the log entries to be linked to the respective request. The additional information makes it possible to trace which request caused the log entry. It is particularly advantageous when the REST API is under heavy traffic, and errors or warnings should be reproduced.

I know that there are tools like OpenTelemetry. Even Google suggests OpenTelemetry in their docs. But for most applications, in my opinion, this is over-engineering, especially for stand-alone applications (not chained in a micro service architecture).

However, if applications are hosted with the App Engine, Cloud Functions or Cloud Run on Google Cloud, the GCP proxy/load balancer technology adds a special incoming request header:

X-Cloud-Trace-Context: TRACE_ID/SPAN_ID;o=TRACE_TRUE

I recommend the further reading about the trace log integration. We will use this header information to link the log entries as already discussed.

File Structure

I decided to create a directory called cloud_logging. At this point it should be mentioned that the folder is deliberately not only called logging as there may be complications with the build-in packages of the same name.

The structure after setup of FastAPI and my logging adaptation looks like this:

|-- Dockerfile

|-- cloud_logging

| |-- __init__.py

| |-- filter.py

| |-- middleware.py

| `-- setup.py

|-- main.py

|-- readme.md

`-- requirements.txt

One day, I will publish the source code as a published python package or try to make a pull request to the google logging client for the native integration.

Middleware

Let us start with the middleware. This class implements the BaseHTTPMiddleware class provided by Starlette and is mainly used to provide additional logic for all incoming requests. Starlette is shipped with FastAPI and it is the underlying ASGI framework.

Essentially, two important things are done here. Firstly, essential information about the request is written into a Python dictionary. Secondly, it checks whether the previously mentioned header is available.

Both pieces of information are processed and written into context variables. We use these variables later in the filter.

The finished middleware then looks like this:

import contextvars

import sys

from fastapi.logger import logger

from starlette.middleware.base import BaseHTTPMiddleware, RequestResponseEndpoint

from starlette.requests import Request

from starlette.responses import Response, JSONResponse

cloud_trace_context = contextvars.ContextVar('cloud_trace_context', default='')

http_request_context = contextvars.ContextVar('http_request_context', default=dict({}))

class LoggingMiddleware(BaseHTTPMiddleware):

async def dispatch(

self, request: Request, call_next: RequestResponseEndpoint

) -> Response:

if 'x-cloud-trace-context' in request.headers:

cloud_trace_context.set(request.headers.get('x-cloud-trace-context'))

http_request = {

'requestMethod': request.method,

'requestUrl': request.url.path,

'requestSize': sys.getsizeof(request),

'remoteIp': request.client.host,

'protocol': request.url.scheme,

}

if 'referrer' in request.headers:

http_request['referrer'] = request.headers.get('referrer')

if 'user-agent' in request.headers:

http_request['userAgent'] = request.headers.get('user-agent')

http_request_context.set(http_request)

try:

return await call_next(request)

except Exception as ex:

logger.debug(f'Request failed: {ex}')

return JSONResponse(

status_code=500,

content={

'success': False,

'message': ex

}

)

Logging Filter

After that, it is necessary to implement a logging filter. The following filter implements the CloudLoggingFilter provided in the Google Cloud Logging Library. We overwrite the filter method, which is called for each log entry. The main task of the filter is to append the information according to the Google Cloud Logging format to the record that was previously fetched by the middleware. The trace header still needs a little processing.

The finished filter looks like this:

import logging

import re

from google.cloud.logging_v2.handlers import CloudLoggingFilter

from cloud_logging.middleware import http_request_context, cloud_trace_context

class GoogleCloudLogFilter(CloudLoggingFilter):

def filter(self, record: logging.LogRecord) -> bool:

record.http_request = http_request_context.get()

trace = cloud_trace_context.get()

split_header = trace.split('/', 1)

record.trace = f"projects/{self.project}/traces/{split_header[0]}"

header_suffix = split_header[1]

record.span_id = re.findall(r'^\w+', header_suffix)[0]

super().filter(record)

return True

Setup

Last but not least, we have to make sure that the Python logger executes the desired logic. For this purpose, I have written a setup method called when the FastAPI application is started.

Essentially, this connects the logger provided by Python to Google Cloud Logging and our filter:

import logging

import google.cloud.logging

from cloud_logging.filter import GoogleCloudLogFilter

from fastapi.logger import logger

def setup_logging():

client = google.cloud.logging.Client()

handler = client.get_default_handler()

handler.setLevel(logging.DEBUG)

handler.filters = []

handler.addFilter(GoogleCloudLogFilter(project=client.project))

logger.handlers = []

logger.addHandler(handler)

logger.setLevel(logging.DEBUG)

Main Application

When starting the FastAPI application, adding the middleware and executing the setup method are necessary. I have decided to use Google Cloud Logging only in the production environment; locally, the standard logger is used.

The main application could then look something like this (main.py):

import logging

from fastapi import FastAPI

from fastapi.logger import logger

from pydantic import BaseSettings

from cloud_logging.middleware import LoggingMiddleware

from cloud_logging.setup import setup_logging

class Settings(BaseSettings):

environment: str = 'development'

settings = Settings()

app = FastAPI()

if settings.environment == 'production':

setup_logging()

app.add_middleware(LoggingMiddleware)

else:

logger.setLevel(logging.DEBUG)

@app.get('/')

async def root():

logger.debug('DEBUG LOG')

logger.error('ERROR LOG')

logger.warning('WARNING LOG')

logger.info('INFO LOG')

return {'message': 'Hello World'}

After re-deploying the application to Google Cloud Run, the log entries looks much better.

Conclusion

Clean log entries are one of the most important things for me when developing robust applications. Even though the process described here is not yet perfect, the log entries in the Google Cloud interface look excellent. And with the help of the trace, the log entries that belong together can now be analyzed.

Dockerfile

Just to make the things complete in the blog post, I will share my Dockerfile. The image is optimized for the usage on Google Cloud Run. The environment variable PORT is always set when using Cloud Run.

FROM python:3.9-slim

WORKDIR /app

COPY requirements.txt /app/requirements.txt

RUN pip install -r /app/requirements.txt

COPY . /app

CMD exec gunicorn --bind :$PORT --workers 1 --worker-class uvicorn.workers.UvicornWorker --threads 8 main:app

Cover Photo by Chris Ried on Unsplash

This content originally appeared on DEV Community and was authored by Florian Flock

Florian Flock | Sciencx (2022-01-22T17:19:29+00:00) Enable feature-rich Logging for FastAPI on Google Cloud Logging. Retrieved from https://www.scien.cx/2022/01/22/enable-feature-rich-logging-for-fastapi-on-google-cloud-logging/

Please log in to upload a file.

There are no updates yet.

Click the Upload button above to add an update.