This content originally appeared on DEV Community and was authored by CAST AI

Does using the cloud make your business sustainable? Research suggests that it’s a greener choice.

By moving to the cloud, the e-commerce giant Etsy slashed its energy consumption by 13% (from 7,330 MWh in 2018 to 6,376 MWh in 2019), saving enough energy to power 450 households for a month. (1)

But migrating to the cloud doesn’t guarantee anything if you neglect to optimize your resource utilization over the long term.

(1) A megawatt-hour (MWh) equals 1,000 kilowatt-hours (kWh): 1,000 kilowatts of electricity used continuously for one hour. This is roughly the amount of electricity that 330 homes use in one hour (source).

How much energy does cloud computing consume?

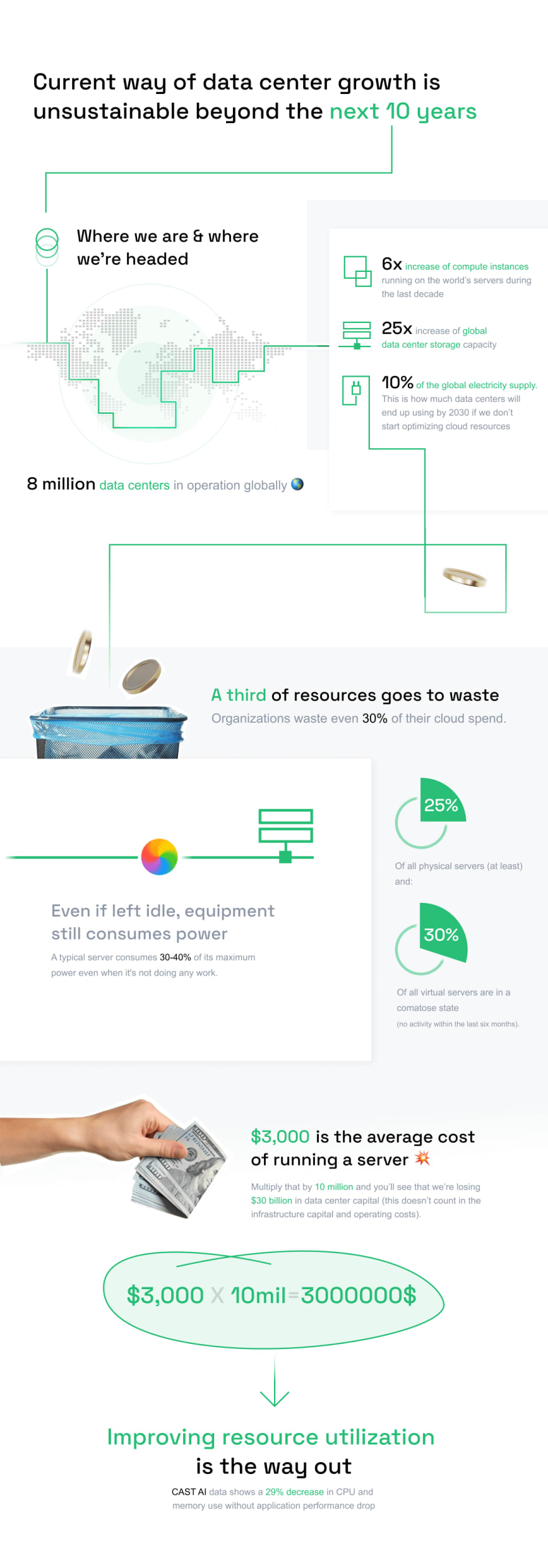

Back in 2012, you’d only find some 500k data centers scattered around the world to support the global internet traffic of the post-PC era. Fast forward to today and you’re looking at 8 million data centers in operation.

All of this infrastructure comes with increasing energy demand. If we fail to optimize it, data centers will end up using over 10% of the global electricity supply by 2030.

There’s no need to jump into the future to see how energy-consuming data centers are.

Just to give you an idea about how fast the data center landscape has been growing:

Between 2010 and 2018, the amount of data passing through the internet increased more than 10x, while global data center storage capacity increased 25x and the number of compute instances running on the world’s servers increased more than 6x.

Now add to this compute-intensive AI applications or IoT devices sending and receiving real-time data, and you've got yourself into a (cloud waste) fix.

How does all this translate into energy consumption?

Let’s take a step back to 2015 (the year when Volkswagen got caught using software to trick emissions tests and Instagram became bigger than Twitter).

That year, global data centers consumed 416.2 TWh of electricity - more than the energy consumption of the entire UK!

No matter the hardware and software innovations, this number will likely double every 4 years. This level of data center growth isn’t sustainable beyond the next 10-15 years, according to Ian Bitterlin, a renowned data center expert.
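To get a feel for what "doubling every 4 years" implies, here is a minimal sketch that projects the 2015 figure forward. It assumes clean exponential growth from the 416.2 TWh baseline; the function name and the chosen years are illustrative, and this is not a forecast from the cited expert.

```python
def projected_twh(base_twh: float, base_year: int, year: int,
                  doubling_years: float = 4.0) -> float:
    """Project consumption assuming it doubles every `doubling_years` years."""
    return base_twh * 2 ** ((year - base_year) / doubling_years)


# Baseline from the article: 416.2 TWh in 2015.
for year in (2015, 2019, 2023, 2027):
    print(year, round(projected_twh(416.2, 2015, year), 1), "TWh")
```

Under this assumption, consumption grows eightfold in just twelve years, which is why the growth rate matters more than the starting number.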

What exactly does Bitterlin mean by “not sustainable”?

For instance, that Japan’s data centers will require more power than the country’s entire electricity generation capacity by 2030.

China is already seeing production lines stopping periodically due to energy shortages. This is just an example of what could happen to the digital world if we fail to address this problem soon.

How to make the cloud sustainable again

Getting cloud resources like compute, memory or storage is easy. Making good use of them? Not so much.

Organizations struggle to fully utilize the resources they purchase, wasting as much as 30% of their cloud spend.

Servers tend to be only 5-15% utilized, processors 10-20%, and storage devices 20-40%.

Now you see why optimizing utilization has so much potential here.

But that’s not everything.

A lot of servers around the world don’t have any jobs to run.

Even if left idle, the equipment still consumes power. A typical server consumes 30-40% of its maximum power even when it's not doing any work.

This applies to virtual servers as well. A team at Teads Engineering confirmed this in their study of AWS instances, where their c5.metal drew 187 watts when it didn’t have any workloads assigned to it.
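A watt is a rate of power draw, so the energy wasted depends on how long the machine sits idle. As a rough sketch, assuming the 187 W idle figure quoted above and a server that stays idle for a full year (a deliberately simple assumption, as is the function name), the conversion to kilowatt-hours looks like this:

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def idle_energy_kwh(idle_watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in kWh used by a machine drawing `idle_watts` for `hours`."""
    return idle_watts * hours / 1000


print(f"{idle_energy_kwh(187):.2f} kWh per year")
```

That comes to roughly 1.6 MWh per year for a single idle machine.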

Now let’s see how this works from the global perspective.

At least 25% of all physical servers - and 30% of all virtual servers - are in a comatose state (meaning that they had no activity within the last six months). This implies that we might have some 10 million zombie servers sitting idly around the world.

The average cost of running a server is $3,000. Multiply that by 10 million and you’ll see that we’re losing $30 billion in data center capital (not counting infrastructure capital and operating costs).
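The arithmetic is simple enough to rerun with your own numbers. The sketch below uses the two figures from the text as defaults; the function and constant names are made up for illustration.

```python
ZOMBIE_SERVERS = 10_000_000   # estimate quoted in the article
COST_PER_SERVER_USD = 3_000   # average cost quoted in the article


def idle_capital_usd(servers: int, cost_per_server: int) -> int:
    """Capital tied up in servers that do no useful work."""
    return servers * cost_per_server


print(f"${idle_capital_usd(ZOMBIE_SERVERS, COST_PER_SERVER_USD):,}")
```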

Optimizing the current use of existing cloud resources is how you avoid China’s fate and deliver the best performance using the resources you already have.

Optimization starts with knowing how much damage to the environment you can eliminate by utilizing servers better. That often depends on your cloud provider.

Not all cloud providers use renewable energy

If you’re on AWS, the inefficiencies in resource use might directly translate into burning coal.

AWS is the only one among the three major public cloud companies that doesn’t publish its power consumption data. The company is reported to be “on a path” to powering its operations with renewable energy by 2025 and becoming net-zero carbon by 2040.

Meanwhile, Google is carbon-neutral and reports on its data center efficiency in detail.

Microsoft's Azure cloud has been carbon-neutral since 2012, using wind, solar, and hydro-electric energy sources. It also offers the Emissions Impact Dashboard, which provides carbon emissions data associated with Azure services.

Most large companies now have public climate strategies, but identifying genuine climate leaders is challenging because of the lack of regulatory oversight at a national and sectoral level.

Still, picking the right cloud provider could become the first step to making your cloud setup more sustainable.

Where do you go next in your optimization effort?

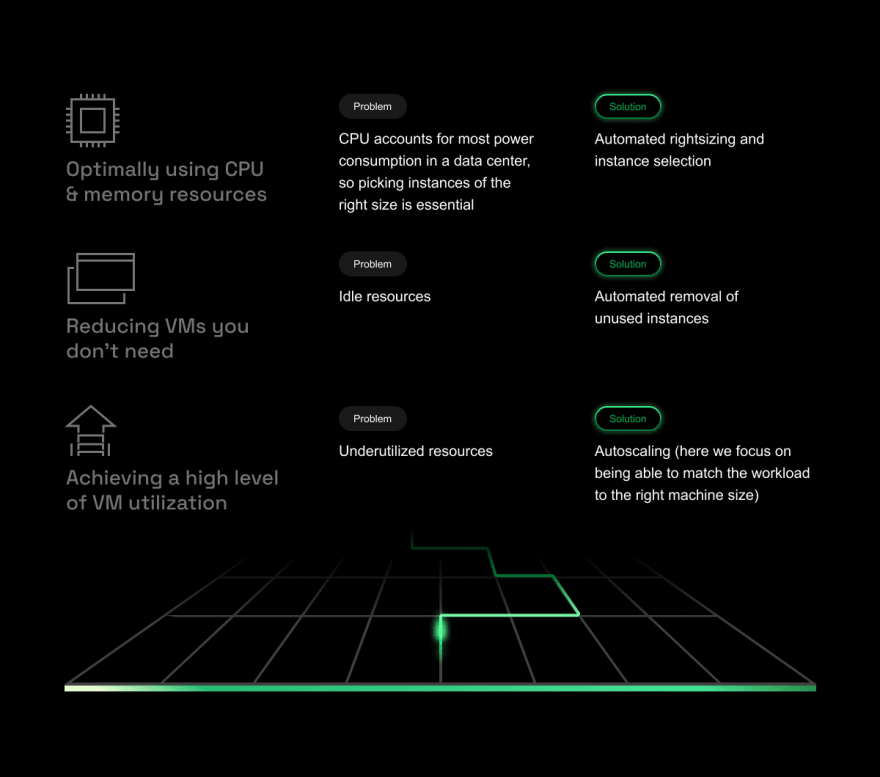

Optimizing compute makes the biggest impact

Which cloud service consumes the most energy? Research clearly points to compute.

The CPU uses more than 50% of the power required to run a server. Another study pointed to CPU and memory as the two largest power consumers in modern data center servers (without GPU).

So, we can safely assume that compute (CPU + memory) easily accounts for over 50% of the power used by the server behind your virtual machine.

Addressing underutilized compute practically guarantees a quick win.

Companies may try these 3 typical methods for solving utilization issues:

- Accurate virtual machine selection and rightsizing - teams first estimate their workloads’ demands and based on their capacity planning find the best instance types and sizes to accommodate these needs. The idea here is to get just enough capacity to ensure good performance without overprovisioning (this often happens when teams choose an instance type based on their assumption of peak load).

- Autoscaling cloud instances - workload demands might change in line with seasonal patterns. To make sure their applications have a place to run, teams use autoscaling methods that expand capacity when needed and shrink it when there’s no work to be done. There’s no reason to keep machines running at 100% capacity when all your clients are fast asleep.

- Removing idle instances - whether it’s a shadow IT project or a testing instance left running, leaving an unused virtual machine on is like setting an office’s AC to pleasantly cool over a holiday weekend. Pure waste. Teams should do their best to remove these resources as soon as possible.
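To make the rightsizing and idle-removal ideas above concrete, here is a minimal sketch of the kind of rule a team might script by hand. Everything in it is an assumption for illustration: the `Instance` type, the 2% idle threshold, and the 60% target utilization are hypothetical, and it is not how any particular cloud provider or optimization tool works. It does not cover autoscaling.

```python
import math
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    vcpus: int
    cpu_samples: list[float]  # observed CPU utilization, 0.0 to 1.0


def recommend(inst: Instance, idle_below: float = 0.02,
              target: float = 0.6) -> str:
    """Suggest an action based on the peak observed CPU utilization."""
    peak = max(inst.cpu_samples)
    if peak < idle_below:
        return "remove"  # never did meaningful work in the sampled window
    # vCPUs needed so that the observed peak lands at the target utilization.
    needed = max(1, math.ceil(inst.vcpus * peak / target))
    if needed < inst.vcpus:
        return f"rightsize to {needed} vCPUs"
    return "keep"


fleet = [
    Instance("forgotten-test-box", 8, [0.0, 0.01]),
    Instance("oversized-api", 16, [0.10, 0.15]),
    Instance("busy-worker", 4, [0.30, 0.55]),
]
for inst in fleet:
    print(inst.name, "->", recommend(inst))
```

Sizing against the peak rather than the average is a conservative choice; a real setup would also look at memory, at longer observation windows, and at how quickly demand changes.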

Unfortunately, the above is easier said than done.

To do it manually, a team of engineers needs to oversee your cloud resources 24/7 to instantly identify changes in workload demands and adjust capacity in real time. This needs to be done for every single workload running on the public cloud!

AWS offers some 400 different EC2 instance types and millions of configuration options, so picking the best virtual machine for the job is next to impossible.

And let’s not forget about the team.

Considering skill shortages on the market, filling such roles and retaining people is bound to be challenging. Engineers would rather work on more interesting things than mind-numbing optimization tasks.

We are sure that teams using automation can improve their utilization significantly.

Based on the Kubernetes clusters running on our platform, at least 30% of compute resources are wasted and could be cut to make the setup more sustainable and cost-efficient.

By how much? Our latest data sample shows a drop from 256,445 CPU cores before optimization to 182,141 after optimization (29% reduction).
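The percentage follows directly from the two core counts. A small sketch (the function name is illustrative) that you can reuse for your own before and after figures:

```python
def reduction(before: int, after: int) -> tuple[int, float]:
    """Return the absolute saving and the saving as a share of `before`."""
    saved = before - after
    return saved, saved / before


saved, share = reduction(256_445, 182_141)
print(f"{saved:,} cores freed ({share:.1%} reduction)")
```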

How does this translate into costs? The optimization resulted in $1,416,455 of savings per month.

This increased efficiency would help us all to drastically reduce the carbon footprint of cloud infrastructures, at the same time slashing costs by eliminating resources we don’t need.

What does this mean for your company?

Taking a look at cloud commitments of real companies can reveal a lot:

As of January 31, 2020, Asana had committed $9.2 million in a contract with AWS. Assuming the industry-standard 30% waste rate holds true and Asana doesn’t do any optimization, the company is likely to waste $2.76 million of that cloud spend.

There's no easy way out once you've committed to a certain spend, but once the end of the term approaches, it's in your best interest to look for alternatives like automated optimization.

The numbers are even more dramatic for Lyft. In January 2019, Lyft signed a commercial agreement with AWS, committing to spend at least $300 million until December 2021 on the cloud. Without optimization, Lyft could have lost $90 million on cloud waste over these three years.

The same goes for Slack. Slack’s contract with AWS spans from 2018 through 2023, with a total minimum commitment of $250 million. If Slack fails to optimize its cloud resource use, it stands to lose $75 million during this 5-year period.
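All three estimates apply the same 30% waste rate to a minimum commitment. The sketch below reproduces them; the commitment amounts come from the text, while the function name and the flat 30% rate applied to every company are simplifying assumptions.

```python
WASTE_PCT = 30  # assumed industry-standard share of wasted cloud spend

commitments_usd = {
    "Asana": 9_200_000,
    "Lyft": 300_000_000,
    "Slack": 250_000_000,
}


def estimated_waste_usd(commitment_usd: int, waste_pct: int = WASTE_PCT) -> float:
    """Share of a committed spend lost to unused resources."""
    return commitment_usd * waste_pct / 100


for company, commitment in commitments_usd.items():
    print(f"{company}: ${estimated_waste_usd(commitment):,.0f}")
```

Swap in your own commitment and an observed waste rate to get a first-order estimate for your company.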

Are you ready to see what your cloud bill could be?

Run a free savings report with CAST AI to find out. You're going to be surprised.


CAST AI | Sciencx (2022-02-18T14:40:19+00:00) Environmental Impact of the Cloud: 5 Data-Based Insights and One Good Fix. Retrieved from https://www.scien.cx/2022/02/18/environmental-impact-of-the-cloud-5-data-based-insights-and-one-good-fix/
