This content originally appeared on DEV Community and was authored by David Bros

This is a continuation of the previous article: Elasticsearch in 5 minutes.

Logstash is the second essential tool for Data Engineering. Like Elasticsearch, it is developed and maintained by the Elastic team, and it also has a free version available (which will cover all your needs).

In this article we will learn what Logstash is and how it works. We will also set up a Logstash processor and play with some of the plugins it has to offer, all in under 5 minutes!

For this article I have set up a GitHub repository containing the files needed for some of the steps, as well as the images used in this article.

What is Logstash

Logstash is a data processor: it acts as the pipeline between your databases or nodes and other resources, which could be other databases, nodes or applications.

However, the true power of Logstash lies in its data manipulation functions: transforming and filtering operations.

Where Logstash Shines

- Data Processing

- Data Streaming Environments

- Data Analytics Environments

Why does it shine in these environments

- High compatibility with input and output resources: Logstash can read from almost anything, including cloud applications, message brokers and websockets. Elastic publishes the full lists of input and output plugins.

- Fast and flexible transformation functions: transform data structures with simple, declaratively defined operations in the pipeline configuration.

- Filtering: a huge range of filtering functions is at your disposal, and you can use them in different pipelines to derive multiple outputs from one raw source.

What version are we setting up

We will be setting up the free version of the Logstash 8.x processor.

Step 1, Docker

For ease of use we will be using a CentOS 7 Docker container.

Pull the image:

docker pull centos:7

The image is available on Docker Hub.

Run the container with the SYS_ADMIN capability and the host's cgroup filesystem mounted read-only:

docker run -id --cap-add=SYS_ADMIN --name=logstash-centos7 -v /sys/fs/cgroup:/sys/fs/cgroup:ro centos:7 /sbin/init

We add the SYS_ADMIN capability (and mount /sys/fs/cgroup) because systemctl needs systemd, started as /sbin/init, to work inside the container.

Connect to the container:

docker exec -it logstash-centos7 /bin/bash
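If you rebuild this environment often, the Docker commands above can be wrapped in a small helper. This is a hypothetical convenience function (`ensure_logstash_container` is not part of the original article); it reuses the image, flags and container name from the steps above and skips `docker run` when a container with that name already exists.

```shell
# Hypothetical helper: pull centos:7 and create the container only once.
# The docker run flags mirror the command used in this article.
ensure_logstash_container() {
  local name="${1:-logstash-centos7}"

  docker pull centos:7

  # `docker ps -a` also lists stopped containers; -F -x matches the whole name literally.
  if docker ps -a --format '{{.Names}}' | grep -Fqx "$name"; then
    # The container already exists: just make sure it is running.
    docker start "$name"
  else
    docker run -id --cap-add=SYS_ADMIN --name="$name" \
      -v /sys/fs/cgroup:/sys/fs/cgroup:ro centos:7 /sbin/init
  fi
}
```

For example, `ensure_logstash_container && docker exec -it logstash-centos7 /bin/bash` brings the container up and opens a shell in it.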

Step 2, Machine Setup

Update the machine:

yum update -y && yum upgrade -y

Install a text editor (any):

yum install vim -y

Step 3, Install Logstash

Import Elastic's GPG key (we are already root inside the container, so sudo is not needed):

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

Add this Logstash repository definition to /etc/yum.repos.d/logstash.repo:

[logstash-8.x]
name=Elastic repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

Remember to save!
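Instead of pasting the repository definition into an editor, you can write it with a shell heredoc. A minimal sketch: the function name `write_logstash_repo` is hypothetical, and the destination defaults to /etc/yum.repos.d, the directory yum reads `.repo` files from.

```shell
# Hypothetical helper: write the Logstash 8.x yum repository file.
# Usage: write_logstash_repo [destination]
write_logstash_repo() {
  local dest="${1:-/etc/yum.repos.d/logstash.repo}"

  # Quoted 'EOF' so the shell does not expand anything inside the file body.
  cat > "$dest" <<'EOF'
[logstash-8.x]
name=Elastic repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF
}
```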

Install Logstash from the new repository:

yum install logstash

Start Logstash:

systemctl start logstash

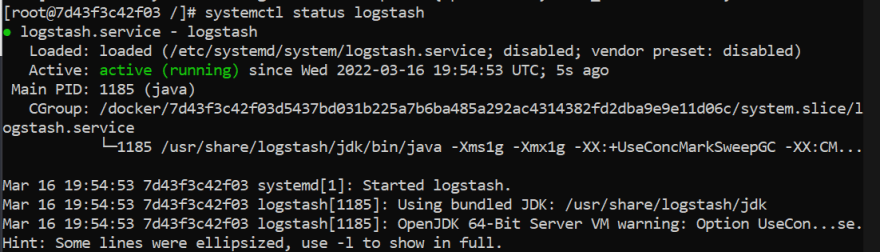

Verify that Logstash is running:

systemctl status logstash
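When scripting these steps, `systemctl is-active --quiet` gives a status check that is easier to act on than the human-readable `systemctl status` output. A sketch under that assumption; `wait_for_service` and its default of 30 attempts are illustrative, not part of the original setup.

```shell
# Hypothetical helper: poll a systemd unit until it reports "active".
# Usage: wait_for_service <unit> [max_attempts]
wait_for_service() {
  local unit="$1"
  local attempts="${2:-30}"

  while [ "$attempts" -gt 0 ]; do
    # is-active --quiet prints nothing and exits 0 only when the unit is active.
    if systemctl is-active --quiet "$unit"; then
      echo "$unit is active"
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 1
  done

  echo "$unit did not become active" >&2
  return 1
}
```

For example: `systemctl start logstash && wait_for_service logstash`.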

Now stop Logstash; we'll restart it later on:

systemctl stop logstash

Step 4, Set up an Elasticsearch node

We will be using Elasticsearch as both the input and the output of our pipeline, so you will need another Docker container running an Elasticsearch node. If you don't have one running already, the previous article, Elasticsearch in 5 minutes, walks through the setup.

Note down the IPs of your containers

By default, Docker creates a network called bridge that containers use to communicate with each other. To find a container's IP, first get its ID with docker ps, then inspect the container:

docker inspect {docker container id}

docker inspect prints a very large JSON document; search it for the IPAddress field.

Note down the IPs of both containers; we'll need them later.
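Rather than scrolling through the full JSON, `docker inspect` accepts a Go template via `--format`, so you can print just the address. A sketch: `container_ip` is a hypothetical helper, and it prints one line per network the container is attached to (on the default bridge network that is a single IP).

```shell
# Hypothetical helper: print the IP address(es) of a container, by name or ID.
container_ip() {
  # range walks every attached network; println adds a newline after each IP.
  docker inspect --format '{{range .NetworkSettings.Networks}}{{println .IPAddress}}{{end}}' "$1"
}
```

For example, `container_ip logstash-centos7` prints the Logstash container's address; run it again with the name or ID of your Elasticsearch container (`docker ps` lists both).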

Inside your Elasticsearch container, create a test_pipeline index and insert some sample data. Copy the sample data from the GitHub repository and paste it into /tmp/sample_data.json:

vim /tmp/sample_data.json
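If you don't have the author's sample file at hand, any file in the bulk API's newline-delimited JSON format will do: an action line followed by a document line, with a trailing newline at the end. This sketch generates such a file; the function name and the `id`/`message` fields are hypothetical and are not the repository's dataset.

```shell
# Hypothetical generator for bulk-API NDJSON test data.
# Usage: make_sample_ndjson [count] [index] > /tmp/sample_data.json
make_sample_ndjson() {
  local count="${1:-10}"
  local index="${2:-test_pipeline}"
  local i=1

  while [ "$i" -le "$count" ]; do
    # Action line: tells _bulk which index the next document goes to.
    printf '{"index":{"_index":"%s"}}\n' "$index"
    # Source line: the document itself (illustrative fields only).
    printf '{"id":%d,"message":"sample message %d"}\n' "$i" "$i"
    i=$((i + 1))
  done
}
```

Running `make_sample_ndjson 10 > /tmp/sample_data.json` produces 10 documents (20 lines), and every line, including the last, ends with a newline as the bulk API requires.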

Check that the index has been created correctly:

curl -X GET --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic https://localhost:9200/_cat/indices

Index the sample data we saved earlier using the bulk API:

curl -X POST --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic -H 'Content-Type: application/x-ndjson' https://localhost:9200/test_pipeline/_bulk --data-binary @/tmp/sample_data.json
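The bulk API answers with HTTP 200 even when some documents are rejected, so it is worth checking the top-level `errors` flag in the response. A minimal sketch, assuming the `/_bulk` endpoint and the certificate path used above; `bulk_index`, `ES_URL` and `ES_PASSWORD` are hypothetical names.

```shell
# Hypothetical wrapper around the _bulk call that fails on indexing errors.
# ES_URL and ES_PASSWORD are optional environment variables (illustrative names).
bulk_index() {
  local file="$1"
  local es_url="${ES_URL:-https://localhost:9200}"
  local response

  # Without ES_PASSWORD, curl prompts for the elastic user's password.
  response=$(curl -s -X POST --cacert /etc/elasticsearch/certs/http_ca.crt \
    -u "elastic${ES_PASSWORD:+:$ES_PASSWORD}" \
    -H 'Content-Type: application/x-ndjson' \
    "$es_url/_bulk" --data-binary "@$file")

  # Elasticsearch returns compact JSON, so look for the literal "errors":false.
  case "$response" in
    *'"errors":false'*) echo "bulk indexing succeeded" ;;
    *) echo "bulk indexing failed: $response" >&2; return 1 ;;
  esac
}
```

For example: `bulk_index /tmp/sample_data.json`.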

Create another index called test_pipeline_output.
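Creating an index is a single `PUT` request to its name, and the request body can already set `number_of_replicas`, which would make the later replica change unnecessary for this index. A sketch under the same assumptions as the commands above (HTTPS with the generated CA certificate, `elastic` user); `create_index`, `ES_URL` and `ES_PASSWORD` are hypothetical names.

```shell
# Hypothetical helper: create an index with zero replicas (single-node setup).
create_index() {
  local index="$1"
  local es_url="${ES_URL:-https://localhost:9200}"
  local response

  response=$(curl -s -X PUT --cacert /etc/elasticsearch/certs/http_ca.crt \
    -u "elastic${ES_PASSWORD:+:$ES_PASSWORD}" \
    -H 'Content-Type: application/json' \
    "$es_url/$index" \
    --data '{"settings": {"number_of_replicas": 0}}')

  # A successful creation returns {"acknowledged":true,...}.
  case "$response" in
    *'"acknowledged":true'*) echo "created $index" ;;
    *) echo "could not create $index: $response" >&2; return 1 ;;
  esac
}
```

For example: `create_index test_pipeline_output`.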

Due to time constraints (hint: see the title), we are going to take some shortcuts: disabling security (including SSL) and setting our replicas to 0.

To disable security, change xpack.security.enabled to false in /etc/elasticsearch/elasticsearch.yml.
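The same change can be made non-interactively with `sed`. A sketch, assuming the file contains an `xpack.security.enabled:` line starting at the beginning of a line (as the configuration generated by the 8.x package does) and GNU `sed`; the function name is hypothetical, and it keeps a `.bak` copy so the change is easy to undo.

```shell
# Hypothetical helper: turn off Elasticsearch security (test setups only).
disable_es_security() {
  local conf="${1:-/etc/elasticsearch/elasticsearch.yml}"

  # Keep a backup of the original configuration.
  cp "$conf" "$conf.bak"
  # Rewrite the existing setting in place (GNU sed -i syntax).
  sed -i 's/^xpack\.security\.enabled:.*/xpack.security.enabled: false/' "$conf"
}
```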

Restart Elasticsearch:

systemctl restart elasticsearch

Now set your replicas to 0. Run this command for both the test_pipeline and test_pipeline_output indices:

curl -X PUT -u elastic http://localhost:9200/test_pipeline/_settings -H 'Content-Type: application/json' --data '{"index": {"number_of_replicas": 0}}'
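To avoid retyping the command per index, a loop over the index names does the same job. A sketch assuming security is already disabled (plain HTTP, no credentials); `set_zero_replicas` and `ES_URL` are hypothetical names.

```shell
# Hypothetical helper: set number_of_replicas to 0 for each index given.
set_zero_replicas() {
  local es_url="${ES_URL:-http://localhost:9200}"
  local index

  for index in "$@"; do
    curl -s -X PUT "$es_url/$index/_settings" \
      -H 'Content-Type: application/json' \
      --data '{"index": {"number_of_replicas": 0}}'
    # Elasticsearch responses usually lack a trailing newline; add one for readability.
    echo
  done
}
```

For example: `set_zero_replicas test_pipeline test_pipeline_output`. A single-node cluster can never allocate replica shards, which is why the indices otherwise stay yellow.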

Step 5, Set up a Logstash pipeline

Copy the pipeline configuration from the GitHub repository to

/etc/logstash/conf.d/pipeline.conf

The purpose of this pipeline is to copy the documents from the test_pipeline index to another index called test_pipeline_output.
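If you don't have the author's pipeline file at hand, this sketch writes a minimal one with the same purpose: an `elasticsearch` input reading test_pipeline and an `elasticsearch` output writing to test_pipeline_output. The helper name is hypothetical, the configuration is an assumption rather than the repository's file, and the first argument stands for the Elasticsearch container IP you noted earlier.

```shell
# Hypothetical helper: write a minimal copy-index pipeline for Logstash.
# Usage: write_pipeline_conf <elasticsearch-ip> [destination]
write_pipeline_conf() {
  local es_ip="$1"
  local dest="${2:-/etc/logstash/conf.d/pipeline.conf}"

  # Unquoted EOF so that ${es_ip} is expanded by the shell.
  cat > "$dest" <<EOF
input {
  elasticsearch {
    hosts => ["${es_ip}:9200"]
    index => "test_pipeline"
    # No schedule is set, so the query runs once each time the pipeline starts.
  }
}

output {
  elasticsearch {
    hosts => ["${es_ip}:9200"]
    index => "test_pipeline_output"
  }
}
EOF
}
```

For example, `write_pipeline_conf 172.17.0.2` (replace the example address with your Elasticsearch container's IP). The `elasticsearch` input also accepts a cron-style `schedule` option if you want the copy to repeat on a fixed interval.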

The only thing left now is to restart Logstash in our Logstash Docker container:

systemctl restart logstash
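If the service does not stay up after the restart, a syntax error in the pipeline file is a common cause. Logstash can parse a configuration and exit without running it via `--config.test_and_exit`. A sketch assuming the RPM's default install path /usr/share/logstash and settings directory /etc/logstash; the wrapper and the `LOGSTASH_BIN` override are hypothetical.

```shell
# Hypothetical helper: validate a pipeline file without starting the pipeline.
check_pipeline_conf() {
  local conf="${1:-/etc/logstash/conf.d/pipeline.conf}"
  local bin="${LOGSTASH_BIN:-/usr/share/logstash/bin/logstash}"

  # --path.settings points Logstash at the package's settings directory.
  if "$bin" --path.settings /etc/logstash --config.test_and_exit -f "$conf"; then
    echo "configuration OK: $conf"
  else
    echo "configuration invalid: $conf" >&2
    return 1
  fi
}
```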

Let it run for a couple of minutes, then check the indices on your Elasticsearch node (security is now disabled, so plain HTTP is enough):

curl -X GET http://localhost:9200/_cat/indices

In the image you can see that the output index has 100 documents while the original has 10. This is because Logstash keeps re-running this pipeline every few seconds, copying the same documents again on each run.
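To compare the two indices without reading the `_cat/indices` table, the `_count` API returns the document count of a single index as JSON. A sketch assuming security is disabled as above; `doc_count` is a hypothetical helper that extracts the number with `sed`.

```shell
# Hypothetical helper: print the number of documents in an index.
doc_count() {
  local es_url="${ES_URL:-http://localhost:9200}"

  # _count responds with e.g. {"count":10,"_shards":{...}}; keep only the number.
  curl -s "$es_url/$1/_count" | sed -n 's/.*"count":\([0-9]*\).*/\1/p'
}
```

For example: `echo "source: $(doc_count test_pipeline), output: $(doc_count test_pipeline_output)"`. If the output count keeps growing in multiples of the source count, each run is indexing fresh copies. Setting the output's `document_id` to the source document's `_id` (exposed by the input's `docinfo` option) would make repeated runs overwrite documents instead; that is a change to the pipeline, not something this setup does.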

In any case, in this article we have successfully set up a Logstash processor and moved data around with a very basic setup.

Follow me for part 3, where we'll set up a Kibana UI in 5 minutes!


David Bros | Sciencx (2022-03-22T23:19:18+00:00) ELK: Logstash in 5 minutes. Retrieved from https://www.scien.cx/2022/03/22/elk-logstash-in-5-minutes/
