This content originally appeared on DEV Community and was authored by Blair

These profanity filtering and content moderation best practices include key platform requirements and essential tools for enterprise-scale advanced filtering systems.

Why Organizations Need Advanced Profanity Filtering Technology

Massive quantities of text, images, and videos are published daily. Organizations whose platforms rely on user-generated content struggle to maintain customer safety and trust as the volume of content keeps growing. Profanity in customer communications can have a substantial impact on corporate revenue: litigation, bad publicity, and negative consumer sentiment can all cut into the bottom line.

Most B2C companies understand that customer satisfaction is crucial to the success of the business. Beyond billing statements, B2C companies communicate with their customers through online form submissions, chats, forums, support portals, and emails. Organizations need to monitor the content that their platforms host to provide a safe and trusted environment for their users, manage brand perception and reputation, and comply with federal and state regulations.

Content moderation involves screening for, flagging, and removing inappropriate text, images, and videos that users post on a platform, according to pre-set rules. Moderated content can include profanity, violence, extremist views, nudity, hate speech, copyright infringement, spam, and other forms of inappropriate or offensive content.

The most effective way to keep tabs on all of this content is by using profanity filters and advanced content moderation technology. Here, we’ll cover 10 platform requirements and 10 key content moderation tools that a profanity filter must have to provide an ideal first line of defense.

10 Content Moderation Platform Requirements

Organizations need to implement advanced, automated profanity filter and content moderation capabilities that will reduce or eliminate inappropriate or unwanted content. The following 10 platform capabilities are critical:

Broad Content Filtering Capabilities – Perform text moderation, image moderation, and video moderation.

Multiple Application Use – Manage users and content across multiple applications, set up different filtering and moderation rules for each application and content source, and isolate moderators so they moderate content and users only for specific applications.

Scalability – Filter tens of thousands of messages per second on a single server.

Ease of Use and Performance – Rapidly implement an integrated, developer-friendly solution that begins working quickly to ensure that all visible online content is safe and clean.

Accuracy – Generate few false positives and misses with fast and accurate filtering in multiple languages to meet diverse business needs.

Built-in Reporting Tools – Use content moderation and content filtering reports and analytics to better understand the user community, extract meaningful insights from user content, and improve overall business performance.

Preapproval/Rejection of Content – Allow moderators to pre-screen content in a preapproval queue before it’s visible to other users.

User Discipline – Automatically take action when a user’s reputation/trust score reaches a designated threshold, use progressive disciplinary action to manage repeat offenders, and enable moderators to escalate issues to managers immediately.

Security – Choose an on-premise solution that ensures each customer’s data is isolated and ensures personally identifiable information (PII) is secure.

PII, COPPA, and EU Data Directive Compliance – Choose an on-premise solution that provides strict compliance with the Children’s Online Privacy Protection Act (COPPA), InfoSec, and EU Data Privacy Directive requirements.
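
As a rough illustration of the User Discipline requirement above, the sketch below accumulates violation points per user and maps the running score to a progressively stricter action. The thresholds, action names, and the `UserRecord` and `record_violation` helpers are all hypothetical and chosen only for the example; they do not describe any particular product's API.

```python
from dataclasses import dataclass

# Hypothetical escalation ladder: (minimum score, action), strictest first.
ACTIONS = [(10, "ban"), (6, "suspend"), (3, "mute"), (1, "warn")]


@dataclass
class UserRecord:
    user_id: str
    score: int = 0  # accumulated violation points (illustrative scale)


def record_violation(user: UserRecord, severity: int) -> str:
    """Add severity points, then return the action for the new score."""
    user.score += severity
    for threshold, action in ACTIONS:
        if user.score >= threshold:
            return action
    return "none"
```

Calling `record_violation` repeatedly for the same user walks up the ladder from a warning toward a ban, which is one simple way to model progressive discipline for repeat offenders.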

10 Content Moderation Tools

Organizations that leverage the following 10 content moderation tools can protect their user community, save time and money, and vastly reduce the manual workload of human moderators:

Text, Image, and Video Moderation – The solution should filter and moderate text, images, and video, as well as verify that approval policies are being enforced by the moderation team.

User Flagging – Users should be able to report images they deem inappropriate, triggering content removal or moderator review.

Username Filtering – Advanced language analysis and more aggressive rules should ensure that only appropriate names are allowed.

Blacklist Filtering – Natural language processing and proprietary algorithms can be used to determine if text contains blacklisted words or phrases.

Kids’ Chat Filtering – Filters can be used to allow only acceptable words and phrases and reject any word that is not on the whitelist.

PII Filtering – Email and phone number filters, PII filters, and personal health information filters (PHI filters) can be implemented to protect users.

Chat and Commenting Filtering – Filtering can keep communication productive in community chats, customer communications, HIPAA communications, gaming and mobile app communications, forums and reviews, kid-focused communities, in-venue digital displays, and organic user-generated content.

URL Filtering – An advanced filtering capability can limit users’ ability to include URLs in their communication to avoid spamming.

Profile Picture Filtering – The platform should allow moderation of inappropriate and offensive images used in profile pictures and other visible user account information.

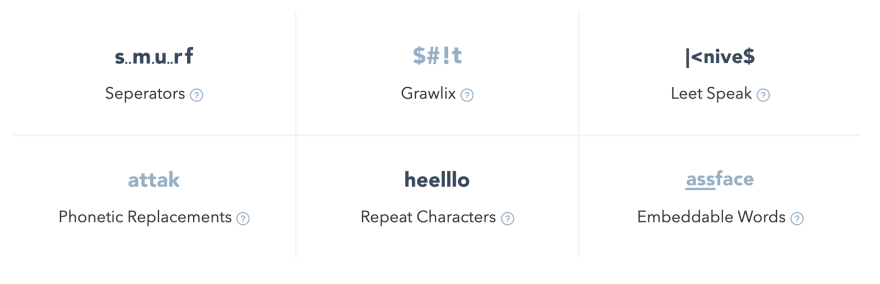

Filter Bypass Prevention – Implementation of the latest filtering techniques should keep false positives to a minimum and prevent users from bypassing filters.
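
Several of the text-based tools above (blacklist filtering, kids' chat whitelisting, PII filtering, URL filtering, and basic bypass prevention) can be sketched in a few lines. This is a deliberately simple illustration using set lookups and regular expressions, not the natural language processing or proprietary algorithms the list refers to. The word lists, patterns, and function names are all assumptions made for the example.

```python
import re

# Illustrative lists only; a real deployment would load managed lists.
BLACKLIST = {"darn", "heck"}
WHITELIST = {"hi", "hello", "good", "game", "thanks"}

# Undo common character substitutions used to dodge filters.
LEET = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)

# Simplified patterns; they will miss some formats and over-match others.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
URL_RE = re.compile(r"(?:https?://|www\.)\S+", re.IGNORECASE)


def tokens(text):
    """Lowercase, undo substitutions, collapse 3+ repeats, split into words."""
    text = text.lower().translate(LEET)
    text = re.sub(r"(.)\1{2,}", r"\1", text)  # "heeeck" becomes "heck"
    return re.findall(r"[a-z]+", text)


def contains_blacklisted(text):
    return any(word in BLACKLIST for word in tokens(text))


def passes_whitelist(text):
    words = tokens(text)
    return bool(words) and all(word in WHITELIST for word in words)


def moderate(text, kids_mode=False):
    """Return the list of reasons the text was flagged (empty if clean)."""
    reasons = []
    if contains_blacklisted(text):
        reasons.append("blacklist")
    # PII and URL checks run on the raw text, before any normalization.
    if EMAIL_RE.search(text) or PHONE_RE.search(text):
        reasons.append("pii")
    if URL_RE.search(text):
        reasons.append("url")
    if kids_mode and not passes_whitelist(text):
        reasons.append("not_whitelisted")
    return reasons
```

For example, `moderate("h3ck, email jo@example.com or see www.example.com")` flags the message for the blacklist, PII, and URL rules, while `moderate("hello good game", kids_mode=True)` returns an empty list. Exact word matching like this is easy to evade (for instance with inserted punctuation) and can misjudge innocent text, which is why the list above calls for more advanced techniques to keep false positives low.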

A quick plug

Today, businesses large and small are investing in automated solutions to prevent offensive and inappropriate language, imagery, and videos in all communications, particularly customer-facing content.

Our team at CleanSpeak has been working on our profanity filtering technology for more than a decade to keep customer communications clean and productive and maintain a safe environment for users around the globe. To see if CleanSpeak is right for your business, try it for free today!

Blair | Sciencx (2022-03-30T16:43:00+00:00) Content Moderation and Profanity Filtering Best Practices. Retrieved from https://www.scien.cx/2022/03/30/content-moderation-and-profanity-filtering-best-practices/
