This content originally appeared on DEV Community and was authored by Emirhan Akdeniz

Intro

This is part of a series of blog posts on Artificial Intelligence Implementation. If you are interested in the background of the story or how it continues:

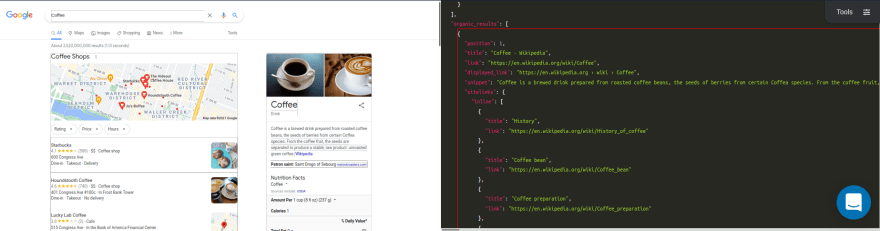

This week we'll showcase training procedures for implementing Machine Learning models for general testing purposes. We will use SerpApi's Google Organic Results Scraper API for data collection. You can also explore the data we will use in more detail in the playground.

Breakdown of Custom Weighted-KNN in Ruby

Let's initialize a training class

class Train

def initialize csv_path

@@csv_path = csv_path

@@vector_arr = []

@@word_arr = []

@@maximum_word_size = 100

@@weights = Vector[]

@@losses = []

end

| Variable | Explanation |
|---|---|
| @@csv_path | The path to key-specific CSV |
| @@vector_arr | An array of vectors representing tokenized words |
| @@word_arr | An array containing words with their corresponding types (0 or 1) |
| @@maximum_word_size | Maximum allowed word size |
| @@weights | Vector containing weights |
| @@losses | Array containing losses throughout training process |

Let's read the key-specific CSV file.

def self.read

@@word_arr = CSV.read(@@csv_path)

@@word_arr

end

To give an example, if we type the following command:

csv_path = "organic_results/organic_results__snippet.csv"

Train.new csv_path

key_array = Train.read

The output would be:

[["0", "McDonald's: Burgers, Fries & More. Quality Ingredients."],

["0", "https://www.mcdonalds.com/us/en-us.html"],

["0", "https://www.mcdonalds.com › en-us"],

["1", "McDonalds.com is your hub for everything McDonald's. Find out more about our menu items and promotions today!"],

["0", "Breakfast"],

...

This array is a replica of what is in the CSV. Each row holds an element and a binary label (0 or 1) that indicates whether the element corresponds to the key in question.
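To make the row layout concrete, here is a minimal sketch that parses two rows in the same label,text shape and splits them into labels and texts. The inline sample data and the variable names are assumptions made for illustration; they are not taken from the actual CSV file.

```ruby
require 'csv'

# Hypothetical two-row sample in the same "label,text" layout as the CSV.
sample = <<~DATA
  0,Breakfast
  1,"Find out more about our menu items and promotions today!"
DATA

# CSV.parse returns an array of rows; every cell is a String.
rows = CSV.parse(sample)

# Split the rows into integer labels and raw texts.
labels = rows.map { |label, _text| label.to_i }
texts  = rows.map { |_label, text| text }
```

Note that the labels come back as strings, which is why the training code converts them with to_i before comparing them.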

Let's define the vectorized versions of these words within the Train class.

def self.define_training_set vectors

@@vector_arr = vectors

end

To build such an array of vectors, we'll use our vocabulary, which looks like this:

{

"<unk>": 0,

"<pad>": 1,

"1": 2,

"mcdonald": 3,

"'": 4,

"s": 5,

"burgers,": 6,

"fries": 7,

...

}

Each of these tokens should be expressed as a vector using the following commands:

vector_array = key_array.map { |word| Database.word_to_tensor word[1] }

Train.define_training_set vector_array

Database.word_to_tensor is a function we defined in the previous week's code. It tokenizes words or sentences in accordance with the vocabulary. We also added the following function to the Database class to load an already created vocabulary:

def self.read_vocab vocab_path

vocab = File.read vocab_path

@@vocab = JSON.parse(vocab)

end

The end result is an array of vectorizable arrays:

[[2],

[3, 4, 5, 6, 7, 8, 9, 10, 11, 12],

[22, 23, 24, 10, 25],

[22, 23, 24, 10, 30, 31, 32],

[36, 10, 37, 38, 39, 40, 41, 42, 3, 4, 43, 44, 45, 9, 46, 47, 48, 49, 50, 51, 52, 53],

[74],

[22, 23, 24, 10, 75],

[77, 78],

[22, 23, 24, 10, 80],

[82, 83],

[22, 23, 24, 10, 85],

...

]
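As a rough illustration of the lookup step, the sketch below maps a list of tokens to their ids with a miniature vocabulary. The helper name tokens_to_ids and the tiny vocabulary are hypothetical. The fallback to the id of "<unk>" for unseen tokens is also an assumption of this sketch; Database.word_to_tensor as written returns nil for tokens that are missing from the vocabulary.

```ruby
# Hypothetical miniature vocabulary; the real one is loaded from vocab.json.
vocab = { "<unk>" => 0, "<pad>" => 1, "mcdonald" => 3, "'" => 4, "s" => 5 }

# Map each token to its id, falling back to <unk> for unseen tokens
# (the fallback is an assumption of this sketch).
def tokens_to_ids(tokens, vocab)
  tokens.map { |token| vocab.fetch(token, vocab["<unk>"]) }
end

ids = tokens_to_ids(["mcdonald", "'", "s", "fries"], vocab)
```

Here "fries" is not in the miniature vocabulary, so it is mapped to 0.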

Let's define a function to automatically take the maximum word size to determine the padding pattern. This is not recommended for the final model you want to train, since the maximum in this set could be smaller than that of the actual examples you'll feed it.

def self.auto_define_maximum_size

@@maximum_word_size = @@vector_arr.map {|el| el.size}.max

end

So to give an example again:

Train.auto_define_maximum_size

Output:

37

This is the size of the longest entry, counted in tokens, so it'll be taken as the maximum word size. The rest of the items will be padded to 37 tokens with the <pad> token, which is tokenized as 1 in the vocabulary.

Let's define the function to extend a single vector to maximum word count.

def self.extend_vector vector

vector_arr = vector.to_a

(@@maximum_word_size - vector.size).times { vector_arr << 1 }

Vector.[](*vector_arr)

end

It takes the vector, converts it to an array, appends enough 1s to reach the maximum word size, and then turns it back into a vector.
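The same padding idea can be sketched as a standalone function that does not depend on class variables. The name pad_vector and its parameters are hypothetical; the pad id of 1 follows the vocabulary shown earlier.

```ruby
require 'matrix'

# Pad a list of token ids to `size` entries with `pad_id`.
# Inputs that are already long enough are returned unchanged.
def pad_vector(ids, size, pad_id = 1)
  missing = [size - ids.size, 0].max
  Vector[*(ids.to_a + Array.new(missing, pad_id))]
end

padded = pad_vector([22, 23, 24], 5)
```

Like the original, this sketch never truncates: an input longer than the target size keeps its length, which is one reason the maximum size should be chosen with the real inputs in mind.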

To go through the entire dataset we have:

def self.extend_vectors

@@vector_arr.each_with_index do |vector, index|

@@vector_arr[index] = extend_vector vector

end

end

So if we call Train.extend_vectors, the result will be:

[Vector[2, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],

Vector[3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],

Vector[22, 23, 24, 10, 25, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],

Vector[22, 23, 24, 10, 30, 31, 32, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],

...

We are greeted with multiple vectors, each of size 37. This is important for keeping the element-wise multiplication with the weights possible in the following parts.

Now we need to initialize weights. They will help us tweak the vectors into the vicinity of their corresponding classification.

def self.initialize_weights

weights = []

@@maximum_word_size.times { weights << 1.0 }

@@weights = Vector.[](*weights)

end

If we enter the following command, we can see that the output will be a vector of size 37, consisting of 1:

Train.initialize_weights

The output:

Vector[1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
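For reference, the same vector of ones can be built in a single expression. This is only a compact alternative sketch; the variable names are assumptions, and 37 is the maximum word size from the example above.

```ruby
require 'matrix'

size = 37 # maximum word size from the example above

# Array.new(size, 1.0) creates `size` copies of 1.0;
# Vector.elements wraps the array in a Vector.
weights = Vector.elements(Array.new(size, 1.0))
```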

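Let's also add a function that holds the parts we may want to change. Note that it only returns its arguments, so calling it without arguments, as the training function does later, yields the defaults: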

def self.config k = 1, lr = 0.001

[k, lr]

end

| Variable | Explanation |
|---|---|
| k | This is the number of neighbours we want to get the prediction of |
| lr | This is the number that defines the change in weights in each epoch |

Let's define our function to tilt the vector in multiple dimensions to get the result we want.

def self.product vector

@@weights.each_with_index do |weight, index|

vector[index] = weight * vector[index]

end

vector

end

Each element of the weight vector is multiplied by the corresponding element of the vector we use for training or prediction to get a weighted vector. Since our initial weights consist only of 1, this function will return the vector we feed into it unchanged in the first iteration.

If our initial vector consisted only of 2:

vector = Vector[2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0]

Train.product vector

These commands would give us:

Vector[2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0]
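With weights other than 1 the effect is easier to see. The sketch below is a hypothetical, non-mutating variant of the same element-wise product: it returns a new vector instead of writing into the one passed in. The name weighted and the sample values are assumptions made for illustration.

```ruby
require 'matrix'

# Element-wise product that returns a new Vector and leaves the input untouched.
def weighted(vector, weights)
  Vector[*vector.to_a.zip(weights.to_a).map { |x, w| x * w }]
end

vector  = Vector[2.0, 4.0, 6.0]
weights = Vector[1.0, 0.5, 0.0]
result  = weighted(vector, weights)
```

In this example the first dimension is kept, the second is halved, and the third is ignored entirely, so result is Vector[2.0, 2.0, 0.0].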

Euclidean distance between two points in multidimensional space should not scare you. It's easy:

def self.euclidean_distance vector_1, vector_2

subtractions = (vector_1 - vector_2).to_a

subtractions.map! { |sub| sub * sub }

Math.sqrt(subtractions.sum)

end

You take the difference of each pair of values in the two vectors, square them, sum them all up, and take the square root, just like we mentioned in the previous blog post.

So if we gave Vector[1,2], and Vector[2,3], it would calculate the distance between the points x=1, y=2 and x=2, y=3:

Train.euclidean_distance Vector[1,2], Vector[2,3]

Output:

1.4142135623730951
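As a cross-check, the manual computation can be compared with the norm of the difference vector, which Ruby's matrix library provides as Vector#norm. This is a small sketch with assumed variable names, not part of the Train class.

```ruby
require 'matrix'

a = Vector[1, 2]
b = Vector[2, 3]

# Manual version: sum of squared differences, then the square root.
manual = Math.sqrt((a - b).to_a.sum { |d| d * d })

# Built-in version: the Euclidean norm of the difference vector.
built_in = (a - b).norm
```

Both values agree with the square root of 2, the output shown above.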

So to find the k nearest vectors to the given vector, we can plug in the distances:

def self.k_neighbors distances, k

indexes = []

(k).times do

min = distances.index(distances.min)

indexes << min

distances[min] = distances.max + 1

end

indexes

end

The distances themselves are collected by the following snippet, which is part of the training function shown later (index and vector are its arguments):

distances = []

@@vector_arr.each_with_index do |comparison_vector, vector_index|

if vector_index == index

distances << 100000000

else

distances << euclidean_distance(comparison_vector, vector)

end

end

indexes = k_neighbors distances, k

It assigns a very large distance when the vector is compared with itself, so that a vector is never picked as its own neighbour.

If we plug the first vector, with k=1 for example, the output will be:

[22]

This gives us the index of the vector closest to the first vector.
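The k_neighbors function above overwrites entries of the distances array while it searches. If that side effect is unwanted, a non-mutating variant can be sketched with Enumerable#min_by, which accepts a count. The name k_nearest_indexes is hypothetical, and the behaviour for tied distances is not specified in this sketch.

```ruby
# Return the indexes of the k smallest distances without modifying the input.
def k_nearest_indexes(distances, k)
  distances.each_with_index
           .min_by(k) { |distance, _index| distance }
           .map { |_distance, index| index }
end

distances = [5.0, 1.0, 3.0, 0.5]
nearest = k_nearest_indexes(distances, 2)
```

For the sample above, the two nearest entries are the ones at indexes 3 and 1.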

Let's create a function for checking the classification of the indexes and giving us the mean of classifications.

def self.make_prediction indexes

predictions = []

indexes.each do |index|

predictions << @@word_arr[index][0].to_i

end

predictions.sum.to_f / predictions.size

end

The classifications are either 1 or 0, since we are checking whether the element is a snippet in this case. So their mean will be between 0 and 1, both ends included. The sum is converted to a float before dividing, because integer division would truncate the mean.

If we plug in [22]:

0.0

This shows us that the vector isn't a snippet, just like the vector at index 22.
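With more than one neighbour, the mean becomes a vote share. Integer division in Ruby truncates (2 / 3 is 0), so the sketch below converts the sum to a float before dividing and then applies a 0.5 threshold. The function names and the threshold parameter are assumptions for illustration.

```ruby
# Share of neighbours labelled 1, as a float between 0.0 and 1.0.
def mean_vote(labels)
  labels.sum.to_f / labels.size
end

# Turn the vote share into a binary class with a strict threshold.
def predict(labels, threshold = 0.5)
  mean_vote(labels) > threshold ? 1 : 0
end

majority_one  = predict([1, 1, 0])
majority_zero = predict([1, 0, 0])
```

Because the comparison is strict, an even split such as [1, 0] is classified as 0 in this sketch.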

Let's implement something to change the weights according to the validity of this prediction:

def self.update_weights result, indexes, vector, lr

indexes.each do |index|

subtractions = @@vector_arr[index] - vector

subtractions.each_with_index do |sub, sub_index|

if result == 0 && sub >= 0

@@weights[sub_index] = @@weights[sub_index] + lr

elsif result == 0 && sub < 0

@@weights[sub_index] = @@weights[sub_index] - lr

elsif result == 1 && sub >= 0

@@weights[sub_index] = @@weights[sub_index] - lr

elsif result == 1 && sub < 0

@@weights[sub_index] = @@weights[sub_index] + lr

end

end

end

end

Here, result is 1 if the predicted classification matches the real classification, and 0 if it doesn't. We use lr to move the vectors closer together or further apart by adding lr to, or subtracting it from, the weights. Since the weighted vectors are used in training, the updated weights make it more likely that a correct prediction stays correct and that a wrong prediction is not repeated.

The result will be a change in weights:

Vector[0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999, 0.999]

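Notice that not all of the weights necessarily change in the same direction: each one is adjusted according to the sign of the difference in its own dimension, to increase or decrease the distance between the two vectors. Here they all happened to decrease, which is a coincidence.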
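To see weights moving in different directions, the update rule can be sketched as a pure function over plain arrays. The name updated_weights and the sample values are hypothetical; the sign logic mirrors the four branches of update_weights for a single neighbour.

```ruby
# One update step for a single neighbour.
# correct: true when the prediction matched the real classification.
def updated_weights(weights, neighbor, vector, correct, lr)
  weights.each_with_index.map do |weight, i|
    diff = neighbor[i] - vector[i]
    step = diff >= 0 ? lr : -lr
    # A correct prediction subtracts the signed step, a wrong one adds it.
    correct ? weight - step : weight + step
  end
end

new_weights = updated_weights([1.0, 1.0, 1.0], [3, 1, 2], [2, 2, 2], true, 0.001)
```

In this example the differences are 1, -1 and 0, so the first and third weights decrease by lr while the second one increases.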

Mean Absolute Error is a pretty common technique for calculating loss:

def self.mean_absolute_error real, indexes

errors = []

indexes.each do |index|

errors << (@@word_arr[index][0].to_i - real).abs

end

errors.sum.to_f / errors.size

end

Calculating the loss tells us how healthy the training process is. We might need to tweak the configuration to make it better. Notice that it won't indicate whether this is the process we should use, only the health of the process. You can find detailed information in previous blog posts.
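As a small worked example, the sketch below computes the mean absolute error between a real label and the labels of its neighbours. It takes the neighbour labels directly instead of indexes, which is a simplification of this sketch, and it divides as a float so that fractional losses are not truncated.

```ruby
# Mean absolute difference between the real label and each neighbour label.
def mean_absolute_error(real, neighbor_labels)
  neighbor_labels.sum { |label| (label - real).abs }.to_f / neighbor_labels.size
end

loss = mean_absolute_error(1, [1, 0, 0, 1])
```

Two of the four neighbours disagree with the real label, so the loss is 0.5.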

Now let's cover the entire function for training one vector:

def self.train vector, index

k, lr = config

vector = extend_vector vector

vector = product vector

distances = []

@@vector_arr.each_with_index do |comparison_vector, vector_index|

if vector_index == index

distances << 100000000

else

distances << euclidean_distance(comparison_vector, vector)

end

end

indexes = k_neighbors distances, k

real = @@word_arr[index][0].to_i

prob_prediction = make_prediction indexes

prediction = prob_prediction > 0.5 ? 1 : 0

result = real == prediction ? 1 : 0

update_weights result, indexes, vector, lr

loss = mean_absolute_error real, indexes

@@losses << loss

puts "Result : #{real}, Prediction: #{prediction}"

puts "Loss: #{loss}"

prediction

end

- We start with a vector and its index.

- We then read the k and lr values from config.

- After that, we pad the vector and turn it into a weighted vector.

- Then we calculate the distance of the weighted vector with respect to every other vector in the training set.

- Find the indexes of the k closest neighbors.

- Get the real classification of the weighted vector.

- Get the prediction for it using the closest neighbors.

- Compare them to create a result.

- Update the weights according to the result.

- Calculate the loss and append it to all losses. (For graphing it later)

- Output the results and return the prediction.

If we repeat these steps iteratively, we will train the weights in a way that holds for the general case. Vectors classified as 1 will get closer to other vectors classified as 1, giving us a good prediction.
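The prediction part of these steps can be sketched end to end on toy data. Everything here is hypothetical: the function name loo_predict, the four sample vectors, and the weights. The sketch weighs the target vector, skips the target itself when searching for neighbours, and votes with a 0.5 threshold. It leaves out the weight update and the loss bookkeeping.

```ruby
require 'matrix'

# Leave-one-out prediction for samples[index].
# samples: array of [label, Vector] pairs; weights: array of floats.
def loo_predict(samples, index, weights, k)
  # Weigh the target vector element by element.
  target = Vector[*samples[index][1].to_a.zip(weights).map { |x, w| x * w }]
  # Every index except the target itself is a candidate neighbour.
  others = samples.each_index.reject { |i| i == index }
  nearest = others.min_by(k) { |i| (samples[i][1] - target).norm }
  votes = nearest.map { |i| samples[i][0] }
  votes.sum.to_f / votes.size > 0.5 ? 1 : 0
end

# Hypothetical toy data: two samples per class, already padded.
samples = [
  [0, Vector[2.0, 1.0, 1.0]],
  [0, Vector[3.0, 1.0, 1.0]],
  [1, Vector[9.0, 8.0, 7.0]],
  [1, Vector[9.0, 8.0, 6.0]]
]
weights = [1.0, 1.0, 1.0]

predictions = samples.each_index.map { |i| loo_predict(samples, i, weights, 1) }
```

On this toy set each sample's nearest neighbour shares its label, so every prediction matches the real classification.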

Full Code

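require 'json'
require 'csv'
require 'matrix'
# present?, blank? and Array#second come from ActiveSupport
require 'active_support/all'

class Database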

def initialize json_data, vocab = { "<unk>" => 0, "<pad>" => 1 }

super()

@@pattern_data = []

@@vocab = vocab

end

## Related to creating main database

def self.add_new_data_to_database json_data, csv_path = nil

json_data.each do |result|

recursive_hash_pattern result, ""

end

@@pattern_data = @@pattern_data.reject { |pattern| pattern.include? nil }.uniq.compact

path = "#{csv_path}master_database.csv"

File.write(path, @@pattern_data.map(&:to_csv).join)

end

def self.element_pattern result, pattern

@@pattern_data.append([result, pattern].flatten)

end

def self.element_array_pattern result, pattern

result.each do |element|

element_pattern element, pattern

end

end

def self.assign hash, key, pattern

if hash[key].is_a?(Hash)

if pattern.present?

pattern = "#{pattern}__#{key}"

else

pattern = "#{key}"

end

recursive_hash_pattern hash[key], pattern

elsif hash[key].present? && hash[key].is_a?(Array) && hash[key].first.is_a?(Hash)

if pattern.present?

pattern = "#{pattern}__#{key}__n"

else

pattern = "#{key}"

end

hash[key].each do |hash_inside_array|

recursive_hash_pattern hash_inside_array, pattern

end

elsif hash[key].present? && hash[key].is_a?(Array)

if pattern.present?

pattern = "#{pattern}__n"

else

pattern = "#{key}"

end

element_array_pattern hash[key], pattern

else

if pattern.present?

pattern = "#{pattern}__#{key}"

else

pattern = "#{key}"

end

element_pattern hash[key], pattern

end

end

def self.recursive_hash_pattern hash, pattern

hash.keys.each do |key|

assign hash, key, pattern

end

end

## Related to tokenizing

def self.default_dictionary_hash

{

/\"/ => "",

/\'/ => " \' ",

/\./ => " . ",

/,/ => ", ",

/\!/ => " ! ",

/\?/ => " ? ",

/\;/ => " ",

/\:/ => " ",

/\(/ => " ( ",

/\)/ => " ) ",

/\// => " / ",

/\s+/ => " ",

/<br \/>/ => " , ",

/http/ => "http",

/https/ => " https ",

}

end

def self.tokenizer word, dictionary_hash = default_dictionary_hash

word = word.downcase

dictionary_hash.keys.each do |key|

word.sub!(key, dictionary_hash[key])

end

word.split

end

def self.iterate_ngrams token_list, ngrams = 1

token_list.each do |token|

1.upto(ngrams) do |n|

permutations = (token_list.size - n + 1).times.map { |i| token_list[i...(i + n)] }

permutations.each do |perm|

key = perm.join(" ")

unless @@vocab.keys.include? key

@@vocab[key] = @@vocab.size

end

end

end

end

end

def self.word_to_tensor word

token_list = tokenizer word

token_list.map {|token| @@vocab[token]}

end

## Related to creating key-specific databases

def self.create_key_specific_databases result_type = "organic_results", csv_path = nil, dictionary = nil, ngrams = nil, vocab_path = nil

keys, examples = create_keys_and_examples

keys.each do |key|

specific_pattern_data = []

@@pattern_data.each_with_index do |pattern, index|

word = pattern.first.to_s

next if word.blank?

if dictionary.present?

token_list = tokenizer word, dictionary

else

token_list = tokenizer word

end

if ngrams.present?

iterate_ngrams token_list, ngrams

else

iterate_ngrams token_list

end

if key == pattern.second

specific_pattern_data << [ 1, word ]

elsif (examples[key].to_s.to_i == examples[key]) && word.to_i == word

next

elsif (examples[key].to_s.to_i == examples[key]) && word.numeric?

specific_pattern_data << [ 0, word ]

elsif examples[key].numeric? && word.numeric?

next

elsif key.split("__").last == pattern.second.to_s.split("__").last

specific_pattern_data << [ 1, word ]

else

specific_pattern_data << [ 0, word ]

end

end

path = "#{csv_path}#{result_type}__#{key}.csv"

File.write(path, specific_pattern_data.map(&:to_csv).join)

end

if vocab_path.present?

save_vocab vocab_path

else

save_vocab

end

end

def self.create_keys_and_examples

keys = @@pattern_data.map { |pattern| pattern.second }.uniq

examples = {}

keys.each do |key|

examples[key] = @@pattern_data.find { |pattern| pattern.first.to_s if pattern.second == key }

end

[keys, examples]

end

def self.numeric?

return true if self =~ /\A\d+\Z/

true if Float(self) rescue false

end

def self.save_vocab vocab_path = ""

path = "#{vocab_path}vocab.json"

vocab = JSON.parse(@@vocab.to_json)

File.write(path, JSON.pretty_generate(vocab))

end

def self.read_vocab vocab_path

vocab = File.read vocab_path

@@vocab = JSON.parse(vocab)

end

def self.return_vocab

@@vocab

end

end

class Train

def initialize csv_path

@@csv_path = csv_path

@@vector_arr = []

@@word_arr = []

@@maximum_word_size = 100

@@weights = Vector[]

@@losses = []

end

def self.read

@@word_arr = CSV.read(@@csv_path)

@@word_arr

end

def self.define_training_set vectors

@@vector_arr = vectors

end

def self.auto_define_maximum_size

@@maximum_word_size = @@vector_arr.map {|el| el.size}.max

end

def self.extend_vector vector

vector_arr = vector.to_a

(@@maximum_word_size - vector.size).times { vector_arr << 1 }

Vector.[](*vector_arr)

end

def self.extend_vectors

@@vector_arr.each_with_index do |vector, index|

@@vector_arr[index] = extend_vector vector

end

end

def self.initialize_weights

weights = []

@@maximum_word_size.times { weights << 1.0 }

@@weights = Vector.[](*weights)

end

def self.config k = 1, lr = 0.001

[k, lr]

end

def self.product vector

@@weights.each_with_index do |weight, index|

vector[index] = weight * vector[index]

end

vector

end

def self.euclidean_distance vector_1, vector_2

subtractions = (vector_1 - vector_2).to_a

subtractions.map! { |sub| sub * sub }

Math.sqrt(subtractions.sum)

end

def self.k_neighbors distances, k

indexes = []

(k).times do

min = distances.index(distances.min)

indexes << min

distances[min] = distances.max + 1

end

indexes

end

def self.make_prediction indexes

predictions = []

indexes.each do |index|

predictions << @@word_arr[index][0].to_i

end

predictions.sum.to_f / predictions.size

end

def self.update_weights result, indexes, vector, lr

indexes.each do |index|

subtractions = @@vector_arr[index] - vector

subtractions.each_with_index do |sub, sub_index|

if result == 0 && sub >= 0

@@weights[sub_index] = @@weights[sub_index] + lr

elsif result == 0 && sub < 0

@@weights[sub_index] = @@weights[sub_index] - lr

elsif result == 1 && sub >= 0

@@weights[sub_index] = @@weights[sub_index] - lr

elsif result == 1 && sub < 0

@@weights[sub_index] = @@weights[sub_index] + lr

end

end

end

end

def self.mean_absolute_error real, indexes

errors = []

indexes.each do |index|

errors << (@@word_arr[index][0].to_i - real).abs

end

errors.sum.to_f / errors.size

end

def self.train vector, index

k, lr = config

vector = extend_vector vector

vector = product vector

distances = []

@@vector_arr.each_with_index do |comparison_vector, vector_index|

if vector_index == index

distances << 100000000

else

distances << euclidean_distance(comparison_vector, vector)

end

end

indexes = k_neighbors distances, k

real = @@word_arr[index][0].to_i

prob_prediction = make_prediction indexes

prediction = prob_prediction > 0.5 ? 1 : 0

result = real == prediction ? 1 : 0

update_weights result, indexes, vector, lr

loss = mean_absolute_error real, indexes

@@losses << loss

puts "Result : #{real}, Prediction: #{prediction}"

puts "Loss: #{loss}"

prediction

end

end

json_path = "organic_results/example.json"

json_data = File.read(json_path)

json_data = JSON.parse(json_data)

Database.new json_data

## For training from scratch

#Database.add_new_data_to_database json_data, csv_path = "organic_results/"

#Database.create_key_specific_databases result_type = "organic_results", csv_path = "organic_results/", ngrams = 2

##

Database.read_vocab "vocab.json"

## We will use an iteration of csvs within a specific path in the end

csv_path = "organic_results/organic_results__snippet.csv"

Train.new csv_path

key_array = Train.read

vector_array = key_array.map { |word| Database.word_to_tensor word[1] }

Train.define_training_set vector_array

Train.auto_define_maximum_size

Train.extend_vectors

Train.initialize_weights

Train.config k = 3

vector_array.each_with_index do |vector, index|

Train.train vector, index

end

Conclusion

From now on, this blog post series will be bi-weekly. In two weeks, we will train the initial models and showcase how to store them for implementation.

The ultimate aim of this project is to create an open-source gem that anyone using a JSON data structure in their code can implement.

I'd like to thank the reader for their attention, and the brilliant people of SerpApi for creating wonders even in times of hardship, and for all their support.

Join us on Dev.to | Hashnode | Twitter | YouTube


Emirhan Akdeniz | Sciencx (2022-04-06T21:22:17+00:00) Investigating Machine Learning Techniques to Improve Spec Tests III. Retrieved from https://www.scien.cx/2022/04/06/investigating-machine-learning-techniques-to-improve-spec-tests-iii/
