This content originally appeared on DEV Community and was authored by Taylor Hunt

In part 2, I glossed over a lot when I wrote…

I decided this called for an MPA. (aka a traditional web app. Site. Thang. Not-SPA. Whatever.)

Okay, but why did I decide that? To demo the fastest possible Kroger.com, I should consider all options — why not a Single-Page App?

Alright, let’s try a SPA

While React was ≈2× too big, surely I could fit a SPA of some kind in my 20kB budget. I’ve seen it done: the PROXX game’s initial load fits in 20kB, and it’s smooth on wimpier phones than the Poblano.

So, rough estimate off the top of my head…

| Source | Parse size | gzip size |
|---|---|---|
| preact 10.6.6 | 10.2kB | 4kB |
| preact-router 4.0.1 | 4.5kB | 1.9kB |
| react-redux 7.2.6 | 15.4kB | 5kB |
| redux 4.1.2 | 4.3kB | 1.6kB |
| My components¹ | 5.9kB | 2.1kB |
| Total | 40.3kB | ≈14.6kB |

- The HTML is little else than <script>s, so its size is negligible.

- The remaining 5.4kB is plenty for some hand-tuned CSS.

- This total is pessimistic: Bundlephobia’s estimate notes “Actual sizes might be smaller if only parts of the package are used or if packages share common dependencies.”
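The arithmetic behind the table and the leftover CSS allowance can be checked in a few lines of TypeScript. This is only a sketch: `BUDGET_KB` and `round1` are names made up for illustration, and the sizes are the estimates copied from the table, not fresh measurements.

```typescript
// Sizes in kB, copied from the estimate table (not re-measured).
interface Dependency {
  name: string;
  parseKb: number;
  gzipKb: number;
}

const dependencies: Dependency[] = [
  { name: "preact 10.6.6", parseKb: 10.2, gzipKb: 4 },
  { name: "preact-router 4.0.1", parseKb: 4.5, gzipKb: 1.9 },
  { name: "react-redux 7.2.6", parseKb: 15.4, gzipKb: 5 },
  { name: "redux 4.1.2", parseKb: 4.3, gzipKb: 1.6 },
  { name: "my components", parseKb: 5.9, gzipKb: 2.1 },
];

// Hypothetical name for the 20kB first-load budget.
const BUDGET_KB = 20;

// Round to one decimal place to hide floating-point noise.
const round1 = (n: number): number => Math.round(n * 10) / 10;

const totalParseKb = round1(dependencies.reduce((sum, d) => sum + d.parseKb, 0));
const totalGzipKb = round1(dependencies.reduce((sum, d) => sum + d.gzipKb, 0));

// What is left of the budget for CSS after the gzipped JS.
const remainingKb = round1(BUDGET_KB - totalGzipKb);

console.log({ totalParseKb, totalGzipKb, remainingKb });
```

The gzipped column is the one that counts against the budget, which is where the 5.4kB left for CSS comes from.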

Seems doable.

…But is that really all the code a SPA needs?

Some code I knew I’d need eventually:

- Translation between UI elements and API request/response formats

- Updates in the <head>

- Instead of fullsize image links, a lightbox script to avoid unloading the SPA

- Checking and reloading outdated SPA versions

- Code splitting to fit in the 20kB first load (my components estimate was only for the homepage), which means dynamic resource-loading code

- Reimplementing beforeunload warnings for unsaved user input

- Analytics need extra code for SPAs. Consider two particularly relevant to our site:

Buuuuuut I don’t have concrete numbers to back these up — I abandoned the SPA approach before grappling with them.

Why?

It’s not always about the performance

I didn’t want to demo a toy site that was fast only because it ignored the responsibilities of the real site. To me, those responsibilities (of grocery ecommerce) are…

- 🛡 Security even over accessibility

  - Downgrading HTTPS ciphers for old browsers isn’t worth letting credit cards be stolen.

  - You must protect data customers trust you with before you can use it.

- ♿ Accessibility even over speed

  - A fast site for only the able creates inequality, but a slower site for everyone creates equality.

  - Note that speed doubles as accessibility.

- 🏎️ Speed even over slickness

  - Delight requires showing up on time.

  - Users think fast sites are more easy-to-use, well-designed, trustworthy, and pleasant.

Accordingly, I refused to compromise on security or accessibility. A speedup was worthless to me if it conflicted with those two.

Security

MPAs and SPAs share most security concerns; they both care about XSS/CSRF/other alphabet soups, and ultimately some code performs the defenses. The interesting differences are where that code lives and what that means for ongoing maintenance.

(Unless the stateless ideal of SPA endpoints leads to something like JWT, in which case now you have worse problems.)

Where security code lives

Take CSRF protection: in MPAs, it manifests in-browser as <input type=hidden> in <form>s and/or samesite=strict cookies; a small overhead added to the normal HTTP lifecycle of a website.

SPAs have that same overhead², and also…

- JS to get and refresh anti-CSRF tokens

- JS to check which requests need those tokens, then attach them

- JS to handle problems in protected responses; to repair or surface them to the user somehow
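To make the first two bullets concrete, here is a minimal TypeScript sketch. Everything named in it is an assumption for illustration: the `X-CSRF-Token` header name, the `needsCsrfToken` and `withCsrfToken` helpers, and the rule that only same-origin, state-changing requests get a token. The token refresh and error-handling paths from the list are left out entirely.

```typescript
// HTTP methods that are read-only and normally skip CSRF checks.
const SAFE_METHODS = new Set(["GET", "HEAD", "OPTIONS"]);

// Hypothetical header name; the server decides what it actually expects.
const CSRF_HEADER = "X-CSRF-Token";

interface OutgoingRequest {
  url: string; // absolute, or relative to the page origin
  method: string;
  headers: Record<string, string>;
}

// Decide whether a request should carry the token: only state-changing
// requests sent to our own origin, so the token never leaks to third parties.
function needsCsrfToken(request: OutgoingRequest, pageOrigin: string): boolean {
  const target = new URL(request.url, pageOrigin);
  const isSameOrigin = target.origin === pageOrigin;
  const isUnsafe = !SAFE_METHODS.has(request.method.toUpperCase());
  return isSameOrigin && isUnsafe;
}

// Return a copy of the request with the token attached when required.
function withCsrfToken(
  request: OutgoingRequest,
  pageOrigin: string,
  token: string
): OutgoingRequest {
  if (!needsCsrfToken(request, pageOrigin)) return request;
  return { ...request, headers: { ...request.headers, [CSRF_HEADER]: token } };
}
```

Even this trimmed version is code every visitor downloads and runs, while the MPA equivalent is a hidden input the server already rendered into the form.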

Repeat for authentication, escaping, session revocation, and all the other titchy bits of a robust, secure app.

Additionally, the deeper and more useful your security, the more the SPA approach penalizes all users. That rare-but-crucial warning to immediately contact support? It (or the code to dynamically load it) can either live in everyone’s SPA bundle, or only burden an MPA’s HTML when that stress-case happens.

What that means for security maintenance

With multiple exposed APIs, you must pentest/fuzz/monitor/etc. each of them. In theory that’s no different than the same for each POSTable URL in an MPA, but…

Sure, 8 out of 9 teams jumped on updating that vulnerable input-parsing library. Unfortunately, Team 9’s senior dev was out this week and the juniors struggled with a dependency conflict and now an Icelandic teenage hacker ring threatens to release the records of anyone who bought laxatives and cake mix together.³

This problem is usually tackled with a unified gateway, which can afflict SPAs with request chains and more script kB to contact it. But MPAs already are that unified gateway.

Accessibility

Unlike security, SPAs have accessibility problems exclusive to them. (Ditto any client-side routing, like Hotwire’s Turbo Drive.)

I’d need code to restore standard page navigation accessibility:

- Remember and restore scroll positions across navigations.

- Focus last-used element on back navigations (accounting for autofocus, tabindex, JS-driven .focus(), other complications…)

- Focus a page-representative element on forward navigation, even if it’s not normally focusable. Which is filled with gotchas.
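For a sense of scale, here is a sketch of only the first bullet. It assumes every history entry has a unique string key (the `entryKey` parameter is hypothetical; an app might keep one in `history.state`), and it leaves out the actual scrolling call and every focus concern.

```typescript
interface ScrollPosition {
  x: number;
  y: number;
}

// Remembers scroll offsets per history entry so they can be restored on
// back/forward navigations. Keys are assumed to be unique per entry.
class ScrollMemory {
  private positions = new Map<string, ScrollPosition>();

  // Call just before navigating away from an entry.
  save(entryKey: string, position: ScrollPosition): void {
    this.positions.set(entryKey, { ...position });
  }

  // Call after rendering an entry; entries never seen before start at the top.
  restore(entryKey: string): ScrollPosition {
    return this.positions.get(entryKey) ?? { x: 0, y: 0 };
  }
}
```

In a browser this would still need wiring to `popstate` events and `history.scrollRestoration = "manual"`, plus a decision about when the new content is tall enough to scroll to the saved offset.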

And do all that while correctly handling the other hard parts of client-side routing:

- Back/forward buttons

- Timeouts, retries, and error handling

- User cancellations and double-clicks

- History API footguns — see its proposed replacement’s reasoning

- Only use SPA navigation if a link is same-origin, not an in-page #fragment, the same target, needs authentication…

- Check for Ctrl/⌘/Alt/Shift/right/middle-click, keyboard shortcuts to open in new tabs/windows, or non-standard shortcuts configured by assistive tech (good luck with that last one)

- Reimplement browsers’ load/error UIs, and yours can’t be as good: they can report proxy misconfiguration, DNS failure, what part of the network stack they’re waiting on, etc.
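The link-related bullets in that list boil down to a guard like the following TypeScript sketch. The `LinkClick` shape and the `shouldInterceptClick` name are invented for illustration; the fields mirror real `MouseEvent` and `HTMLAnchorElement` properties, but are passed as plain data so the logic can run outside a browser. It cannot detect assistive-tech shortcuts or whether a destination needs authentication, which is rather the point.

```typescript
// The subset of a click event and its link that the decision needs.
interface LinkClick {
  button: number; // 0 is the primary button
  ctrlKey: boolean;
  metaKey: boolean;
  altKey: boolean;
  shiftKey: boolean;
  defaultPrevented: boolean;
  href: string; // absolute URL of the link
  target: string; // "" or "_self" means the current browsing context
  hasDownload: boolean; // whether the link has a download attribute
}

// Returns true only when a client-side router may take over the navigation.
function shouldInterceptClick(click: LinkClick, currentUrl: string): boolean {
  if (click.defaultPrevented) return false;
  if (click.button !== 0) return false; // middle or right click
  if (click.ctrlKey || click.metaKey || click.altKey || click.shiftKey) return false;
  if (click.target !== "" && click.target !== "_self") return false;
  if (click.hasDownload) return false;

  const destination = new URL(click.href);
  const current = new URL(currentUrl);
  if (destination.origin !== current.origin) return false;

  // Same path and query plus a fragment: let the browser scroll in-page.
  const samePage =
    destination.pathname === current.pathname &&
    destination.search === current.search;
  if (samePage && destination.hash !== "") return false;

  return true;
}
```

Every `return false` here is a case where the browser’s default behavior was already correct, and each one is a chance for a router to get it wrong.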

In theory, community libraries should help avoid these problems…

The majority of routers for React, and other SPA frameworks, do this out of the box. This has been a solved problem for half a decade at least. A website has to go out of its way to mess this up.

We use those libraries at work, and let me tell you: we still accidentally mess it up all the time. I asked a maintainer of a popular React router for his take:

We can tell you when and where in the UI you should focus something, but we won’t be focusing anything for you. You also have to consider scroll positions not getting messed up, which is very app-specific and depends on the screen size, UI, padding around the header, etc. It’s incredibly difficult to abstract and generalize.

Even worse, some SPA accessibility problems are currently impossible to fix:

For example, screen readers can produce a summary of a new page when it’s loaded, however it’s not possible to trigger that with JavaScript.

Also, remember how speed doubles as accessibility?

For accessibility as well as performance, you should limit costly lookups and operations all the time, but especially when a page is loading.

Emphasis mine — if you should avoid heavy processing during page load, then SPAs have an obvious disadvantage.

Let’s say I do all that, though. Sure, it sounds difficult and error-prone, but theoretically it can be done… by adding client-side JS. And thus we’re back to my original problem.

Sometimes it is about the performance

You probably don’t have my 20kB budget, but 30–50kB budgets are a thing I didn’t invent. And remember: anything added to a page only makes it slower.

Beyond budget constraints, seemingly-small JS downloads have real, measurable costs on cheap devices:

| Code | Startup delay |
|---|---|
| React | ~163ms |
| Preact | ~43ms |
| Bare event listeners | ~7ms |

Details in Jeremy’s follow-up post. Note these figures are from a Nokia 2, which is more powerful than my target device.

I see you with that “premature optimization” comment

The usual advice for not worrying about front-end frameworks goes like this:

- Popular frameworks power some sites that are fast, so which one doesn’t matter — they all can be fast.

- Once you have a performance problem, your framework provides ways to optimize it.

- Don’t avoid libraries, patterns, or APIs until they cause problems — or that’s how you get premature optimization.

The idea of “premature optimization” has always been more nuanced than that, but this logic seems sound.

However… if you add a library to make future updates faster at the expense of a slower first load… isn’t that already a choice of what to optimize for? By that logic, you should only opt for a SPA once you’ve proven the MPA approach is too slow for your use.

Even 10ms of high memory spikes can cause ZRAM kicking in (suuuuper slow) or even app kills. The amount of JS sent to P99 sites is bad news

ZRAM impact is system wide. Keyboard may not show up quickly because the page used too much.

Having less code can make everything faster.

I had performance devtools open and dogfooded my own code as I made my demo. Each time I tested a heavier JS approach, I had tangible evidence that avoiding it was not prematurely optimizing.

It’s not just bundle size

Beyond their inexorable gravity of client-side JavaScript, SPAs have other non-obvious performance downsides.

In-page updates buffer the Web’s streaming, resulting in JSON that renders slower than HTML for lack of incremental rendering. Even if you don’t intentionally stream, browsers incrementally render bytes streaming in from the network.
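A toy comparison of that difference, under loud assumptions: the network is simulated as an array of string chunks, “renderable” HTML is approximated by looking for one complete `<li>` with a regular expression, and both helper names are invented here. Real browsers use an incremental HTML parser, not a regex.

```typescript
// How many chunks must arrive before JSON.parse succeeds on the buffer?
function chunksUntilJsonParses(chunks: string[]): number {
  let buffer = "";
  for (let i = 0; i < chunks.length; i++) {
    buffer += chunks[i];
    try {
      JSON.parse(buffer);
      return i + 1;
    } catch {
      // Incomplete JSON is a syntax error, so keep buffering.
    }
  }
  return -1; // never became valid JSON
}

// How many chunks must arrive before at least one complete <li> exists?
function chunksUntilFirstListItem(chunks: string[]): number {
  let buffer = "";
  for (let i = 0; i < chunks.length; i++) {
    buffer += chunks[i];
    if (/<li>.*?<\/li>/.test(buffer)) return i + 1;
  }
  return -1; // no complete list item arrived
}

// The same three products, split into three simulated network chunks.
const jsonChunks = ['[{"name":"milk"},', '{"name":"eggs"},', '{"name":"flour"}]'];
const htmlChunks = ["<ul><li>milk</li>", "<li>eggs</li>", "<li>flour</li></ul>"];

const jsonUsableAfter = chunksUntilJsonParses(jsonChunks);
const htmlUsableAfter = chunksUntilFirstListItem(htmlChunks);

console.log({ jsonUsableAfter, htmlUsableAfter });
```

With these inputs the JSON only becomes usable after the third chunk, while the first list item is already available after the first one. Streaming JSON parsers exist, but they are yet more client-side code.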

Memory leaks are inevitable, but they rarely matter in MPAs. In SPAs, one team’s leak ruins the rest of the session.

JS and fetch() have lower network priority than “main resources” (<a> and <form>). This even affects how the OS prioritizes your app over other programs.

Lastly, and most importantly: server code can be measured, scaled, and optimized until you know you can stop worrying about performance. But clients are unboundedly bad, with decade-old chips and miserly RAM in new phones. The Web’s size and diversity makes client-side “fast enough” impossible to judge. And if your usage statistics come from JS-driven analytics that must download, parse, and upload to record a user… can you be certain of low-end usage?

Fresh loads happen more than you think

The core SPA tradeoff: the first load is slower, but it sets up extra code to make future interactions snappier.

But there are situations where we can’t control when fresh loads happen, making that one-time payment more like compounding debt:

- Page freezing/eviction

  - Mobile browsers aggressively background, freeze, and discard pages.

  - Switching apps/tabs unloads the first annoyingly often — ask any iOS user.

- Browser/OS updates and crashes

  - Many devices auto-update while charging.

  - I’m sure you can guess whether SPAs or MPAs crash more often.

- Deeplinks and new tabs

  - Users open things in new tabs whether we like it or not.

  - Outside links must do a fresh boot, weakening email/ad campaigns, search engine visits, and shared links.

    - Multi-device usage really doesn’t help.

    - In-app browsers share almost nothing with the default browser cache, which turns what users think are return visits into fresh load→parse→execute.

- Intentional page refreshes

  - Users frequently refresh upon real/perceived issues, especially during support. The world considers Refresh ↻ the fixit button, and they’re not wrong.

  - SPAs often refresh themselves for logins, error-handling, etc.

    - A related problem; you know those “This site has updated. Please refresh” messages? They bother the user, invoke a refresh, and replicate a part of native apps nobody likes.

But when fresh pageloads are fast, you can cheat: who cares about reloading when it’s near-instant?

SPAs are more fragile

While “rebooting” on every navigation can seem wasteful, it might be the best survival mechanism we have for the Web:

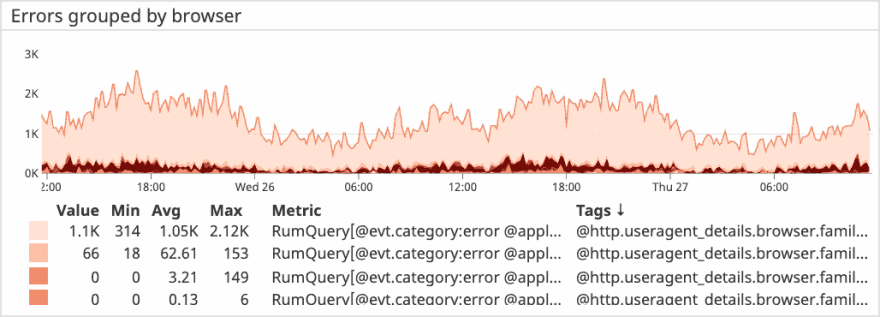

These errors come, in large part, from users running odd niche or out-of-date browsers with broken Javascript or DOM implementations, users with buggy browser extensions injecting themselves into your scope, adblockers blocking one of your <script> tags, broken browser caches or middleboxes, and other weirder and more exotic failure modes.

— Systems that defy detailed understanding § Client-side JavaScript

A typical Datadog error summary.

Given that most bugs are transient³, simply restarting processes back to a state known to be stable when encountering an error can be a surprisingly good strategy.

³ 131 out of 132 bugs are transient bugs (they’re non-deterministic and go away when you look at them, and trying again may solve the problem entirely), according to Jim Gray in Why Do Computers Stop and What Can Be Done About It?

We control our servers’ features and known bugs, and can monitor them more thoroughly than browsers.

Client error monitoring must download, run, and upload to be recorded, which is much flakier and adds its own fun drawbacks.

- Relying on client JS is a hard known-unknown:

  - JavaScript isn’t always available and it’s not the user’s fault.

  - Why availability matters

  - Dan Abramov’s warning against React roots on <body>.

  - User-Agent Interventions are when browsers intentionally mess with your JS.

Overall, SPAs’ reliance on client JS makes them fail unpredictably at the seams: the places we don’t control, the contexts we didn’t plan for. Enough edge-cases added up are the sum total of humanity.

When a SPA is a good choice

Remember PROXX from earlier? It did fit in 20kB, but it also doesn’t worry about a lot of things:

- Its content is procedurally generated, with only a few simple interactions.

- It has few views, a single URL, and doesn’t load further data from a server.

- It doesn’t care about security: it’s a game without logins or multiplayer.

- If it weren’t accessible⁴ or broke from client-side fragility… so what? It’s a game. Nobody’s missing out on useful information or services.

PROXX is perfect as a SPA. You could make a Minesweeper clone with <form> and a server, but it would probably not feel as fun. Games often should maximize fun at the expense of other qualities.

Similarly, the Squoosh SPA makes sense: the overhead of uploading unoptimized images probably outweighs the overhead of expensive client-side processing, plus offline and privacy benefits. But even then, there are many server-side image processors, like ezgif or ImageOptim online, so clearly there’s nuance.

You don’t have to choose extremes! You can quarantine JS-heavy interactivity to individual pages when it makes sense: SPAs can easily embed in an MPA. (The reverse, though… if it’s even possible, it sounds like it’d inherit the weaknesses of both without any of their strengths.)

But if SPAs only bring ☹️, why would they exist?

We’re seeing the pendulum finally swing away from SPAs for everything, and maybe you’ll be in a position someday where you can choose. On the one hand, I’d be delighted to hand you more literature:

On the other hand…

Please beat offline-first ServiceWorker-cached application shells or even static HTML+JS on a local CDN with a cgi page halfway across the globe.

This is true. It’s not enough for me to say “don’t use client-side navigation” — those things are important for any site, whether MPA or SPA:

- Offline-first reliability and speed

- Serving as near end-users as possible

- Not using CGI (it’s 2022! at least use FastCGI)

So, next time: can we get the benefits that SPAs enjoy, without suffering the consequences they extremely don’t enjoy?

1. Estimated by ruining my demo’s split Marko components and checking devtools on the homepage.

2. Some say that instead of CSRF tokens, SPAs may only need to fetch data from subdomains to guarantee an Origin header. Maybe, but the added DNS lookup has its own performance tax, and more often than you’d think.

3. No, this was not an incident we had. For starters, the teenagers were Luxembourgian.

4. PROXX actually did put a lot of effort into accessibility, and that’s cool.

Taylor Hunt | Sciencx (2022-04-19T11:30:39+00:00) Routing: I’m not smart enough for a SPA. Retrieved from https://www.scien.cx/2022/04/19/routing-im-not-smart-enough-for-a-spa/
