This content originally appeared on Level Up Coding - Medium and was authored by Mykhailo Kushnir

This year I’ve published my most successful tutorial, and now I want to make it even easier to use.

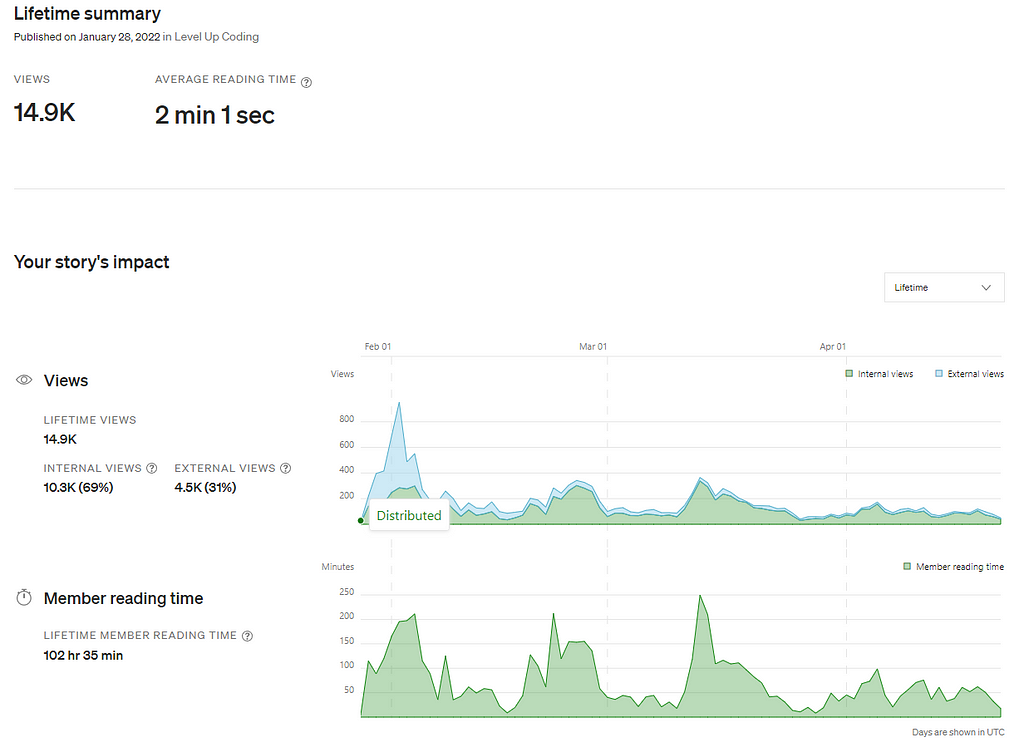

The article “How I scrape lots of sites with one Python script” was a massive success for my humble writing career.

That’s hardly surprising: I gave away a helpful script that anyone can reproduce and customise for their own needs. But can it be even more user-friendly?

In this article, I’ll share with you:

- A GitHub repository you can clone it from;

- A link to a Docker container that lets you scrape with one command;

- Some use cases and how to handle them.

Repo & Updates

Version control is necessary to keep the refactoring process under control. It is also a more convenient way to share the codebase than Gist scripts.

By default, the repository parses quotes.toscrape.com, a well-known scraping sandbox. To better understand the script and how to modify it for your own purposes, please read part 1.

You can start using it locally right away by running a shell script:

```shell
./scripts/quotes.sh ./outputs/quotes local
```

or

```shell
./scripts/quotes.sh ./outputs/quotes remote
```

I encourage you to read the commented listing of this script to better understand what it does:

webtric/quotes.sh at main · destilabs/webtric

Docker

While many readers found this solution helpful right away, I can imagine that installing it wasn’t painless. Chromedriver is a nasty tool: it requires regular updates and at least a “beginner+” understanding of operating-system configuration nuances. Docker, on the other hand, only requires knowing the right commands to run the container.

There’s probably no better way to describe this container than just showing its docker-compose file:

webtric/docker-compose.yml at main · destilabs/webtric

Let us also go through it step by step:

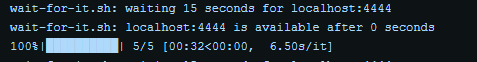

Chromedriver and Selenium Hub are separate services, each running on its own port. It’s essential that the script waits until both are up.
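One way to implement the waiting step is to poll a TCP port until it accepts connections. The following is a minimal, stdlib-only sketch. The function name `wait_for_port` and its parameters are hypothetical and are not taken from the Webtric repository.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll host:port until it accepts a TCP connection or `timeout` seconds pass.

    Returns True as soon as the port is reachable, False if the deadline expires.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means some process is listening on that port
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused connection or unresolvable hostname: the service is still starting
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Consider a call such as `wait_for_port("selenium-hub", 4444, timeout=120)`. It would block until a service reachable under that hostname listens on port 4444, which is Selenium Grid's default port. The hostname is an assumption; the real service names and ports are whatever docker-compose.yml defines. An open port only shows that the process is listening, not that it can already create browser sessions.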

The Webtric service (the hero of this post) is built on the fly and then waits for the two services above. You’ll see a few errors in the log, but it should catch up and start parsing.
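Errors that appear in the log and then stop once the services are up are typical of a retry loop. Each failed connection attempt is logged, and the next attempt comes a little later. Here is a minimal sketch of such a loop, assuming a zero-argument `connect` callable. The `retry` helper and its parameters are hypothetical, not Webtric's actual code.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("webtric")


def retry(connect: Callable[[], T], attempts: int = 10, delay: float = 2.0, backoff: float = 1.5) -> T:
    """Call `connect` until it succeeds, logging each failure and waiting longer each time."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts):
        try:
            return connect()
        except Exception as exc:  # e.g. the hub is not accepting sessions yet
            log.warning("Attempt %d/%d failed: %s; retrying in %.1fs", attempt, attempts, exc, delay)
            time.sleep(delay)
            delay *= backoff  # exponential backoff between attempts
    # Final attempt: any exception now propagates to the caller
    return connect()
```

With Selenium 4 installed, `connect` could be something like `lambda: webdriver.Remote(command_executor="http://selenium-hub:4444/wd/hub", options=webdriver.ChromeOptions())`. The hub URL is an assumption and should match your compose file.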

To run the docker-compose file, execute two commands:

```shell
export APP=./scripts/quotes.sh
docker-compose up
```

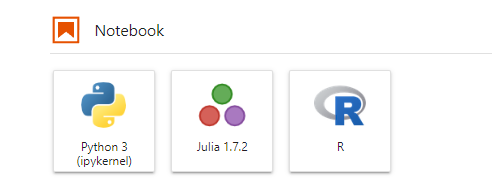

A Jupyter service is also started so that you have immediate access to the parsed data. Open http://localhost:8888/lab?token=webtric and create a new notebook:

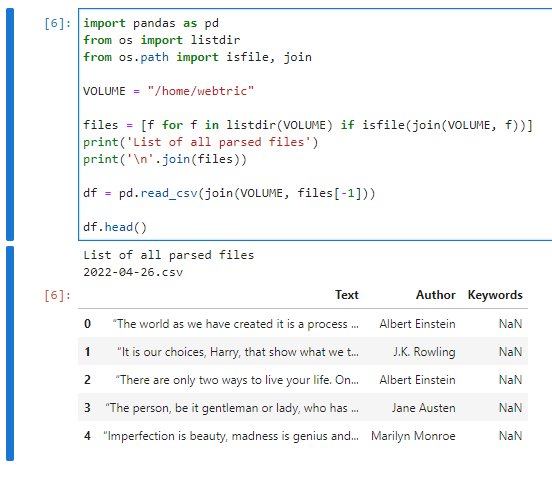

Here’s a neat script to access the last scraped file from your “/home/webtric” volume:
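A script along those lines might look like the following minimal, stdlib-only sketch, which returns the most recently modified file under the volume. It assumes the scraper writes CSV files somewhere below /home/webtric. The `*.csv` pattern and the `latest_file` name are assumptions for illustration.

```python
from pathlib import Path


def latest_file(directory: str = "/home/webtric", pattern: str = "*.csv") -> Path:
    """Return the most recently modified file matching `pattern` anywhere under `directory`."""
    # rglob walks subfolders too (e.g. an outputs/quotes/ subdirectory)
    candidates = [p for p in Path(directory).rglob(pattern) if p.is_file()]
    if not candidates:
        raise FileNotFoundError(f"no files matching {pattern!r} under {directory}")
    # st_mtime is the last-modification time; the largest value is the newest file
    return max(candidates, key=lambda p: p.stat().st_mtime)
```

If pandas is available in the Jupyter image, `pd.read_csv(latest_file())` would then load the newest file into a DataFrame.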

And here’s how it should look in the end.

Use Cases

I made this project primarily for fun and learning, and you could use it the same way. From a practical point of view, having Webtric in Docker is useful for scaling: you can now scrape in parallel by spawning more containers. Remember the golden rule of scraping, though:

Be gentle with the sites you’re parsing.
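Here is a minimal sketch of what being gentle can look like in Python. It uses the standard library's `urllib.robotparser` to honour robots.txt and enforces a minimum delay between requests to one site. The `PoliteFetcher` class, the "webtric" user-agent string and the one-second default are assumptions for illustration, not part of Webtric. Each container would keep its own delay, so the combined request rate grows with every container you spawn.

```python
import time
from typing import Iterable, Optional
from urllib.robotparser import RobotFileParser


class PoliteFetcher:
    """Gatekeeper that honours robots.txt rules and spaces out requests to one site."""

    def __init__(self, robots_lines: Iterable[str], user_agent: str = "webtric", min_delay: float = 1.0):
        self.user_agent = user_agent
        self.rules = RobotFileParser()
        self.rules.parse(robots_lines)
        # Respect the site's own Crawl-delay if it asks for more than our minimum
        declared = self.rules.crawl_delay(user_agent)
        self.delay = max(min_delay, float(declared)) if declared else min_delay
        self._last: Optional[float] = None

    def wait_turn(self, url: str) -> bool:
        """Return False for disallowed URLs; otherwise sleep as needed and return True."""
        if not self.rules.can_fetch(self.user_agent, url):
            return False
        if self._last is not None:
            remaining = self.delay - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()
        return True
```

The robots.txt lines are passed in directly here to keep the sketch offline. In a real run you could instead call `set_url()` and `read()` on the `RobotFileParser` to download the site's file.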

It is also easier to host your spider now, as most modern cloud hosting providers support containers. I’ll prepare a tutorial on how to make that work in the near future, so stay tuned.

DISCLAIMER: This is still an early version of the tool, and I’d love to get more feedback from my readers. If something fails for you, don’t hesitate to share it in the comments here or on GitHub, and I’ll try to fix it ASAP. Pull requests are also appreciated.

How I scrape lots of sites with one Python script — Part 2 with Docker was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Mykhailo Kushnir | Sciencx (2022-04-27T13:01:47+00:00) How I scrape lots of sites with one Python script — Part 2 with Docker. Retrieved from https://www.scien.cx/2022/04/27/how-i-scrape-lots-of-sites-with-one-python-script-part-2-with-docker/
