This content originally appeared on Level Up Coding - Medium and was authored by Emirhan Akdeniz

[Failed] Investigating Machine Learning Techniques to Improve Spec Tests — V

Intro

This post is part of a series of blog posts on Artificial Intelligence Implementation. If you are interested in the background of the story or how it continues:

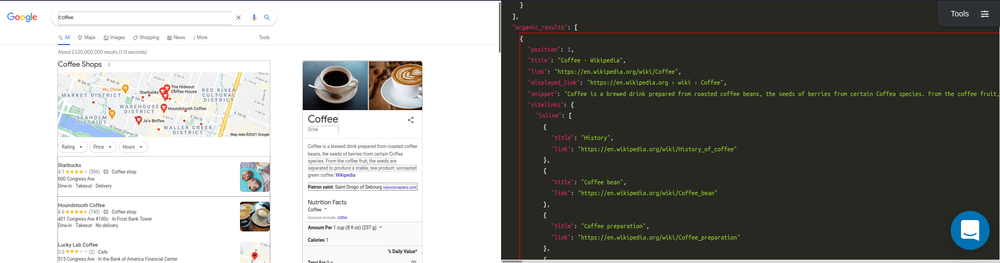

This week we’ll showcase storing and reusing trained weights, and talk about the crucial mistake I made in calculating the early results of the model. We will be using SerpApi’s Google Organic Results Scraper API for the data collection. You can also check the playground for a more detailed view of the data we will use.

Crucial Mistake I made in Calculating Model Accuracy

Let me first show how I calculated the accuracy using training examples:

    # Split the training set by label: "1" is a snippet, "0" is not.
    true_examples = key_array.map { |el| el.first == "1" ? el[1] : nil }.compact
    false_examples = key_array.map { |el| el.first == "0" ? el[1] : nil }.compact

    predictions = []

    # `test` is the prediction helper, defined outside this snippet.
    false_examples.each do |example|
      prediction = test example, 2, vector_array, key_array
      predictions << prediction
    end

    # Flip the non-snippet results: a prediction of 0 is correct there,
    # so it is stored as 1 (correct) and a prediction of 1 as 0 (wrong).
    predictions.map! { |el| el == 1 ? 0 : 1 }

    true_examples.each_with_index do |example, index|
      puts "--------------"
      prediction = test example, 2, vector_array, key_array
      predictions << prediction
      puts "Progress #{index.to_f / true_examples.size}"
      puts "--------------"
    end

    # Share of correct predictions over both classes combined.
    prediction_train_accuracy = predictions.sum.to_f / predictions.size

    puts "Prediction Accuracy for Training Set is: #{prediction_train_accuracy}"

We take the examples that are snippets and the ones that are not, and run them through the trained model. If a non-snippet example was predicted as 0, it was counted as a correct prediction, and if a snippet example was predicted as 1, it was also counted as correct. In the end, the rate of correct predictions was 0.8187793427230047. I later used a larger dataset and the result was around 89%.

Here’s the logical fallacy I fell into: there were far more non-snippet results than snippet results. Let's suppose the ratio is 1:9 in a set of 10 examples. If the model tends to call things non-snippet rather than snippet more or less at random, as was the case, then most non-snippet results will be predicted correctly, which biases the overall result.
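To make the effect concrete, here is a minimal sketch, assuming a hypothetical set of 10 examples with the 1:9 split and a stand-in "model" that always answers non-snippet. The names `labels` and `always_negative` are illustrative and are not part of the original code.

```ruby
# Hypothetical labels: 1 snippet and 9 non-snippets (the assumed 1:9 split).
labels = [1] + [0] * 9

# Stand-in "model" that ignores its input and always predicts non-snippet (0).
always_negative = ->(_example) { 0 }

predictions = labels.map { |label| always_negative.call(label) }

# Overall accuracy: share of predictions that match their label.
correct = predictions.zip(labels).count { |pred, label| pred == label }
accuracy = correct.to_f / labels.size

puts "Accuracy: #{accuracy}"
```

Even though this stand-in never finds a single snippet, its overall accuracy looks high, because the nine non-snippet examples dominate the average.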

The reason it increased to 89 percent on the larger dataset was that there were more types of keys in it. This made the ratio of snippet to non-snippet examples even smaller, which strengthened the bias caused by the non-snippet predictions.

In reality, I should’ve tested the model using only snippet examples. I did, and found out that the model is useless. I will try to tweak it and find a way to make it useful. But I think it is better to first show why my calculation was flawed, to inform everybody and prevent other people from making the same mistake.
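A minimal sketch of such a per-class check, assuming labels and predictions are plain arrays of 0 and 1. The helper `per_class_accuracy` and the data are made up for illustration and are not from the original code.

```ruby
# Hypothetical helper: accuracy computed separately for each label,
# so the majority class cannot hide failures on the minority class.
def per_class_accuracy(labels, predictions)
  pairs = labels.zip(predictions)
  pairs.group_by(&:first).transform_values do |group|
    group.count { |label, pred| label == pred }.to_f / group.size
  end
end

# Made-up data: 2 snippets and 8 non-snippets; the model misses both snippets.
labels      = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
predictions = [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]

result = per_class_accuracy(labels, predictions)
puts "Snippet accuracy:     #{result[1]}"
puts "Non-snippet accuracy: #{result[0]}"
```

Reporting the two numbers side by side makes a model that only recognizes the majority class visible immediately.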

Storing Trained Weights in a Custom Way

It took me a while to realize my mistake. Before that, I had created a way to store the trained weights and make predictions with the model.

Here’s the full code for it:

    require 'csv'
    require 'json'
    require 'matrix'

    class Predict
      # State lives in class variables, so Predict.new only stores the
      # configuration used by the class methods below.
      def initialize csv_path, trained_weights_path, vocab_path, object = "Snippet", k = 2
        @@csv_path = csv_path
        @@trained_weights_path = trained_weights_path
        @@vocab_path = vocab_path
        @@object = object
        @@k = k
        @@key_arr = []
        @@vector_arr = []
        @@weights = []
        @@maximum_word_size = 0
        @@vocab = {}
      end

      # Loads weights, vocabulary, and training data, then pads the vectors.
      def self.construct
        @@weights = initialize_trained_weights @@trained_weights_path
        @@vocab = read_vocab @@vocab_path
        @@key_arr = read_csv @@csv_path
        @@vector_arr = define_training_set @@key_arr
        @@maximum_word_size = @@weights.size
        extend_vectors
      end

      def self.read_csv csv_path
        CSV.read(csv_path)
      end

      def self.read_vocab vocab_path
        vocab = File.read vocab_path
        JSON.parse(vocab)
      end

      # Reads the stored weights (a JSON array) back into a Vector.
      def self.initialize_trained_weights trained_weights_path
        weights = File.read trained_weights_path
        weights = JSON.parse(weights)
        Vector[*weights]
      end

      # Each row is [label, text]; only the text is converted.
      def self.define_training_set vectors
        vectors.map { |word| word_to_tensor word[1] }
      end

      def self.default_dictionary_hash
        {
          /\"/ => "",
          /\'/ => " \' ",
          /\./ => " . ",
          /,/ => ", ",
          /\!/ => " ! ",
          /\?/ => " ? ",
          /\;/ => " ",
          /\:/ => " ",
          /\(/ => " ( ",
          /\)/ => " ) ",
          /\// => " / ",
          /\s+/ => " ",
          /<br \/>/ => " , ",
          /http/ => "http",
          /https/ => " https ",
        }
      end

      def self.tokenizer word, dictionary_hash = default_dictionary_hash
        word = word.downcase
        # Note: sub! replaces only the first match of each pattern.
        dictionary_hash.keys.each do |key|
          word.sub!(key, dictionary_hash[key])
        end
        word.split
      end

      # Maps each token to its vocabulary index (nil if the token is unknown).
      def self.word_to_tensor word
        token_list = tokenizer word
        token_list.map { |token| @@vocab[token] }
      end

      # Pads a vector with 1s up to the length of the weight vector.
      def self.extend_vector vector
        vector_arr = vector.to_a
        (@@maximum_word_size - vector.size).times { vector_arr << 1 }
        Vector[*vector_arr]
      end

      def self.extend_vectors
        @@vector_arr.each_with_index do |vector, index|
          @@vector_arr[index] = extend_vector vector
        end
      end

      # Multiplies each element of the vector by its weight, in place.
      def self.product vector
        @@weights.each_with_index do |weight, index|
          vector[index] = weight * vector[index]
        end
        vector
      end

      def self.euclidean_distance vector_1, vector_2
        subtractions = (vector_1 - vector_2).to_a
        subtractions.map! { |sub| sub * sub }
        Math.sqrt(subtractions.sum)
      end

      def self.execute example
        example_vector = word_to_tensor example
        # Unknown tokens are encoded as 0.
        example_vector.map! { |el| el.nil? ? 0 : el }
        example_vector = extend_vector example_vector
        weighted_example = product example_vector

        distances = @@vector_arr.map do |comparison_vector|
          euclidean_distance(comparison_vector, weighted_example)
        end

        # Pick the k nearest training vectors, masking each one once chosen.
        indexes = []
        @@k.times do
          index = distances.index(distances.min)
          indexes << index
          distances[index] = Float::INFINITY
        end

        predictions = indexes.map { |index| @@key_arr[index].first.to_i }

        puts "Predictions: #{predictions}"

        # Float division: the original integer division truncated the
        # average to 0 unless all k neighbours were labeled 1.
        prediction = predictions.sum.to_f / predictions.size

        if prediction < 0.5
          puts "False - Item is not #{@@object}"
          return 0
        else
          puts "True - Item is #{@@object}"
          return 1
        end
      end
    end

    csv_path = "organic_results/organic_results__snippet.csv"
    trained_weights_path = "organic_results/snippet_weights.json"
    vocab_path = "organic_results/vocab.json"

    Predict.new csv_path, trained_weights_path, vocab_path, "Snippet", 5
    Predict.construct

    # Run the model on the snippet examples only (rows labeled "1").
    true_examples = CSV.read(csv_path)
    true_examples = true_examples.map { |el| el.first == "1" ? el[1] : nil }.compact

    true_examples.each_with_index do |example, index|
      puts "--------"
      puts "#{index}"
      Predict.execute example
      puts "--------"
    end
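The class above only loads `snippet_weights.json` and `vocab.json`; the code that writes them is not shown in this post. Below is a minimal sketch of how the round trip could look, assuming the weights are a `Vector` and the vocabulary is a token-to-index `Hash`. The values and the temporary file paths are made up for illustration.

```ruby
require 'json'
require 'matrix'
require 'tmpdir'

# Made-up stand-ins for a trained weight vector and a vocabulary.
weights = Vector[0.5, 1.25, -2.0]
vocab = { "hello" => 1, "world" => 2 }

loaded_weights, loaded_vocab = Dir.mktmpdir do |dir|
  weights_path = File.join(dir, "weights.json")
  vocab_path = File.join(dir, "vocab.json")

  # Store: the Vector is serialized as a plain JSON array.
  File.write(weights_path, JSON.generate(weights.to_a))
  File.write(vocab_path, JSON.generate(vocab))

  # Reload: rebuild the Vector from the parsed array.
  [
    Vector[*JSON.parse(File.read(weights_path))],
    JSON.parse(File.read(vocab_path))
  ]
end

puts "Weights restored: #{loaded_weights == weights}"
puts "Vocab restored:   #{loaded_vocab == vocab}"
```

Plain JSON keeps the stored files readable and easy to inspect by hand, at the cost of having to rebuild the `Vector` on load.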

Conclusion

I made an honest attempt in an area that could relieve some of the pressure of writing tests, and I failed. Subtle mistakes in custom code can occur and result in overall failure. I am grateful I gave it a shot and saw that this approach was not as effective as I thought it was. I will work on this further in the future. However, the next blog post will most likely be on a different topic. I would like to apologize to the reader for giving misleading results in the previous blog post, and thank them for their attention.

Originally published at https://serpapi.com on May 4, 2022.



Emirhan Akdeniz | Sciencx (2022-05-06T03:58:23+00:00) [Failed] Investigating Machine Learning Techniques to Improve Spec Tests — V. Retrieved from https://www.scien.cx/2022/05/06/failed-investigating-machine-learning-techniques-to-improve-spec-tests-v/
