This content originally appeared on Bits and Pieces - Medium and was authored by Akash Yadav

Our previous article covered thread-based asynchronous programming. Moving forward, let's examine the truly non-blocking nature of asynchronous programming and how IO completion notifications, treated as events, are used to build systems that can withstand tremendous workloads.

What is event-based programming?

With an event-based style, we ensure that our application contains no blocking code. After initiating an IO operation such as a database call, a thread switches over to other tasks instead of waiting. The thread is then notified (interrupted) when the IO operation completes, so it can handle the results. From the calling thread's perspective, the entire IO stage is outsourced to someone else, who sends a notification when the job is complete.

As you can see, this is very similar to what we discussed in the thread-based asynchronous programming article. The crucial difference is that the thread-pool model only appears to be non-blocking: the blocking is simply pushed across to another thread, whereas this model has no blocking code at all. There is no need for a thread pool on the other side of the calling thread to wait for the result and notify the caller. The question, however, is who issues the notification once the IO operation is complete.
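To make the contrast concrete, here is a minimal Python sketch of the thread-pool style, assuming a local socket.socketpair() stands in for a database connection. The calling thread gets control back immediately from submit(), but a worker thread sits blocked inside recv() until the data arrives, so the blocking has only been moved, not removed. The function name blocking_query is hypothetical, chosen for illustration.

```python
import socket
from concurrent.futures import ThreadPoolExecutor

# A connected pair of sockets stands in (assumption) for "our app" and a remote database.
client, server = socket.socketpair()

def blocking_query(sock: socket.socket) -> bytes:
    # Hypothetical IO call: recv() blocks this worker thread until data arrives.
    return sock.recv(1024)

with ThreadPoolExecutor(max_workers=1) as pool:
    future = pool.submit(blocking_query, client)
    # The calling thread is free to continue right away...
    still_pending = not future.done()
    # ...but a worker thread stays parked in recv() until the "database" replies.
    server.sendall(b"row-42")
    result = future.result(timeout=5)

client.close()
server.close()
print(still_pending, result)
```

The pool hides the wait from the caller, yet one thread is still consumed per in-flight request.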

How does event-based programming work?

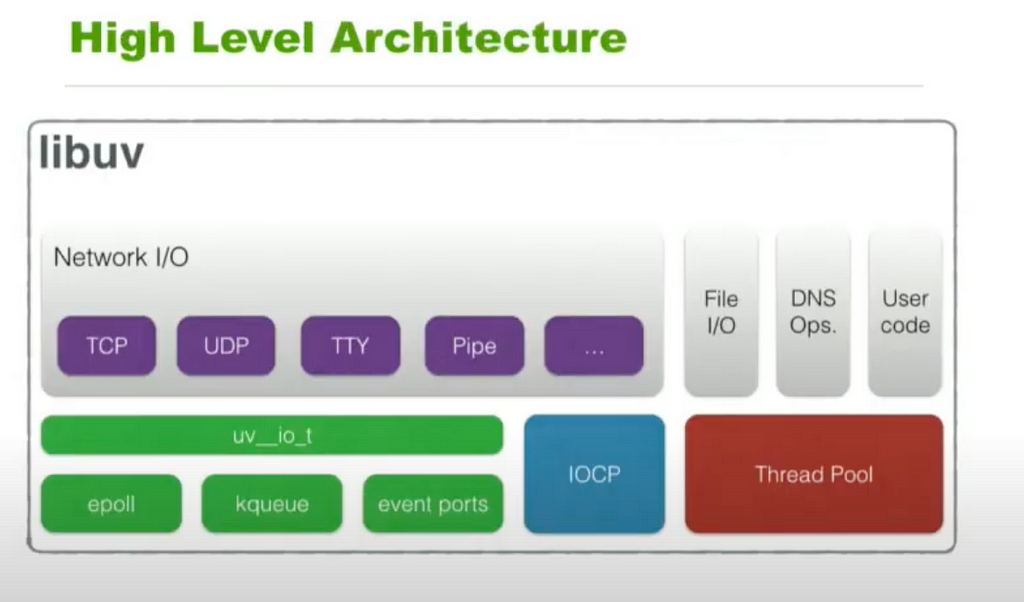

UNIX and its derivatives support accepting an IO hook from an application, keeping track of the IO lifecycle, and notifying the application when the IO is complete (for example, epoll on Linux and kqueue on BSD and macOS). With this feature, the application can focus on triggering IO and handling its results rather than managing the IO itself. libuv, a C library originally written for Node.js, abstracts away this same IO management.
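libuv's own API is in C; as a rough analogue, the sketch below uses Python's asyncio, which plays a similar role on top of the OS poller. loop.add_reader() acts as the "IO hook": the application hands over a file descriptor plus a callback and lets the library track the rest. This assumes a selector-based event loop (the default on Linux and macOS); the Windows default ProactorEventLoop does not support add_reader(). The names main and on_readable are illustrative, and the socket pair simulates a remote service.

```python
import asyncio
import socket

async def main() -> bytes:
    loop = asyncio.get_running_loop()
    # Assumption: a socket pair simulates our connection and the remote service.
    app_sock, db_sock = socket.socketpair()
    app_sock.setblocking(False)
    done: asyncio.Future = loop.create_future()

    def on_readable() -> None:
        # Invoked by the event loop only once the OS reports app_sock as readable.
        loop.remove_reader(app_sock.fileno())
        done.set_result(app_sock.recv(1024))

    # Hand the socket and callback over; no thread sits waiting on this socket.
    loop.add_reader(app_sock.fileno(), on_readable)
    # Simulate the remote side answering a little later.
    loop.call_later(0.05, db_sock.sendall, b"query result")
    try:
        return await done
    finally:
        app_sock.close()
        db_sock.close()

result = asyncio.run(main())
print(result)
```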

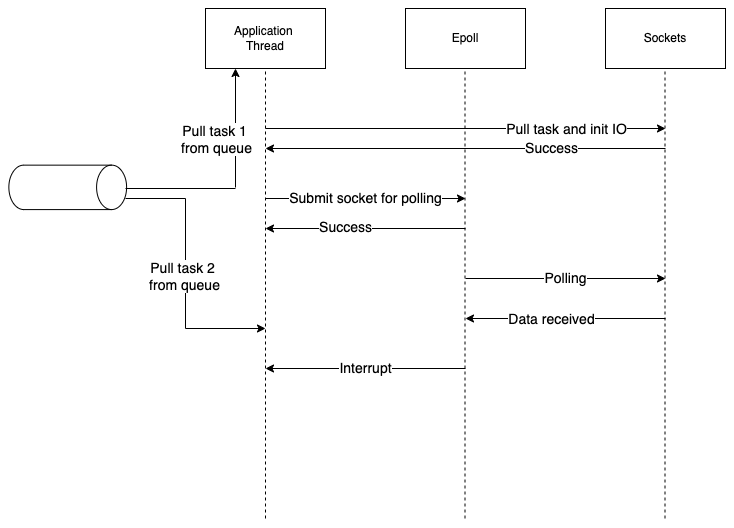

IO is performed by opening a socket and issuing system calls to send and receive data over it. Once the request has been sent, an application using the one-thread-per-request model would simply have its thread poll (or block on) the socket until the response arrives.
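A minimal sketch of that polling style, assuming one dedicated thread per pending request: the thread puts its socket in non-blocking mode and keeps checking it, sleeping briefly between attempts, so the thread is tied up for the whole wait even though it does no useful work. The function poll_for_response and the 10 ms interval are illustrative choices, not part of any particular framework.

```python
import socket
import threading
import time

def poll_for_response(sock: socket.socket, results: list, attempts: list) -> None:
    # Hypothetical handler: this thread is dedicated to one request for the whole IO.
    sock.setblocking(False)
    tries = 0
    while True:
        tries += 1
        try:
            results.append(sock.recv(1024))  # succeeds only once data has arrived
            break
        except BlockingIOError:
            time.sleep(0.01)  # nothing yet: wait a bit and check again
    attempts.append(tries)

client, server = socket.socketpair()
results: list = []
attempts: list = []
worker = threading.Thread(target=poll_for_response, args=(client, results, attempts))
worker.start()

time.sleep(0.1)              # the "database" takes a while to answer...
server.sendall(b"response")  # ...and the polling thread finally sees the data
worker.join(timeout=5)

client.close()
server.close()
print(results, attempts)
```

With thousands of concurrent requests, this model needs thousands of such threads, each spending most of its life waiting.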

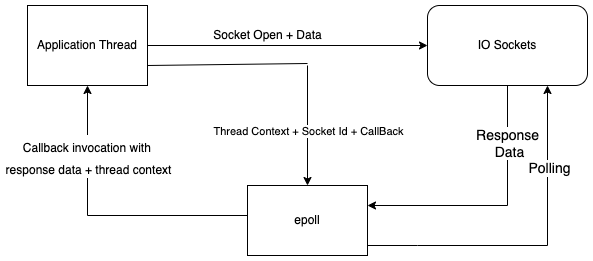

The OS provides a built-in polling facility that can monitor sockets very efficiently. Our application registers its socket with this facility and attaches a hook, i.e. a callback, which is used to notify the application once the socket's inbound data is ready for processing. Since there is no blocking IO, the application thread is now free to process non-IO tasks, which is what allows this model to scale massively. In libuv on Linux, epoll is the mechanism that polls these IO sockets.
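In Python, the standard selectors module exposes this OS facility; DefaultSelector picks the most efficient mechanism available, which is epoll on Linux (kqueue on BSD and macOS). The sketch below registers a socket together with a callback stored in the registration's data field. One detail worth keeping in mind: the kernel itself only reports which sockets are ready, and the stored callback is then invoked by the application-side loop, which is also how libuv dispatches its callbacks. The callback handle_response is hypothetical, and the socket pair simulates a remote service.

```python
import selectors
import socket

sel = selectors.DefaultSelector()  # EpollSelector on Linux
# Assumption: a socket pair simulates our connection and the remote service.
app_sock, db_sock = socket.socketpair()
app_sock.setblocking(False)

received = []

def handle_response(sock: socket.socket) -> None:
    # Hypothetical callback: runs only after the poller reports the socket readable.
    received.append(sock.recv(1024))

# Register interest in "readable" events and attach the callback as opaque data.
sel.register(app_sock, selectors.EVENT_READ, data=handle_response)

ready_before = sel.select(timeout=0)  # nothing to report yet
db_sock.sendall(b"rows")
ready_after = sel.select(timeout=1)   # now reports app_sock as readable

for key, _mask in ready_after:
    key.data(key.fileobj)  # dispatch to the callback registered above

sel.unregister(app_sock)
sel.close()
app_sock.close()
db_sock.close()
print(len(ready_before), received)
```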

All sockets registered with epoll are monitored together, typically from a single thread. When a socket is ready, the callback provided by the application thread is invoked with the response data and the execution context that the application thread stored when handing the socket over. As a result, the application thread is interrupted to handle the output and resumes its flow of execution from that point onward.
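Registration and dispatch combine into a tiny single-threaded event loop. This is a minimal sketch under simplifying assumptions: several socketpair() connections simulate outstanding requests, and the "execution context" is modeled as a small dict stored alongside the callback in each registration's data field. One thread waits on all sockets at once and resumes each request's flow only when its data is ready. The names run_loop, on_reply, and request_id are hypothetical.

```python
import selectors
import socket

def on_reply(sock: socket.socket, context: dict, log: list) -> None:
    # Resume this request's flow using the context saved when it was handed over.
    log.append((context["request_id"], sock.recv(1024)))

def run_loop(sel: selectors.BaseSelector, log: list) -> None:
    # Single thread: wait for readiness on all registered sockets, then dispatch.
    while sel.get_map():
        for key, _mask in sel.select(timeout=1):
            callback, context = key.data
            sel.unregister(key.fileobj)  # one reply per request in this sketch
            callback(key.fileobj, context, log)
            key.fileobj.close()

sel = selectors.DefaultSelector()
remote_ends = []
for request_id in range(3):
    app_sock, remote = socket.socketpair()
    app_sock.setblocking(False)
    sel.register(app_sock, selectors.EVENT_READ,
                 data=(on_reply, {"request_id": request_id}))
    remote_ends.append(remote)

# Replies arrive out of order; the loop handles whichever socket is ready.
for request_id in (2, 0, 1):
    remote_ends[request_id].sendall(f"reply-{request_id}".encode())

log: list = []
run_loop(sel, log)
sel.close()
for remote in remote_ends:
    remote.close()
print(sorted(log))
```

The order in which ready sockets are reported is up to the OS, which is why the result is sorted before printing.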

With an event-based style, task handover and notifications happen across the application-OS boundary. This exchange is what we call the event loop.

Epoll still has to run a thread that polls all the sockets, which is essentially a blocking operation. Isn't that the same thing as a thread pool with only one thread?

That is correct, but our application no longer does this waiting itself; it is completely event-driven. A single waiting point serves every registered socket instead of tying up one thread per pending request, and the OS is extremely efficient at low-level activities such as polling sockets.

I would love to hear from you. You can reach me with any query or feedback, or if you just want to have a discussion, through the following channels:

Drop me a message on LinkedIn or email me at akash.codingforliving@gmail.com

Event-Based Asynchronous Programming was originally published in Bits and Pieces on Medium, where people are continuing the conversation by highlighting and responding to this story.

Akash Yadav | Sciencx (2022-06-03T11:49:35+00:00) Event-Based Asynchronous Programming. Retrieved from https://www.scien.cx/2022/06/03/event-based-asynchronous-programming/
