This content originally appeared on Level Up Coding - Medium and was authored by Emirhan Akdeniz

This is part of a series of blog posts on Artificial Intelligence Implementation. If you are interested in the background of the story or how it goes:

In the previous weeks, we explored how to create your own image dataset automatically using SerpApi’s Google Images Scraper API. This week, we will reshape the structure of training commands, data loading, and training to make everything more efficient and fully customizable.

New Commands Structure for Automatic Training

In past weeks, we passed a commands dictionary to our endpoint to train a model automatically. I’ll point out the changes I made while explaining the new structure. The idea is to have more customizable fields in the commands dictionary so that you can train and test your models at scale. We will give custom control over everything except the model.

In previous weeks, we also introduced a way to fetch a random image from Couchbase Server for training. This approach has both good and bad side effects, but luckily, the bad ones can be addressed in upcoming updates.

The advantages of fetching images randomly from a Couchbase server are that we can operate without knowing the dataset size, operations are fast, storage is efficient, and asynchronous fetching from the storage server becomes possible later on. That last point could be crucial for reducing training time, which is a major problem when training large image classifiers. We will see whether there are other bumps in the road to general concurrent training.

The downside of fetching images from a Couchbase server, for now, is that there is no separation between training and testing data. Because we fetch images randomly from the storage server, we could also train the network on the same image twice. These side effects matter only when using a small number of images. Since SerpApi’s Google Images Scraper API can provide up to 100 images per call, they can be ignored within the scope of this endeavour. In the future, we could add another key to the image object in the Couchbase server to control which images are used for the model being trained.

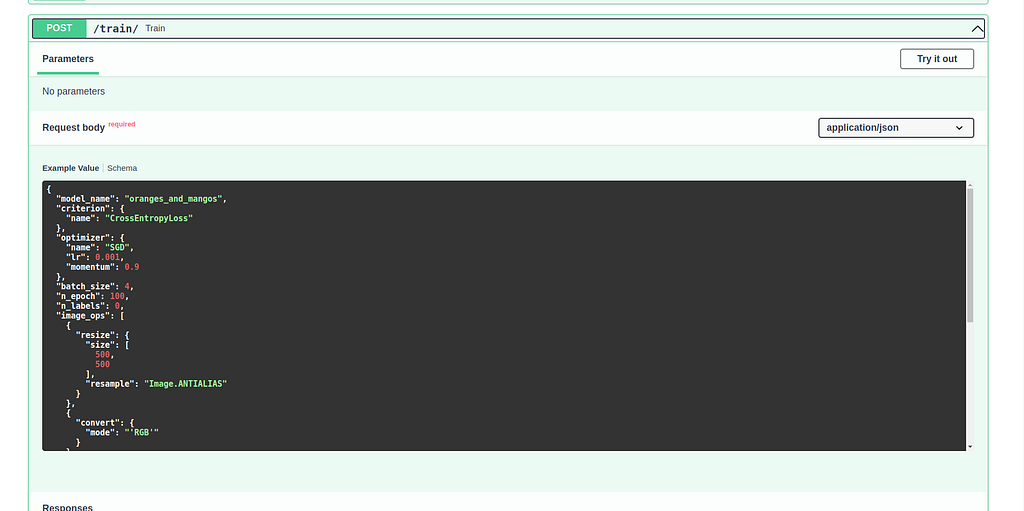

Let’s take a look at the new command structure:
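As a rough illustration of the conventions described in this section, a commands dictionary might look like the one below. Every key name and value here (model_name, n_epoch, batch_size, the placeholder label strings, and so on) is a hypothetical example, not the author’s exact defaults.

```python
# Hypothetical commands dictionary; key names and values are illustrative
# assumptions modeled on the conventions described in this post.
commands = {
    "model_name": "example_classifier",  # name used when saving the model
    "n_epoch": 100,                      # number of training iterations
    "batch_size": 4,                     # random images fetched per iteration
    # "name" selects the class; every other key becomes a keyword argument
    "criterion": {"name": "CrossEntropyLoss"},
    "optimizer": {"name": "SGD", "lr": 0.001, "momentum": 0.9},
    # PIL Image methods applied in order; string arguments carry inner quotes
    "image_ops": [
        {"resize": {"size": (500, 500)}},
        {"convert": {"mode": "'RGB'"}},
    ],
    # True = construct without arguments, dict = keyword arguments
    "transform": {
        "ToTensor": True,
        "Normalize": {"mean": (0.5, 0.5, 0.5), "std": (0.5, 0.5, 0.5)},
    },
    "target_transform": {},  # same format as "transform"; left empty here
    # queries whose images are stored in Couchbase (placeholder values)
    "label_names": ["label one", "label two"],
}
```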

As you can see, the parameters of criterion and optimizer can now be passed in the dictionary body. Every key except name is used as a parameter.

For the criterion, the object created in this context will be:

For optimizer:
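The "name plus keyword arguments" convention maps naturally onto getattr. The helper below is a hypothetical sketch, not the author’s code: it looks the class up on whatever namespace you pass in (torch.nn for the criterion, torch.optim for the optimizer) and forwards every other key as a keyword argument. Positional arguments, such as the model’s parameters for an optimizer, are passed separately.

```python
from typing import Any, Mapping


def build_from_spec(namespace: Any, spec: Mapping[str, Any], *args: Any) -> Any:
    """Call namespace.<spec["name"]>(*args, **other_keys).

    Hypothetical helper: "name" picks the attribute, every other key in the
    spec is forwarded as a keyword argument.
    """
    params = {key: value for key, value in spec.items() if key != "name"}
    factory = getattr(namespace, spec["name"])  # AttributeError for unknown names
    return factory(*args, **params)
```

With PyTorch installed, `build_from_spec(torch.nn, {"name": "CrossEntropyLoss"})` would produce `torch.nn.CrossEntropyLoss()`, and `build_from_spec(torch.optim, {"name": "SGD", "lr": 0.001, "momentum": 0.9}, model.parameters())` would produce `torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)`.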

image_ops lists image manipulation methods of the Image class from the PIL library. Each element is itself a function to be called:

and

Notice that to pass a string argument, we nest it in an extra pair of quotes.
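The extra quotes make sense if each entry is turned into source text such as `img.convert(mode='RGB')` and then evaluated. The helper below is a hypothetical sketch of that idea, not the author’s code: it only builds the call string, and evaluating such strings with `eval()` should be reserved for trusted input.

```python
from typing import Any, Mapping


def op_to_call_string(op: Mapping[str, Any], target: str = "img") -> str:
    """Render one image_ops entry as source text, e.g. "img.convert(mode='RGB')".

    Hypothetical helper: parameter values are pasted in verbatim via str(), so
    a string argument must carry its own inner quotes ("'RGB'").
    """
    (method, params), = op.items()  # each entry names exactly one method
    if params is True:
        # assumed to mean "call the method without arguments"
        return f"{target}.{method}()"
    args = ", ".join(f"{key}={value}" for key, value in params.items())
    return f"{target}.{method}({args})"
```

Without the inner quotes, `{"convert": {"mode": "RGB"}}` would render as `img.convert(mode=RGB)`, which fails with a NameError when evaluated. A safer alternative is `getattr(img, method)(**params)` with plain string values, which needs no extra quoting at all.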

transform and target_transform create a list of torchvision.transforms objects, which are composed and applied to the image after the PIL operations above. True means an operation without parameters. In our case:

For entries whose value is a dictionary, the dictionary’s items are passed as parameters:
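The True-or-dictionary rule can be expressed as a small loop. The sketch below is hypothetical (it is not the author’s create_transforms): it takes the namespace as an argument so that, in practice, you would pass `torchvision.transforms`, and it relies on dictionaries preserving insertion order so that, for example, ToTensor runs before Normalize.

```python
from collections.abc import Mapping
from typing import Any, List


def build_transform_list(namespace: Any, spec: Mapping) -> List[Any]:
    """Create one object per entry of a transform spec, in insertion order.

    Hypothetical helper: True means "construct with no arguments", a dict is
    unpacked as keyword arguments.
    """
    created = []
    for name, params in spec.items():
        factory = getattr(namespace, name)
        if params is True:
            created.append(factory())
        elif isinstance(params, Mapping):
            created.append(factory(**params))
        else:
            raise ValueError(f"unsupported parameters for {name!r}: {params!r}")
    return created
```

With torchvision installed, `transforms.Compose(build_transform_list(transforms, commands["transform"]))` would yield `Compose([ToTensor(), Normalize(mean=..., std=...)])` for a spec like the one described above.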

Fetching Random Images from a Storage Server with Custom Dataset and Custom Dataloader

Here I had to introduce a way to read images from the database instead of local storage, and return them as tensors.

Another challenge was to ditch torch.utils.data.DataLoader and introduce a way to return images as tensors just like that class does. The reason was simple: DataLoader iterates over a dataset, whereas we need to fetch batch_size random items and return them just like before.

Here is the refactored version of imports:

Let’s initialize the Dataset object:

Notice that we no longer pass a type of operation (training or testing). Instead, we pass a database object. All initialization has also been changed to work with the new command structure.

The create_transforms function recursively creates the transform objects. Each created object is appended to a list, and the list is composed in the main function.

Instead of the indexing method (__getitem__), we now use another function:

Wrapping this in while True may seem brutal, but since the database contains images, fetching a new random one is never a problem. I ran into minor problems with some of the images in Couchbase storage, but I didn’t investigate further; this could be handled better in the future.

For now, we pick a random label from the label_names provided in the commands dictionary, then build a one-hot dictionary of the label.
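Taken together, picking a label, encoding it, and retrying on a broken record can be sketched as below. This is a hypothetical illustration: fetch_image stands in for the Couchbase lookup, and the one-hot dictionary is assumed to map every label name to 0 or 1. Unlike a bare while True, the sketch caps the number of attempts, so a database full of broken records fails loudly instead of looping forever.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple


def sample_labeled_item(
    label_names: List[str],
    fetch_image: Callable[[str], bytes],
    rng: Optional[random.Random] = None,
    max_attempts: int = 100,
) -> Tuple[bytes, Dict[str, int]]:
    """Pick a random label, encode it one-hot, and fetch an image for it.

    Hypothetical helper: fetch_image stands in for the database lookup and
    may raise on a broken record, in which case another draw is made.
    """
    rng = rng or random.Random()
    for _ in range(max_attempts):
        label = rng.choice(label_names)
        # one-hot mapping: 1 for the drawn label, 0 for every other label
        one_hot = {name: int(name == label) for name in label_names}
        try:
            image = fetch_image(label)
        except Exception:
            continue  # skip the problematic image and draw again
        return image, one_hot
    raise RuntimeError(f"no usable image after {max_attempts} attempts")
```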

Finally, we fetch the image from the database. The ["$1"] part is, admittedly, another lazy programming error from a previous week’s blog post, though not a vital one. We take the base64 string of the image, decode it into bytes, wrap the bytes in a BytesIO object, and then read it with Image.
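Couchbase’s query language names an unaliased expression in a SELECT clause `$1`, which is likely where that key comes from; giving the expression an alias would give it a readable name instead. The decoding step itself needs only the standard library, as in the hypothetical helper below, which assumes the base64 string sits under the given key of a query row.

```python
import base64
import io
from typing import Any, Mapping


def row_to_image_buffer(row: Mapping[str, Any], key: str = "$1") -> io.BytesIO:
    """Turn a query row holding a base64 string into an in-memory file.

    Hypothetical helper: assumes the base64-encoded image is stored under
    `key` in the row.
    """
    raw_bytes = base64.b64decode(row[key])  # base64 text -> raw image bytes
    return io.BytesIO(raw_bytes)            # file-like object for Pillow
```

With Pillow installed, `Image.open(row_to_image_buffer(row))` would then read the image from the buffer.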

In the next part of the function, we apply operations to the image based on the entries in the commands dictionary:

Then come the torch transformations we defined when initializing the Dataset:

Finally, we return the image and the label.

For the dataloader, we pass the commands dictionary, the dataset object, and the Couchbase database adapter:

For training iterations, we now resort to a longer method:

We create a list of images and a list of labels, convert them to numpy.ndarray, and then convert those to float64 PyTorch tensors. Finally, we return them at each epoch.
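The part that replaces DataLoader’s iteration is simply "draw batch_size random items and split them into two lists". The hypothetical helper below sketches that step with the standard library; get_random_item stands in for the dataset’s random-fetch function.

```python
from typing import Callable, List, Tuple, TypeVar

X = TypeVar("X")
Y = TypeVar("Y")


def collect_random_batch(
    get_random_item: Callable[[], Tuple[X, Y]], batch_size: int
) -> Tuple[List[X], List[Y]]:
    """Call get_random_item() batch_size times and split the results.

    Hypothetical helper: repeated random draws replace DataLoader's iteration,
    so the dataset size never needs to be known.
    """
    images: List[X] = []
    labels: List[Y] = []
    for _ in range(batch_size):
        image, label = get_random_item()
        images.append(image)
        labels.append(label)
    return images, labels
```

With NumPy and PyTorch, the conversion described above could then look like `torch.from_numpy(np.stack(images)).double()`, although the exact calls used in the post may differ.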

Training Process

I changed the training process from iterating through a dataset to running epochs that each fetch random images. I also added support for training on a GPU when one is available. Here are the updated imports:

I didn’t change anything in the model. I haven’t had time yet to turn the manual calculation I made there into an automatic one and explain it; that will come in the next weeks:

Let’s initialize our training class in accordance with the information in the commands dictionary:

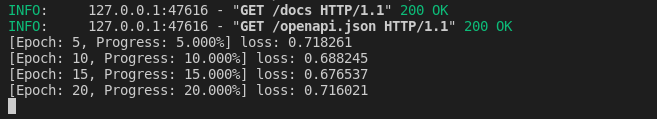

As you can see, the optimizer and criterion are created from the variables we provide. We also need to increase n_epochs, the number of epochs we iterate to train the model, since we no longer go through a full training dataset. So, if we set the number of epochs to 100, training runs 100 times, each time fetching random images from storage and training on them.

Here’s the main training process:

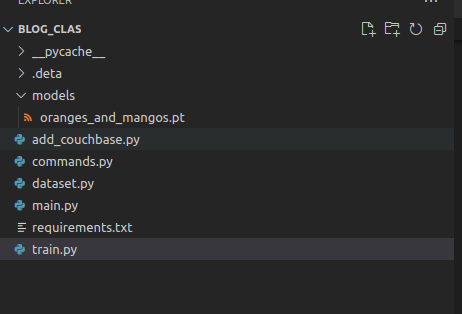

As you can see, we fetch random images at every epoch and train on them using the CPU or GPU, whichever is available. We then report on the process and, at the end, save the model under the designated name:

Showcase

If we run the server and head to localhost:8000/docs, we can try out the new /train endpoint with the default commands:
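Besides the Swagger UI, the endpoint could be called from a script. The sketch below prepares such a request with the standard library; it assumes the route is POST /train on localhost:8000 and that it reads the commands dictionary from a JSON body. Match the actual route exactly (including any trailing slash) before relying on it, since urllib does not follow a 307 redirect for a POST.

```python
import json
import urllib.request


def build_train_request(
    commands: dict, base_url: str = "http://localhost:8000"
) -> urllib.request.Request:
    """Prepare (but do not send) a POST /train request with a JSON body.

    Assumptions: the route is /train and it accepts the commands
    dictionary as JSON.
    """
    body = json.dumps(commands).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/train",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Sending it waits for the server's response:
# with urllib.request.urlopen(build_train_request({"n_epoch": 1})) as response:
#     print(response.status)
```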

As soon as we execute it, we can observe the training process in the terminal:

Once training ends, the model is saved under the designated name:

Conclusion

I am grateful to the brilliant people at SerpApi for making this blog post possible, and to the reader for their attention. We haven’t covered some of the key points I mentioned in prior weeks, but we have cleared the path for some crucial parts. In the following weeks, we will discuss how to make the model customizable and callable from a file name, how to make concurrent calls to SerpApi’s Google Images Scraper API, and how to create a comparative test structure for different models.

Originally published at https://serpapi.com on June 29, 2022.

Using a Customizable Dictionary to Automatically Train a Network with FastApi, PyTorch, and SerpApi was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Emirhan Akdeniz | Sciencx (2022-06-30T11:49:55+00:00) Using a Customizable Dictionary to Automatically Train a Network with FastApi, PyTorch, and SerpApi. Retrieved from https://www.scien.cx/2022/06/30/using-a-customizable-dictionary-to-automatically-train-a-network-with-fastapi-pytorch-and-serpapi/
