This content originally appeared on Level Up Coding - Medium and was authored by Emirhan Akdeniz

If you are interested in the background of the story:

In the previous week, we explored how to use the chips parameter, which narrows down results, and how to query images with a specific height in SerpApi’s Google Images Scraper API to train machine learning models. This week we will explore automatically updating the image dataset with multiple queries of the same kind, and see the results in larger-scale deep learning.

What is AI at scale?

The term refers to how easily you can expand the image dataset used in the machine learning training process, and expand or retrain the machine learning model at scale, with minimal effort. In simple terms, if you have a model that differentiates between a cat and a dog, you should be able to expand AI training easily by automatically collecting monkey images and then retraining or expanding the existing classifier, possibly with different frameworks. AI solutions with large models need effective workflows for model development.

How to reduce training time?

By using clear data, you can reduce noise and build effective artificial intelligence models that may outperform other AI models. In computer vision, this aspect is more important, since the workloads differ significantly from those of algorithms responsible for NLP (natural language processing).

Easily Scraping Clear Data

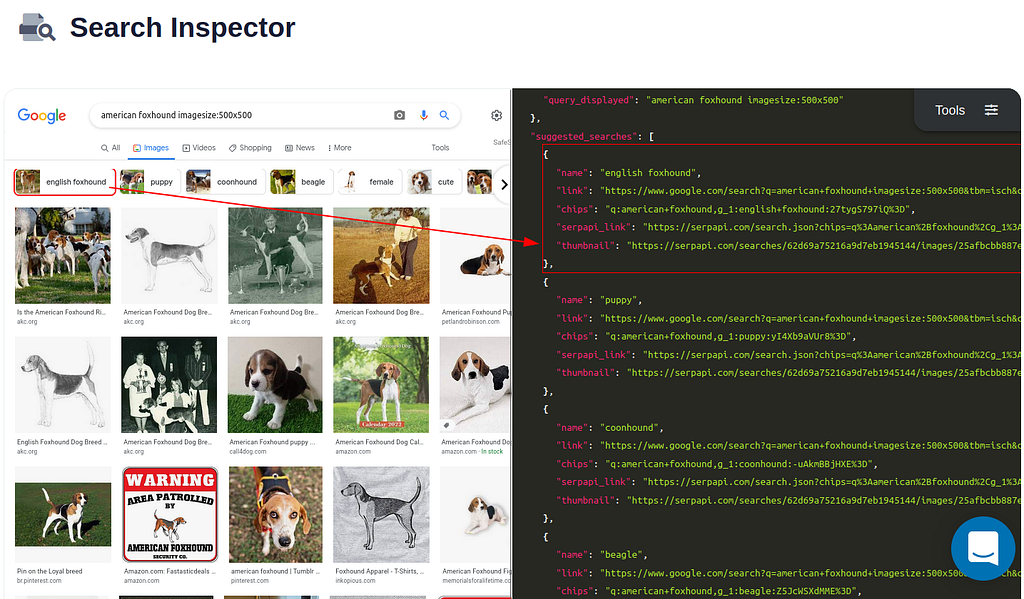

In the previous week, I showed how to get the chips parameter manually from a Google search. SerpApi can create a list of different chips values and serve them under the suggested_searches key.

One thing to notice here is that the chips value differs from query to query. However, you may acquire the chips value of a particular query and run it across different pages for that same query.

For example, the chips value for the query American Foxhound imagesize:500x500 with the specification dog is:

q:american+foxhound,g_1:dog:FSAvwTymSjE%3D

The same chips value for American Hairless Terrier imagesize:500x500 is:

q:american+hairless+terrier,g_1:dog:AI24zk7-hcI%3D

As you can observe, the encrypted part of the chips value differs. This is why you need to make at least one call to SerpApi's Google Images Scraper API to get the desired chips value.
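To make the difference concrete, here is a minimal sketch that splits the two chips values above into their parts. The layout `q:<query>,g_1:<label>:<token>` is only inferred from these two examples, and `parse_chips` is a hypothetical helper, not part of SerpApi.

```python
from urllib.parse import unquote


def parse_chips(chips: str) -> dict:
    """Split a chips string into query, label, and token.

    Assumes the layout "q:<query>,g_1:<label>:<token>" seen in the two
    examples above. The layout is inferred, not documented.
    """
    query_part, group_part = chips.split(",", 1)
    _, label, token = group_part.split(":", 2)
    return {
        "query": query_part.split(":", 1)[1].replace("+", " "),
        "label": label,
        "token": unquote(token),  # "%3D" decodes to "="
    }


foxhound = parse_chips("q:american+foxhound,g_1:dog:FSAvwTymSjE%3D")
terrier = parse_chips("q:american+hairless+terrier,g_1:dog:AI24zk7-hcI%3D")

# Same label, different token: the value cannot be reused across queries.
print(foxhound["label"] == terrier["label"])  # True
print(foxhound["token"] == terrier["token"])  # False
```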

I have added a new key called desired_chips_name within our Query class to check for the results with the desired name in suggested_searches:
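A rough sketch of what such a class could look like is below. Only `desired_chips_name` is named in this post; the other fields, and the use of a standard-library dataclass, are assumptions made for illustration and may not match the real Query class.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Query:
    # Hypothetical fields standing in for whatever the real class holds.
    q: str
    api_key: str
    # Filled in after the first call that looks up the chips value.
    chips: Optional[str] = None
    # The name to look for in suggested_searches, e.g. "dog".
    desired_chips_name: Optional[str] = None


query = Query(
    q="American Foxhound imagesize:500x500",
    api_key="<your SerpApi API key>",
    desired_chips_name="dog",
)
```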

Also there was a need for another function within the Download class for extracting chips value and using it for the search:

This function is responsible for calling SerpApi with a query and returning desired chips parameter as well as updating it in the Query class.
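The lookup step can be sketched as follows, working on an already parsed response dictionary so that no network call is needed. The helper name `find_chips` is hypothetical, and the `name` and `chips` fields inside each `suggested_searches` entry are assumptions about the response shape; check them against a real response before relying on them.

```python
from typing import Optional


def find_chips(results: dict, desired_chips_name: str) -> Optional[str]:
    """Return the chips value of the suggestion with the desired name, or None."""
    wanted = desired_chips_name.strip().lower()
    for suggestion in results.get("suggested_searches", []):
        # Compare case-insensitively so "Dog" and "dog" both match.
        if suggestion.get("name", "").strip().lower() == wanted:
            return suggestion.get("chips")
    return None


# A trimmed, hand-written stand-in for a response (not real API output).
sample_results = {
    "suggested_searches": [
        {"name": "puppy", "chips": "q:american+foxhound,g_1:puppy:EXAMPLE"},
        {"name": "dog", "chips": "q:american+foxhound,g_1:dog:FSAvwTymSjE%3D"},
    ]
}

chips = find_chips(sample_results, "dog")
```

The caller would then store the returned value on the query object before running the paginated searches.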

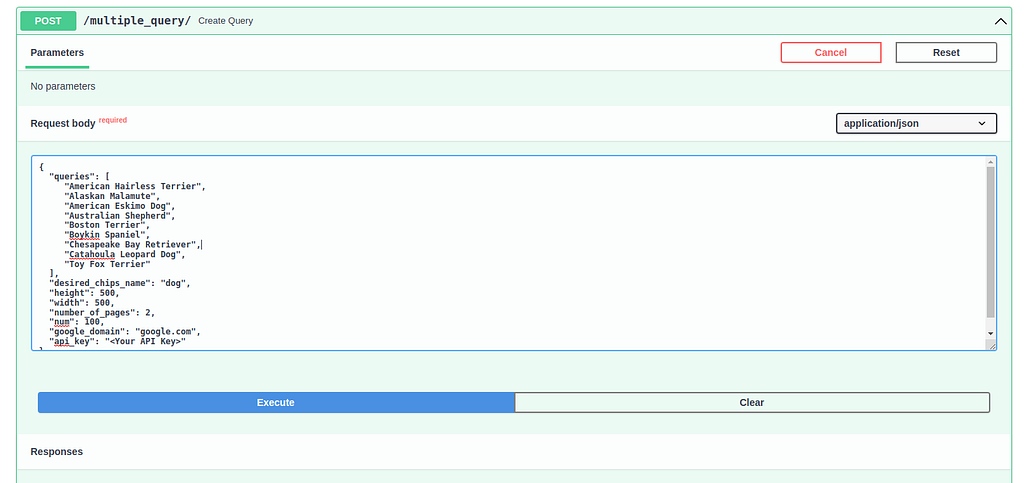

Now that everything necessary is in place to automatically make a call with the desired specification and add it to our ai model dataset to be used for machine learning training, let’s declare a class to be used to do multiple queries:

The queries key is a list of the queries you want to classify between, for example a list of dog breeds.

The desired_chips_name key is their common specification. In this case, it is dog.

height and width are the desired dimensions of the images to be collected for deep learning training.

num is the number of results per page.

number_of_pages is the number of pages you'd like to get. When num is 100, 1 will give around 100 images, 2 will give around 200 images, and so on.

google_domain is the Google domain you'd like to search on.

api_key is your unique API key for SerpApi.
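Put together, the keys above could be modeled roughly like this. The field names come from the list above; the default values, the dataclass base, and the `search_terms` helper are assumptions for illustration. The helper also assumes the imagesize operator takes width first, then height.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MultipleQueries:
    queries: List[str]
    desired_chips_name: str
    height: int
    width: int
    num: int = 100                     # assumed default
    number_of_pages: int = 1           # assumed default
    google_domain: str = "google.com"  # assumed default
    api_key: str = ""

    def search_terms(self) -> List[str]:
        """Build one search string per query (hypothetical helper)."""
        return [f"{q} imagesize:{self.width}x{self.height}" for q in self.queries]


breeds = MultipleQueries(
    queries=["American Foxhound", "American Hairless Terrier"],
    desired_chips_name="dog",
    height=500,
    width=500,
)
print(breeds.search_terms())
```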

Let’s define the function that automatically runs the queries in order and uploads the images to the storage dataset with their classification:

We query SerpApi once with chips_serpapi_search and then use the chips value to run actual searches. Then we move all the images to the image dataset storage to train machine learning models we created in earlier weeks.
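The flow described above can be sketched with the network and storage steps passed in as plain functions, which keeps the control flow easy to follow and to test. All names here (`run_multiple_queries`, `fetch_chips`, `search_page`, `store_image`) are hypothetical; only `chips_serpapi_search` is named in this post, and its real signature is not shown.

```python
from typing import Callable, Dict, Iterable, List, Optional


def run_multiple_queries(
    queries: Iterable[str],
    desired_chips_name: str,
    number_of_pages: int,
    fetch_chips: Callable[[str, str], Optional[str]],
    search_page: Callable[[str, str, int], List[str]],
    store_image: Callable[[str, str], None],
) -> Dict[str, int]:
    """Run every query in order and return how many images were stored per query."""
    stored: Dict[str, int] = {}
    for query in queries:
        # One extra call per query to look up its own chips value.
        chips = fetch_chips(query, desired_chips_name)
        if chips is None:
            stored[query] = 0  # no matching suggestion, skip this query
            continue
        count = 0
        for page in range(number_of_pages):
            for image_url in search_page(query, chips, page):
                # The query string doubles as the classification label.
                store_image(image_url, query)
                count += 1
        stored[query] = count
    return stored
```

Skipping a query when no chips value is found is a design choice of this sketch; the real function may handle that case differently.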

Finally, let’s declare an endpoint for it in main.py:

Training at Scale

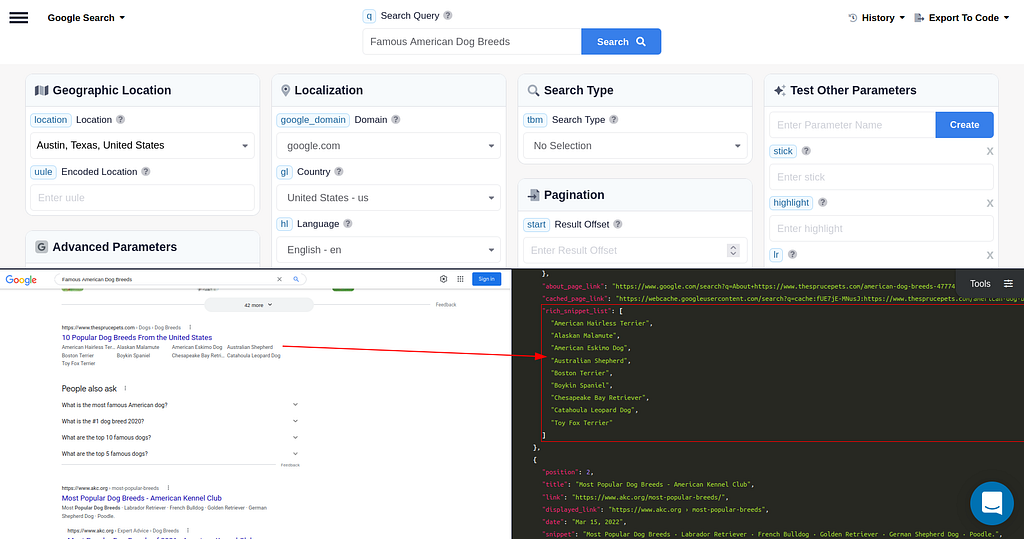

For showcasing purposes, we will be using 8 American dog breeds. In fact, we could’ve even integrated SerpApi’s Google Organic Results Scraper API to automatically fetch Famous American Dog Breeds and pass the result to our queries key. This is outside the scope of today's blog post, but it is a good indicator of SerpApi's multi-purpose use cases.

If you head to playground with the following link, you will be greeted with the desired results:

Now we’ll take the list in the organic_result, and place it in our MultipleQueries object at /multipl_query endpoint:

This dictionary will fetch us 2 pages (around 200 images) with the height of 500, and the width of 500, in Google US, with the chips value for dog (specifically narrowed down to only dog images) for each dog breed we entered.
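As an illustration of the shape of such a dictionary, here is a sketch that uses only the two breeds named earlier in this post; the full list of eight is not reproduced here. The `num` value of 100 and the `google.com` domain are assumptions consistent with the description above.

```python
multiple_queries = {
    # Only the two breeds mentioned earlier; extend with the rest of your list.
    "queries": ["American Foxhound", "American Hairless Terrier"],
    "desired_chips_name": "dog",
    "height": 500,
    "width": 500,
    "num": 100,
    "number_of_pages": 2,
    "google_domain": "google.com",
    "api_key": "<your SerpApi API key>",
}

# Rough upper bounds; the actual number of images per page can be lower.
images_per_breed = multiple_queries["num"] * multiple_queries["number_of_pages"]
total_images = images_per_breed * len(multiple_queries["queries"])
print(images_per_breed, total_images)
```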

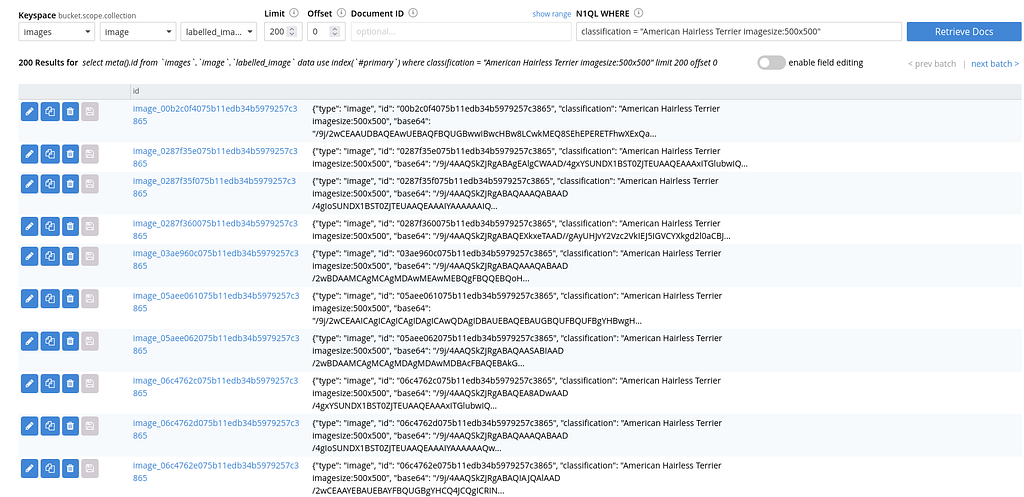

You can observe that the images are uploaded in the N1QL Storage Server:

If you’d like to create your own optimizations for your machine learning project, you may claim a free trial at SerpApi.

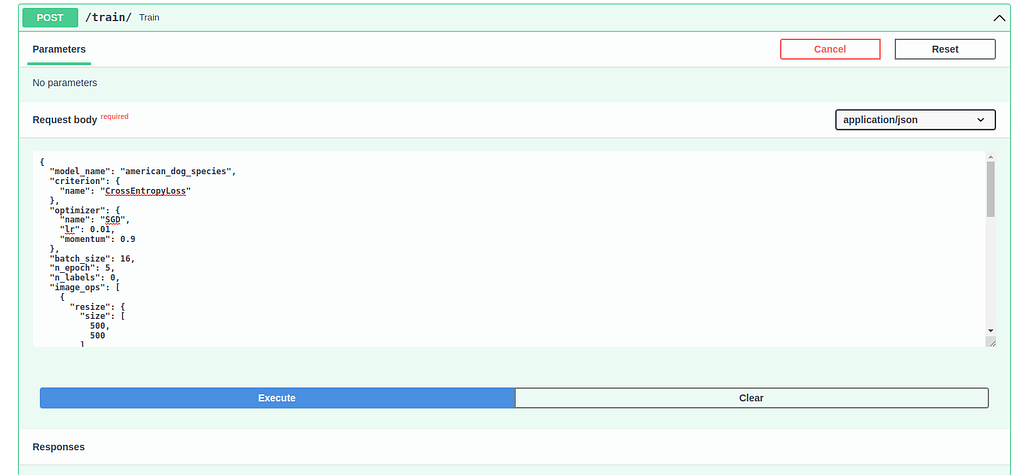

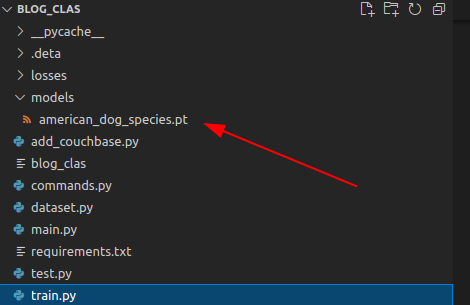

Now that we have everything we need, let’s train the machine learning model that distinguishes between American Dog Breeds at the /train endpoint, with the following dictionary:

The artificial intelligence model will be trained automatically at large scale on the images we uploaded to the dataset, using my computer's GPU in this case (it is also possible to train with a CPU):

I am grateful to the reader for their attention and to the Brilliant People of SerpApi for making this blog post possible. This week, we didn’t focus on creating effective models with good metrics, but on automating their creation. In the following weeks we will work on fixing its deficiencies and missing parts. These include the ability to do distributed training for ML models, support for all optimizers and criterions, customizable model classes, the ability to train machine learning models on different file types (text, video), more effective use of pipelines, use of different data science tools, and support for other machine learning libraries such as TensorFlow alongside PyTorch. I don’t aim to make a state-of-the-art project, but I aim to create a solution for easily creating AI systems. I also aim to create a visual comparison of the different deep learning models created within the ecosystem. We will also discuss visualizing the training process to have effective models. The aim is to have an open source library where you can scale your models at will.

Originally published at https://serpapi.com on July 19, 2022.


AI Training at Scale was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

Emirhan Akdeniz | Sciencx (2022-07-20T12:08:34+00:00) AI Training at Scale. Retrieved from https://www.scien.cx/2022/07/20/ai-training-at-scale-2/
