This content originally appeared on Level Up Coding - Medium and was authored by Vadym Barylo

You cannot mandate productivity, you must provide the tools to let people become their best.

Steve Jobs

A monorepo is a great solution for managing independent, standalone components of a complex project inside a single codebase.

It dramatically reduces the time needed to propagate code changes between layers and independent components, which improves developer productivity and reduces the pain of managing cross-dependencies.

But adopting this approach only as a code-structuring pattern is like proving once more the saying “If my only tool is a hammer, then every problem is a nail”.

Collecting single-purpose projects and components into a single manageable workspace brings an additional benefit: more comprehensive and robust verification of every part of the workspace, as well as of the workspace as a complete solution.

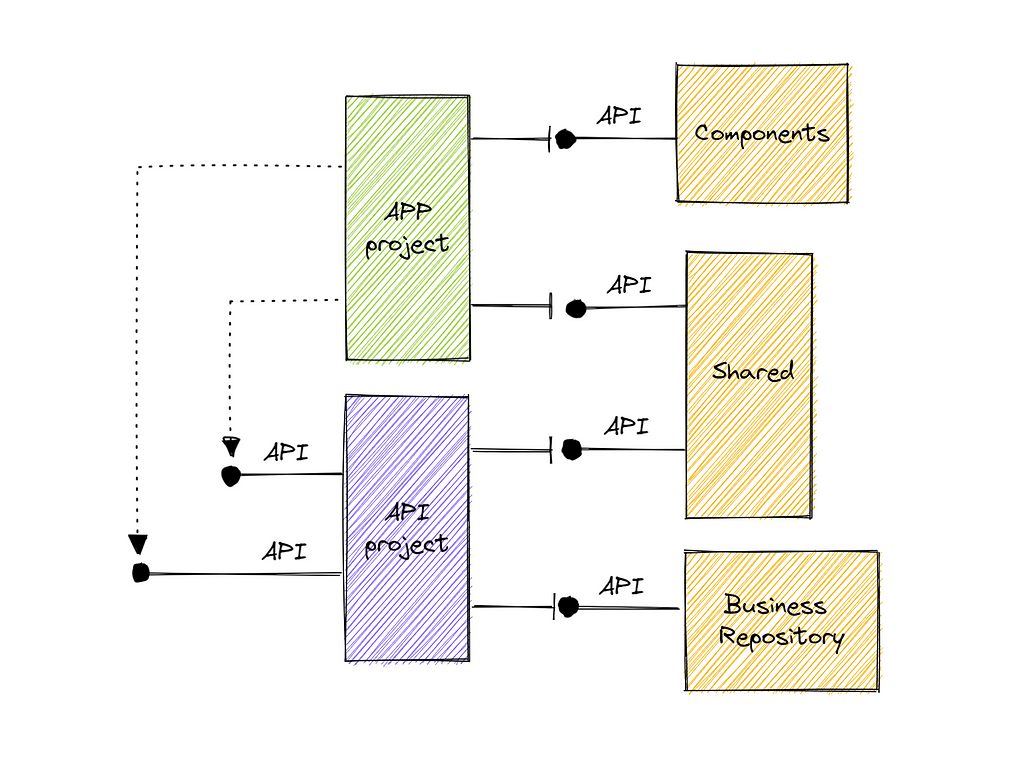

A very common example of such a workspace is a REST API service composed of company-standardized common libraries, together with a client SPA that consumes this API and shares common elements with it, such as transfer objects and authentication/security components.

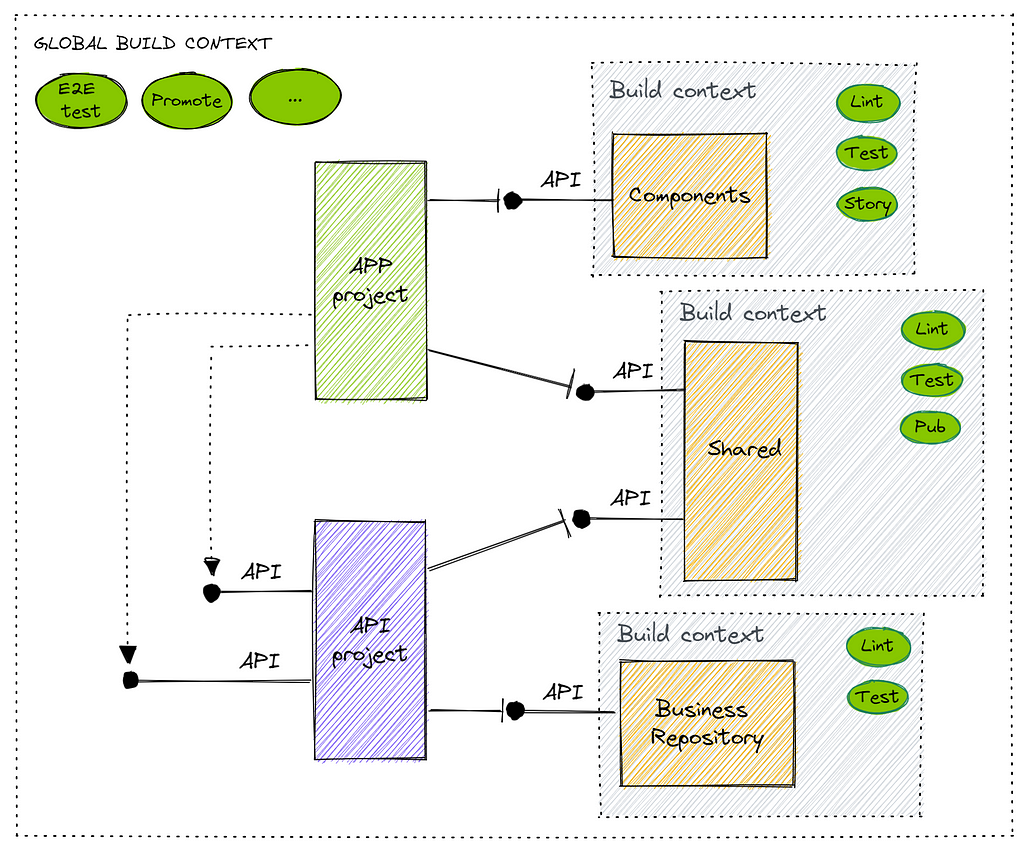

Having all individual components and main consumers of these components (APP and API projects) in the same monorepo is an example of efficient code structuring. Each individual component can have its own personalized build context to produce self-sufficient artifacts for later use.

But what about the global build context? If we test only the individual components, can we be sure that the overall flow (pressing a button in the UI produces the proper grid data after reload) also works as expected?

Keeping root projects as part of a single workspace gives much more power in verifying full E2E behaviors as we have all pieces logically connected together to initiate user clicks, process API requests, and bind responses to the user form.

So maybe we can also consider a monorepo not only as a code-structuring pattern but also as a context of logically connected components that serve a single purpose? And is the “global build context” then a way to manage and manipulate all projects and modules as a complete business unit?

Even with all the automation in place for every dependent component in this tree, we still struggle with the complete end-to-end flow: the API project needs database access to store its data, and the APP project needs a working API server to consume.

So having all required pieces mocked during this check doesn’t give us full confidence about the overall quality state.

So the next step in adopting the monorepo pattern is not only to enforce solution structure for better code re-use but to ensure that all components and root projects can be managed and verified as a single platform that covers full end-to-end user scenarios.

Hands-on experience

Given: REST API service and SPA project (that is a consumer of this API) are part of a single monorepo. The demo solution can be found on GitHub.

Requirements: Ensure a complete E2E check (from browser to database) is part of CI verification and doesn’t require separate deployments as test prerequisites.

Toolset:

- NX monorepo framework

- Angular for SPA

- NestJS for backend API

- TestContainers as a test infrastructure layer provisioner

- Jest and Puppeteer for testing

Design takeaways:

- each application in the monorepo has its own build context, which is complete enough to ensure the application's quality as a fully independent unit. This build context is also sufficient to generate a deployable artifact.

- there is an aggregated common build context whose main purpose is to check the end-to-end flow for all projects connected together in this monorepo. It is also complete and sufficient to ensure solution quality without any mocking strategies applied.

Implementation notes:

The NX framework allows managing many connected pieces using a single build flow. All we need is to properly define the dependency order and the task execution order. We have 3 projects in the solution:

- API — NestJS REST API service that exposes a users endpoint to load and store user payloads in the Postgres database

- APP — Angular front-end project to consume REST API and render stored users

- APP-E2E — test project to verify all connected pieces as a complete unit

Let us keep the API and APP projects independent of each other and make APP-E2E depend on both.
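As a rough illustration of the ordering this dependency layout implies, here is a minimal sketch of a topological sort over the three projects. It is not NX's actual implementation: the `ProjectGraph` type and the `executionOrder` function are hypothetical, and only the `implicitDependencies` field name is borrowed from NX project configuration.

```typescript
// Hypothetical project graph; the field name mirrors NX's `implicitDependencies`.
type ProjectGraph = Record<string, { implicitDependencies: string[] }>;

const projects: ProjectGraph = {
  api: { implicitDependencies: [] },
  app: { implicitDependencies: [] },
  'app-e2e': { implicitDependencies: ['api', 'app'] },
};

// Depth-first topological sort: a project is emitted only after
// all of the projects it depends on have been emitted.
function executionOrder(graph: ProjectGraph): string[] {
  const order: string[] = [];
  const state = new Map<string, 'visiting' | 'done'>();

  const visit = (name: string): void => {
    if (state.get(name) === 'done') return;
    if (state.get(name) === 'visiting') {
      throw new Error(`Circular dependency at "${name}"`);
    }
    const project = graph[name];
    if (!project) throw new Error(`Unknown project "${name}"`);

    state.set(name, 'visiting');
    for (const dep of project.implicitDependencies) visit(dep);
    state.set(name, 'done');
    order.push(name);
  };

  for (const name of Object.keys(graph)) visit(name);
  return order;
}

console.log(executionOrder(projects)); // [ 'api', 'app', 'app-e2e' ]
```

Whatever order the projects are declared in, APP-E2E always comes last, which is the property the E2E target relies on.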

Also, the complete E2E flow requires build artifacts to be ready before the test starts, so we make that an explicit requirement of the test target.

Once ready, run the npm test command to see the execution sequence.

As we can see, the projects that APP-E2E depends on are tested first. If they pass, the build artifacts are prepared and the complete E2E flow verification is performed. All is good for now.

The next step is to describe the basic verification scenarios: store users in a real database and show them on the user page. Most importantly, it should be a complete E2E test (from the API call to storage in the database).

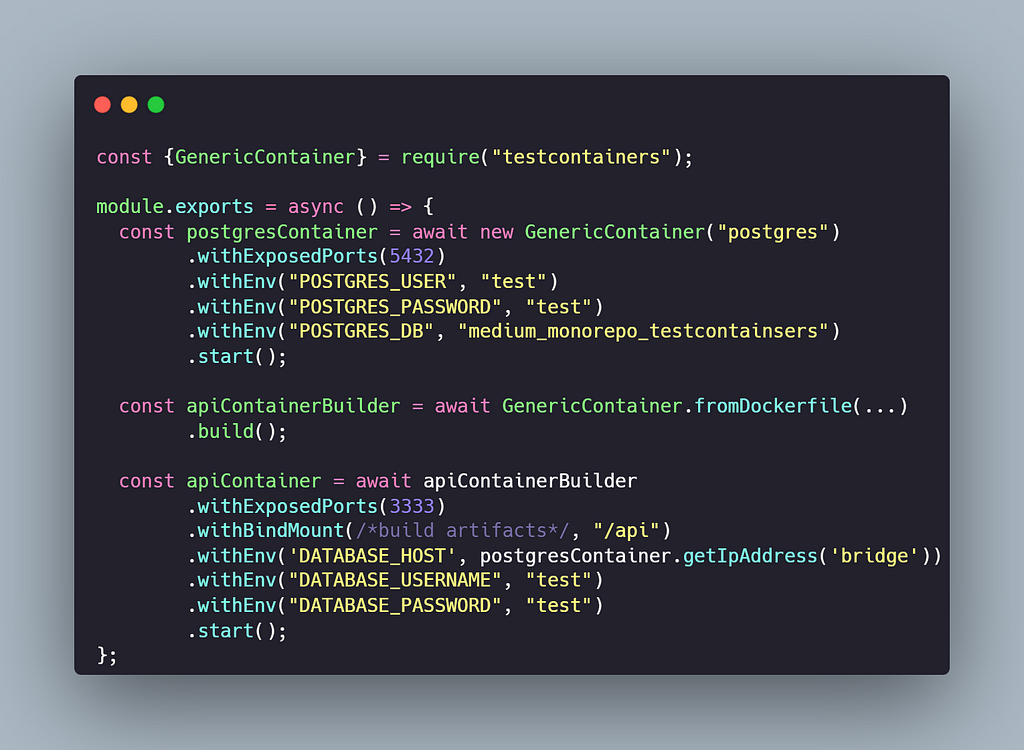

For this purpose, we can use Testcontainers, a utility that provisions Docker containers as part of a single test execution. The provisioning can run as a test pre-initialization phase in Jest.
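One way to hook such a pre-initialization phase into Jest is its `globalSetup` option, which runs a module once before all test suites. The sketch below is an assumption about how the e2e project could be configured, not the article's original file; the project name and file paths are hypothetical.

```typescript
// jest.config.ts for the e2e project (name and file paths are hypothetical)
const config = {
  displayName: 'app-e2e',
  testEnvironment: 'node',
  // Runs once before all test suites: a place to start containers.
  globalSetup: '<rootDir>/src/global-setup.ts',
  // Runs once after all test suites: a place to stop them.
  globalTeardown: '<rootDir>/src/global-teardown.ts',
  // Container-backed tests can be slow; allow two minutes per test.
  testTimeout: 120_000,
};

export default config;
```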

The setup script then provisions the required components.

Looking closer, we can see that the boot script does the following:

- starts the PostgreSQL container using the official “postgres” image

- builds “ApiContainer” from the local Dockerfile and then starts it

- passes the Postgres access info to the service container through environment variables
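The last of these steps amounts to building an environment map for the API container. Below is a minimal sketch of that step only. The `PostgresAccess` shape, the `buildApiEnvironment` helper, and the variable names are all hypothetical; the real names are whatever the API service reads at startup.

```typescript
// Connection details as seen from inside the Docker network (hypothetical shape).
interface PostgresAccess {
  host: string; // network alias of the Postgres container, not "localhost"
  port: number; // container-internal port, not the port mapped to the host
  user: string;
  password: string;
  database: string;
}

// Hypothetical variable names; use whatever the API service actually reads.
function buildApiEnvironment(pg: PostgresAccess): Record<string, string> {
  return {
    DB_HOST: pg.host,
    DB_PORT: String(pg.port), // environment variables are always strings
    DB_USER: pg.user,
    DB_PASSWORD: pg.password,
    DB_NAME: pg.database,
  };
}

const apiEnv = buildApiEnvironment({
  host: 'postgres',
  port: 5432,
  user: 'test',
  password: 'test',
  database: 'users',
});

console.log(apiEnv);
```

With the Node.js Testcontainers library, a map like this could be handed to the API container through `withEnvironment` before it is started. Note that when both containers share a Docker network, the API reaches Postgres through its network alias and internal port rather than through the host-mapped port.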

The final step is to cover the user-management scenario with an E2E test.

The test is self-explanatory:

- the service is started as a container and we configure the request object

- we store a couple of users by calling the API endpoints and check that each operation succeeds

- finally, we check that all stored users can be read back from persistent storage (Postgres)

The next step is to cover the UI part and check that the stored users are rendered successfully. Under the hood, this verifies that:

- the APP service is up and running as a Docker container instance

- the APP service is properly configured to access the API service (which is also started as a Docker container instance)

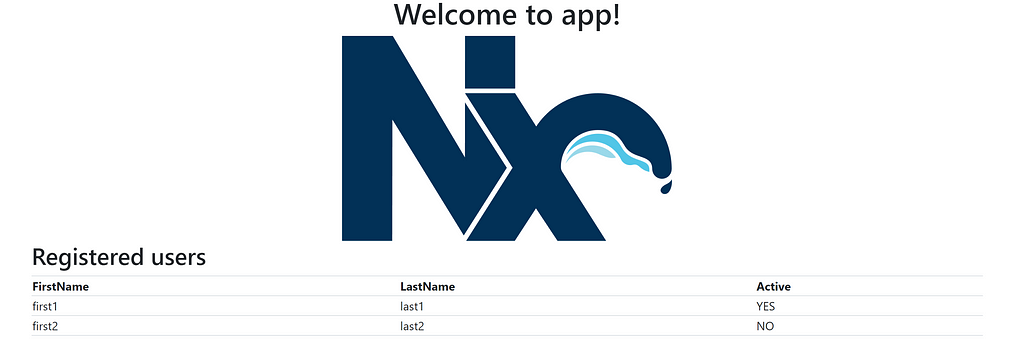

Let's run the test and check the result by navigating directly to the URL of the provisioned Docker instance (e.g. http://localhost:49164/ in my case).

After the test execution, the page displays all users. They were requested from the live API service, which read them from the live Postgres database. And all of this was checked in complete isolation as part of a test run, without physical promotion to live environments.

This solution makes it possible to perform much more robust and complete verification before even deploying services into the cluster, and to use as many live dependencies as we need during test runs.

If you enjoyed reading this post, please take a look at other posts related to monorepo design and investigations:

- Structuring complex projects

- Mutation testing as a more robust check of dependent libraries

- What I was doing wrong — dependency management and monorepo

Testing complex mono projects was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

Vadym Barylo | Sciencx (2022-07-21T18:44:25+00:00) Testing complex mono projects. Retrieved from https://www.scien.cx/2022/07/21/testing-complex-mono-projects/
