This content originally appeared on DEV Community 👩💻👨💻 and was authored by Kevin Wan

In stock markets, a circuit breaker is a mechanism that suspends trading for a period of time when price fluctuations reach a preset threshold during trading hours. It works like an electrical circuit breaker that trips when the current is too high, hence the name. Its purpose is to contain systemic risk: it gives the market time to calm down, keeps panic from spreading into market-wide volatility, and thereby helps prevent large-scale price declines.

Distributed systems need a similar mechanism. A circuit breaker is usually configured on the client (caller) side. When the client sends requests to a server and the server's errors keep increasing, the circuit breaker may trip. Once it has tripped, the client no longer sends requests to the server but rejects them locally, which protects the server from overload. The server here may be an RPC service, an HTTP service, or a dependency such as MySQL or Redis. Note that circuit breaking is a lossy mechanism, so it usually needs to be paired with a degradation (fallback) strategy for the time the breaker is open.

Circuit Breaker Principle

Modern microservice architectures are distributed by nature, and a whole system can consist of a very large number of microservices. Services invoke each other and form complex call chains. If one service in a chain is unstable, the failure may cascade until the whole chain hangs. We therefore need to apply circuit breaking and degradation to unstable dependencies, temporarily cutting off calls to them so that a local instability does not turn into an avalanche across the whole distributed system.

Put simply, a circuit breaker acts as a proxy in front of a service that is prone to failures. The proxy records the number of errors in recent calls and uses that to decide whether to let the next call proceed or to return an error immediately.

The circuit breaker maintains a state machine internally; the transitions between its states are shown in the diagram below.

The circuit breaker has three states.

- Closed state: this is the initial state. The breaker keeps a failure counter and increments it whenever a call fails. If the number of recent failures within a given time window exceeds the allowed threshold, the breaker switches to the Open state and starts a timeout clock. When the timeout expires, the breaker switches to the Half-Open state; the timeout gives the system a chance to fix the error that caused the calls to fail and return to normal operation. Because the error count in the Closed state is time-based, an occasional error does not trip the breaker. The trip condition can also be based on the number of consecutive failures.

- Open state: In this state, the client request returns an immediate error response without invoking the server side.

- Half-Open state: the breaker allows a limited number of client calls through to the server. If these calls succeed, the error that caused the earlier failures can be assumed to be fixed, so the breaker switches to the Closed state and resets the error counter. If a call fails, the breaker returns to the Open state and restarts the timeout clock. The Half-Open state effectively prevents a recovering service from being hit again by a sudden influx of requests.

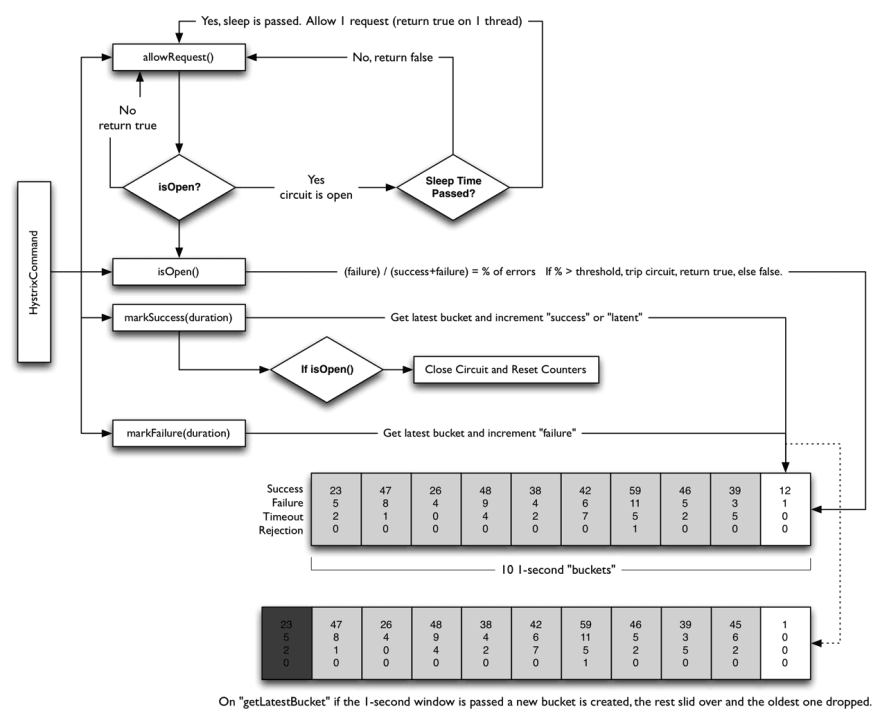
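The three states above can be illustrated with a deliberately small sketch. It uses the consecutive-failure variant mentioned in the Closed state description, is not safe for concurrent use, and every name in it (simpleBreaker, maxFailures, cooling, runDemo) is hypothetical and chosen only for illustration; it is not the go-zero or Hystrix implementation.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

type State int

const (
	Closed State = iota
	Open
	HalfOpen
)

var errOpen = errors.New("circuit breaker is open")

// simpleBreaker is a hypothetical, single-goroutine breaker that trips on
// consecutive failures. It only illustrates the state transitions.
type simpleBreaker struct {
	state       State
	failures    int              // consecutive failures seen while Closed
	maxFailures int              // threshold that trips the breaker
	cooling     time.Duration    // how long to stay Open before probing
	openedAt    time.Time        // when the breaker last switched to Open
	now         func() time.Time // injectable clock
}

func (b *simpleBreaker) Do(req func() error) error {
	if b.state == Open {
		if b.now().Sub(b.openedAt) < b.cooling {
			return errOpen // still cooling down: reject locally
		}
		b.state = HalfOpen // cooling period over: let a probe through
	}
	err := req()
	switch {
	case err == nil:
		// A success closes the breaker and resets the counter.
		b.state, b.failures = Closed, 0
	case b.state == HalfOpen:
		// The probe failed: back to Open, restart the cooling clock.
		b.state, b.openedAt = Open, b.now()
	default:
		b.failures++
		if b.failures >= b.maxFailures {
			b.state, b.openedAt = Open, b.now()
		}
	}
	return err
}

// runDemo drives the breaker through Closed -> Open -> HalfOpen -> Closed
// with a fake clock and returns the state observed after each step.
func runDemo() []State {
	clock := time.Unix(0, 0)
	b := &simpleBreaker{maxFailures: 3, cooling: 5 * time.Second, now: func() time.Time { return clock }}
	fail := func() error { return errors.New("boom") }
	ok := func() error { return nil }

	var seen []State
	for i := 0; i < 3; i++ {
		b.Do(fail)
		seen = append(seen, b.state)
	}
	b.Do(ok) // rejected locally, the server is not called
	seen = append(seen, b.state)
	clock = clock.Add(6 * time.Second) // cooling time has passed
	b.Do(ok)                           // probe succeeds
	seen = append(seen, b.state)
	return seen
}

func main() {
	fmt.Println(runDemo())
}
```

The clock is injected as a function so that the cooling period can be exercised without sleeping.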

The following diagram shows the logic of the circuit breaker implementation in Netflix's open source project Hystrix.

From this flowchart, we can see that:

- When a request arrives, allowRequest() first checks whether the circuit is open. If it is not, the request is let through. If it is, the breaker checks whether the circuit breaker time slice has elapsed; if so, the request is also let through, otherwise an error is returned immediately.

- Each call reports its result through markSuccess(duration) or markFailure(duration), which count how many calls succeeded or failed within a given duration.

- isOpen() decides whether to trip the breaker by computing the current error rate, failure / (success + failure). If the rate is above a threshold, the circuit is open; otherwise it is closed.

- Hystrix keeps an in-memory data structure that records the request results of each time slice; entries older than the window length are discarded.
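The last point, per-slice statistics whose expired entries are discarded, can be sketched as a small ring of time buckets. This is a simplified illustration, not Hystrix's or go-zero's data structure; the names rollingWindow, Record, ErrorRate and demoWindow are hypothetical, and the sketch is not safe for concurrent use.

```go
package main

import (
	"fmt"
	"time"
)

// bucket holds the counters for one time slice.
type bucket struct {
	total, failures int64
}

// rollingWindow is a hypothetical sliding window with a fixed number of
// time buckets; expired buckets are cleared lazily on the next access.
type rollingWindow struct {
	buckets  []bucket
	interval time.Duration // length of one bucket
	start    time.Time     // origin of the window's time axis
	lastIdx  int64         // absolute index of the most recently used bucket
}

func newRollingWindow(size int, interval time.Duration, start time.Time) *rollingWindow {
	return &rollingWindow{buckets: make([]bucket, size), interval: interval, start: start}
}

// advance clears every bucket that expired between the last access and now.
func (w *rollingWindow) advance(now time.Time) {
	size := int64(len(w.buckets))
	idx := int64(now.Sub(w.start) / w.interval)
	steps := idx - w.lastIdx
	if steps > size {
		steps = size // the whole window has expired
	}
	for i := int64(1); i <= steps; i++ {
		w.buckets[(w.lastIdx+i)%size] = bucket{}
	}
	if idx > w.lastIdx {
		w.lastIdx = idx
	}
}

// Record adds one request result to the bucket for the current time.
func (w *rollingWindow) Record(now time.Time, failed bool) {
	w.advance(now)
	b := &w.buckets[w.lastIdx%int64(len(w.buckets))]
	b.total++
	if failed {
		b.failures++
	}
}

// ErrorRate returns failure / (success + failure) over the live buckets.
func (w *rollingWindow) ErrorRate(now time.Time) float64 {
	w.advance(now)
	var total, failures int64
	for _, b := range w.buckets {
		total += b.total
		failures += b.failures
	}
	if total == 0 {
		return 0
	}
	return float64(failures) / float64(total)
}

// demoWindow records one failure and three successes, then reads the error
// rate before and after the bucket holding the failure has expired.
func demoWindow() (before, after float64) {
	start := time.Unix(0, 0)
	w := newRollingWindow(4, time.Second, start)
	w.Record(start, true)
	w.Record(start, false)
	w.Record(start.Add(time.Second), false)
	w.Record(start.Add(time.Second), false)
	before = w.ErrorRate(start.Add(time.Second))
	after = w.ErrorRate(start.Add(4 * time.Second))
	return before, after
}

func main() {
	before, after := demoWindow()
	fmt.Println(before, after)
}
```

In this demo the failure recorded in the first second stops influencing the error rate once the window has moved past its bucket.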

Circuit breaker implementation

Now that we understand how circuit breaking works, let's implement a circuit breaker ourselves.

Those familiar with go-zero know that its built-in circuit breaker does not work the way described above. Instead, it follows the Google SRE book and adopts an adaptive circuit breaking mechanism. What are the benefits of this adaptive approach? A later section compares the two mechanisms.

The implementation below follows the three-state principle introduced above.

Code path: go-zero/core/breaker/hystrixbreaker.go

The circuit breaker starts in the Closed state. Once it opens, the default cooling time is 5 seconds. In the HalfOpen state, the default probe interval is 200 milliseconds. By default, rateTripFunc decides whether to trip the breaker: it trips when the number of samples is at least 200 and the error rate is above 50%. A sliding window records the total number of requests and their outcomes.

// newHystrixBreaker returns a breaker in the Closed state with default settings.
func newHystrixBreaker() *hystrixBreaker {
	bucketDuration := time.Duration(int64(window) / int64(buckets))
	stat := collection.NewRollingWindow(buckets, bucketDuration)
	return &hystrixBreaker{
		state:          Closed,
		coolingTimeout: defaultCoolingTimeout,
		detectTimeout:  defaultDetectTimeout,
		tripFunc:       rateTripFunc(defaultErrRate, defaultMinSample),
		stat:           stat,
		now:            time.Now, // clock function, called as b.now()
	}
}

// rateTripFunc trips when at least minSamples requests were recorded and the
// error rate exceeds rate.
func rateTripFunc(rate float64, minSamples int64) TripFunc {
	return func(rollingWindow *collection.RollingWindow) bool {
		var total, accepts int64
		rollingWindow.Reduce(func(b *collection.Bucket) {
			total += b.Count
			// markSuccess adds 1 and markFailure adds 0, so Sum counts successes.
			accepts += int64(b.Sum)
		})
		if total < minSamples {
			return false
		}
		errRate := float64(total-accepts) / float64(total)
		return errRate > rate
	}
}
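To make the default rule concrete (at least 200 samples and an error rate above 50%), here is a minimal sketch that applies the same rule to plain counters. The function shouldTrip is hypothetical and exists only for this illustration.

```go
package main

import "fmt"

// shouldTrip applies the rule described above to plain counters: trip only
// when enough samples were collected and the error rate exceeds rate.
func shouldTrip(total, errs, minSamples int64, rate float64) bool {
	if total == 0 || total < minSamples {
		return false
	}
	return float64(errs)/float64(total) > rate
}

func main() {
	// Too few samples: a 90% error rate still does not trip the breaker.
	fmt.Println(shouldTrip(100, 90, 200, 0.5))
	// Enough samples, but exactly 50% is not "greater than 50%".
	fmt.Println(shouldTrip(200, 100, 200, 0.5))
	// Enough samples and more than half of them failed.
	fmt.Println(shouldTrip(200, 101, 200, 0.5))
}
```

The minimum sample count keeps a few early failures from opening the breaker before the error rate is statistically meaningful.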

doReq is called for each request. It first calls accept() to determine whether the request should be rejected; if so, it returns the circuit breaker error directly (or the result of fallback, if one is provided). Otherwise it executes req() to actually call the server, and then calls b.markSuccess() or b.markFailure() depending on whether the result is acceptable.

func (b *hystrixBreaker) doReq(req func() error, fallback func(error) error, acceptable Acceptable) error {
	// Reject locally when the breaker does not accept the request.
	if err := b.accept(); err != nil {
		if fallback != nil {
			return fallback(err)
		}
		return err
	}
	// A panic inside req counts as a failure and is re-raised afterwards.
	defer func() {
		if e := recover(); e != nil {
			b.markFailure()
			panic(e)
		}
	}()
	err := req()
	if acceptable(err) {
		b.markSuccess()
	} else {
		b.markFailure()
	}
	return err
}

In accept(), the breaker first reads its current state. In the Closed state it returns immediately, so the request is processed normally.

In the Open state, it checks whether the cooling time has expired. If not, it returns the circuit breaker error and rejects the request. If it has expired, the state changes to HalfOpen. The main purpose of the cooling time is to give the server time to recover, rather than letting a continuous stream of requests knock it over again.

In the HalfOpen state, it checks the probe interval first to avoid probing too often; the default probe interval is 200 milliseconds.

func (b *hystrixBreaker) accept() error {
	b.mux.Lock()
	switch b.getState() {
	case Open:
		now := b.now()
		// Still cooling down: reject the request.
		if b.openTime.Add(b.coolingTimeout).After(now) {
			b.mux.Unlock()
			return ErrServiceUnavailable
		}
		// Cooling time expired: switch to HalfOpen and let this request probe.
		if b.getState() == Open {
			atomic.StoreInt32((*int32)(&b.state), int32(HalfOpen))
			atomic.StoreInt32(&b.halfopenSuccess, 0)
			b.lastRetryTime = now
			b.mux.Unlock()
		} else {
			b.mux.Unlock()
			return ErrServiceUnavailable
		}
	case HalfOpen:
		now := b.now()
		// Allow at most one probe per detectTimeout.
		if b.lastRetryTime.Add(b.detectTimeout).After(now) {
			b.mux.Unlock()
			return ErrServiceUnavailable
		}
		b.lastRetryTime = now
		b.mux.Unlock()
	case Closed:
		b.mux.Unlock()
	}
	return nil
}

If the request returns normally, markSuccess() is called. If the circuit breaker is in the HalfOpen state, it checks whether the number of successful probes so far is greater than the default number of required successful probes; if so, the breaker switches to the Closed state.

func (b *hystrixBreaker) markSuccess() {
	b.mux.Lock()
	switch b.getState() {
	case Open:
		b.mux.Unlock()
	case HalfOpen:
		// Count successful probes; close the breaker once there are enough.
		atomic.AddInt32(&b.halfopenSuccess, 1)
		if atomic.LoadInt32(&b.halfopenSuccess) > defaultHalfOpenSuccesss {
			atomic.StoreInt32((*int32)(&b.state), int32(Closed))
			// Reset the sliding window so old failures do not count again.
			b.stat.Reduce(func(b *collection.Bucket) {
				b.Count = 0
				b.Sum = 0
			})
		}
		b.mux.Unlock()
	case Closed:
		b.stat.Add(1)
		b.mux.Unlock()
	}
}

In markFailure(), if the current state is Closed, tripFunc is executed to determine whether the trip condition is met; if it is, the state changes to Open. A failure in the HalfOpen state sends the breaker straight back to Open.

func (b *hystrixBreaker) markFailure() {
	b.mux.Lock()
	// Adding 0 increases the request count without increasing the sum.
	b.stat.Add(0)
	switch b.getState() {
	case Open:
		b.mux.Unlock()
	case HalfOpen:
		// A failed probe reopens the breaker and restarts the cooling time.
		b.openTime = b.now()
		atomic.StoreInt32((*int32)(&b.state), int32(Open))
		b.mux.Unlock()
	case Closed:
		if b.tripFunc != nil && b.tripFunc(b.stat) {
			b.openTime = b.now()
			atomic.StoreInt32((*int32)(&b.state), int32(Open))
		}
		b.mux.Unlock()
	}
}

The overall logic of this circuit breaker is relatively simple, and reading the code should be enough to understand it. This implementation was written in a hurry and may contain bugs; if you find one, feel free to contact me so I can fix it.

hystrixBreaker and googleBreaker comparison

Next, let's compare the behavior of the two circuit breakers.

This part of the sample code is under: go-zero/example

The user-api and user-rpc services are defined respectively. user-api acts as a client to request user-rpc, and user-rpc acts as a server to respond to client requests.

In the example method of user-rpc, there is a 20% chance of returning an error.

func (l *UserInfoLogic) UserInfo(in *user.UserInfoRequest) (*user.UserInfoResponse, error) {
	ts := time.Now().UnixMilli()
	if in.UserId == int64(1) {
		// Roughly one in five millisecond timestamps satisfies ts%5 == 1,
		// so about 20% of the calls fail.
		if ts%5 == 1 {
			return nil, status.Error(codes.Internal, "internal error")
		}
		return &user.UserInfoResponse{
			UserId: 1,
			Name:   "jack",
		}, nil
	}
	return &user.UserInfoResponse{}, nil
}

In the example method of user-api, we send requests to user-rpc in a loop and use a Prometheus metric to record the number of normal requests.

var metricSuccessReqTotal = metric.NewCounterVec(&metric.CounterVecOpts{
	Namespace: "circuit_breaker",
	Subsystem: "requests",
	Name:      "req_total",
	Help:      "test for circuit breaker",
	Labels:    []string{"method"},
})

func (l *UserInfoLogic) UserInfo() (resp *types.UserInfoResponse, err error) {
	// Send requests in an endless loop; this method never returns normally.
	for {
		_, err := l.svcCtx.UserRPC.UserInfo(l.ctx, &user.UserInfoRequest{UserId: int64(1)})
		// Requests rejected by the circuit breaker are not counted.
		if err != nil && err == breaker.ErrServiceUnavailable {
			fmt.Println(err)
			continue
		}
		metricSuccessReqTotal.Inc("UserInfo")
	}
	return &types.UserInfoResponse{}, nil
}

Start both services and then observe the number of normal requests under both fusing strategies.

The normal request rate for the googleBreaker is shown in the following figure.

The normal request rate for the hystrixBreaker is shown in the following graph.

The results show that go-zero's built-in googleBreaker lets more normal requests through than hystrixBreaker. The reason is that hystrixBreaker maintains three states. After entering the Open state, it uses a cooling clock to avoid putting further pressure on the server, and no requests are let through during that time. Moreover, after the transition from HalfOpen to Closed, a large number of requests hit the server again instantly; the server has likely not fully recovered yet, so the breaker trips to the Open state again. googleBreaker, in contrast, is an adaptive strategy that needs no explicit states. It does not take the all-or-nothing approach of hystrixBreaker but lets through as many requests as possible, which is exactly what we want, because circuit breaking is lossy for the client. Let's look at how go-zero's built-in googleBreaker works.

Source code interpretation

The googleBreaker code is located at: go-zero/core/breaker/googlebreaker.go

doReq() calls accept() to determine whether the circuit breaker is triggered. If it is, an error is returned; if a fallback function is defined, it is invoked instead, for example to return degraded data. If the request is acceptable, markSuccess() adds 1 to both the total number of requests and the number of normal requests; if it fails, markFailure() adds 1 only to the total number of requests.

func (b *googleBreaker) doReq(req func() error, fallback func(err error) error, acceptable Acceptable) error {
	// Reject locally when the breaker decides to drop the request.
	if err := b.accept(); err != nil {
		if fallback != nil {
			return fallback(err)
		}
		return err
	}
	// A panic inside req counts as a failure and is re-raised afterwards.
	defer func() {
		if e := recover(); e != nil {
			b.markFailure()
			panic(e)
		}
	}()
	err := req()
	if acceptable(err) {
		b.markSuccess()
	} else {
		b.markFailure()
	}
	return err
}

accept() decides by calculation whether the circuit breaker is triggered.

The algorithm tracks two request counts:

- Total number of requests (requests): the number of requests initiated by the caller

- Number of requests normally processed (accepts): the number of requests processed normally by the server

Normally these two values are equal. When the callee starts rejecting requests or returning errors, accepts gradually falls below requests. The caller keeps sending requests until requests = K * accepts. Once this limit is exceeded, the circuit breaker opens, and new requests are discarded locally with a certain probability, returning an error directly. The probability is calculated as follows:

max(0, (requests - K * accepts) / (requests + 1))

Changing K (the multiplier) adjusts the sensitivity of the circuit breaker: decreasing it makes the adaptive algorithm more sensitive, and increasing it makes the algorithm less sensitive. For example, if the caller's limit is lowered from requests = 2 * accepts to requests = 1.1 * accepts, the caller starts dropping requests locally as soon as the server rejects roughly one request for every ten it accepts.
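A small worked example may help to see how the formula reacts to K. The sketch below evaluates max(0, (requests - K * accepts) / (requests + 1)) for a fixed 100 requests and a falling number of accepts; the function name dropRatio and the sample values are chosen only for illustration.

```go
package main

import (
	"fmt"
	"math"
)

// dropRatio computes max(0, (requests - k*accepts) / (requests + 1)),
// the probability of rejecting a new request locally.
func dropRatio(requests, accepts int64, k float64) float64 {
	return math.Max(0, (float64(requests)-k*float64(accepts))/float64(requests+1))
}

func main() {
	for _, k := range []float64{2, 1.1} {
		for _, accepts := range []int64{100, 80, 40, 0} {
			fmt.Printf("k=%.1f requests=100 accepts=%d drop=%.3f\n",
				k, accepts, dropRatio(100, accepts, k))
		}
	}
}
```

With K = 2 the caller tolerates 80 accepts out of 100 requests without dropping anything, while with K = 1.1 the same numbers already yield a drop probability of about 12%. When the server accepts nothing, the probability approaches but never reaches 1, so a few requests still get through to detect recovery.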

func (b *googleBreaker) accept() error {
	accepts, total := b.history()
	weightedAccepts := b.k * float64(accepts)
	// https://landing.google.com/sre/sre-book/chapters/handling-overload/#eq2101
	// protection is subtracted from the request count before the comparison.
	dropRatio := math.Max(0, (float64(total-protection)-weightedAccepts)/float64(total+1))
	if dropRatio <= 0 {
		return nil
	}
	// Reject this request with probability dropRatio.
	if b.proba.TrueOnProba(dropRatio) {
		return ErrServiceUnavailable
	}
	return nil
}
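The last step of accept() turns the drop ratio into a per-request decision. The following is a minimal sketch of such a probabilistic rejection, assuming that TrueOnProba returns true with the given probability; the helper names rejectWithProbability and countRejected are hypothetical, and the sketch is not go-zero's implementation.

```go
package main

import (
	"fmt"
	"math/rand"
)

// rejectWithProbability returns true with probability p by comparing p
// against a uniform random number in [0, 1).
func rejectWithProbability(r *rand.Rand, p float64) bool {
	return r.Float64() < p
}

// countRejected simulates n requests against a fixed drop ratio p and
// returns how many of them would be rejected locally.
func countRejected(seed int64, n int, p float64) int {
	r := rand.New(rand.NewSource(seed))
	rejected := 0
	for i := 0; i < n; i++ {
		if rejectWithProbability(r, p) {
			rejected++
		}
	}
	return rejected
}

func main() {
	// With a drop ratio of 0.2, roughly one request in five is rejected.
	fmt.Println(countRejected(1, 10000, 0.2))
}
```

Because each request is decided independently, some requests always reach the server while the drop ratio is below 1, which is how the breaker notices that the server has recovered without a separate Half-Open state.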

history() computes the total number of requests and the number of normally processed requests from the sliding window.

func (b *googleBreaker) history() (accepts, total int64) {
	// Sum holds the accepted requests, Count holds all requests.
	b.stat.Reduce(func(b *collection.Bucket) {
		accepts += int64(b.Sum)
		total += b.Count
	})
	return
}

Conclusion

This article introduced the circuit breaker, a client-side throttling mechanism in service governance. The Hystrix-style strategy has three states, Open, HalfOpen and Closed, and the article described when the breaker switches between them; reread that part as needed, or better yet, implement it yourself. The circuit breaker built into go-zero has no explicit state. If you insist on describing it in terms of states, there are only two, open and closed. It adaptively discards requests based on the current success rate, which makes it a more flexible strategy: the drop probability changes with the number of requests processed normally. The more requests are processed normally, the lower the probability of dropping a request, and vice versa.

Although the principle behind circuit breakers is the same, different implementations can behave differently, so choose the strategy that fits your business scenario in production.

I hope this article will be helpful to you.

Code for this article: https://github.com/zhoushuguang/go-zero/tree/circuit-breaker

Reference

https://martinfowler.com/bliki/CircuitBreaker.html

https://github.com/Netflix/Hystrix/wiki/How-it-Works

Project address

https://github.com/zeromicro/go-zero

Welcome to use go-zero and star to support us!


Kevin Wan | Sciencx (2022-09-12T13:27:53+00:00) Circuit Breaker Explained. Retrieved from https://www.scien.cx/2022/09/12/circuit-breaker-explained/
