This content originally appeared on Level Up Coding - Medium and was authored by Joshua Salako

Image Segmentation — An Overview On How Its Algorithms Identify Objects In An Image

Table of contents

- Introduction

- Applications of image segmentation

- Image segmentation algorithms (with a sample implementation of Otsu’s algorithm in Python)

- Types of image segmentation

- Endnotes

This article discusses how computer vision uses image segmentation to identify objects in images.

Introduction

A few weeks ago, I discovered a cool filter that enlarges my mouth to almost triple its real size! I also found other cool filters: one that adds sunglasses and another that shows me what I would look like as a toddler! Isn’t it magical how the app’s AI locates, with very high precision, the regions of the video where different parts of my body are, so that it can replace, add to, edit, or enlarge them accordingly? Well, it isn’t magic: the algorithm processes the live video frames and segments each one into the different parts/objects that it identifies.

Image segmentation is a technique in digital image processing. It involves the process of partitioning an image into multiple parts or regions based on the characteristics of the pixels of the image.

The characteristics of the pixels include their value (which depends on the intensity of light present and, in an 8-bit image, ranges from 0 to 255) and color. Segmentation is done by looking for abrupt discontinuities in neighboring pixel values or, conversely, by grouping neighboring pixels with similar values. This process is performed automatically by algorithms written for the purpose. The partitioned regions are referred to as image objects. In the case above, my eyes, nose, mouth, etc. are the image objects.

We could say that the input to the algorithm is an image while the output is object attributes.

The success or failure of a computer vision task depends on the accuracy of the algorithm during segmentation. An algorithm is expected to stop segmenting once the objects or regions of interest for the application at hand have been detected.

For instance, the filter we discussed earlier is interested in objects on the face, hence it tries as much as possible to detect those rather than detecting objects outside the face.

Applications of image segmentation

Image segmentation is applied extensively across various fields to perform tasks like:

- Computer/Machine Vision

- Medical Imaging, as in:

  1. Locate tumors and other pathologies

  2. Measure tissue volumes

  3. Diagnosis, the study of anatomical structure

  4. Surgery planning

  5. Virtual surgery simulation

  6. Intra-surgery navigation

- Recognition tasks, as in:

  1. Face recognition

  2. Fingerprint recognition

  3. Iris recognition

- Object detection

- Traffic control system

- Video surveillance

- Automated driving systems

- Satellite imaging and remote sensing

Image segmentation algorithms

Image segmentation is carried out by algorithms. Various algorithms have been developed over the years, and each uses a different approach to segment images. How well an algorithm performs on a task depends on the path it follows to partition the image, so some algorithms perform much better than others on a particular task and worse on another. Below is a list of basic image segmentation algorithms and how they work:

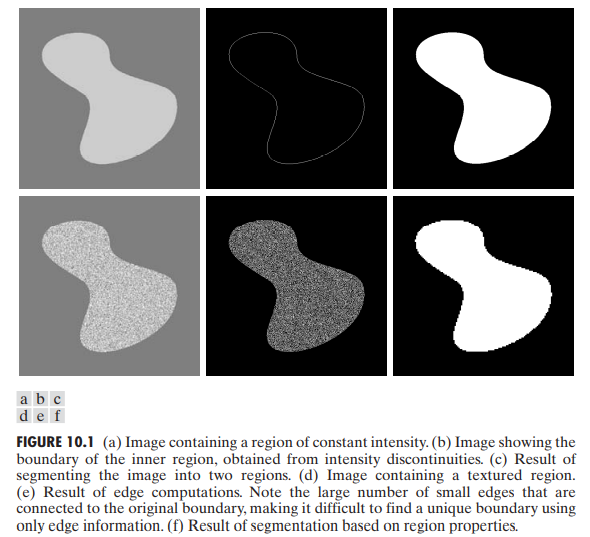

Edge-based image segmentation algorithms

These algorithms classify each pixel of an image as either an edge or a non-edge pixel. Edge (boundary) pixels are detected from abrupt discontinuities in pixel values across the image.

Figure 10.1 (a), (b), and (c) illustrate edge-based segmentation. Figure 10.1 (a) shows an image of a region of constant intensity superimposed on a darker background, also of constant intensity. These two regions comprise the overall image region. Figure 10.1 (b) shows the result of computing the boundary of the inner region based on intensity discontinuities. Points on the inside and outside of the boundary are black (zero) because there are no discontinuities in intensity in those regions. To segment the image, we assign one level (say, white) to the pixels on, or interior to, the boundary, and another level (say, black) to all points exterior to the boundary. Figure 10.1 (c) shows the result of such a procedure. We see that conditions (a) through (c) stated at the beginning of this section are satisfied by this result.
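The procedure described for Figure 10.1 (a) to (c) can be sketched in a few lines. This is only an illustration under stated assumptions: the 8x8 synthetic image, the helper names (`make_image`, `edge_map`, `fill_rows`) and the `min_jump` value are made up for this example, plain Python lists are used instead of NumPy/OpenCV so that it runs without dependencies, and the row-wise fill only works for a convex region such as this square.

```python
# Sketch: edge-based segmentation of a bright square on a darker background.
# All names and values here are illustrative assumptions, not from a library.

def make_image(size=8, lo=50, hi=200):
    """Build a constant-intensity square (hi) on a darker constant background (lo)."""
    img = [[lo] * size for _ in range(size)]
    for r in range(2, size - 2):
        for c in range(2, size - 2):
            img[r][c] = hi
    return img

def edge_map(img, min_jump=50):
    """Mark a pixel as an edge (1) if it is brighter than a 4-neighbor by at least min_jump."""
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < h and 0 <= nc < w and img[r][c] - img[nr][nc] >= min_jump:
                    edges[r][c] = 1
    return edges

def fill_rows(edges):
    """Set pixels on or between the outermost edge pixels of each row to white (255)."""
    out = []
    for row in edges:
        cols = [c for c, e in enumerate(row) if e]
        if cols:
            out.append([255 if cols[0] <= c <= cols[-1] else 0 for c in range(len(row))])
        else:
            out.append([0] * len(row))
    return out

img = make_image()
edges = edge_map(img)          # boundary only; interior and background stay 0
segmented = fill_rows(edges)   # white on/inside the boundary, black outside
```

The interior of the square produces no edge pixels because there is no discontinuity there, which is why a separate fill step is needed to turn the boundary into a region.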

Region-based image segmentation algorithms

This segmentation groups pixels into regions based on a set of predefined criteria. Figure 10.1 (d), (e), and (f) illustrate region-based segmentation. Figure 10.1 (d) shows another image, with a textured region superimposed on a darker background of constant intensity. Applying edge-based segmentation to this image makes it difficult to identify a unique boundary, because the texture produces numerous spurious changes in intensity and many of these nonzero intensity changes are connected to the boundary. Edge-based segmentation is therefore not a suitable approach here. However, the pixels of the textured region can be clustered together because of their similarity in value, as in Figure 10.1 (f).
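One common way to cluster similar pixels into a region is region growing from a seed pixel. The sketch below is an illustration, not the method used to produce Figure 10.1: the function name `grow_region`, the seed position, the tolerance `tol`, and the tiny synthetic "textured" image are all assumptions chosen for the example.

```python
from collections import deque

def grow_region(img, seed, tol):
    """Grow a region from seed, adding 4-neighbors whose value is within tol of the seed value."""
    h, w = len(img), len(img[0])
    seed_val = img[seed[0]][seed[1]]
    mask = [[0] * w for _ in range(h)]
    mask[seed[0]][seed[1]] = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w and not mask[nr][nc]
                    and abs(img[nr][nc] - seed_val) <= tol):
                mask[nr][nc] = 1
                queue.append((nr, nc))
    return mask

# Synthetic image: dark constant background (40) with a 4x4 "textured" patch
# whose pixels alternate between 180 and 220 in a checkerboard pattern.
img = [[40] * 6 for _ in range(6)]
for r in range(1, 5):
    for c in range(1, 5):
        img[r][c] = 180 if (r + c) % 2 == 0 else 220

# The similarity criterion (|value - seed value| <= 50) ignores the texture
# but still excludes the background.
mask = grow_region(img, seed=(2, 2), tol=50)
```

Inside the patch, neighboring pixels differ by 40, which an edge detector would report as many small edges; the similarity criterion treats them as one region.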

Region-based and edge-based segmentation are the basis of point, line, and edge detection in image analysis.

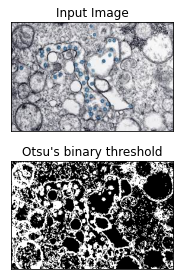

Otsu’s image segmentation algorithm

This algorithm uses a thresholding approach to segment objects in an image. Thresholding starts from a histogram of the distribution of pixel values across the image, from which a threshold value is computed. Otsu’s method chooses the threshold that best separates the histogram into two classes, that is, the value that maximizes the variance between the two classes. The threshold value is then compared to the image pixels. If a pixel value is less than the threshold value, it is set to 0 (black); if it is greater, it is set to 255 (white). Multiple thresholding involves computing more than one threshold value and then comparing each value with the image pixels.

Otsu’s image segmentation is a form of automatic thresholding and is not suitable for the analysis of noisy images. This principle is utilized in scanning documents, fingerprints, and recognizing patterns.
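To make the thresholding idea concrete, here is a minimal sketch of how an Otsu threshold could be computed directly from a histogram. It is a simplified illustration under assumptions, not OpenCV's implementation: the function name `otsu_threshold`, the flat list of 8-bit pixel values, and the sample data are made up for the example.

```python
def otsu_threshold(pixels):
    """Return the threshold t (0..255) that maximizes the between-class variance.

    Class 0 holds the pixels with value <= t, class 1 the pixels with value > t.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(i * hist[i] for i in range(256))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels in class 0 so far
    sum0 = 0    # sum of pixel values in class 0 so far
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue            # class 0 is still empty
        w1 = total - w0
        if w1 == 0:
            break               # class 1 is empty from here on
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mu0 - mu1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t

# Illustrative bimodal data: a dark group around 30 and a bright group around 200.
pixels = [28, 30, 32] * 20 + [198, 200, 202] * 15
t = otsu_threshold(pixels)

# Binarize: values above the threshold become white, the rest black.
binary = [255 if p > t else 0 for p in pixels]
```

Any threshold that falls in the empty gap between the two groups gives the same separation; this sketch simply keeps the first one it finds.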

# Otsu's image segmentation in python programming language

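# importing libraries

# google.colab is only available inside Google Colab and is not used below; skip this import elsewhere

from google.colab import files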

import numpy as np

import cv2

from matplotlib import pyplot as plt

#reading files

#The image to be imported is the PHIL ID #23354 (Transmission electron microscopic image of an isolate from the first U.S. case of COVID-19, formerly known as 2019-nCoV. The spherical viral particles, colorized blue, contain cross-section through the viral genome, seen as black dots)

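# cv2.imread returns the image in BGR channel order (or None if the file cannot be read)

img = cv2.imread(r'covid.png')

# matplotlib expects RGB, so this quick preview shows the red and blue channels swapped

plt.imshow(img)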

b,g,r = cv2.split(img)

rgb_img = cv2.merge([r,g,b])

gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

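# with THRESH_OTSU the threshold is computed automatically, so the 0 passed here is ignored; ret holds the computed value

ret, thresh = cv2.threshold(gray,0,255,cv2.THRESH_BINARY_INV+cv2.THRESH_OTSU)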

# noise removal

kernel = np.ones((2,2),np.uint8)

# opening = cv2.morphologyEx(thresh,cv2.MORPH_OPEN,kernel, iterations = 2)

closing = cv2.morphologyEx(thresh,cv2.MORPH_CLOSE,kernel, iterations = 2)

# sure background area

sure_bg = cv2.dilate(closing,kernel,iterations=3)

# Finding sure foreground area

dist_transform = cv2.distanceTransform(sure_bg,cv2.DIST_L2,3)

# Threshold

ret, sure_fg = cv2.threshold(dist_transform,0.1*dist_transform.max(),255,0)

# Finding unknown region

sure_fg = np.uint8(sure_fg)

unknown = cv2.subtract(sure_bg,sure_fg)

# Marker labelling

ret, markers = cv2.connectedComponents(sure_fg)

# Add one to all labels so that sure background is not 0, but 1

markers = markers+1

# Now, mark the region of unknown with zero

markers[unknown==255] = 0

markers = cv2.watershed(img,markers)

img[markers == -1] = [255,0,0]

plt.subplot(211),plt.imshow(rgb_img)

plt.title('Input Image'), plt.xticks([]), plt.yticks([])

plt.subplot(212),plt.imshow(thresh, 'gray')

plt.imsave(r'thresh.png',thresh)

plt.title("Otsu's binary threshold"), plt.xticks([]), plt.yticks([])

plt.tight_layout()

plt.show()

Output:

Clustering-based image segmentation algorithms

This approach uses machine-learning methods such as K-means, Fuzzy C-means, and improved K-means variants to partition an image.

When using the K-means algorithm, K points are selected as the initial cluster centers (centroids); this can be done manually or automatically by the algorithm. The distance from each image pixel to each centroid is then calculated, and each pixel is assigned to the centroid it is closest to. New centroids are computed by averaging all of the pixels in each cluster, and the assignment step is repeated until no pixel changes cluster. Medical imaging uses this technique to identify tissue types in images.
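The assign-and-average loop can be sketched on grayscale intensities as follows. This is a bare-bones illustration under assumptions: the function name `kmeans_1d`, the sample pixel values, and the manually chosen starting centroids are made up for the example, and a real pipeline would more likely cluster color vectors with a library implementation.

```python
def kmeans_1d(values, centroids, max_iter=100):
    """Cluster scalar pixel values with K-means; K is the number of starting centroids."""
    centroids = list(centroids)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each pixel goes to its closest centroid.
        new_labels = [
            min(range(len(centroids)), key=lambda k: abs(v - centroids[k]))
            for v in values
        ]
        if new_labels == labels:
            break  # no pixel changed cluster, so the algorithm has converged
        labels = new_labels
        # Update step: each centroid becomes the mean of its cluster.
        for k in range(len(centroids)):
            members = [v for v, lab in zip(values, labels) if lab == k]
            if members:  # keep the old centroid if a cluster is empty
                centroids[k] = sum(members) / len(members)
    return labels, centroids

# Illustrative pixel intensities: dark, bright, and mid-gray groups.
values = [12, 15, 10, 200, 210, 205, 100, 95]
labels, centroids = kmeans_1d(values, centroids=[0, 128, 255])
```

Each label is the index of the cluster a pixel ended up in, so reshaping the labels back to the image grid gives the segmentation map.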

Trainable segmentation algorithms

The aforementioned algorithms segment images using only pixel information such as color and value, which is not always enough. Trainable algorithms like neural networks, however, can learn segmentation knowledge from datasets of labeled pixels, i.e. they are trained on images in which the objects have already been identified. An image segmentation neural network can process small areas of an image to extract simple features such as edges, points, lines, etc.

Examples of trainable segmentation algorithms are Mask R-CNN, pulse-coupled neural networks (PCNNs), and Faster R-CNN. CNNs are a good approach for image segmentation, but training can take a long time if the dataset is huge. Clustering-based segmentation can require a lot of computation time. Edge-based segmentation works well for images with good contrast between objects.

Types of image segmentation

Now that we know how algorithms analyze pixels to identify objects, we can look at the different types of segmentation these algorithms perform. The types are:

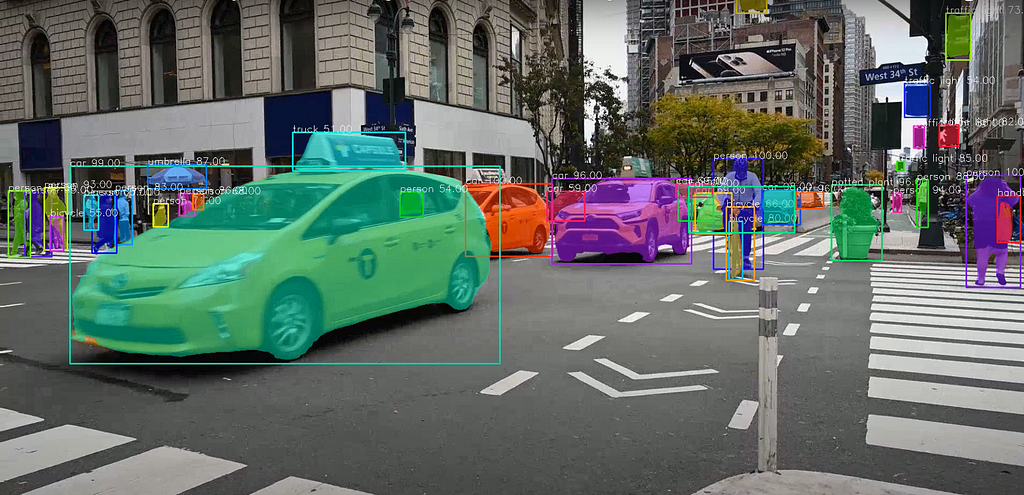

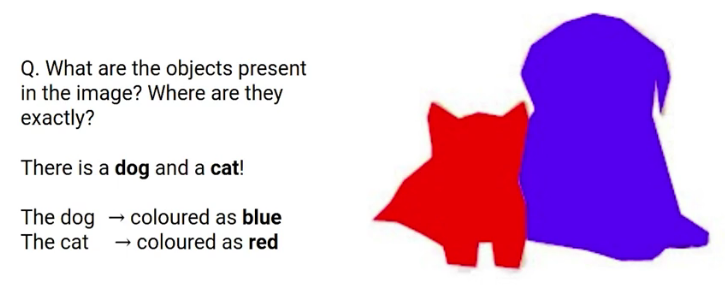

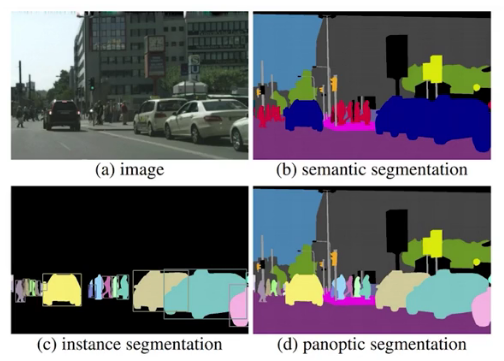

Semantic segmentation

This process associates each pixel with a class, for instance, flower, road, car, etc. Hence, all pixels of every car present in an image are assigned to the class “car”, i.e. a common representation. Automated driving uses this type of image segmentation.

Instance segmentation

This goes a bit further by assigning each pixel to a specific object instance in the image, so each object is treated as a unique object even when several objects share a class. In automated driving systems, road signs are instance segmented in real time.

Panoptic segmentation

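This is a combination of instance and semantic segmentation that gives more detail: every pixel receives a class label, and pixels that belong to countable objects also receive an instance identity.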
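The difference between the three types is easiest to see in the label maps they produce. The toy sketch below is an assumption-laden illustration: the 4x6 scene, the class ids `ROAD` and `CAR`, and the helper `label_instances` are made up, and connected-component labeling stands in for what a trained network would predict in a real system.

```python
from collections import deque

ROAD, CAR = 0, 1  # hypothetical class ids

# Semantic map: one class id per pixel. Both cars share the class CAR.
semantic = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 1, 1],
    [0, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0, 0],
]

def label_instances(semantic, thing_class):
    """Give each 4-connected blob of thing_class its own id (1, 2, ...); other pixels get 0."""
    h, w = len(semantic), len(semantic[0])
    instance = [[0] * w for _ in range(h)]
    next_id = 0
    for r in range(h):
        for c in range(w):
            if semantic[r][c] != thing_class or instance[r][c]:
                continue
            next_id += 1
            instance[r][c] = next_id
            queue = deque([(r, c)])
            while queue:
                cr, cc = queue.popleft()
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    nr, nc = cr + dr, cc + dc
                    if (0 <= nr < h and 0 <= nc < w
                            and semantic[nr][nc] == thing_class
                            and not instance[nr][nc]):
                        instance[nr][nc] = next_id
                        queue.append((nr, nc))
    return instance, next_id

# Instance map: each car gets its own id.
instance, n_cars = label_instances(semantic, CAR)

# Panoptic map: (class id, instance id) per pixel; the road keeps instance id 0.
panoptic = [
    [(semantic[r][c], instance[r][c]) for c in range(len(semantic[0]))]
    for r in range(len(semantic))
]
```

In the semantic map the two cars are indistinguishable, the instance map separates them but says nothing about the road, and the panoptic map carries both pieces of information for every pixel.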

Endnotes

In a nutshell, from the face unlock feature in smartphones to that self-driving Tesla, image segmentation techniques have become part of our world, and we now rely on them extensively in our daily lives to enhance automation and improve intelligence.

This article has discussed image segmentation, an extensively used digital image processing technique.



Joshua Salako | Sciencx (2022-09-26T02:59:07+00:00) Image Segmentation — An Overview On How Its Algorithms Identify Objects In An Image. Retrieved from https://www.scien.cx/2022/09/26/image-segmentation-an-overview-on-how-its-algorithms-identify-objects-in-an-image/
