This content originally appeared on DEV Community 👩💻👨💻 and was authored by propelauthblog

One of my favorite things to do is to find interesting libraries and test out unusual use cases with them. The python library geneticalgorithm is beautifully open ended—exposing a simple but powerful interface that we can use for all sorts of weird stuff.

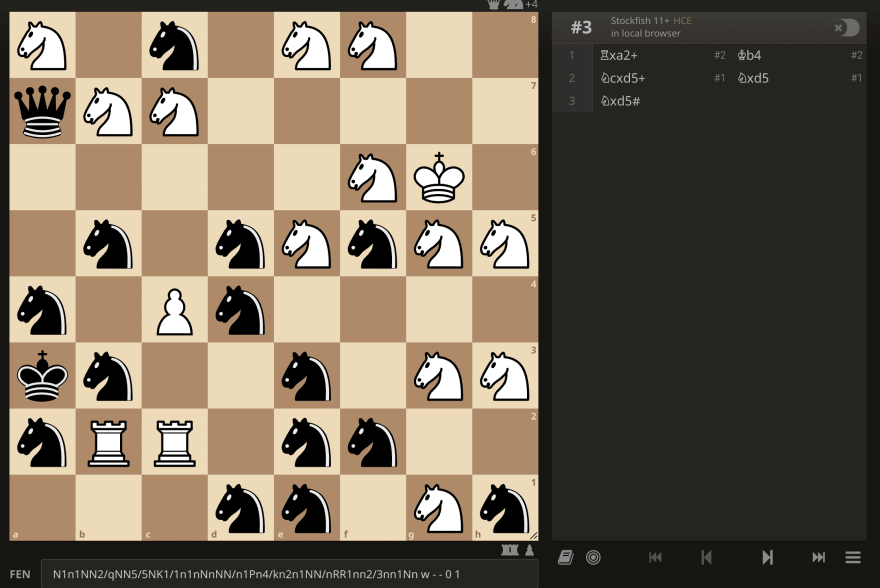

In this post, we’ll use it to generate chess puzzles that look like this:

or more tame ones like this:

If you aren’t familiar with chess, these are both called “mate in 3” puzzles. This means that White can win the game in 3 moves, but only if they find one specific move.

The first puzzle was generated with the constraint “Include as many Knights as possible,” which explains why both sides have way too many Knights.

But before we get into all that, we’ll start by understanding the library.

geneticalgorithm

There are a number of good articles that explain what genetic algorithms are (like this one). The main thing to note, from that article, is

in order to find a solution using the GA, random changes applied to the current solutions to generate new ones

So, at a high level, we take some solution to a problem and randomly modify it to get new possible solutions. We won’t go into the details here, and instead will focus on what interfaces the library exposes. Let’s start with an example from the docs:

import numpy as np

from geneticalgorithm import geneticalgorithm as ga

def f(X):

return np.sum(X)

varbound=np.array([[0,10]]*3)

model=ga(function=f,dimension=3,variable_type='int',variable_boundaries=varbound)

model.run()

which outputs:

The best solution found:

[0. 0. 0.]

Objective function:

0.0

If we break this down, what we see is:

- We have a function f(X) that takes in an array of 3 integers

- Those integers are all between 0 and 10, inclusive

- Our goal is to minimize the function f(X)

- Since f(X) just sums up the 3 integers, the best solution is if they are all 0

How complicated can our function be?

Adding three numbers together is cool and all, but let’s try something slightly more complicated.

def f(X):

return abs(X[0] - 3) + abs(X[1] - 1) + abs(X[2] - 10)

# The best solution found:

# [ 3. 1. 10.]

# Objective function:

# 0.0

And that works pretty easily. It’s also worth contrasting that function, with this one:

def f(X):

if X[0] == 3 and X[1] == 1 and X[2] == 10:

return 0

return 1

# The best solution found:

# [2. 9. 3.]

# Objective function:

# 1

These two functions have the same best solution, but the second one fails occasionally (using the default parameters) whereas the first one doesn’t. If you think about how our black box model.run() might work, you can probably figure out why this happens.

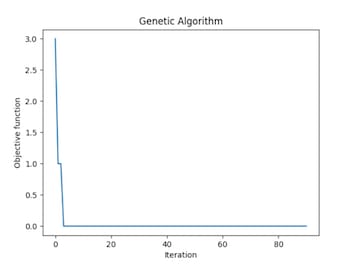

In our first function, solutions close to [3, 1, 10] have lower values than solutions further from [3, 1, 10]. In our second function, our algorithm basically gets no feedback until we happen to get [3, 1, 10]. You can see this in the graphs that are produced:

On the left, we see that the algorithm quickly converged on the right answer, getting feedback that it was on the right path. On the right, we just constantly got the same value until we gave up.

Modeling Chess

Our function is pretty arbitrary though—who’s to say that it needs to represent some mathematical function.

What if we generate 64 integers - one for each chess position. And the integers will be between 0 and 12, representing an empty square or a piece.

We can use the python library python-chess to construct a chess board object. This will allow us to check more properties of the board—like if it’s a valid position, if it’s currently a stalemate, etc.

def value_to_piece(value):

if value == 0:

return None

elif value <= 6:

# Pieces have values 1 through 6

return chess.Piece(value, chess.WHITE)

else:

return chess.Piece(value - 6, chess.BLACK)

def array_to_chess_board(arr):

# construct an empty chess board

board = chess.Board(fen='8/8/8/8/8/8/8/8 w - - 0 1')

for i, value in enumerate(arr):

piece = value_to_piece(value)

if piece:

board.set_piece_at(i, piece)

return board

Now all we need is a function to minimize. Let’s start off simple by asking it to generate a valid chess position with as few pieces as possible.

def f(X):

board = array_to_chess_board(X)

# We want as few pieces as possible, so each piece adds to our penalty

penalty = len(board.piece_map()) * 0.1

# Add a big penalty for invalid positions

if not board.is_valid():

penalty += 10

return penalty

We also need to modify our constraints to include 64 integers:

varbound = np.array([[0, 12]] * 64)

and the docs say that we should experiment with our own configuration, I used this but these are all values that can be tweaked:

algorithm_param = {'max_num_iteration': 50000,

'population_size': 20,

'mutation_probability': 0.05,

'elit_ratio': 0.01,

'crossover_probability': 0.9,

'parents_portion': 0.3,

'crossover_type': 'two_point',

'max_iteration_without_improv': 5000}

Finally, let’s run our code and print out a string representation of our best board:

model = ga(function=f, dimension=64, variable_type='int', variable_boundaries=varbound, algorithm_parameters=algorithm_param)

model.run()

best_board = array_to_chess_board(list(model.best_variable))

print(best_board.fen())

# 8/8/2K5/8/8/8/8/5k2 w - - 0 1

You can visualize the FEN string on Lichess, and you’ll see:

This should match what you’d expect. For a position to be “valid” it must include a white and black king. It doesn’t need any other pieces, so this is actually the optimal solution to our function - a valid chess board with the fewest possible pieces!

Using Stockfish to generate chess puzzles

A chess puzzle is a position where there is one, and only one, good move. Puzzles are typically used for training, since it can be a challenge to find the sole good move in a position.

Typically, the way puzzles are generated is by looking at positions in real games and determining if each position is a puzzle or not (meaning the main move is good and every subsequent move is not).

How can we know that there’s exactly one good move? We can use the open source chess engine Stockfish. Our python-chess library actually has support for communicating with an engine like Stockfish. That means that getting a detailed analysis of a position is as simple as:

# a chess position where white can win in one move

board = chess.Board("5Q2/5K1k/8/8/8/8/8/8 w - - 0 1")

# initialize Stockfish

engine = chess.engine.SimpleEngine.popen_uci("/opt/homebrew/bin/stockfish")

# you can control the engine's search by time or depth

info = engine.analyse(board, chess.engine.Limit(time=0.1))

info contains a lot of fields, but the two most important are:

[{

'score': PovScore(Mate(+1), WHITE),

'pv': [Move.from_uci('f8g7')],

}]

The score tells us what Stockfish thinks of the position, which is a mate in one.

The pv (short for principal variation) tells us the sequence of moves that the engine expects to be played. In this case, it’s saying that it expects White to move their queen on f8 to g7, which is checkmate.

Let’s use both of these in a function that generates mate in three puzzles, meaning puzzles where white can win in exactly three moves.

def f(X):

board = array_to_chess_board(X)

# Let's reward having as few pieces as possible

penalty = len(board.piece_map()) * 0.1

# Penalize invalid boards heavily, we cannot even analyze them

if not board.is_valid():

return 10 + penalty

# You can tune the depth for performance reasons

info = engine.analyse(board, chess.engine.Limit(depth=10), multipv=2)

# If there are no moves (meaning the game is over), return a high penalty

if len(info) < 1:

return 9 + penalty

# Also heavily penalize having only 1 move, puzzles are only interesting

# if we have a choice to make

if len(info) < 2:

return 8 + penalty

# We're specifically looking for puzzles where White can mate in 3 moves

# so we'll penalize cases where white does not have a forced mate

score = info[0]["score"].white()

if not score.is_mate() or score.mate() <= 0:

return 6 + penalty

# Add a penalty for the distance away from mate in 3

penalty += min(3, abs(score.mate() - 3)) / 3

# Finally, add a high penalty if the second best move is also good.

# The defining characteristic of a puzzle is that the second best move is bad

second_move_score = info[1]["score"].white().score(mate_score=1000)

if second_move_score > 100:

penalty += min(10.0, second_move_score / 100)

return penalty

Similar to above, we want chess positions that are close to our target to have lower values than chess positions that are further away. That’s why we added cases like:

- A mate in 4 puzzle is better than a mate in 7 puzzle

- An invalid position is heavily penalized, as are cases where the game is already over

You’ll get a different result every time you run this, but here’s one example:

6kr/7b/8/r6Q/8/B7/5K2/3R2b1 w - - 0 1

I included the top four engine moves on the right, but as you can see there’s only one move white can play which will win the game. Every other move allows black to equalize or even gain a small advantage.

But, how much control do we really have? Our current function tries to include as few pieces as possible. What if instead, we decide that we want as many Knights as possible, preferably enemy Knights? We’ll change our function to add this as a penalty:

# Removed

# penalty = len(board.piece_map()) * 0.1

penalty = 1 - len(board.pieces(chess.KNIGHT, chess.WHITE)) * 0.01

peanlty += 6 - len(board.pieces(chess.KNIGHT, chess.BLACK)) * 0.1

And when we run that we get:

So many Knights, and yet, it’s still a mate in 3 puzzle.

A quick aside: Can we generate realistic puzzles?

All the puzzles we generated are a little questionable. The Knight one is pretty obviously not reachable in a real game. This warrants an entire separate post, but one fun way you can generate realistic looking puzzles is by incorporating a “realism score” into the function.

If you had a classifier that took in chess position and output a score of how “realistic” it is, you can add that to your penalty to penalize unrealistic boards. This requires many examples of “realistic” positions—but luckily Lichess is amazing and has a dataset of way more games than you’d need to build a good classifier.

Why do this?

The puzzles you get from actual games are absolutely higher quality than what we generated, however, I think of this as an interesting proof of concept.

We took a library used for function minimization, attached Stockfish to it, and used it to generate surprisingly complex mate in 3 chess puzzles without too much code. Libraries like this excite me because it feels like the limit is your imagination and your ability to transform ideas into code.

This content originally appeared on DEV Community 👩💻👨💻 and was authored by propelauthblog

propelauthblog | Sciencx (2022-10-13T20:41:28+00:00) Generating Chess Puzzles with Genetic Algorithms. Retrieved from https://www.scien.cx/2022/10/13/generating-chess-puzzles-with-genetic-algorithms/

Please log in to upload a file.

There are no updates yet.

Click the Upload button above to add an update.