This content originally appeared on DEV Community 👩‍💻👨‍💻 and was authored by Beppe Catanese

As C++ application engineers in the Mobile In-Person Payments Team at Adyen, we continuously explore new technology that can make our payment applications more reliable and more secure. In this article, we will talk about our journey to evaluate, adopt and use GraalVM to migrate our existing terminal-based payment applications into the cloud.

We first give some background on the technical challenge we encountered, then follow with our analysis of GraalVM's capabilities and why we chose it. Finally, we summarize our findings on the advantages and disadvantages of using GraalVM.

Current situation: technical background

For years, payment applications have been written in C/C++ and deployed on many types of terminals, because a typical embedded system has limited hardware resources and requires efficient low-level interaction between the application and the operating system.

Everything worked fine until we started considering running the C/C++ payment application in the cloud, which at Adyen is a Java world. Undoubtedly, migrating an application from an embedded device to the cloud calls for design changes such as more asynchronous communication, multi-threading and non-blocking calls, to make the application better suited to the new environment.

Beyond the application redesign, the essential question is: do we rewrite the application or reuse the code we already have?

Rewriting the code

Rewriting the payment application from scratch in Java may be a valid choice. This gives us a chance to redesign a modern application that is scalable and maintainable in the long run.

However, developing a new application requires a large effort, especially when redesigning a complex application that has been developed and run for years. The existing payment application has gone through more than a thousand iterations and has been verified in the field at large scale globally. How much time and effort would we need to re-implement the same features (payments, refunds, card acquisition, etc.) and reach the same level of scalability and robustness as the existing application?

Reusing the existing code

In contrast, the idea of reusing the existing, working code comes naturally. It speeds up the development cycle because most of the payment logic is already available, so we can focus our effort on the differences between the physical terminal and the cloud environment. The challenge then becomes running C/C++ code safely next to Java applications.

When the C/C++ code runs in a native process, a segmentation fault caused by an illegal memory access is entirely possible. To deal with this type of issue, process management is required: it must not only start a new process when a crash occurs, but also redirect requests to an active process. Moreover, the interface between the native process and the Java application has to be defined, and its latency cost taken into account, although that cost is implementation dependent.
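To make the process-management idea concrete, here is a minimal Java sketch of a supervisor that restarts a crashed worker and redirects the failed request to the new one. It is an illustration under assumptions, not our implementation: `Worker`, `Supervisor` and the `spawn` factory are hypothetical names, and a crash is simulated by an exception, whereas a real version would wrap `java.lang.Process` and detect a dead process or a broken IPC channel.

```java
import java.util.function.Supplier;

/** Hypothetical handle to one native worker process; simulated in this sketch. */
interface Worker {
    /** Handles a request; an exception models the process crashing (e.g. a segfault). */
    String handle(String request) throws Exception;
}

/** Restarts crashed workers and redirects the failed request to a fresh one. */
final class Supervisor {
    private final Supplier<Worker> spawn; // hypothetical: would start a new native process
    private final int maxAttempts;
    private Worker current;
    private int restarts = 0;

    Supervisor(Supplier<Worker> spawn, int maxAttempts) {
        this.spawn = spawn;
        this.maxAttempts = maxAttempts;
        this.current = spawn.get();
    }

    synchronized String submit(String request) {
        Exception lastCrash = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return current.handle(request);
            } catch (Exception crash) {
                lastCrash = crash;
                // Always replace the dead worker so the next request finds a live one.
                current = spawn.get();
                restarts++;
            }
        }
        throw new IllegalStateException("request failed after " + maxAttempts + " attempts", lastCrash);
    }

    synchronized int restarts() {
        return restarts;
    }
}
```

Retrying is only safe for idempotent requests; replaying a payment after a crash would need deduplication first. A production setup would also keep a pool of processes rather than serializing every request through a single `synchronized` worker.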

We also looked at JNI (Java Native Interface), which supports communication between Java code and code written in other languages such as C/C++.

However, with JNI the native code runs inside the JVM process, so a memory error in the C/C++ code can crash the whole JVM. For a payment application, this means thousands of payments could be affected. To ensure a high-quality, robust payment service, any crash is critical and should be avoided in the first place.

In the end, we established that GraalVM EE can help us integrate our existing C/C++ application within the Java ecosystem seamlessly with additional memory safety and acceptable performance.

What’s GraalVM?

GraalVM is a Java virtual machine, implemented mainly in Java, that can also interpret other programming languages, such as Python, JavaScript and any language that can be compiled to LLVM (Low Level Virtual Machine) intermediate code (bitcode). It aims to provide a more natural way of interfacing Java with other languages. The Enterprise Edition (EE), which we use, provides managed memory for languages that are traditionally compiled directly to native code.

Increased memory protection

GraalVM EE offers better security by monitoring memory allocation and deallocation. As a result, an illegal memory access becomes a thrown exception instead of a segmentation fault. This makes the application more reliable, since memory errors no longer go unhandled at runtime.

Moreover, GraalVM EE adds an extra layer that redirects all system calls to corresponding Java interfaces instead of performing them directly. This way, the application and its functionality are unaffected, while GraalVM gains extra security by managing the system calls itself.

Limitations and challenges

GraalVM is a fairly new technology and is still evolving. Its managed mode provides the desired memory protection and extra security, but it also brings its own limitations, described below.

First of all, every library used by the application must be pre-compiled into LLVM bitcode with the GraalVM LLVM toolchain. This lets GraalVM examine all execution and memory accesses, as if the code were running in a sandbox. In addition, calling native code and accessing native memory is not allowed: an invalid access results in a runtime exception.

However, memory protection and the ban on calling native code come at a price: they increase both the compilation time and the complexity of the build process. For instance, system libraries such as OpenSSL and zlib can't be linked directly. Each of them has to be recompiled with the GraalVM LLVM toolchain, which adds to the compilation time and to the effort of maintaining the build configuration of each library.

In addition, the LLVM toolchain ships with a pre-compiled musl libc standard C library, which implements the redirection of system calls to Java mentioned earlier. From the application's point of view, system calls should behave the same with or without GraalVM. However, because GraalVM is a new technology, we still observed some unexpected system call behavior in our multi-threaded application.

Luckily, we reported these issues to the GraalVM team and they were fixed quickly in the corresponding Java code. Still, extra testing and troubleshooting may be necessary, since some system calls may be unsupported or only partially supported in GraalVM.

Apart from this, there are performance concerns regarding execution speed and memory usage, which we discuss in more detail in the next section.

Performance impact

The GraalVM compiler is a JIT (Just-in-Time) compiler: it compiles the hot parts of the code at runtime and keeps optimizing them, bringing performance closer to that of native machine code. However, this means we won't get the best performance right at application start-up, so additional warm-up is required. To find out how much warm-up is appropriate, we use the profiling of a JSON parser as an example to illustrate the basic flow.

Performance analysis: simple JSON parser

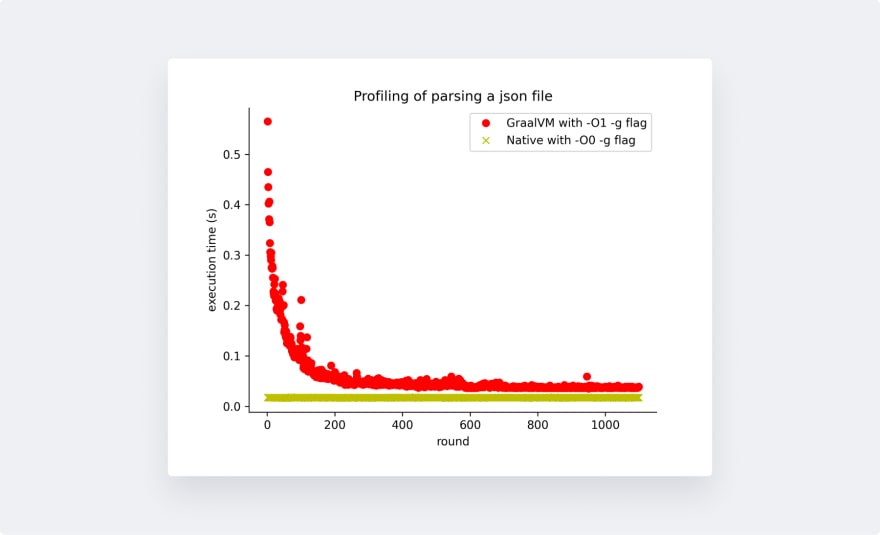

The goal of the profiling is to execute the JSON parser function back to back for 1000 iterations and compare the performance of GraalVM with native execution.

For our benchmark we used the same JSON payload as in real payments, in order to reproduce scenarios close to the live system.
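A back-to-back measurement like this can be sketched with a small Java harness that records the duration of every iteration. This is a sketch under assumptions, not our benchmark code: the `Supplier` you pass stands in for one call to the JSON parser with the payment payload, and for rigorous numbers a dedicated tool such as JMH (the Java Microbenchmark Harness) is the safer choice.

```java
import java.util.Objects;
import java.util.function.Supplier;

final class Benchmark {
    // Folding each result into a volatile field helps keep the JIT from
    // discarding the measured work as dead code.
    static volatile int blackhole;

    /** Runs `task` back to back and returns the duration of each iteration in nanoseconds. */
    static long[] timeIterations(Supplier<?> task, int iterations) {
        long[] nanos = new long[iterations];
        for (int i = 0; i < iterations; i++) {
            long start = System.nanoTime();
            Object result = task.get();
            nanos[i] = System.nanoTime() - start;
            blackhole ^= Objects.hashCode(result);
        }
        return nanos;
    }
}
```

For example, `Benchmark.timeIterations(() -> parse(payload), 1000)`, with a hypothetical `parse` function, yields one duration per iteration, from which a warm-up curve can be plotted.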

The profiling results are shown in the figure.

The first observation is that the JIT compiler keeps optimizing the JSON parser until its performance stabilizes. We used the compiler option -O0 (no optimization) for the native build, while for GraalVM we used -O1 (basic optimization), as suggested by the official GraalVM documentation. Even so, native execution still outperforms GraalVM. The extra overhead of GraalVM may come from several sources, such as the additional memory protection, the runtime optimization performed by the JIT compiler, and the speed of the bitcode interpreter.

It is worth mentioning that we also profiled the native code compiled with -O1 and -O2, which further improves its execution time. Nevertheless, the performance of GraalVM (see the stable red line) is acceptable, and it is not necessarily fully optimized yet, since it runs in a sandboxed prototype environment. We will monitor and optimize the performance in future iterations if needed.

The second observation helps us decide the proper amount of warm-up at application start-up. If we aim for an 80% performance improvement (the execution time drops from 0.5s to 0.1s), the result shows that approximately 100 iterations are needed, and accumulating their execution times tells us how long the warm-up takes.
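The warm-up estimate boils down to a small calculation over the per-iteration timings: an 80% improvement from 0.5s means reaching 0.5 × (1 - 0.8) = 0.1s, and the warm-up cost is the sum of all iteration times up to that point. The Java sketch below assumes the first iteration is the baseline and that timings are given in seconds; both are our choices for illustration, not part of the original analysis.

```java
import java.util.Optional;

final class WarmUp {
    /** How many iterations the warm-up needs and how long they take in total. */
    static final class Estimate {
        final int iterations;
        final double totalSeconds;

        Estimate(int iterations, double totalSeconds) {
            this.iterations = iterations;
            this.totalSeconds = totalSeconds;
        }
    }

    // Relative tolerance so a value sitting exactly on the threshold (0.1s vs 0.5s * 0.2)
    // is not missed because of floating-point rounding.
    private static final double EPS = 1e-9;

    /**
     * Finds the first iteration whose time has dropped by at least `improvement`
     * (0.8 means 80%) relative to the first iteration, and sums the time of all
     * iterations up to and including it.
     */
    static Optional<Estimate> estimate(double[] secondsPerIteration, double improvement) {
        if (secondsPerIteration.length == 0) return Optional.empty();
        double target = secondsPerIteration[0] * (1.0 - improvement);
        double total = 0.0;
        for (int i = 0; i < secondsPerIteration.length; i++) {
            total += secondsPerIteration[i];
            if (secondsPerIteration[i] <= target * (1.0 + EPS)) {
                return Optional.of(new Estimate(i + 1, total));
            }
        }
        return Optional.empty(); // target never reached: warm up longer or lower the goal
    }
}
```

Taking the median of the first few iterations as the baseline, instead of the first one alone, would make the estimate more robust against a single outlier.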

Ideally, the profiling flow described above for the JSON parser can be applied to the whole application. However, the real application is more complex and has several execution flows. If we warm up only one specific flow, we may not see the improved performance when executing another one.

Luckily, in our case the different flows share most of their functions, so we didn't see much performance impact when executing other flows after warm-up. Where this is not the case, the warm-up needs to cover each of the different flows.
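When flows do diverge, one option is to exercise every flow during warm-up. A minimal Java sketch, assuming each flow can be triggered by a `Runnable` (for example a canned payment or refund request); the flow names and the choice to interleave the flows are illustrative assumptions of ours:

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class FlowWarmUp {
    /**
     * Runs every flow `rounds` times, interleaving the flows, so that the code paths of
     * each one are exercised before real traffic arrives. Returns the run count per flow.
     */
    static Map<String, Integer> warmUp(Map<String, Runnable> flows, int rounds) {
        Map<String, Integer> runs = new LinkedHashMap<>();
        for (int round = 0; round < rounds; round++) {
            for (Map.Entry<String, Runnable> flow : flows.entrySet()) {
                flow.getValue().run();
                runs.merge(flow.getKey(), 1, Integer::sum);
            }
        }
        return runs;
    }
}
```

The number of rounds per flow could come from the same kind of profiling as for the JSON parser, done once per flow.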

Memory usage

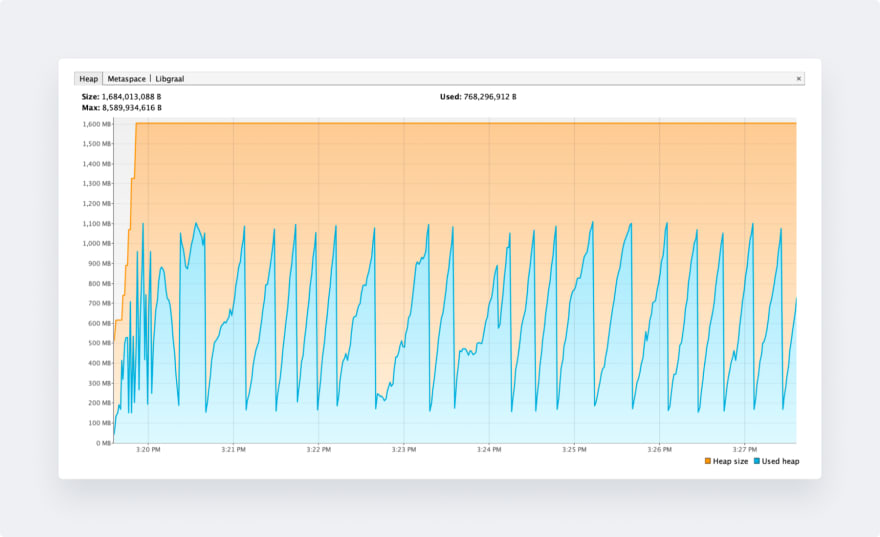

To monitor memory usage at runtime, GraalVM provides a powerful tool called VisualVM, which can monitor system metrics such as CPU and memory usage. We performed two experiments on the JSON parser example mentioned above.

In the first experiment, we measured the heap usage of a single JSON parser. This gives a baseline of how much memory may be needed to run a simple JSON parser in GraalVM. As shown in the figure, the maximum heap usage is around 1.1GB. This includes the memory used by the JSON parser application, by GraalVM, and by the engine, i.e. the bitcode interpreter.

In the second experiment, we measured the heap usage of two JSON parsers running in two separate threads. It is worth mentioning that the engine is shared among threads, which keeps heap usage down as the number of threads increases.

With two threads, the maximum heap usage is around 1.2GB, only about 0.1GB above the single-parser baseline. We also ran the experiment with four threads, but saw no obvious difference compared with two threads.
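VisualVM shows these numbers interactively; to record them in an automated run, the standard `java.lang.management` API can report peak heap usage from inside the JVM. A minimal sketch, assuming the `Runnable` passed in runs one JSON parser (for example inside a GraalVM context) on each thread; `HeapProbe` is a hypothetical helper, not part of GraalVM or VisualVM:

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

final class HeapProbe {
    /** Resets the peak-usage counters of every heap memory pool. */
    static void resetPeaks() {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() == MemoryType.HEAP) {
                pool.resetPeakUsage();
            }
        }
    }

    /**
     * Sums the peak usage of every heap pool, in bytes. Pools may peak at different
     * moments, so this is an upper bound on the true peak heap usage.
     */
    static long peakHeapBytes() {
        long sum = 0;
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.HEAP) continue;
            MemoryUsage peak = pool.getPeakUsage(); // null if the pool is no longer valid
            if (peak != null) {
                sum += peak.getUsed();
            }
        }
        return sum;
    }

    /** Runs `task` on `threads` concurrent threads and returns the approximate peak heap in bytes. */
    static long measure(Runnable task, int threads) throws InterruptedException {
        resetPeaks();
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            Thread worker = new Thread(task, "parser-" + i);
            workers.add(worker);
            worker.start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return peakHeapBytes();
    }
}
```

Calling `measure(task, 1)`, `measure(task, 2)` and `measure(task, 4)` in separate runs mirrors the single-thread baseline and the thread-scaling experiments.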

We concluded that memory usage is not the bottleneck when running a C/C++ application on the server, although it is still high compared to running the application natively on an embedded device. GraalVM uses more memory both in the baseline measurement and when scaling the number of threads. However, servers typically have enough memory to handle this amount of usage.

Conclusion

GraalVM is a fairly new technology, and we are pilot users of GraalVM for running a C/C++ application in production in the cloud. We learned a lot from this journey: our analysis showed that running the application with GraalVM does not perform as well as more native options, such as JNI or a native application with Java process management. We also saw that warm-up is necessary to get acceptable performance from the first request to the application.

On the other hand, GraalVM mitigates the stability concerns that we considered most important when running a C/C++ application next to Java. GraalVM offers us a sandbox environment in which we can quickly prototype and test our existing application, and adapt it to the new environment while meeting our security requirements.

In the end, adopting GraalVM and reusing the existing C++ code base allowed us to keep our development velocity and ship our product faster. We launch fast and iterate: after all, time to market is crucial, customer feedback is essential, and continuous delivery is a must.

References

Safe and sandboxed execution of native code

GraalVM Interaction with native code and managed execution mode

VisualVM

Beppe Catanese | Sciencx (2022-11-14T13:41:43+00:00) GraalVM: running C/C++ application safely in the Java world. Retrieved from https://www.scien.cx/2022/11/14/graalvm-running-c-c-application-safely-in-the-java-world/
