This content originally appeared on Level Up Coding - Medium and was authored by Dheeraj Inampudi

Background

A couple of years ago, I was a consultant for an airline IoT company, where I was responsible for building the analytics architecture, including the complete data engineering pipeline and analytics dashboards.

More than 3,000 IoT devices sent 200 GB/day to the ETL pipelines. The data was stored temporarily in a SQL database and dumped into S3 as CSV files every four hours. With input from the company's head of engineering, I settled on the following plan of action.

Proposed Solution

As a consultant, I proposed the following four major changes to the existing architecture:

- Convert all historical data to hour-level, date-partitioned, Snappy-compressed Parquet (DPSCP)

- Let the existing pipelines keep dumping data to S3 as CSV, and use our tried-and-true scripts to convert each file into DPSCP over the two-week migration period (this is where the problem occurred)

- Develop a new pipeline that writes incoming data directly in DPSCP

- Use Athena queries to build the final BI dashboard (note: the real-time dashboard is a different use case)

Success criteria: Provided everything is in working order, we must be able to query all accessible data while paying only minimal pay-as-you-go query costs plus the cost of storing the data on S3.
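To make "hour-level date partitioned" concrete, here is a minimal sketch of how an object key for one converted file could be built. The Hive-style `year=/month=/day=/hour=` layout, the `telemetry` prefix, and the `dpscp_key` helper are assumptions for illustration; the original post does not show the layout that was actually used.

```python
from datetime import datetime, timezone


def dpscp_key(prefix: str, event_time: datetime, file_stem: str) -> str:
    """Build a Hive-style, hour-level partitioned S3 key for a Parquet file.

    The layout is an assumption for illustration, not the layout from the post.
    """
    t = event_time.astimezone(timezone.utc)  # normalise to UTC before partitioning
    return (
        f"{prefix}/year={t:%Y}/month={t:%m}/day={t:%d}/hour={t:%H}/"
        f"{file_stem}.snappy.parquet"
    )


if __name__ == "__main__":
    ts = datetime(2022, 11, 14, 3, 23, tzinfo=timezone.utc)
    print(dpscp_key("telemetry", ts, "part-0000"))
    # telemetry/year=2022/month=11/day=14/hour=03/part-0000.snappy.parquet
```

With a layout like this, a query that filters on the partition columns only needs to scan the matching prefixes, which is what keeps pay-per-query costs low.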

Problem & Cause

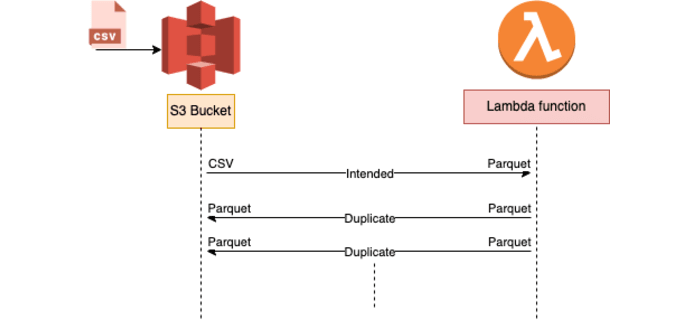

During the migration (Step 2), we created a temporary two-week Lambda that listens for S3 bucket events, converts the newly arrived files to Parquet, and writes the results back to S3. Because the source and destination were configured as the same bucket and prefix, the Lambda was triggered millions of times.

Ideally, the Lambda should have converted 24 CSV files to Parquet each day for two weeks. But we kept the CSV files after processing (for validation) and triggered the function on S3 events, so every Parquet file the Lambda wrote generated a new S3 event, which invoked the Lambda again, and the cycle repeated.

The infinite loop led Lambda to scale to use all available concurrency, while S3 continued to write objects and create new events for Lambda.
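The feedback loop can be reproduced in a small, AWS-free simulation. This is only a sketch under simplifying assumptions: the `count_invocations` function, the prefixes, and the one-output-per-invocation model are hypothetical, and the invocation cap stands in for whatever finally stops the loop in practice.

```python
from collections import deque


def count_invocations(source_prefix, output_prefix, initial_keys, max_invocations=1000):
    """Simulate an S3-triggered function and return how often it is invoked.

    Every object created under source_prefix fires an event. Each invocation
    writes exactly one output object under output_prefix.
    """
    events = deque(k for k in initial_keys if k.startswith(source_prefix))
    invocations = 0
    while events and invocations < max_invocations:
        key = events.popleft()
        invocations += 1
        out_key = f"{output_prefix}{key.rsplit('/', 1)[-1]}.parquet"
        if out_key.startswith(source_prefix):
            # The output lands where the trigger is listening, so it fires again.
            events.append(out_key)
    return invocations


if __name__ == "__main__":
    # Same prefix for input and output: only the cap stops the loop.
    print(count_invocations("data/", "data/", ["data/a.csv"]))
    # Separate prefixes: one invocation per input file.
    print(count_invocations("csv/", "parquet/", ["csv/a.csv"]))
```

In the simulation a single input file is enough to keep the function busy until the cap is reached; writing the output to a prefix the trigger does not watch ends the loop after one invocation.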

Cost breakdown

By the end of the two-day period, the loop had run for about 40 hours and cost approximately $40,000, mostly because of Lambda concurrency and S3 PUT requests.

Ways to prevent this

- Use a positive trigger: For instance, an S3 object trigger might use a naming convention or meta tag that only works the first time it is called. This stops objects that were written by the Lambda function from calling the same function again.

- Use reserved concurrency: When the reserved concurrency of a function is set to a lower limit, the function can’t scale concurrently past that limit. It doesn’t stop the recursion, but as a safety measure, it limits the amount of resources used. During the development and testing phases, this can be helpful.

- Use CloudWatch monitoring and alarming: By setting an alarm on a function’s concurrency metric, you can get alerts if the concurrency suddenly goes up and take the right steps.
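The three measures above can be sketched roughly as follows. Everything named here is an assumption for illustration: the `csv/` prefix, the alarm name, the thresholds, and the helper functions are hypothetical and not taken from the original setup. The boto3 calls `put_function_concurrency` and `put_metric_alarm` are real, but they are imported lazily so the rest of the sketch runs without AWS access.

```python
from urllib.parse import unquote_plus


def should_process(key: str, source_prefix: str = "csv/") -> bool:
    """Positive trigger: accept only CSV objects under the source prefix."""
    return key.startswith(source_prefix) and key.endswith(".csv")


def handler(event, context=None):
    """Lambda-style entry point; returns the keys it would convert."""
    accepted = []
    for record in event.get("Records", []):
        # Object keys in S3 event notifications are URL-encoded.
        key = unquote_plus(record["s3"]["object"]["key"])
        if should_process(key):
            # Real code would convert here and write to a different prefix.
            accepted.append(key)
    return accepted


def reserved_concurrency_params(function_name: str, limit: int) -> dict:
    """Arguments for the Lambda PutFunctionConcurrency call."""
    return {"FunctionName": function_name, "ReservedConcurrentExecutions": limit}


def concurrency_alarm_params(function_name: str, threshold: int) -> dict:
    """Arguments for a CloudWatch alarm on the function's concurrency."""
    return {
        "AlarmName": f"{function_name}-concurrency-high",  # hypothetical name
        "Namespace": "AWS/Lambda",
        "MetricName": "ConcurrentExecutions",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Maximum",
        "Period": 60,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
    }


def apply_guards(function_name: str, limit: int, threshold: int) -> None:
    """Apply both safety nets; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the sketch runs without AWS access

    boto3.client("lambda").put_function_concurrency(
        **reserved_concurrency_params(function_name, limit)
    )
    boto3.client("cloudwatch").put_metric_alarm(
        **concurrency_alarm_params(function_name, threshold)
    )
```

The key filter is the only part that actually breaks the recursion; the concurrency limit and the alarm only bound the damage and shorten the time to notice. The same prefix and suffix filtering can also be configured on the S3 event notification itself, so that Parquet objects never invoke the function at all.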

The following AWS article describes similar patterns in other situations and services:

Recursive patterns that cause run-away Lambda functions

How we accidentally burned $40,000 by calling recursive patterns was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Dheeraj Inampudi | Sciencx (2022-11-14T03:23:16+00:00) How we accidentally burned $40,000 by calling recursive patterns. Retrieved from https://www.scien.cx/2022/11/14/how-we-accidentally-burned-40000-by-calling-recursive-patterns/
