This content originally appeared on DEV Community 👩💻👨💻 and was authored by Nicolas El Khoury

Introduction

With the rapid evolution of the software industry in general, developing and deploying web applications is not as easy as writing the code and deploying it on remote servers. Today’s software development lifecycle necessitates the collaboration of different teams (e.g., developers, designers, managers, system administrators, etc.), working with different tools and technologies to serve challenging application requirements in an organized and optimized manner. Such collaboration may prove to be extremely complex and costly if not properly managed.

This tutorial provides an introduction to web applications and the different infrastructure types. To put this information to good use, a demo is carried out on Amazon Web Services.

Moreover, multiple infrastructure types exist to deploy and serve these web applications. Each option possesses its advantages, disadvantages, and use cases.

This tutorial comprises a theoretical part, which aims to list and describe different concepts related to web applications and infrastructure types, followed by a practical part to apply and make sense of the information discussed.

What is Everything and Why

Websites vs Web Applications

By definition, websites are a set of interconnected documents, images, videos, or any other piece of information, usually developed using HTML, CSS, and JavaScript. User interaction with websites is limited to the user fetching the website’s information only. Moreover, websites are usually stateless, and thus requests from different users yield the same results at all times. Examples of websites include but are not limited to company websites, blogs, news websites, etc.

Web applications on the other hand are more complex than websites and offer more functionalities to the user. Google, Facebook, Instagram, Online gaming, and e-commerce are all examples of web applications. Such applications allow the user to interact with them in different ways, such as creating accounts, playing games, buying and selling goods, etc. In order to provide such complex functionalities, the architecture of the web application can prove to be much more complex than that of a website.

A web application is divided into three layers. More layers can be added to the application design, but for simplicity purposes, this tutorial focuses on only three:

- Presentation Layer: Also known as the client side. The client applications are designed for displaying the application information and for user interaction. Frontend applications are developed using many technologies: AngularJS, ReactJS, VueJS, etc.

- Application (Business Logic) Layer: is part of the application’s server side. It accepts and processes user requests, and interacts with the databases for data modification. Such applications can be developed using NodeJS, Python, PHP, Java, etc.

- Database Layer: This is where all the data resides and is persisted.

Example Deployment on AWS

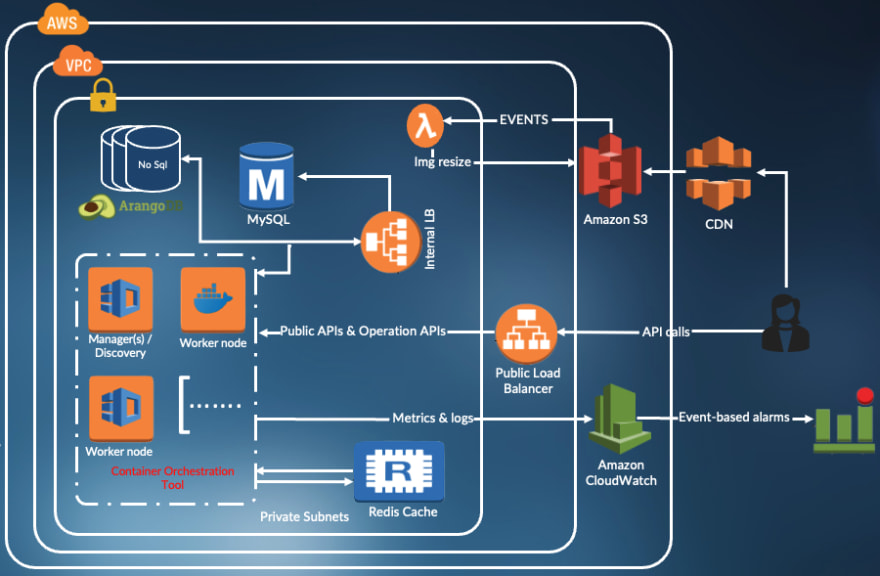

The diagram above displays an example deployment of a web application on AWS, and how users can interact with it:

- A database is deployed and served using AWS’ managed database service (e.g., AWS RDS). As a best practice, it is recommended not to expose the database to the Internet directly, and to properly secure the access.

- The backend application is deployed on a server with a public IP.

- The frontend application is deployed and served on AWS S3.

- All the application components reside within an AWS VPC in a region.

A typical HTTP request/response cycle could be as follows:

- The client sends an HTTP request to the front-end application.

- The frontend application code is returned and loaded on the client’s browser.

- The client sends an API call through the frontend application, to the backend application.

- The backend application validates and processes the requests.

- The backend application communicates with the database for managing the data related to the request.

- The backend application sends an HTTP response containing the information requested by the client.

Web Application Components

As discussed, a website is composed of a simple application, developed entirely using HTML, CSS, and JavaScript. On the other hand, web applications are more complex and are made of different components. In its simplest form, a web application is composed of:

- Frontend Application.

- Backend Application.

- Database.

The components above are essential to creating web applications. However, the latter may require additional components to serve more complex functionalities:

- In memory database: For caching.

- Message bus: For asynchronous communication.

- Content Delivery Network: For serving and caching static content.

- Workflow Management Platform: For organizing processes.

As the application’s use case grows in size and complexity, so will the underlying web application. Therefore, a proper way to architect and organize the application is needed. Below is a diagram representing the architecture of a web application deployed on AWS.

Infrastructure Types

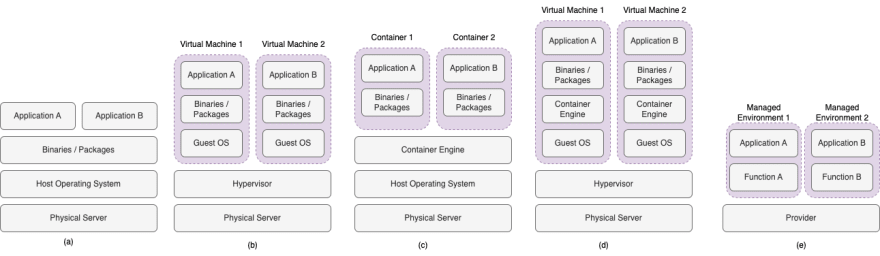

The diagram above lists different infrastructure options for deploying and managing applications.

Physical Servers

In this type of infrastructure, hardware resources would have to be purchased, configured, and managed in a physical location (e.g., a datacenter). Once configured, and an operating system is installed, applications can be deployed on them. The correct configuration and management of the servers and applications must be ensured throughout their lifecycle.

Advantages

- Ownership and Customization: Full control over the server and application.

- Performance: Full dedication of server resources to the applications.

Disadvantages

- Large CAPEX and OPEX: Setting up the required infrastructure components may require a large upfront investment, in addition to another one for maintaining the resources.

- Management overhead: to continuously support and manage the resources.

- Lack of scalability: Modifying the compute resources is not intuitive, and requires time, and complicated labor work.

- Resource Mismanagement: Due to the lack of scalability.

- Improper isolation between applications: All the applications deployed on the same physical host share all the host’s resources together.

- Performance Degradation over time: Hardware components will fail over time.

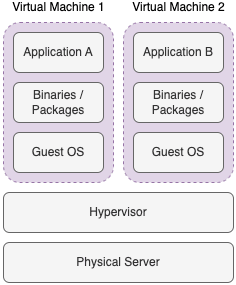

Virtual Machines

One of the best practices when deploying web applications is to isolate the application components on dedicated environments and resources. Consider an application composed of the following components:

- A MySQL database.

- A NodeJS backend API service.

- A Dotnet backend consumer service.

- A ReactJS frontend application.

- RabbitMQ.

Typically, each of these components must be properly installed and configured on the server, with enough resources available. Deploying and managing such an application on physical servers becomes cumbersome, especially at scale:

- Deploying all the components on one physical server may pose several risks:

- Improper isolation for each application component.

- Race conditions, deadlocks, and resource overconsumption by components.

- The server represents a single point of failure.

- Deploying the components on multiple physical servers is not an intuitive approach due to the disadvantages listed above, especially those related to cost and lack of scalability.

Virtual Machines represent the digitized version of the physical servers. Hypervisors (e.g., Oracle VirtualBox, Hyper-V, and VMware) are software solutions that allow the creation and management of one or more Virtual Machines on one physical server. Different VMs with different flavors can be created and configured on the same physical host. For instance, one physical server may host three different VMs with the following specs:

- VM1 —> 1 vCPU —> 2 GB RAM —> 20 GB SSD —> Ubuntu 18.04.

- VM2 —> 2 vCPU —> 4 GB RAM —> 50 GB SSD —> Windows 10.

- VM3 —> 4 vCPU —> 3 GB RAM —> 30 GB SSD —> macOS.

Each virtual machine possesses its dedicated resources and can be managed separately from the other ones.

Advantages

- Low Capital Expenditure: No need to buy and manage hardware components.

- Flexibility: The ability to quickly create, destroy, and manage different VM sizes with different flavors.

- Disaster Recovery: Most VM vendors ship solid backup and recovery mechanisms for the virtual machines.

- Reduced risk of resource misuse (over and under-provisioning).

- Proper environment isolation: for application components.

Disadvantages

- Performance issues: Virtual machines have an extra level of virtualization before accessing the computing resources, rendering them less performant than the physical machines.

- Security issues: Multiple VMs share the compute resources of the underlying host. Without proper security mechanisms, this may pose a huge security risk for the data in each VM.

- Increased overhead and resource consumption: Virtualization includes the Operating System. As the number of VMs placed on a host grows, more resources are consumed as overhead to manage each VM's requirements.

Containers

While Virtual Machines virtualize the underlying hardware, containerization is another form of virtualization, but for the Operating System only. The diagram above visualizes containers. Container Engines (e.g., Docker) are software applications that allow the creation of lightweight environments containing only the application and the binaries it requires to run on the underlying server. All the containers on a single machine share the system resources and the operating system, making containers a much more lightweight solution than virtual machines in general. A container engine, deployed on the server (whether physical or virtual), takes care of the creation and management of containers on the server (a role similar to that of hypervisors for VMs).

Advantages

- Decreased Overhead: Containers require fewer resources than VMs, especially since virtualization does not include the Operating System.

- Portability: Container Images are highly portable, and can be easily deployed on different platforms; a Docker image can be deployed on any platform that supports it (e.g., Docker, Kubernetes, AWS ECS, AWS EKS, Microsoft AKS, Google Kubernetes Engine, etc.).

- Faster build and release cycles: Containers, due to their nature, enhance the Software development lifecycle, from development to continuous delivery of software changes.

Disadvantages

- Data Persistence: Although containers support data persistence through different mechanisms, they are still considered a poor fit for applications that require persistent data (e.g., stateful applications, databases, etc.). To date, deploying such applications as containers is generally not advised.

- Cross-Platform incompatibility: Containers designed to work on one platform, will not work on other platforms. For instance, Linux containers do not work on Windows Operating Systems and vice versa.

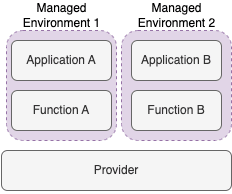

Serverless

Serverless solutions (e.g., AWS Lambda Functions, Microsoft Azure Functions, Google Cloud Functions), introduced by cloud providers fairly recently, are becoming very popular. Despite the name, serverless architectures are not really without servers. Rather, solution providers went deeper into virtualization, removing the need to focus on anything but writing the application code. The code is packaged and deployed into specialized “functions” that take care of managing and running it. Serverless solutions paved the way for new concepts, especially Function as a Service (FaaS), which promotes the creation and deployment of a single function per serverless application (e.g., one function to send verification emails as soon as a new user is created).

The diagram showcases the architecture of serverless solutions. Application code is packaged and uploaded to a function, which represents a virtualized environment that is completely taken care of by the provider.

Although serverless architecture alleviates many of the challenges presented by the previous three infrastructure types, it is still unable to replace any of them due to its many limitations. Serverless architectures do not meet the requirements of all use cases and therefore work best in conjunction with other infrastructure types.

Most serverless solutions are offered by providers, rather than being a solution that can be deployed and managed by anyone, thus making it a less favored solution.

Advantages

- Cost: Users only pay for the resources consumed during the time of execution. Idle functions generally do not use any resources, and therefore the cost of operation is greatly reduced (as opposed to paying for a server that is barely used).

- Scalability: Serverless models are highly scalable by design, and do not require the intervention of the user.

- Faster build and release cycles: Developers only need to focus on writing code and uploading it to a readily available infrastructure.

Disadvantages

- Security: The application code and data are handled by third-party providers. Therefore, security measures are all outsourced to the managing provider. The security concerns are some of the biggest for users of this serverless model, especially when it comes to sensitive applications that have strict security requirements.

- Privacy: The application code and data are executed in environments shared with other applications' code, which poses significant privacy (and security) concerns.

- Vendor Lock-in: Serverless solutions are generally offered by third-party providers (e.g., AWS, Microsoft, Google, etc). Each of these solutions is tailored to the providers’ interests. For instance, a function deployed on AWS Lambda functions may not necessarily work on Azure functions without code modifications. Excessive use and dependence on a provider may lead to serious issues of vendor lock-ins, especially as the application grows.

- Complex Troubleshooting: In contrast with the ease of use and deployment of the code, troubleshooting and debugging the applications are not straightforward. Serverless models do not provide access whatsoever to the underlying infrastructure and provide their generic troubleshooting tools, which may not always be enough.

Docker

Docker is a containerization software that aids in simplifying the workflow, by enabling portable and consistent applications that can be deployed rapidly anywhere, thus allowing software development teams to operate the application in a more optimized way.

Container Images

A container image is nothing but a snapshot of the desired environment. For instance, a sample image may contain a MySQL database, NGINX, or a customized NodeJS RESTful service. A Docker image is a snapshot of an isolated environment, usually created by a maintainer, and can be stored in a Container Repository.

Containers

A container is the running version of an image. A container cannot exist without an image. A container uses an image as a starting point for that process.

Container Registries

A container registry is a service to store and maintain images. Container registries can be either public, allowing any user to download the public images, or private, requiring user authentication to manage the images. Examples of Container Registries include but are not limited to: Docker Hub, Amazon Elastic Container Registry (ECR), and Microsoft Azure Container Registry.

Dockerfiles

A Dockerfile is a text document, interpreted by Docker, and contains all the commands required to build a certain Docker image. A Dockerfile holds all the required commands and allows for the creation of the resulting image using one build command only.

Knowledge Application

To better understand the difference between the concepts above, this tutorial will perform the following:

- Creation of the networking and compute resources on AWS

- Deployment of a simple application on an AWS EC2 machine

- Containerization of the application

- Deployment of the containerized application on an AWS EC2 machine

AWS Infrastructure

The infrastructure resources will be deployed in the region of Ireland (eu-west-1), in the default VPC.

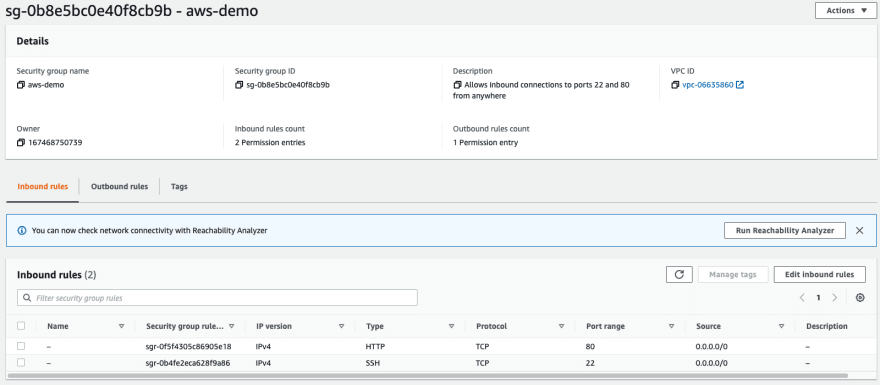

Security Group

A security group allowing inbound connections from anywhere to ports 22 and 80 is needed. To create such a security group, navigate to AWS EC2 —> Security Groups —> Create security group, with the following parameters:

- Security group name: aws-demo

- Description: Allows inbound connections to ports 22 and 80 from anywhere

- VPC: default VPC

- Inbound rules:

  - Rule 1:

    - Type: SSH

    - Source: Anywhere-IPv4

  - Rule 2:

    - Type: HTTP

    - Source: Anywhere-IPv4
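For readers who prefer the command line, the console steps above can be approximated with the AWS CLI. The following is only a sketch and not part of the original walkthrough: it assumes the AWS CLI is already installed and configured with credentials on the machine running it, and that the default VPC is used (which is what allows the group to be referenced by name). The function name create_demo_security_group is hypothetical.

```shell
#!/bin/sh
# Sketch: create the aws-demo security group in the default VPC of eu-west-1
# and open ports 22 (SSH) and 80 (HTTP) to any IPv4 address.
# Assumes a configured AWS CLI; the function name is hypothetical.

create_demo_security_group() {
  region="eu-west-1"
  group_name="aws-demo"

  # Without --vpc-id, the group is created in the region's default VPC.
  aws ec2 create-security-group \
    --region "$region" \
    --group-name "$group_name" \
    --description "Allows inbound connections to ports 22 and 80 from anywhere" \
    || return 1

  # One ingress rule per port, open to all IPv4 addresses.
  for port in 22 80; do
    aws ec2 authorize-security-group-ingress \
      --region "$region" \
      --group-name "$group_name" \
      --protocol tcp \
      --port "$port" \
      --cidr 0.0.0.0/0 \
      || return 1
  done
}
```

Calling create_demo_security_group once should produce the same two inbound rules as the console procedure. In a non-default VPC the group would have to be referenced by its ID instead of its name.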

Key pair

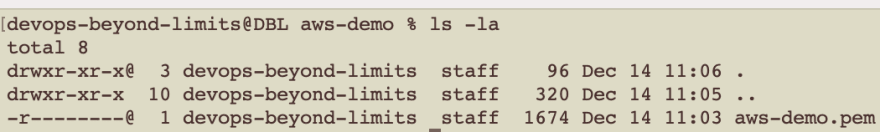

An SSH key pair is required to SSH to Linux EC2 instances. To create a key-pair, navigate to AWS EC2 —> Key pairs —> Create key pair, with the following parameters:

- Name: aws-demo

- Private key file format: .pem

Once created, the private key is downloaded to your machine. SSH refuses private keys that other users can read, so the key's permissions must be restricted; moving it to a hidden directory also keeps it out of the way (the commands below work on macOS and may differ on other operating systems).

# Create a hidden directory (-p also creates the missing parent directory)

mkdir -p ~/.keypairs/aws-demo

# Move the key to the created directory

mv ~/Downloads/aws-demo.pem ~/.keypairs/aws-demo/

# Make the key readable by its owner only

chmod 400 ~/.keypairs/aws-demo/aws-demo.pem

IAM Role

An IAM role contains policies and permissions granting access to actions and resources in AWS. The IAM role is assigned to an AWS resource (in this case the EC2 machine). To create an IAM role, navigate to IAM —> Roles —> Create Role, with the following parameters:

- Trusted entity type: AWS Service

- Common use cases: EC2

- Permissions policies: AdministratorAccess

- Role Name: aws-demo

Click Create role. This role will be assigned to the EC2 machine during creation.

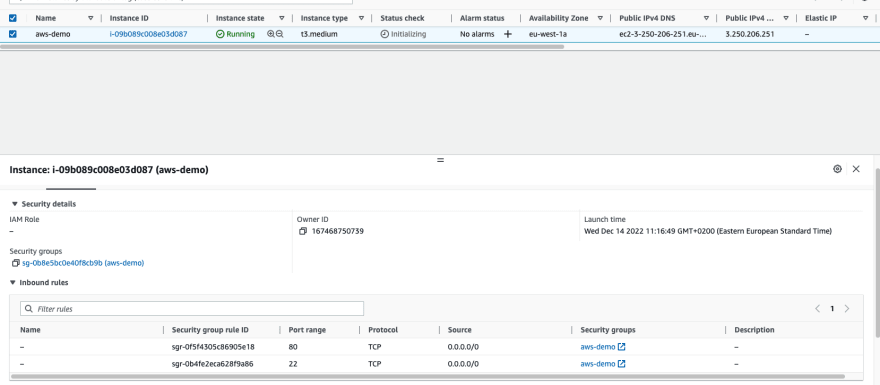

AWS EC2 Machine

An AWS EC2 machine running Ubuntu 20.04 is required. To create it, navigate to AWS EC2 —> Instances —> Launch instances, with the following parameters:

- Name: aws-demo

- AMI: Ubuntu Server 20.04 LTS (HVM), SSD Volume Type

- Instance Type: t3.medium (t3.micro can be used for free tier, but may suffer from performance issues)

- Key pair name: aws-demo

- Network Settings:

  - Select existing security group: aws-demo

- Configure storage: 1 x 25 GiB gp2 Root volume

- Advanced details:

  - IAM instance profile: aws-demo

Leave the rest as defaults and launch the instance.

An EC2 VM is created and is assigned both a private and a public IPv4 address.

In addition, the security group created is attached correctly to the machine. Telnet is one way to ensure the machine is accessible on ports 22 and 80:

# Make sure to replace the machine's IP with the one attributed to your machine

telnet 3.250.206.251 22

telnet 3.250.206.251 80

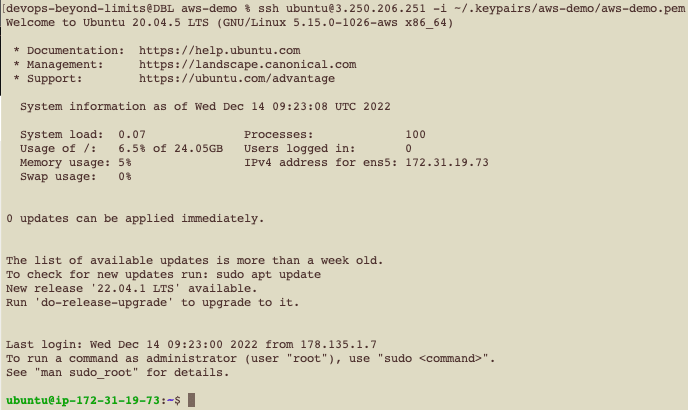
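On machines where telnet is not installed, netcat can perform the same reachability check. The sketch below assumes an nc build that supports the -z (connect without sending data) and -w (timeout in seconds) options, as the OpenBSD variant does; the helper name check_ports is hypothetical.

```shell
#!/bin/sh
# Sketch: check that TCP ports accept connections, as an alternative to telnet.
# Assumes a netcat (nc) that supports -z and -w; check_ports is a hypothetical name.

check_ports() {
  host="$1"
  shift
  failed=0
  # Every remaining argument is treated as a port to probe.
  for port in "$@"; do
    if nc -z -w 5 "$host" "$port"; then
      echo "port $port on $host: open"
    else
      echo "port $port on $host: unreachable"
      failed=1
    fi
  done
  # Non-zero exit status if at least one port could not be reached.
  return "$failed"
}
```

For example, check_ports 3.250.206.251 22 80 probes both ports opened by the security group; as with telnet, the IP must be replaced with the one attributed to your machine.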

Finally, SSH to the machine, using the key pair created: ssh -i ~/.keypairs/aws-demo/aws-demo.pem ubuntu@3.250.206.251

The last step would be to install the AWS CLI:

# Update the package repository

sudo apt-get update

# Install unzip on the machine

sudo apt-get install -y unzip

# Download the zipped package

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

# unzip the package

unzip awscliv2.zip

# Run the installer

sudo ./aws/install

Ensure the AWS CLI is installed by checking the version: aws --version

Application Deployment on the EC2 machine

Application Code

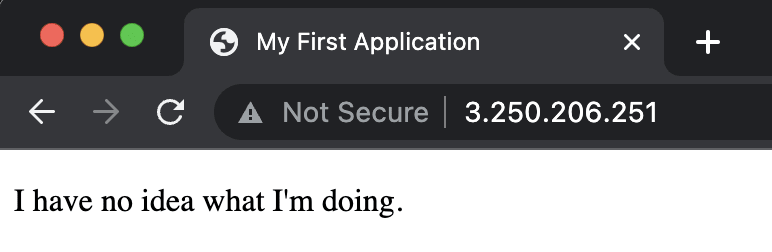

The application to be deployed is a simple HTML document:

<!DOCTYPE html>

<html>

<head>

<title>My First Application</title>

</head>

<body>

<p>I have no idea what I'm doing.</p>

</body>

</html>

Apache2 Installation

A Webserver is needed to serve the web application. To install Apache2:

- Update the local package index to reflect the latest upstream changes:

sudo apt-get update - Install the Apache2 package:

sudo apt-get install -y apache2 - Check if the service is running:

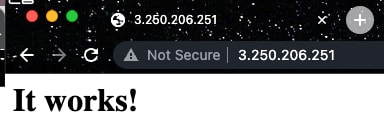

sudo service apache2 status - Verify that the deployment worked by hitting the public IP of the machine:

Application Deployment

To deploy the application, perform the following steps:

# Create a directory

sudo mkdir /var/www/myfirstapp

# Change the ownership to www-data

sudo chown -R www-data:www-data /var/www/myfirstapp

# Change the directory permissions

sudo chmod -R 755 /var/www/myfirstapp

# Create the index.html file and paste the code in it

sudo nano /var/www/myfirstapp/index.html

# Change the ownership to www-data

sudo chown -R www-data:www-data /var/www/myfirstapp/index.html

# Create the log directory

sudo mkdir /var/log/myfirstapp

# Change the ownership of the directory

sudo chown -R www-data:www-data /var/log/myfirstapp/

Virtual Host

- Create the virtual host file:

sudo nano /etc/apache2/sites-available/myfirstapp.conf

- Paste the following:

<VirtualHost *:80>

DocumentRoot /var/www/myfirstapp

ErrorLog /var/log/myfirstapp/error.log

CustomLog /var/log/myfirstapp/requests.log combined

</VirtualHost>

- Enable the configuration:

# Enable the site configuration

sudo a2ensite myfirstapp.conf

# Disable the default configuration

sudo a2dissite 000-default.conf

# Test the configuration

sudo apache2ctl configtest

# Restart apache

sudo systemctl restart apache2

- Perform a request on the server. The response will now return the HTML document created:

In conclusion, to deploy the application on the EC2 machine, several tools had to be deployed and configured. While this is manageable for one simple application, things won’t be as easy and straightforward when the application grows in size. For instance, assume an application of 5 components must be deployed. Managing each component on the server will be cumbersome. The next part demonstrates how containerization can alleviate such problems.

- Remove the Apache web server:

sudo apt-get purge -y apache2

Application Deployment using containers

Docker Installation

Install Docker on the machine:

# Update the package index and install the required packages

sudo apt-get update

sudo apt-get install -y ca-certificates curl gnupg lsb-release

# Add Docker’s official GPG key:

sudo mkdir -p /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

# Set up the repository

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# Update the package index again

sudo apt-get update

# Install the latest version of docker

sudo apt-get install -y docker-ce docker-ce-cli containerd.io docker-compose-plugin

# Add the current user to the docker group

# (to run Docker commands without sudo)

sudo usermod -aG docker $USER

To validate that Docker is installed and that the group change has been applied, restart the SSH session and list the containers: docker ps -a. A response similar to the one below (a container list returned without a permission error) indicates a successful installation.

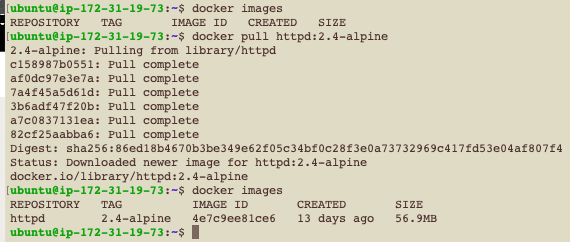

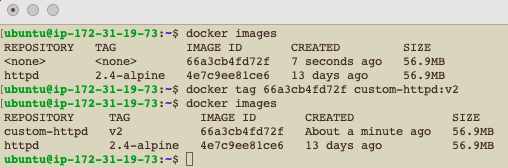

Base Image

Several official Docker images are curated and hosted on Docker Hub, serving as starting points for users who want to build on top of them. One of them is the official repository for the Apache server, which contains all the information necessary to deploy and operate the image. Start by downloading the image to the server: docker pull httpd:2.4-alpine. Here, 2.4-alpine is the image tag, an identifier that distinguishes the different image versions available. After the download succeeds, list the available images: docker images. The screenshot below shows that the image was downloaded successfully.

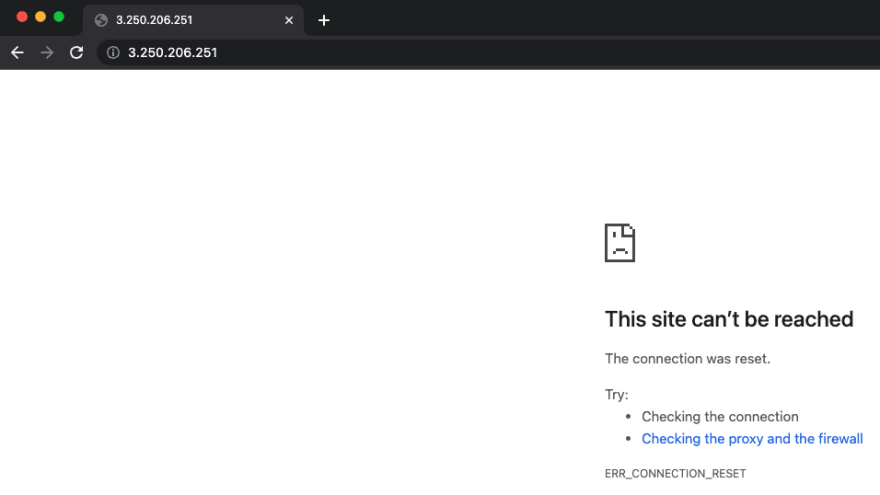

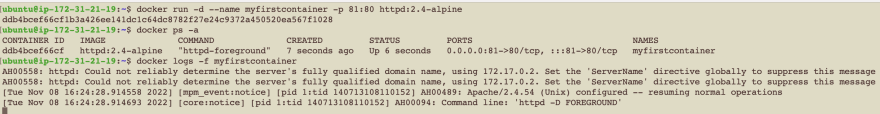

Next, it is time to run a container from this image. The goal is to run the Apache server on port 80, and have it accept requests. Create a Docker container using the command:

docker run -d --name myfirstcontainer -p 80:80 httpd:2.4-alpine

The command above is explained as follows:

- run: Instructs Docker to run a container.

- -d: Flag to run the container in the background (detached mode). Omitting this flag will cause the container to run in the foreground.

- --name: A custom name can be given to the container. If no name is given, Docker will assign a random name to the container.

- -p: Publishes a container's port to the host. By default, if the ports are not published, containers cannot be reached from outside the Docker network. The -p flag maps the container’s internal ports to those of the host (in the format host port:container port), making the container reachable from outside the network. In the example above, the Apache server listens internally on port 80, and the command instructs Docker to map the host’s port 80 to the container’s port 80. The container can now be reached via the machine’s public IP on port 80, and Docker forwards the traffic to the container’s port.

Ensure that the container is successfully running: docker ps -a

Monitor the container logs: docker logs -f myfirstcontainer

Using any browser, attempt to make a request to the container, using the machine’s public IP and port 80: http://3.250.206.251:80

Customize the Base Image

Now that the base image is successfully deployed and running, it is time to customize it. The official documentation presents clear instructions on the different ways the image can be customized, namely, creating custom Virtual Hosts, adding static content, adding custom certificates, etc.

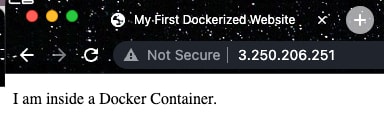

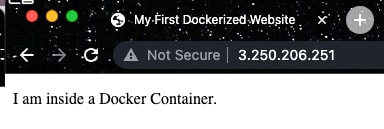

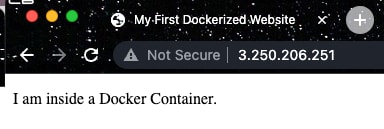

In this example, the following simple HTML page representing a website will be added, thus creating a custom image.

<!DOCTYPE html>

<html>

<head>

<title>My First Dockerized Website</title>

</head>

<body>

<p>I am inside a Docker Container.</p>

</body>

</html>

The official documentation on Docker Hub states that the default location for static content is /usr/local/apache2/htdocs/. To add the page, open an interactive sh shell on the container: docker exec -it myfirstcontainer sh. Once inside the container, navigate to the designated directory: cd /usr/local/apache2/htdocs/. The directory already contains a file named index.html, which holds the default Apache page loaded above. Replace its content with the custom HTML page above, and hit the container again: http://3.250.206.251:80

Clearly, the image shows that the changes have been reflected.

Create a Custom Image

Unfortunately, the changes performed will not persist once the container is removed. By default, a container's filesystem is ephemeral: data written at runtime is lost as soon as the container is deleted and recreated, for example when a failed container is replaced by a fresh one. To verify it, remove the container and start it again:

docker rm -f myfirstcontainer

docker ps -a

docker run -d --name myfirstcontainer -p 80:80 httpd:2.4-alpine

Now hit the server again http://3.250.206.251:80. The changes performed disappeared. To persist the changes, a custom image must be built. The custom image is a snapshot of the container after adding the custom website. Repeat the steps above to add the HTML page, and ensure the container is returning the new page again.

To create a new local image from the customized running container, run: docker commit myfirstcontainer.

A new image with no name and no tag has just been created by the docker commit command. Name and tag the image: docker tag <image ID> custom-httpd:v2. The image ID is the one generated by Docker (it appears in the docker images output), while the name and tag can be anything.
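As a possible shortcut, docker commit also accepts a repository name and tag as an optional second argument, so the commit and tag steps can be combined into one. A minimal sketch follows; the helper name snapshot_container is hypothetical and is not a Docker command.

```shell
#!/bin/sh
# Sketch: snapshot a container into a named, tagged image in a single step.
# snapshot_container is a hypothetical helper name.

snapshot_container() {
  container="${1:-}"   # e.g. myfirstcontainer
  image="${2:-}"       # e.g. custom-httpd:v2

  if [ -z "$container" ] || [ -z "$image" ]; then
    echo "usage: snapshot_container <container> <name:tag>" >&2
    return 2
  fi

  # Syntax: docker commit CONTAINER [REPOSITORY[:TAG]]
  docker commit "$container" "$image"
}
```

Running snapshot_container myfirstcontainer custom-httpd:v2 would leave an image that already carries the custom-httpd:v2 name, which makes the separate docker tag step unnecessary.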

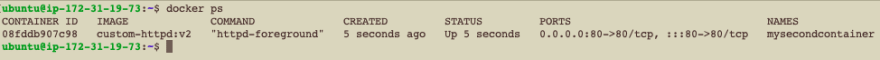

Remove the old container, and create a new one using the new image:

docker rm -f myfirstcontainer

docker run -d --name mysecondcontainer -p 80:80 custom-httpd:v2

A new docker container named mysecondcontainer is now running using the custom-built image. Hitting the machine on port 80 should return the new HTML page now no matter how many times the container is destroyed and created.

The custom image is now located on the Virtual Machine. However, storing the image on the VM alone is not a best practice:

- Inability to efficiently share the image with other developers working on it.

- Inability to efficiently download and run the image on different servers.

- Risk of losing the image, especially if the VM is not well backed up.

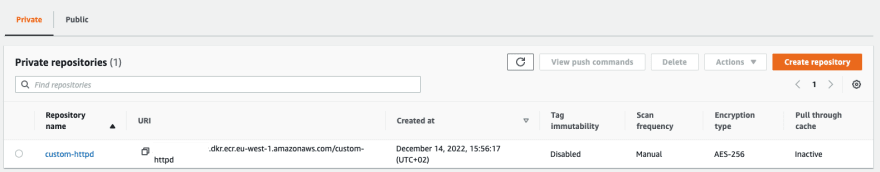

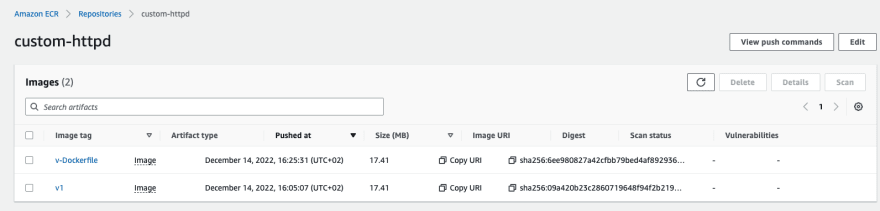

A better solution would be to host the image in a Container Registry. In this tutorial, AWS ECR will be used to store the image. To create a Container repository on AWS ECR, navigate to Amazon ECR —> Repositories —> Private —> Create repository, with the following parameters:

- Visibility Settings: Private

- Repository name: custom-httpd

Leave the rest as defaults and create the repository.

Now that the repository is successfully created, perform the following to push the image from the local server to the remote repository:

- First, login to the ECR from the machine:

aws ecr get-login-password --region eu-west-1 | docker login --username AWS --password-stdin <AWS_ACCOUNT_ID>.dkr.ecr.eu-west-1.amazonaws.com

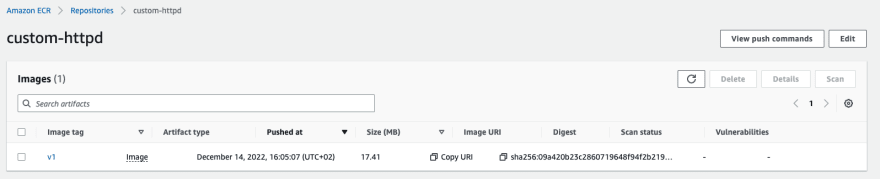

The repository created on AWS ECR has a different full name than the local image. Therefore, the image must be tagged with the repository's name before it is pushed:

# Tag the image with the correct repository name

docker tag custom-httpd:v2 <AWS_ACCOUNT_ID>.dkr.ecr.eu-west-1.amazonaws.com/custom-httpd:v1

# Push the image

docker push <AWS_ACCOUNT_ID>.dkr.ecr.eu-west-1.amazonaws.com/custom-httpd:v1
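Because the full ECR image reference is long and easy to mistype, it can help to assemble it from its parts. The sketch below shows one way to do that; the function names ecr_image_uri and push_to_ecr are hypothetical, and the account ID has to be supplied by the reader.

```shell
#!/bin/sh
# Sketch: build the full ECR image reference from its parts, then tag and push.
# Function names are hypothetical; the account ID must be supplied by the reader.

# Prints <account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>
ecr_image_uri() {
  account_id="$1"
  region="$2"
  repository="$3"
  tag="$4"
  printf '%s.dkr.ecr.%s.amazonaws.com/%s:%s\n' \
    "$account_id" "$region" "$repository" "$tag"
}

# Tags a local image with its ECR reference and pushes it.
# Assumes docker login against the registry has already succeeded.
push_to_ecr() {
  local_image="$1"    # e.g. custom-httpd:v2
  remote_image="$2"   # e.g. the output of ecr_image_uri
  docker tag "$local_image" "$remote_image" && docker push "$remote_image"
}
```

With the account ID stored in a variable such as AWS_ACCOUNT_ID (an assumed name), the two commands above become push_to_ecr custom-httpd:v2 "$(ecr_image_uri "$AWS_ACCOUNT_ID" eu-west-1 custom-httpd v1)".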

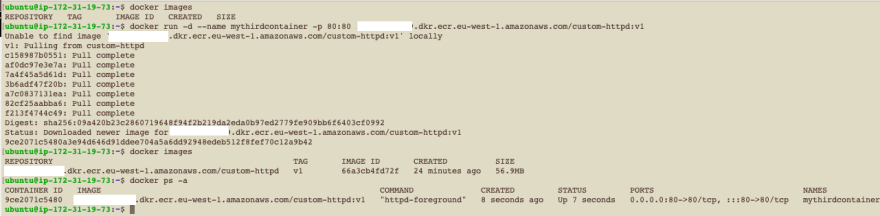

Clearly, we were able to push the image to AWS ECR. Now, to finally test that the new custom image is successfully built and pushed, attempt to create a container from the image located in the Container Registry.

# Delete all the containers from the server

docker rm -f $(docker ps -a -q)

# Delete all the images from the server

docker rmi -f $(docker images -q)

# List all the available images and containers (should return empty)

docker images

docker ps -a

Create a third container, but this time, reference the image located in the ECR: docker run -d --name mythirdcontainer -p 80:80 <AWS_ACCOUNT_ID>.dkr.ecr.eu-west-1.amazonaws.com/custom-httpd:v1

The screenshot shows that the image was not found locally (on the server), and was therefore fetched from the ECR.

Finally, hit the server again http://3.250.206.251:80

The server returns the custom page created. Cleaning up the server from the applications is as simple as deleting all the containers and all the images:

# Delete all the containers from the server

docker rm -f $(docker ps -a -q)

# Delete all the images from the server

docker rmi -f $(docker images -q)

# List all the available images and containers (should return empty)

docker images

docker ps -a
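One caveat with this kind of cleanup: when there is nothing to delete, the command substitution expands to nothing, and docker rm or docker rmi exits with an error about missing arguments. A minimal sketch that skips each step when its list is empty is shown below; the function name docker_cleanup is hypothetical.

```shell
#!/bin/sh
# Sketch: remove all containers and images, skipping a step when its list is empty.
# docker_cleanup is a hypothetical helper name.

docker_cleanup() {
  # -q prints only the IDs, one per line.
  containers=$(docker ps -a -q)
  if [ -n "$containers" ]; then
    # Unquoted on purpose: each ID must become a separate argument.
    docker rm -f $containers
  fi

  images=$(docker images -q)
  if [ -n "$images" ]; then
    docker rmi -f $images
  fi
}
```

Calling docker_cleanup on a server that is already clean simply does nothing, which makes it safe to run repeatedly.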

Create a Docker image using Dockerfiles

Creating images from existing containers is one intuitive way. However, such an approach may prove to be inefficient, and inconsistent:

- Docker images may need to be built several times a day.

- A Docker image may require several complex commands to be built.

- Difficult to maintain as the number of services and teams grows.

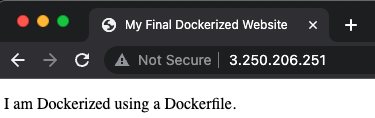

Dockerfiles are considered a better alternative, capable of providing more consistency and allowing for automating the build steps. To better understand Dockerfiles, the rest of this tutorial attempts to Containerize the application, using Dockerfiles.

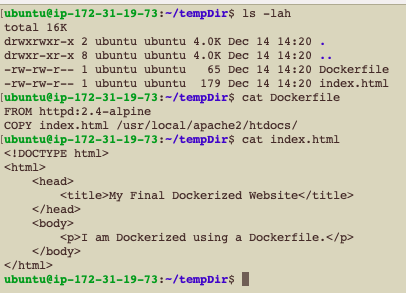

To do so, the application code and the Dockerfile must be placed together:

- Create a temporary directory:

mkdir ~/tempDir

- Place the application code inside the directory, in a file called index.html:

<!DOCTYPE html>

<html>

<head>

<title>My Final Dockerized Website</title>

</head>

<body>

<p>I am Dockerized using a Dockerfile.</p>

</body>

</html>

- Create a Dockerfile next to the index.html file, with the following content:

FROM httpd:2.4-alpine

COPY index.html /usr/local/apache2/htdocs/

The Dockerfile above has two instructions:

- Use httpd:2.4-alpine as the base image

- Copy index.html from the server to /usr/local/apache2/htdocs/ inside the image

The resultant directory should look as follows:

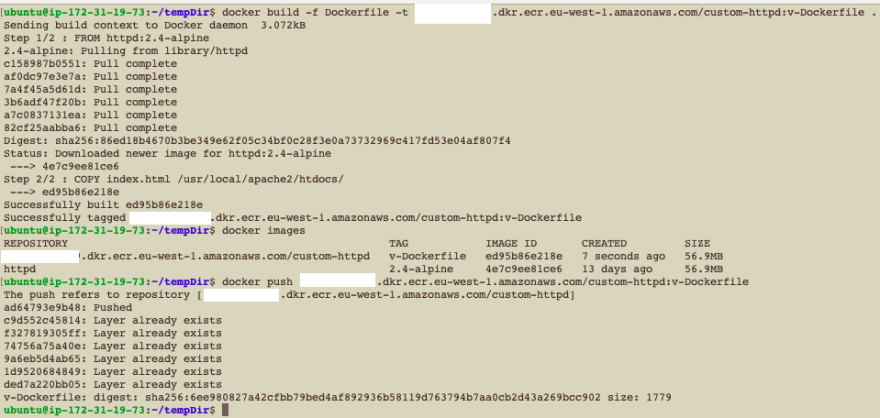
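The build context described above can also be generated by a short script, which keeps it reproducible. The sketch below assumes a POSIX shell; the function name make_build_context is hypothetical, and the target directory defaults to the ~/tempDir used in this tutorial.

```shell
#!/bin/sh
# Sketch: create a Docker build context containing index.html and a Dockerfile.
# make_build_context is a hypothetical helper name.

make_build_context() {
  dir="${1:-$HOME/tempDir}"
  mkdir -p "$dir" || return 1

  # The static page that Apache will serve.
  cat > "$dir/index.html" <<'EOF'
<!DOCTYPE html>
<html>
<head>
<title>My Final Dockerized Website</title>
</head>
<body>
<p>I am Dockerized using a Dockerfile.</p>
</body>
</html>
EOF

  # Base image plus a single COPY into Apache's document root.
  cat > "$dir/Dockerfile" <<'EOF'
FROM httpd:2.4-alpine
COPY index.html /usr/local/apache2/htdocs/
EOF
}
```

After running make_build_context, the target directory holds exactly the two files expected by the build, and the build command can be run from inside it.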

To build the image using a Dockerfile, perform the following command: docker build -f Dockerfile -t <AWS_ACCOUNT_ID>.dkr.ecr.eu-west-1.amazonaws.com/custom-httpd:v-Dockerfile .

Breaking down the command above:

- docker build: Docker command to build a Docker image from a Dockerfile.

- -f Dockerfile: The path and filename of the Dockerfile (it can be named anything).

- -t <AWS_ACCOUNT_ID>.dkr.ecr.eu-west-1.amazonaws.com/custom-httpd:v-Dockerfile: The name and tag of the resulting image.

- .: The path to the build context, that is, the set of files available to the build.

Push the image to the ECR: docker push <AWS_ACCOUNT_ID>.dkr.ecr.eu-west-1.amazonaws.com/custom-httpd:v-Dockerfile

Finally, to simulate a fresh installation of the image, remove all the containers and images from the server, and create a final container from the newly pushed image:

# Remove existing containers

docker rm -f $(docker ps -a -q)

# Remove the images

docker rmi -f $(docker images -q)

# Create the final container

docker run -d --name myfinalcontainer -p 80:80 <AWS_ACCOUNT_ID>.dkr.ecr.eu-west-1.amazonaws.com/custom-httpd:v-Dockerfile

Hit the machine via its IP: http://3.250.206.251:80


Nicolas El Khoury | Sciencx (2022-12-19T07:09:30+00:00) Web Application Deployment on AWS. Retrieved from https://www.scien.cx/2022/12/19/web-application-deployment-on-aws/
