This content originally appeared on Level Up Coding - Medium and was authored by Fahim ul Haq

ChatGPT wrote this limerick that effectively captures the excitement and hopes around artificial intelligence (AI) and machine learning (ML).

Ever since OpenAI launched the new application ChatGPT, people around the world have been using it for various purposes — from generating code snippets and essays to conducting research.

The AI research laboratory behind ChatGPT, OpenAI, states their mission is to create AI that “benefits all of humanity.” We’ve yet to see whether OpenAI will fulfill such an ambitious mission statement, but there’s no question that ChatGPT has sparked the interest of software developers.

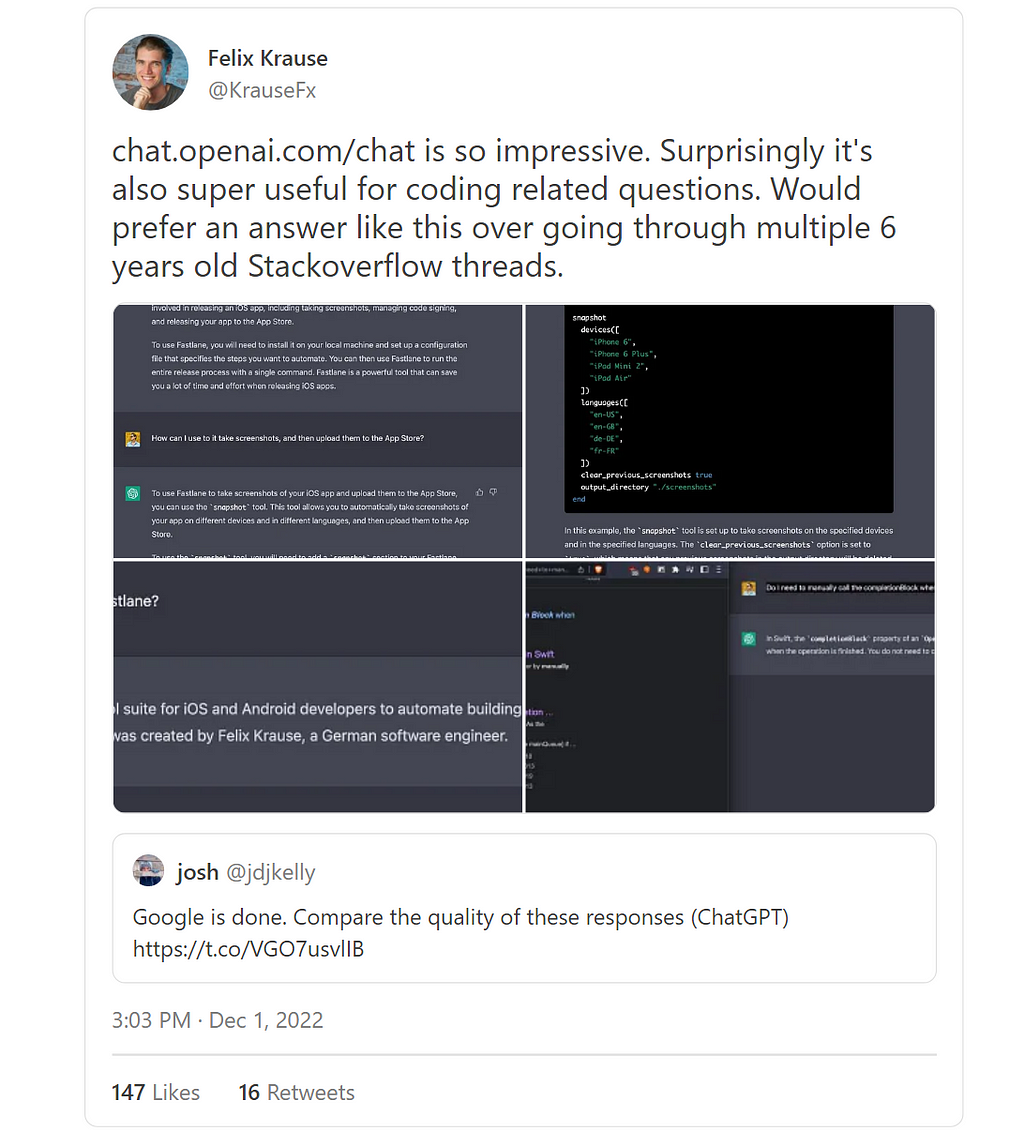

From analyzing and debugging code, to generating code based on problem statements, ChatGPT has already begun to help with various common development use cases. iOS developer Felix Krause explains one such use case:

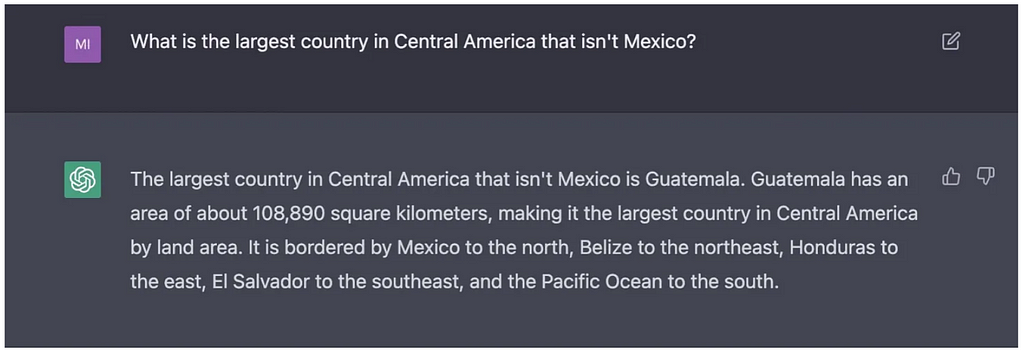

On the other hand, ChatGPT is hardly perfect. While it can respond directly to various human prompts, it’s also capable of providing inaccurate answers to simple questions. Senior Editor at Mashable Mike Pearl points out several instances where ChatGPT has been found to be incorrect, including this response (which I believe should have been Nicaragua):

Despite its limitations, ChatGPT has stirred up a familiar anxiety that often comes with advancements in AI technology: will AI be our new overlord?

…just kidding. The real anxiety ChatGPT has stirred up is this: will AI take developer jobs?

AI like ChatGPT will change the landscape of software development — but not in the way that many fear. As a software engineer and developer learning advocate, I believe ChatGPT can help us make better software, but it can’t replace developer jobs. Here’s why.

Why we should celebrate ChatGPT

ChatGPT is not the first machine learning tool to serve as a coding assistant.

Autocomplete has been helping us type code and even email faster for several years. We also have GitHub Copilot, which uses OpenAI Codex, a descendant of GPT-3, to suggest improvements and flag potential problems in our code. As a coding assistant, ChatGPT distinguishes itself from Copilot with its ability to formulate detailed responses to conversational prompts, as opposed to basic pre-programmed commands.

Making coding more accessible

Throughout the history of computer science, we’ve seen advancements in technology that have enabled more people to become developers. Largely thanks to methods of abstraction, it has become easier for more and more people to leverage complex technologies which were once only understood by highly specialized engineers.

For instance, high-level programming languages, in tandem with compilers and IDEs, allow today’s engineers to write human-readable code without having to write machine code (which is in binary, and not human-friendly). Similarly, the improvement of AI assistants like GitHub Copilot is a promising sign that we’re still moving in a direction that can make coding a more accessible and enjoyable experience for all.

Benefitting from abstraction doesn’t necessarily mean that developers would be any less skilled or knowledgeable. Similarly, not knowing how a car engine works doesn’t make you a bad driver, and using autocomplete doesn’t make you a bad engineer. We can still build wonderful applications while benefiting from high-level languages like Java, or machine learning tools like ChatGPT.

ChatGPT as research assistant

ChatGPT has been trained on over 45 terabytes of text data from various sources, including CommonCrawl, WebText2, and code in Python, JavaScript, and CSS.

ChatGPT generates responses based on this vast training dataset, and conveniently does so in response to human input. The ability to interpret human input can make ChatGPT a helpful research assistant. While its results need validation, it can often provide accurate answers that save us from scouring Google search results or StackOverflow. It can also provide further explanations that aid coders in learning and understanding new concepts.

This benefit can help us streamline our search for new and relevant knowledge while coding.

How ChatGPT can transform our lives by reducing tedium

ChatGPT has the potential to make coding more productive and less bug-prone. As it accommodates more complex requirements, we can look forward to it helping eliminate grunt work and speed up development and testing.

As assistants such as ChatGPT evolve, many of the tedious tasks that have occupied developers could go away in the next decade, including:

- Automating unit tests

- Generating test cases based on parameters

- Analyzing code to suggest security best practices

- Automating QA
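One item above, generating test cases based on parameters, can be illustrated with a minimal Python sketch. It is not how ChatGPT or any particular tool works; it only shows the kind of repetitive test scaffolding that could be automated. The function `clamp`, the helper `generate_cases`, and the sample values are all hypothetical names chosen for illustration.

```python
import itertools


def clamp(value, low, high):
    """Hypothetical function under test: restrict value to [low, high]."""
    return max(low, min(value, high))


def generate_cases(values, lows, highs):
    """Yield every (value, low, high) combination whose range is valid."""
    for value, low, high in itertools.product(values, lows, highs):
        if low <= high:
            yield value, low, high


def run_generated_tests():
    """Check two properties of clamp on every generated case; return the case count."""
    count = 0
    for value, low, high in generate_cases([-5, 0, 5, 50], [0, 1], [1, 10]):
        result = clamp(value, low, high)
        # The result must always land inside the requested range.
        assert low <= result <= high
        # A value already inside the range must come back unchanged.
        if low <= value <= high:
            assert result == value
        count += 1
    return count


if __name__ == "__main__":
    print(run_generated_tests(), "generated cases passed")
```

Listing a few parameter values and letting the code enumerate the combinations covers many cases with very little typing. Deciding which properties are worth asserting is still the developer's job.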

Freeing developers from menial tasks lets us think about more complex issues and optimizations, and even higher-level concerns such as an application’s implications for its users or business.

Some people think assistant tools make developers lazy. I disagree. Once you’ve learned something, there’s no cognitive or productivity benefit to retyping the same line of code over and over again. If the best code for a certain task has already been tried and tested, why reinvent the wheel? Besides, the problem you’re solving is probably more complicated than copying/pasting a few code snippets.

This benefit is analogous to how APIs simplified our lives as devs. For instance, the payment processing that Stripe now hides behind a single API once required developers to write 1,000 lines of code to process payments. (In case you missed last week’s analysis of Stripe’s System Design, you can find it on my Engineering Enablement Newsletter.)

Where ChatGPT falls short

ChatGPT is not magic. It looks at a huge corpus of data to generate what it considers the best responses based on existing code. Accordingly, it has its limitations.

We still need human judgment

ChatGPT is a useful tool, but it surely doesn’t replace human judgment. Its learning models are based on consuming existing content — some of which contains mistakes and errors.

No matter what code snippet is generated by ChatGPT, you still need to apply your judgment to ensure it’s working for your problem. ChatGPT generates snippets based on code that’s been written before, so there’s no guarantee that the generated code is right for your particular problem. As with any snippet you find on StackOverflow, you still have to make sure your intention was fully understood, and that the code snippet is suitable for your program.
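As a minimal sketch of what applying judgment can look like in practice, the Python example below checks a snippet against a handful of known cases before trusting it. The function `generated_is_leap_year` is a hypothetical stand-in for assistant output, written to be plausible but incomplete. It is not an actual ChatGPT response, and `find_failures` and `CASES` are likewise illustrative names.

```python
def generated_is_leap_year(year):
    """Hypothetical stand-in for a generated snippet (deliberately incomplete)."""
    return year % 4 == 0


def reviewed_is_leap_year(year):
    """Version after human review: applies the full Gregorian rule."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def find_failures(func, cases):
    """Return (input, expected, actual) triples where func disagrees with expectations."""
    failures = []
    for year, expected in cases:
        actual = func(year)
        if actual != expected:
            failures.append((year, expected, actual))
    return failures


# Known answers, including century years that a naive rule gets wrong.
CASES = [(2024, True), (2023, False), (2000, True), (1900, False)]

if __name__ == "__main__":
    print("generated:", find_failures(generated_is_leap_year, CASES))
    print("reviewed:", find_failures(reviewed_is_leap_year, CASES))
```

The naive version passes the everyday cases and fails only on 1900, which is the kind of edge case that is easy to miss when a snippet merely looks right.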

In the end, we can’t blindly copy/paste code snippets from ChatGPT, and the consequences of doing so could be severe. On that note…

ChatGPT can’t problem solve

Problem-solving is the essential skill that developers need to have, which is why machine learning tools won’t have a dire impact on developer jobs.

As a developer, your job consists of understanding a problem, coming up with several potential solutions, then using a programming language to translate the optimal solution for a computer or compiler. While machine learning tools can help us type code faster, they can’t do problem-solving for us.

While ChatGPT can enable a lot of people to become better, more efficient developers, it’s not capable of building large-scale applications for humans. In the end, we still need human judgment to discern between good and bad code. Even if we’re receiving help when writing code, we’re not running out of big problems to solve.

ChatGPT doesn’t have multiple perspectives

ChatGPT has a limited perspective. Its suggestions are based on the data it is trained on, and this comes with many risks.

For one, if ChatGPT mistakes a highly repeated code snippet for a best practice, it can suggest and perpetuate a vulnerability or inefficiency. While it didn’t involve AI, one such instance snowballed into a large incident at Microsoft. Years ago, Microsoft released documentation about a toy driver they created. Even though they indicated that it was a toy driver and not actually the best practice to build a real driver, everyone ignored the warning and started using it anyway. This took Microsoft years to clean up!

ChatGPT is fully capable of generating incorrect answers, and it delivers them with the same confidence as its correct ones. Unfortunately, there’s no metric that helps manage expectations about the potential error in a response. This is a disadvantage compared with other sources we visit for guidance. Sites like StackOverflow or GitHub are capable of giving us more multidimensional data. We can validate others’ suggestions by looking at their context, responses, upvotes, etc. In this sense, other sources are better equipped to touch on the nuances of real-world problems.

ChatGPT’s limited perspective can make it something of an echo chamber, which can be very problematic.

We’ve long known that machine learning algorithms can inherit bias, and as a result, AI is vulnerable to adopting harmful biases, from racism and sexism to xenophobia. Despite guardrails that OpenAI has implemented, ChatGPT is also capable of inheriting bias. (If you’re interested, reporter Davey Alba discusses ChatGPT’s susceptibility to bias on Bloomberg.)

All in all, we have to take every ChatGPT response with a huge grain of salt — and sometimes, it might be easier to just write your code from scratch than to work backwards to validate a generated code snippet.

ChatGPT can’t get you hired

Though it can generate a code snippet, ChatGPT is not the end of coding interviews. Besides, the majority of the coding interview consists of problem-solving, not writing code. Writing code will only take about 5–10 minutes of a 45-minute coding interview. (If you’re curious about how to prepare for interviews efficiently, check out my full breakdown of the FAANG coding interview.)

The rest of the coding interview requires you to give other hireable signals. You still need to ask the right questions to articulate and understand your problem, and narrate your thought process to demonstrate how you narrow your solution space. ChatGPT can’t help you with any of this, yet these critical thinking and problem-solving skills carry just as much weight in your hireability as your coding competency.

Looking ahead

IDEs and API efficiencies didn’t decrease the demand for developers, and neither will ChatGPT. Machine learning tools help us do tasks more efficiently, but they don’t replace our need to think. Sometimes they’re right and other times they’re incredibly (and hilariously) wrong.

While assistants like Siri and Alexa can help us with basic tasks, they can’t help us with complex efforts like making huge life changes. Similarly, ChatGPT can’t help us with complex problems, nor can it replace innovation. But these technologies do help alleviate some of the menial tasks that distract us from tackling more ambitious problems (such as improving AI technologies).

As a developer, you shouldn’t stop investing in your learning or your long-term coding career. If anything, be open to incorporating these tools into your life in the future. If you are interested, you can learn how to leverage AI yourself.

If you’re curious about AI technologies, there has never been a better time to start learning ML. With Educative Premium, you can access these terrific ML courses, along with hundreds of other courses, projects, and Skill Paths created by industry experts:

- Become a Machine Learning Engineer (Skill Path)

- Using OpenAI API for Natural Language Processing in Python (Course)

- Build a Web Assistant with OpenAI GPT-3 (Project)

Try it out and let me know what you think!

Now, over to you: What do you think of ChatGPT? How have you seen it used to make coding more efficient?

Happy learning!

This post is originally from Grokking the Tech Career, a free newsletter available on Substack from Fahim ul Haq, the CEO and Co-founder of Educative, the world’s best learning platform for software developers.


Is ChatGPT our new AI overlord? (No, and here’s how it can help devs) was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Fahim ul Haq | Sciencx (2023-01-05T15:33:51+00:00) Is ChatGPT our new AI overlord? (No, and here’s how it can help devs). Retrieved from https://www.scien.cx/2023/01/05/is-chatgpt-our-new-ai-overlord-no-and-heres-how-it-can-help-devs/
