This content originally appeared on TPGi and was authored by Aaron Farber

A Comparison of Automated Accessibility Testing Tools

TPGi’s ARC Platform (Accessibility Resource Center) provides the tools and resources to carry out every area of an accessibility program, combining manual and automated accessibility testing in one location.

At its core, automated accessibility testing is a program that runs a set of accessibility rules against the user interface of a website, web application, or key user journey and generates a report of its evaluation.

Collectively, this set of automated accessibility rules is known as the accessibility rules engine; it provides the logic that powers automated accessibility testing.
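To make the idea of a rules engine concrete, here is a minimal TypeScript sketch. It is not how ARC Rules or Axe are implemented: the `UINode` tree is a hypothetical, simplified stand-in for the DOM, and the rule id `img-alt` is made up for illustration. The "engine" is just a list of rules, each a function over one element, applied to every element to build a report.

```typescript
// Hypothetical, simplified element model; real engines inspect the live DOM.
interface UINode {
  tag: string;
  attrs: Partial<Record<string, string>>;
  children: UINode[];
}

// A rule checks one element and returns a message if it finds a problem, otherwise null.
interface Rule {
  id: string;
  check: (node: UINode) => string | null;
}

interface Finding {
  ruleId: string;
  tag: string;
  message: string;
}

// Collect every element in the tree, depth first.
function flatten(node: UINode, out: UINode[] = []): UINode[] {
  out.push(node);
  for (const child of node.children) flatten(child, out);
  return out;
}

// The "rules engine": run every rule against every element and gather the results.
function runRules(root: UINode, rules: Rule[]): Finding[] {
  const report: Finding[] = [];
  for (const node of flatten(root)) {
    for (const rule of rules) {
      const message = rule.check(node);
      if (message !== null) report.push({ ruleId: rule.id, tag: node.tag, message });
    }
  }
  return report;
}

// Example rule (hypothetical id): every img element needs an alt attribute.
const imgAlt: Rule = {
  id: "img-alt",
  check: (node) =>
    node.tag === "img" && node.attrs.alt === undefined ? "img element has no alt attribute" : null,
};

const page: UINode = {
  tag: "body",
  attrs: {},
  children: [
    { tag: "img", attrs: { src: "logo.png" }, children: [] },
    { tag: "img", attrs: { src: "chart.png", alt: "Sales by quarter" }, children: [] },
  ],
};

console.log(runRules(page, [imgAlt])); // one finding: the logo image
```

Keeping rules as small, independent functions is what lets an engine's author decide, rule by rule, what gets reported.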

The Web Content Accessibility Guidelines (WCAG) is the prevailing standard for testing web accessibility. At the time of writing, WCAG’s most recent version was 2.1; conformance at Level AA requires meeting 50 Success Criteria, WCAG’s testable requirements.

Fundamentally, a rules engine (and its author) decides which elements to identify as WCAG violations and bring to the attention of testers and engineers.

These decisions deeply affect organizations: how they approach accessibility testing, how they grow their accessibility knowledge, and above all, the user experience they provide for disabled people.

To test content and view results from TPGi’s own rules engine, ARC Rules, use:

- Free Chrome extension (ARC Toolkit): Run tests on the content currently open in your browser and visually inspect ARC’s results. Easily scan pages that require authentication, specific points in a user journey (for example, after you’ve opened a dialog), or local file URLs.

- Web API: Run tests from the command line via shell scripts. Slice and dice ARC Rules’ results for custom reports or for export to your preferred bug tracker.

- Node SDK: Integrate ARC Rules into your CI/CD build processes without having to call ARC’s servers outside your network.

- Web platform (ARC Monitoring): Automate accessibility monitoring for domains and key user journeys and track progress over time, with access to all the ARC Platform’s tools and resources.
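As an illustration of slicing results for a custom report or a bug tracker export (the Web API use case above), the TypeScript sketch below works on an already-fetched, already-parsed list of results. The `Result` shape, its field names, and the rule ids in the sample are hypothetical; this is not ARC’s actual Web API response format, so the fields would need to be mapped to whatever the API really returns.

```typescript
// Hypothetical result shape; not the actual ARC Web API format.
interface Result {
  rule: string;
  severity: "error" | "alert";
  url: string;
}

// Count results per severity and rule, e.g. for a summary table in a custom report.
function countByRule(results: Result[]): Record<string, number> {
  const counts: Record<string, number> = {};
  for (const r of results) {
    const key = `${r.severity}:${r.rule}`;
    counts[key] = (counts[key] || 0) + 1;
  }
  return counts;
}

// Render results as CSV, a format many bug trackers can import.
function toCsv(results: Result[]): string {
  // Quote every field and double any embedded quotes, per common CSV convention.
  const quote = (s: string) => `"${s.replace(/"/g, '""')}"`;
  const rows = results.map((r) => [r.rule, r.severity, r.url].map(quote).join(","));
  return ["rule,severity,url"].concat(rows).join("\n");
}

// Made-up sample data for illustration.
const sample: Result[] = [
  { rule: "img-alt", severity: "error", url: "https://example.com/" },
  { rule: "img-alt", severity: "error", url: "https://example.com/about" },
  { rule: "strikethrough", severity: "alert", url: "https://example.com/pricing" },
];

console.log(countByRule(sample)); // error:img-alt → 2, alert:strikethrough → 1
console.log(toCsv(sample));
```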

ARC Monitoring is notable for offering two rules engines, ARC Rules and Axe, and potentially a third, since ARC users may use their own custom rules engine.

When used in ARC Monitoring, ARC Rules and Axe return overlapping results, but they have different philosophies and, in practice, have led to different outcomes for organizations.

Before reviewing the difference in outcomes, let’s examine each rules engine.

Axe

Axe is built on the open-source API officially known as Axe-core. Axe is driven by its philosophy and marketing pitch of “no false positives”: a promise not to report mistaken accessibility issues. Consistent with this philosophy, when using Axe, ARC Monitoring reports only Errors, that is, only the issues that Axe identifies as automatic WCAG failures.

With this configuration, Axe can find automated errors for 16 of the 50 Success Criteria. Such definitive errors often take little effort to fix, provided a team has full control over its codebase, and fixing them usually requires neither significant technical resources nor changes to a site’s visual appearance.

Axe is concerned with low-hanging fruit: code-level errors such as duplicate IDs, an img element lacking an alt attribute, or elements that are inaccessible with any screen reader, such as a button lacking any discernible text. These issues have more to do with concrete technical specifications.
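The three low-hanging-fruit checks named above are simple enough to sketch. The TypeScript below assumes a hypothetical flat list of elements whose text content has already been extracted; a real engine works on the DOM, and the button check here is only a rough stand-in for the full accessible-name computation that browsers and screen readers perform.

```typescript
// Hypothetical, simplified element: tag, attributes, and flattened text content.
interface El {
  tag: string;
  attrs: Partial<Record<string, string>>;
  text: string;
}

// Duplicate IDs: report each id value that appears on more than one element.
function duplicateIds(els: El[]): string[] {
  const seen: Record<string, number> = Object.create(null);
  for (const el of els) {
    const id = el.attrs.id;
    if (id !== undefined) seen[id] = (seen[id] || 0) + 1;
  }
  return Object.keys(seen).filter((id) => seen[id] > 1);
}

// Missing alternative text: img elements with no alt attribute at all.
// (alt="" is allowed; it marks an image as decorative.)
function imagesMissingAlt(els: El[]): El[] {
  return els.filter((el) => el.tag === "img" && el.attrs.alt === undefined);
}

// No discernible text: buttons with no visible text, aria-label, or aria-labelledby.
// Rough approximation only; it does not resolve aria-labelledby references.
function buttonsWithoutText(els: El[]): El[] {
  const blank = (s: string | undefined) => s === undefined || s.trim() === "";
  return els.filter(
    (el) =>
      el.tag === "button" &&
      blank(el.text) &&
      blank(el.attrs["aria-label"]) &&
      blank(el.attrs["aria-labelledby"])
  );
}

const els: El[] = [
  { tag: "div", attrs: { id: "main" }, text: "" },
  { tag: "section", attrs: { id: "main" }, text: "" },
  { tag: "img", attrs: { src: "hero.jpg" }, text: "" },
  { tag: "img", attrs: { src: "divider.png", alt: "" }, text: "" },
  { tag: "button", attrs: {}, text: "  " },
  { tag: "button", attrs: { "aria-label": "Close" }, text: "" },
];

console.log(duplicateIds(els)); // the id "main"
console.log(imagesMissingAlt(els).length); // 1
console.log(buttonsWithoutText(els).length); // 1
```

Each check is purely mechanical, which is why this class of error can be reported with high confidence.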

Many vendors use derivatives of Axe in their services; Google Chrome’s built-in automated testing tool, Lighthouse, uses a subset of Axe’s rules. Given the wide reach that comes from being open source, one could speculate that Axe’s authors are cautious about what they report as an error, because automated testing results could be used adversarially by people looking for targets for drive-by lawsuits. That use, however, is at odds with Axe’s intent. In fact, the terms of service of TPGi and of vendors such as WAVE, which has its own rules engine, do not allow automated test results to be used for any purpose related to legal action without written permission.

The high cost of “no false positives”

The rationale for “no false positives” is to help teams avoid wasting time fixing issues falsely identified as WCAG violations. In theory this sounds good, but in practice it conflicts with how manual and automated testing are combined.

All accessibility issues require a level of manual inspection. Errors are objective, but their solutions are subjective. Automated testing is great at detecting an image that lacks alternative text, but it takes a human to explain the image’s purpose and solve the issue. Teams must understand accessibility: you can’t automate something you don’t understand.

Moreover, only a human tester has the context and empathy needed to understand an error’s impact on user experience and decide whether to prioritize fixing it.

The “no false positives” philosophy is restrictive. As browsers and the core languages of the web (HTML, CSS, and JavaScript) become more powerful, the web supports an ever-widening range of content. Consequently, the underlying Axe-core API has added a second category for its findings besides Failures: Needs Review. Over the past five years, a growing share of Axe’s rules require manual inspection rather than detecting automatic errors. As websites grow more complex, the web is full of edge cases, and is likely to become more so.

This leads to missed issues. Engineers with less WCAG or accessibility experience rely on automated testing to evaluate their sites, and could end up delivering inaccessible online experiences while remaining unaware of the issues on their site. Automated testing can be a powerful tool in support of manual testing, but less so when its results are limited to a narrow range of code-level issues.

ARC

TPGi’s exclusive rules engine, ARC Rules, supplements manual testing and focuses on identifying the elements which could impact the user experience for disabled people and limit access.

When using ARC Rules, ARC Monitoring reports Errors and Alerts. ARC Rules has high confidence that an Error represents an accessibility issue. These Errors overlap with Axe’s results, but ARC Rules does not share Axe’s quixotic need for no false positives. An Alert identifies an element that requires further manual inspection or could indicate larger accessibility issues. An Alert should not be thought of as less severe than an Error.

A high-quality user experience

ARC Rules focuses on supporting the many permutations of browser and assistive technology used by the great diversity of people on the web. This leads to greater predictability and confidence in your code, and a lower probability of needing accessibility patches in response to an unexpected complaint. Legal risk arises not from having a WCAG violation but from someone being unable to access an experience the way they normally use the web.

ARC Rules detects issues that often go unnoticed in manual testing. ARC applies the experience TPGi has accrued conducting thousands of manual accessibility reviews. It provides smoke signals, identifying issues that teams less experienced with manual accessibility testing could miss, and recognizes the patterns that require specific accessibility considerations. There are many instances in which an element could pass technical specifications but still present an accessibility barrier.

ARC Rules empowers teams to address issues while growing their understanding of accessibility. A main strength of ARC Monitoring is that each rule maps to a tailored set of resources from the ARC Platform’s KnowledgeBase. It’s a three-step process: Understand, Test, and Solve. Each detected issue comes with a resource explaining the relevant accessibility guideline, step-by-step manual testing procedures for checking the issue against that guideline, and proven implementations for solving it.

With these clear steps, even teams with less accessibility expertise can accurately evaluate ARC’s results. Teams don’t waste time fixing mistaken accessibility issues, because before they touch code, they first understand an issue’s impact on accessibility. And while ARC casts a wider net, it also lets teams scope testing to components, reducing the number of results to inspect.

Let’s delve into a few rules unique to the ARC Rules engine that epitomize its perspective.

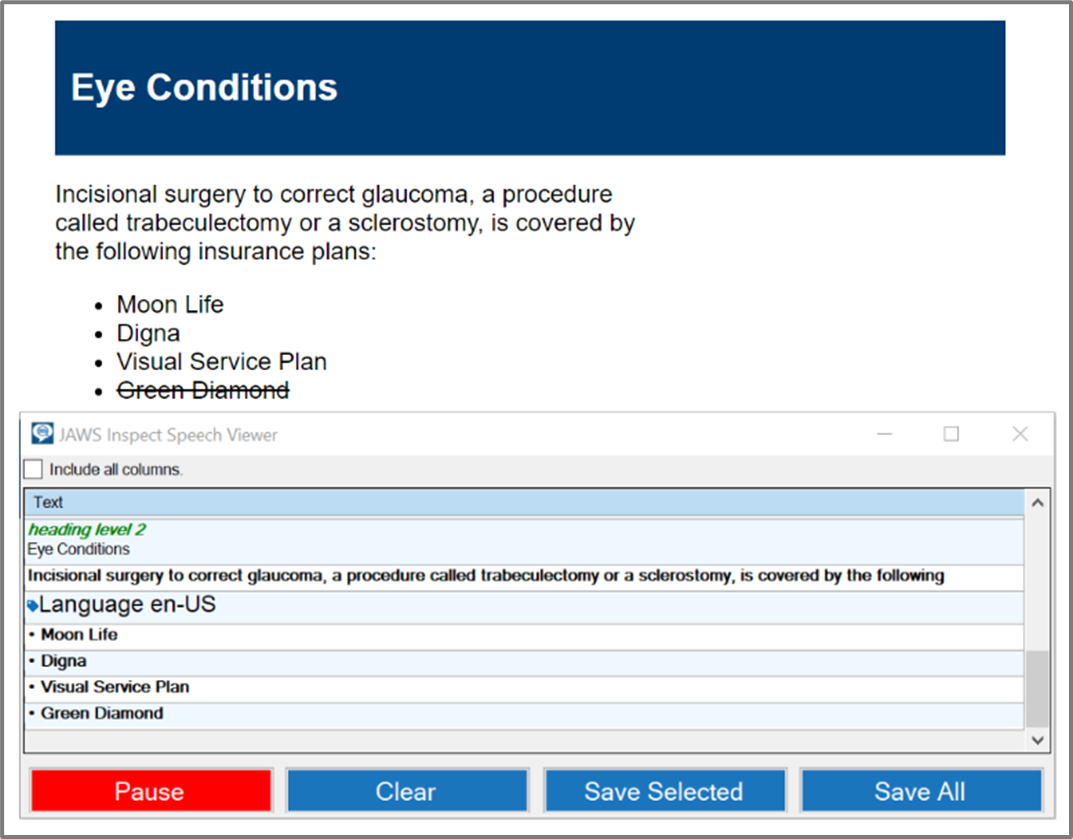

Strikethrough text – Alert

ARC raises an alert for strikethrough text, which can be identified by the use of the strike HTML element or by text with the CSS property text-decoration: line-through.

Strikethrough text does not inherently violate WCAG or any technical specification, but most screen readers don’t, by default, announce text formatting such as strikethrough. Yet strikethrough text usually signifies a retraction, and that carries significant meaning: a price could have changed, or a condition of a contract could have been removed. This critically important information is not conveyed to assistive technology users.

Less experienced manual testers may miss this issue. Moreover, strikethrough text is often added by content managers after comprehensive accessibility testing of the application has already been completed. Based on TPGi’s familiarity with this workflow, ARC Rules raises an alert.
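A sketch of how such an alert could be raised, in TypeScript. It assumes a hypothetical list of elements whose computed `text-decoration` value has already been captured (in a browser, that value could come from `getComputedStyle`), and it checks the two signals described above: the `strike` element and `text-decoration: line-through`. This is an illustration, not ARC’s rule.

```typescript
// Hypothetical, simplified element: tag name, computed text-decoration, and its text.
interface TextEl {
  tag: string;
  textDecoration: string; // e.g. "none" or "line-through solid rgb(0, 0, 0)"
  text: string;
}

// Strikethrough if it is a strike element or its text-decoration includes line-through.
function isStrikethrough(el: TextEl): boolean {
  if (el.tag === "strike") return true;
  return el.textDecoration.split(/\s+/).indexOf("line-through") !== -1;
}

// Raise one alert per struck-through element so a person can check what it means.
function strikethroughAlerts(els: TextEl[]): string[] {
  return els
    .filter(isStrikethrough)
    .map((el) => `Alert: strikethrough text "${el.text.trim()}" may not be announced by screen readers`);
}

// Made-up sample: an old price, a current price, and a retracted offer.
const priceBlock: TextEl[] = [
  { tag: "span", textDecoration: "line-through solid rgb(0, 0, 0)", text: "$49.99" },
  { tag: "span", textDecoration: "none", text: "$29.99" },
  { tag: "strike", textDecoration: "none", text: "Free shipping" },
];

strikethroughAlerts(priceBlock).forEach((msg) => console.log(msg));
```

The output is deliberately an alert rather than an error: the code can only spot the formatting, and a person still has to decide whether the retraction is conveyed some other way.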

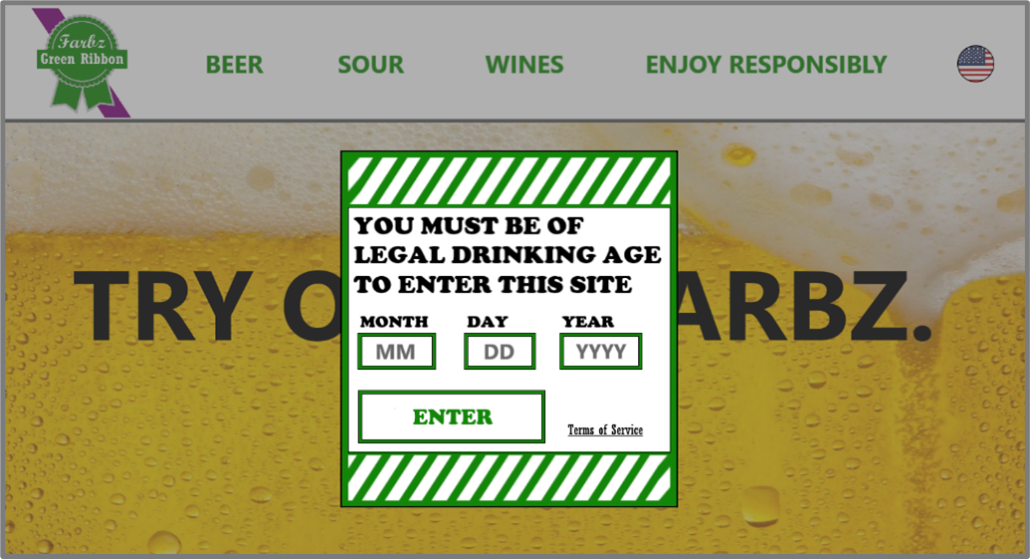

Content behind modal is still accessible – Alert

A modal dialog is a pop-up in which users must complete an action before interacting with the main page content outside the dialog. Therefore, when a modal dialog is open, the main page content outside it must be hidden from assistive technologies, to prevent users from navigating to content that is intended to be non-interactive.

ARC Rules detects when the content outside the dialog is not hidden. While it’s possible for a dialog to be non-modal, letting users navigate to and from it, ARC Rules does not prioritize this uncommon circumstance. When ARC Rules can conclusively determine that a dialog is modal, it raises an Error instead.

While manually inspecting a dialog flagged by this rule, testers learn about, and test for, a dialog’s full range of accessibility requirements. They often detect other WCAG violations. For example, when a dialog appears, it’s critical that keyboard focus is set to the dialog; otherwise, screen reader users may not be aware of the dialog’s presence. Focus management is difficult to test by automated means, but ARC addresses this gap in test coverage by alerting site authors to a potential accessibility barrier.
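The two checks discussed in this section (content outside the dialog still being exposed, and keyboard focus not being inside the dialog) can be sketched in TypeScript against a snapshot of the page. Everything here is a hypothetical simplification rather than ARC’s implementation: the `Region` model, the `knownModal` flag standing in for however an engine decides a dialog is conclusively modal, and the choice to treat `aria-hidden="true"` or the `inert` attribute as hiding content from assistive technology. A static snapshot can only tell whether focus is inside the dialog at that moment, which is one reason focus management is hard to test automatically.

```typescript
// Hypothetical, simplified model of a page region (the dialog or content outside it).
interface Region {
  id: string;
  attrs: Partial<Record<string, string>>;
  descendantIds: string[]; // ids of elements inside this region
}

interface ModalFinding {
  severity: "error" | "alert";
  regionId: string;
  message: string;
}

// Content counts as hidden from assistive technology if aria-hidden="true" or inert is set.
function isHiddenFromAT(region: Region): boolean {
  return region.attrs["aria-hidden"] === "true" || region.attrs["inert"] !== undefined;
}

// knownModal: true if the dialog is conclusively modal (Error), false if only possibly modal (Alert).
function checkDialog(
  dialog: Region,
  outside: Region[],
  focusedId: string | null,
  knownModal: boolean
): ModalFinding[] {
  const severity: ModalFinding["severity"] = knownModal ? "error" : "alert";
  const findings: ModalFinding[] = [];
  for (const region of outside) {
    if (!isHiddenFromAT(region)) {
      findings.push({ severity, regionId: region.id, message: "Content behind modal is still accessible" });
    }
  }
  // Focus should be on the dialog itself or on an element inside it.
  const focusInside =
    focusedId !== null && (focusedId === dialog.id || dialog.descendantIds.indexOf(focusedId) !== -1);
  if (!focusInside) {
    findings.push({ severity: "alert", regionId: dialog.id, message: "Keyboard focus is not inside the dialog" });
  }
  return findings;
}

// Made-up sample: the header is hidden, the main content is not, and focus was left behind.
const dialog: Region = { id: "signup", attrs: { role: "dialog" }, descendantIds: ["email", "submit"] };
const outside: Region[] = [
  { id: "header", attrs: { "aria-hidden": "true" }, descendantIds: [] },
  { id: "main", attrs: {}, descendantIds: ["buy-button"] },
];

// Two findings: "main" is not hidden (error), and focus is outside the dialog (alert).
console.log(checkDialog(dialog, outside, "buy-button", true));
```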

Which Rules Engine to Use

In ARC Monitoring, teams can use both ARC Rules and Axe; they don’t have to use only one.

Both ARC Rules and Axe follow the Accessibility Conformance Testing (ACT) Rules Format, which defines a format for writing automated accessibility test rules. The goal is for automated testing tools to be more consistent in how they test and interpret WCAG. This standardization makes it easier to compare the results returned by ARC Rules and Axe. Using both engines allows results to be cross-checked, which increases the likelihood that elements are correctly identified as automatic failures. However, to have a single baseline measure of accessibility, most organizations end up relying primarily on either ARC Rules or Axe.
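Cross-checking can be as simple as comparing the two engines’ findings. The TypeScript sketch below assumes each finding has already been reduced to a CSS selector and a WCAG Success Criterion number; that shape and the sample data are invented for illustration. In practice, the engines may describe the same element with different selectors, so real matching would need to be more forgiving.

```typescript
// Hypothetical, normalized finding from either engine.
interface EngineFinding {
  selector: string; // CSS selector of the flagged element
  successCriterion: string; // WCAG Success Criterion, e.g. "1.1.1"
}

interface CrossCheck {
  both: EngineFinding[]; // flagged by both engines: strongest evidence of a failure
  onlyFirst: EngineFinding[]; // flagged only by the first engine
  onlySecond: EngineFinding[]; // flagged only by the second engine
}

// Two findings match when they flag the same element for the same Success Criterion.
function keyOf(f: EngineFinding): string {
  return `${f.selector}|${f.successCriterion}`;
}

function crossCheck(first: EngineFinding[], second: EngineFinding[]): CrossCheck {
  const inFirst: Record<string, boolean> = Object.create(null);
  const inSecond: Record<string, boolean> = Object.create(null);
  for (const f of first) inFirst[keyOf(f)] = true;
  for (const f of second) inSecond[keyOf(f)] = true;
  return {
    both: first.filter((f) => inSecond[keyOf(f)] === true),
    onlyFirst: first.filter((f) => inSecond[keyOf(f)] !== true),
    onlySecond: second.filter((f) => inFirst[keyOf(f)] !== true),
  };
}

// Made-up sample findings.
const arcFindings: EngineFinding[] = [
  { selector: "#logo", successCriterion: "1.1.1" },
  { selector: "#signup-form input", successCriterion: "1.3.1" },
];
const axeFindings: EngineFinding[] = [
  { selector: "#logo", successCriterion: "1.1.1" },
  { selector: "#menu-button", successCriterion: "4.1.2" },
];

const result = crossCheck(arcFindings, axeFindings);
console.log(result.both.length, result.onlyFirst.length, result.onlySecond.length); // 1 1 1
```

Findings in `both` are the cross-checked automatic failures; the other two lists are where manual review is most needed.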

For the past year, I have supported organizations using ARC Monitoring, and I’m often asked which rules engine to use. At first, I was agnostic and said the choice of rules engine was a matter of personal preference. However, I quickly found that this was not the right advice.

It turned out that teams using Axe alone, particularly those newer to accessibility, caught fewer issues, increasing the risk to their organization. It’s risky for a team to know of an unfixed accessibility issue on its website. It’s even riskier for a team to carry on in false confidence, believing it has fixed the errors while unaware of other critical accessibility issues on its site.

Teams that favored ARC Rules in monitoring found more accessibility barriers, including issues that had gone undetected in their own manual testing. Equipped with the ARC Platform’s tools and resources, these teams let fewer issues reach production, gained a more fundamental understanding of accessibility, and delivered a higher-quality user experience.

The post Reach Above Low-Hanging Fruit for A High-Quality User Experience appeared first on TPGi.

Aaron Farber | Sciencx (2023-01-23T16:50:07+00:00) Reach Above Low-Hanging Fruit for A High-Quality User Experience. Retrieved from https://www.scien.cx/2023/01/23/reach-above-low-hanging-fruit-for-a-high-quality-user-experience/
