This content originally appeared on Level Up Coding - Medium and was authored by Shanaka DeSoysa

Discover how to easily and efficiently make predictions on large datasets using the power of Python’s FastAPI

Introduction

Batch predictions let you score many records in a single request instead of one at a time, which saves considerable time when working with large datasets. In this article, we discuss why batch predictions matter, show how to implement a batch prediction endpoint using Python’s FastAPI, and walk through a simple example: an endpoint that accepts an Excel file and returns an Excel file with predictions added.

Importance of batch predictions

Batch predictions save time and increase efficiency in Machine Learning projects. Instead of making predictions one at a time, a batch prediction processes all of the data at once, often thousands of rows in a single call. This is especially useful when working with large datasets. In industry, batch predictions are used in applications such as natural language processing and image classification.
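As a rough illustration of the difference, the sketch below scores rows in fixed-size batches instead of calling a model once per row. The toy_model function is a hypothetical stand-in (it applies the same sum-greater-than-3 rule used later in this article), and chunked and predict_in_batches are illustrative names, not part of any library.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Sequence

Row = Sequence[float]


def toy_model(rows: List[Row]) -> List[int]:
    """Hypothetical stand-in for a real model: 1 if the row sum exceeds 3, else 0."""
    return [1 if sum(row) > 3 else 0 for row in rows]


def chunked(rows: Iterable[Row], size: int) -> Iterator[List[Row]]:
    """Yield consecutive lists of at most `size` rows."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def predict_in_batches(rows: Iterable[Row], batch_size: int = 1000) -> List[int]:
    """Call the model once per batch rather than once per row."""
    predictions: List[int] = []
    for batch in chunked(rows, batch_size):
        predictions.extend(toy_model(batch))
    return predictions


if __name__ == "__main__":
    rows = [(1, 1, 1), (2, 2, 2), (0, 3, 0.5)]
    print(predict_in_batches(rows, batch_size=2))  # [0, 1, 1]
```

With a real model, the saving comes from paying the per-call overhead (network round trip, model invocation) once per batch rather than once per row.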

TL;DR: Show Me the Code

This article covers the importance of batch predictions in Machine Learning projects and shows how to implement a batch prediction endpoint using Python’s FastAPI. It also lists other tools that offer batch predictions. The example endpoint accepts an Excel file and returns an Excel file with predictions added.

You can find the complete code for this example in this GitHub repository: https://github.com/shanaka-desoysa/fastapi-excel-batch-processor

By implementing a batch prediction endpoint, you can make predictions on large amounts of data at once, saving time and increasing efficiency. With Python’s FastAPI library, it is easy to create a batch prediction endpoint that handles large amounts of data efficiently.

Batch Prediction APIs in ML

There are many Machine Learning projects that implement batch prediction endpoints, as batch predictions are a common requirement in many ML applications. Some examples include:

- TensorFlow Serving: an open-source framework for serving Machine Learning models. It lets you deploy your models and serve predictions in batch mode.

- MLflow: an open-source platform for managing the ML lifecycle. It tracks experiment runs across multiple users in a reproducible environment, packages ML code in a reusable, reproducible form, and supports deploying models.

- AWS SageMaker: a fully managed service that enables developers and data scientists to build, train, and deploy Machine Learning models quickly. It also provides batch predictions.

- Microsoft Azure Machine Learning: a cloud-based platform for building, deploying, and managing Machine Learning models. It also lets you make batch predictions through the platform’s REST API.

- Hugging Face’s Transformers: an open-source library that provides pre-trained models and lets you run batch predictions on input data.

Implementing a batch prediction endpoint with FastAPI

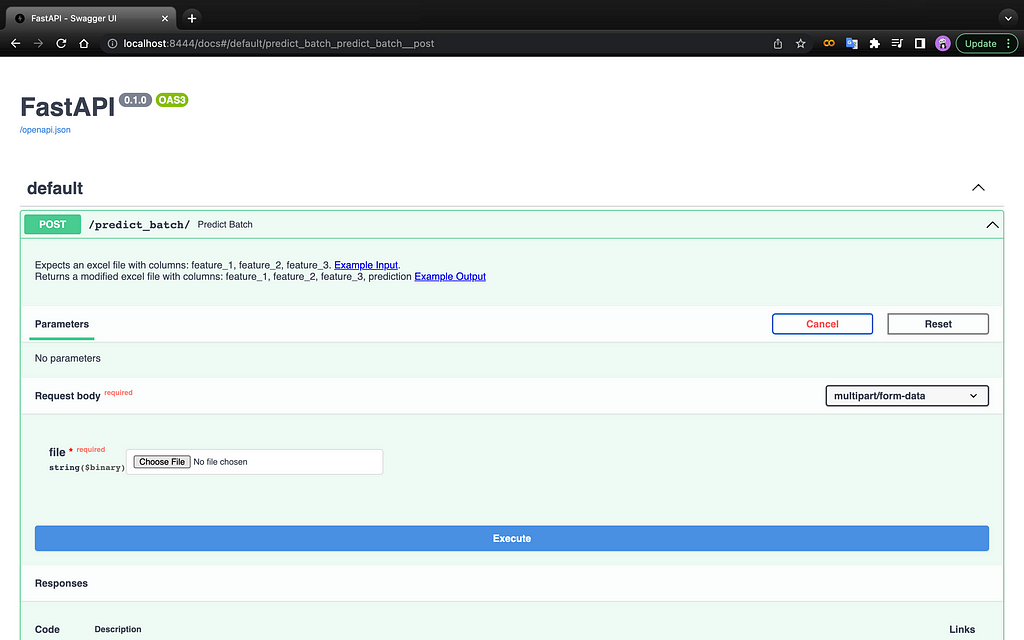

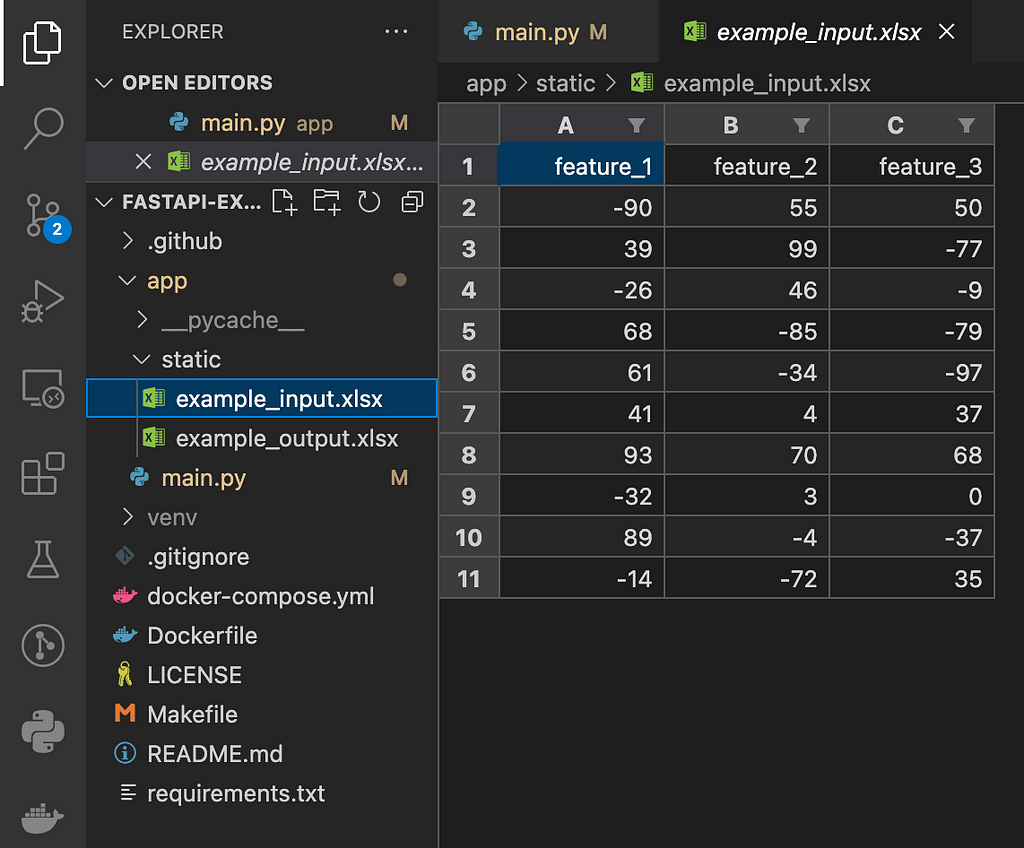

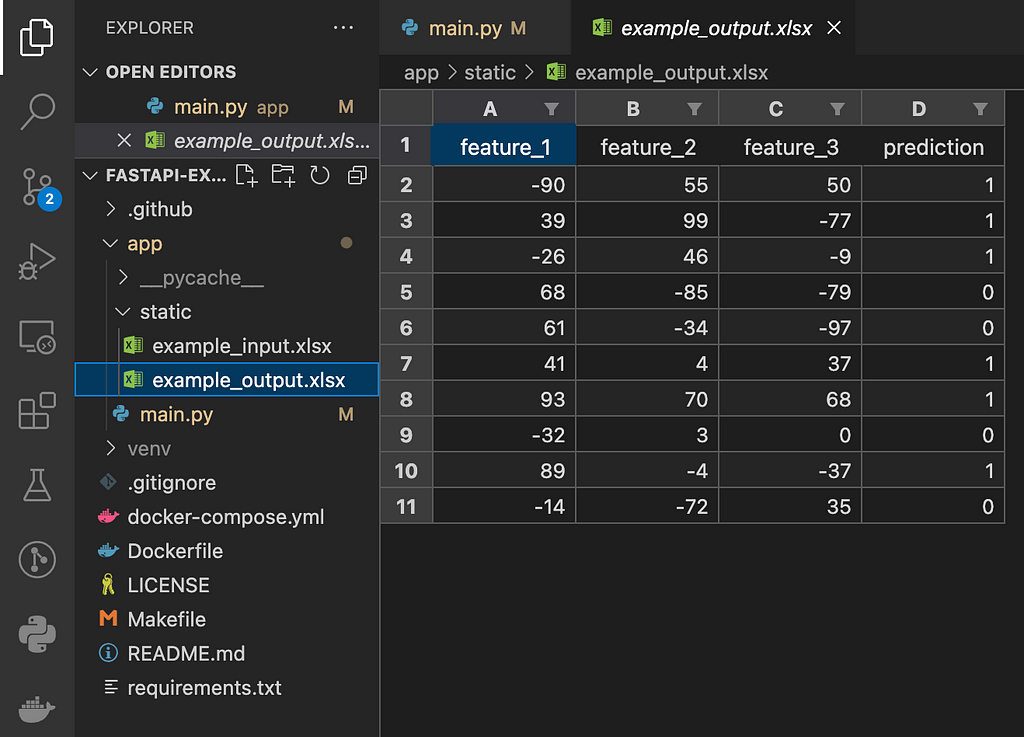

In this section, we show how to use Python’s FastAPI library to add a batch prediction endpoint to your own project. The endpoint accepts an Excel file as input and returns an Excel file with predictions added. The code below shows a simple endpoint that accepts an Excel file, reads it into a Pandas DataFrame, and applies a simple model to make predictions. The modified DataFrame is then written to a temporary file and returned as an Excel file response.

```python
import io
import tempfile
import logging

from fastapi import FastAPI, File, HTTPException
from fastapi.responses import FileResponse
from fastapi.staticfiles import StaticFiles
import pandas as pd

logger = logging.getLogger(__name__)

app = FastAPI()
app.mount("/static", StaticFiles(directory="static"), name="static")


def predict(feature_1, feature_2, feature_3):
    # Simple model: predict 1 if feature_1 + feature_2 + feature_3 > 3, else predict 0
    return 1 if feature_1 + feature_2 + feature_3 > 3 else 0


@app.post("/predict_batch/")
async def predict_batch(file: bytes = File(...)):
    """
    Expects an excel file with columns: feature_1, feature_2, feature_3.
    <a href="/static/example_input.xlsx">Example Input</a>.<br>
    Returns a modified excel file with columns: feature_1, feature_2, feature_3, prediction
    <a href="/static/example_output.xlsx">Example Output</a>
    """
    try:
        # Read the Excel file into a Pandas DataFrame
        df = pd.read_excel(io.BytesIO(file))

        # Make predictions using the predict function and the apply method
        df['prediction'] = df[['feature_1', 'feature_2', 'feature_3']].apply(
            lambda row: predict(row['feature_1'], row['feature_2'], row['feature_3']), axis=1)

        # Write the modified DataFrame to a temporary file and return it as an Excel file response
        stream = io.BytesIO()
        df.to_excel(stream, index=False)
        stream.seek(0)
        with tempfile.NamedTemporaryFile(mode="w+b", suffix=".xlsx", delete=False) as FOUT:
            FOUT.write(stream.read())
            return FileResponse(
                FOUT.name,
                media_type="application/vnd.openxmlformats-officedocument.spreadsheetml.sheet",
                headers={
                    "Content-Disposition": "attachment; filename=predictions.xlsx",
                    "Access-Control-Expose-Headers": "Content-Disposition",
                }
            )
    except Exception as e:
        logger.error(e)
        raise HTTPException(status_code=400, detail="Batch prediction failed.")
```
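The row-wise apply in the endpoint calls the Python predict function once per row. For the toy rule used here, the same predictions can be computed column-wise, which is usually faster on large sheets. The following is a minimal sketch under that assumption and is not taken from the original repository; FEATURES and predict_vectorized are hypothetical names, and a real model would need its own batch interface.

```python
import pandas as pd

# Column names taken from the endpoint's documented input format.
FEATURES = ["feature_1", "feature_2", "feature_3"]


def predict_vectorized(df: pd.DataFrame) -> pd.Series:
    """Apply the toy rule (row sum > 3) to every row in one column-wise pass."""
    # skipna=False keeps a missing value as NaN, so such rows compare
    # False against 3 and get a 0, as the row-wise predict function does.
    total = df[FEATURES].sum(axis=1, skipna=False)
    return (total > 3).astype(int)


if __name__ == "__main__":
    df = pd.DataFrame(
        {
            "feature_1": [1.0, 2.0, 0.0],
            "feature_2": [1.0, 2.0, 3.0],
            "feature_3": [1.0, 2.0, 0.5],
        }
    )
    df["prediction"] = predict_vectorized(df)
    print(df)
```

The result could be assigned to the prediction column in place of the apply call, leaving the rest of the endpoint unchanged.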

The endpoint expects an Excel file with the columns feature_1, feature_2, and feature_3; an example input file is served at “/static/example_input.xlsx”. It returns a modified Excel file with the columns feature_1, feature_2, feature_3, and prediction; an example output file is served at “/static/example_output.xlsx”.
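Because the endpoint depends on these exact column names, it may help to check them before predicting so that the caller learns which column is missing. The sketch below is one possible way to do that using only the standard library; REQUIRED_COLUMNS, missing_columns, and check_columns are hypothetical names and do not appear in the original code.

```python
from typing import Iterable, List

# Column names the endpoint documents as required.
REQUIRED_COLUMNS = ("feature_1", "feature_2", "feature_3")


def missing_columns(
    columns: Iterable[str], required: Iterable[str] = REQUIRED_COLUMNS
) -> List[str]:
    """Return the required column names that are absent, in required order."""
    present = set(columns)
    return [name for name in required if name not in present]


def check_columns(columns: Iterable[str]) -> None:
    """Raise ValueError naming every required column that is missing."""
    missing = missing_columns(columns)
    if missing:
        raise ValueError("Missing required columns: " + ", ".join(missing))


if __name__ == "__main__":
    check_columns(["feature_1", "feature_2", "feature_3", "notes"])  # passes
    print(missing_columns(["feature_1", "notes"]))  # ['feature_2', 'feature_3']
```

Inside the endpoint, this could be called as check_columns(df.columns) right after the file is read, with the error message passed along in the HTTPException detail instead of the generic one.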

Conclusion

In conclusion, batch predictions are an essential tool in Machine Learning projects that can save time and improve efficiency when working with large datasets. We demonstrated how to implement a batch prediction endpoint using Python’s FastAPI and showed how it can be used to make predictions on an Excel file. We also discussed other Machine Learning projects and frameworks that provide batch predictions, such as TensorFlow Serving, MLflow, and SageMaker. By implementing a batch prediction endpoint, you can streamline your Machine Learning pipeline and make predictions on large amounts of data with ease.


Shanaka DeSoysa | Sciencx (2023-01-30T17:28:27+00:00) Streamline Your Machine Learning Pipeline: Implement a Batch Predictions API with FastAPI and Excel. Retrieved from https://www.scien.cx/2023/01/30/streamline-your-machine-learning-pipeline-implement-a-batch-predictions-api-with-fastapi-and-excel/
