This content originally appeared on DEV Community 👩💻👨💻 and was authored by costica

We’re going to explore how to set yourself up for success when it comes to exploring Kubernetes and running Dockerized applications.

It all starts with understanding the whys and hows of delivering your application while staying productive in your local dev environment.

Why

Every time I want to start a new project, I feel like reinventing the wheel. There are so many tutorials that cover Node, Docker, and debugging node… but not how to combine those.

While individually each of those is a 5-minute setup, the internet runs short when it comes to an explanation of how to set up the whole thing.

So let us follow The twelve factors and start thinking “cloud-first”, and have a project setup that makes sense for both delivery and development.

What’s covered

In this article: building & running your container. In-depth examples and explanations of how I reason about setting things up.

In the follow-up article: in-depth explanation about using containers for “one-command, ready-to-run” projects. Hot-reload, debugger, tests.

Delivery-first mentality

Say you finished your project and want to deploy it in the wild, so other people can access it. All you have to do is run node src/app.js, right? Right?!

Not quite.

- What were the steps you took on your local environment to run your application?

- Any prerequisites?

- Any OS dependencies?

- etc, etc…

The same goes for any server. It needs to have some things installed so that it can run your code.

This applies to your friend’s PC and to your mom’s laptop, too. You need to actually install node (and ideally, the same version) so that it runs the same way as it does for you.

The problem is that if you only do this as a “final” step, your app most likely won’t work anywhere other than on your local machine.

Or you are a remember-it-all kind of person who knows exactly all the steps needed to run the application, and you’re willing and have the time to do that setup manually on multiple machines!

The project

Just in case you want to follow along, this is the app that we’re going to consider: a simple express application, with cors enabled, and nodemon for local development & hot-reload.

// src/app.js
import * as dotenv from 'dotenv'
dotenv.config()

import cors from 'cors'
import express from 'express'

const app = express()
app.use(cors())

app.get('/', (req, res) => {
  const testDebug = 4;
  res.send('Hello World!')
})

const port = process.env.APP_PORT
app.listen(port, () => {
  console.log(`App listening on port ${port}`)
})

// package.json
{
  "scripts": {
    "dev": "nodemon --inspect=9500 ./src/app.js"
  },
  "dependencies": {
    "cors": "^2.8.5",
    "dotenv": "^16.0.3",
    "express": "^4.18.2"
  },
  "devDependencies": {
    "nodemon": "^2.0.20"
  },
  "type": "module"
}

While there are many other things a real project would need, this should suffice for this demo. This is the commit used for this explanation, btw: node-quickstart-local-dev.

After cloning/downloading the files, all you have to do is to run a simple npm install; it will fetch all the dependencies defined in the package.json.

Running npm run dev starts nodemon, which restarts the app on file changes (similar to node --watch) and opens the inspector on port 9500 for debugging.

Or we can run this in “prod” mode by calling node src/app.js. This is all that’s needed to run this project on our local machine.

🤦 No, it’s not, you silly! You also need node to run the app and npm to install the dependencies. To add to that, probably some specific versions of those.
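To make the “specific versions” point concrete, here is a minimal sketch of a startup check that compares the running Node version against the one the project expects. It is not part of the original project: `REQUIRED_NODE_MAJOR` and `checkNodeVersion` are hypothetical names, and 18 is assumed only because that is the version used in the Dockerfile later in this article.

```javascript
// Hypothetical requirement: the major Node version the project was developed with.
const REQUIRED_NODE_MAJOR = 18

// Compare a version string such as "18.2.0" against the required major version.
function checkNodeVersion(currentVersion, requiredMajor) {
  const major = Number.parseInt(currentVersion.split('.')[0], 10)
  return { major, ok: major === requiredMajor }
}

// process.versions.node holds the version of the Node binary running this file.
const versionCheck = checkNodeVersion(process.versions.node, REQUIRED_NODE_MAJOR)
if (!versionCheck.ok) {
  console.warn(`Expected Node ${REQUIRED_NODE_MAJOR}.x, found ${process.versions.node}`)
}
```

A check like this only reports the mismatch. Someone still has to install the right version by hand, which is exactly the chore containers take away.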

Containers

While we’re discussing the simple case of just having node & npm here, in time, projects and their runtime dependencies grow.

While keeping your laptop’s configuration in sync with the server’s is probably possible, wouldn’t it be easier if you… didn’t have to?

How about a way of deploying your application on any server _(some conditions may apply*)_ or laptop without having to install node? Or any other dependencies our app might have?

Say ‘Hello’ to containerization, the buzzword of the past decade.

Instead of giving a wishlist of prerequisites to your mom and then emailing her the app.js file, we’re going to wrap everything inside a… let’s call it bundle, for simplicity.

What bundle means

This bundle is actually a… “bundle”. It has both application code (the app.js that we wrote) and all the dependencies needed to run our app: node engine and app’s dependencies, found in the node_modules folder.

At first glance, this is nice. We have everything we need to run the app in the bundle.

However, I am still in the same uncomfortable position as earlier, the only difference being that instead of “you need to install node” I can now say to my mom “you need to install a container runtime so you can run the image I sent you”.

It is, however, an improvement. While not all laptops or servers will have the exact same version of node, most “dev-compatible” machines will have a container runtime: something that knows how to run the bundle. There are more of them out there, but, for brevity, we’re just going to call the runtime Docker.

So we have changed our run instructions from “install node” to “install Docker and run the file I gave you like so: docker run bla-bla”.

Why it is an improvement: I can now send any bundle to my mom and she will be able to run it. Let’s say we install Docker on her laptop beforehand - then all she has to do is run whatever bundle I send her.

That’s a nice improvement, I’d say.

Let’s create a bundle

Creating a bundle starts from a template - a Dockerfile. It looks something like this:

# Dockerfile

FROM node:18.2.0

ARG WEB_SERVER_NODE_APP_PORT=7007

ENV APP_PORT=$WEB_SERVER_NODE_APP_PORT

# Create app directory

WORKDIR /node-app

# Install app dependencies

# A wildcard is used to ensure both package.json AND package-lock.json are copied

# where available (npm@5+)

COPY package*.json ./

RUN npm install --omit=dev

COPY src/* ./src/

EXPOSE $WEB_SERVER_NODE_APP_PORT

CMD ["node", "src/app.js"]

I know it doesn’t make sense yet. All the tutorials I’ve seen so far mix multiple concepts (like building, running, exposing ports, binding volumes, etc.) without any explanation, and everyone gets confused.

Let’s take it step by step.

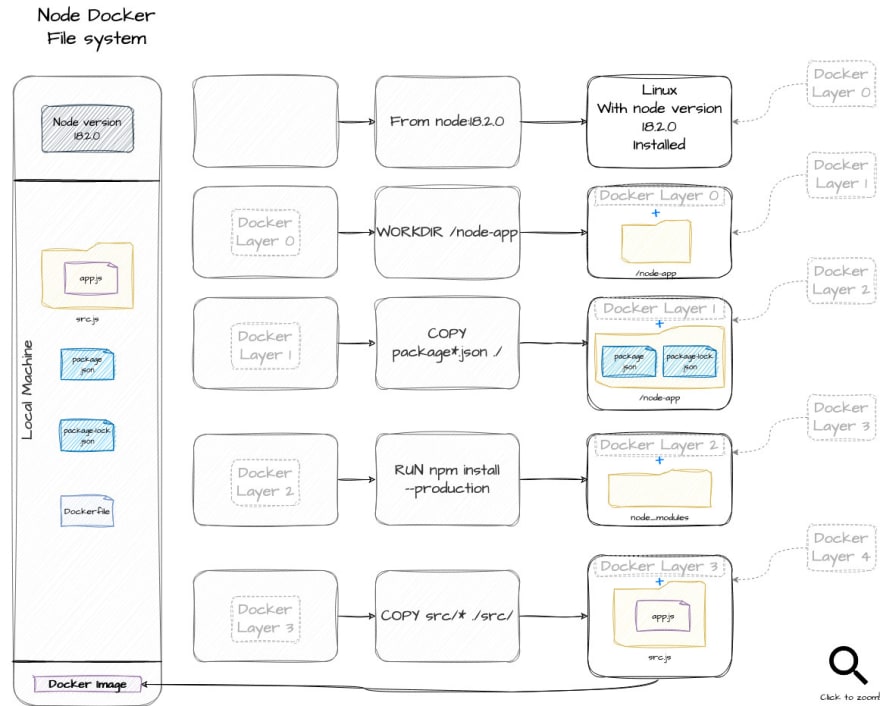

Build & run your application - Files only

As we’ve seen so far, just running the app on our local machine is simple. Things get complicated only when we add different things into the mix. So let’s keep it simple:

## Dockerfile

# Set up the base: a Linux environment with node.js version 18.2 installed

FROM node:18.2.0

# Create a new folder inside the "node:18.2.0" Linux OS

# This command both creates the folder & `cd`'s into it (pwd output after running this command is `/node-app`)

WORKDIR /node-app

# Copy our package.json & package-lock.json to `/node-app`

# Because we're already in `/node-app`, we can just use the current directory as the destination (`./`)

# Copy HOST_FILES -> Bundle Files

COPY package*.json ./

# Now we want to install the dependencies **inside** the bundle

# While you might be tempted to copy the `node_modules`

# just because you already have it on your local machine, don't give in to the temptation!

# The advantage of running this inside the bundle-container is that you get reproducibility;

# it will run inside the context of `node:18.2`

# and it doesn't even need your machine to have `node` or `npm` installed!

RUN npm install --omit=dev

# And finally, make sure we also copy our actual application files to the bundle

COPY src/* ./src/

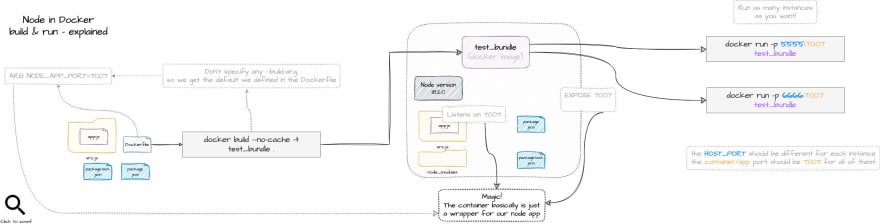

Behind the scenes, this is a rough estimation of what’s going on:

That is the template - the instructions of how the bundle should be built. The actual building is done by running docker build:

# Make sure the terminal has its current folder `cd`ed into the root project, same level as the `Dockerfile`.

docker build --no-cache --tag test_bundle .

- docker build: I’m sure you got that part - we’re at the build step

- --no-cache: Optional, but I like to add it because Docker cache is something I don’t master; why get frustrated things don’t get changed when we can make sure, with this simple flag, that we get a clean slate every time?

- --tag TAG_NAME: set how the bundle is going to be called

- ".": set the context of the command; in our case, since we are in the same folder as the Dockerfile, we can just mark it as “.” - which means the current folder

Running what we created

Now, let’s run our bundle:

docker run test_bundle

Aaand it might seem that nothing happened. But, it did actually run - even for just a moment.

But since we didn’t specify what command it should run, it defaulted to running a plain node command. It defaulted to that because that’s what the node:18.2.0 template we’re using as a base defines.

And because node, started this way with no script and no interactive terminal, has nothing to do and exits right away, our container also exited instantly: a container only lives as long as its main process. Containers are supposed to be “short-lived”.

Let’s make it run our app, instead. And since our app happens to be a web-server-long-running-process, the container won’t exit immediately.

docker run test_bundle node src/app.js

Now we get the output:

App listening on port undefined

Environment values

Our app port is “dynamic”, listening on whatever port is specified in the environment variable called APP_PORT.
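Because the port comes from the environment, a missing `APP_PORT` only shows up as `undefined` in the log line. As a sketch of one way to fail fast instead (an assumption on my side, not what the demo app does), a hypothetical `resolvePort` helper could validate the value before the server starts:

```javascript
// Hypothetical helper: read APP_PORT from an env-like object and validate it.
function resolvePort(env) {
  const raw = env.APP_PORT
  const port = Number(raw)
  // Number(undefined) is NaN and Number('') is 0, so both are rejected here.
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`APP_PORT must be an integer between 1 and 65535, got: ${raw}`)
  }
  return port
}

// In the app this would be called as resolvePort(process.env).
console.log(resolvePort({ APP_PORT: '3003' })) // prints 3003
```

With a guard like this, starting the container without the variable stops with an explicit error instead of silently listening on an unknown port.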

Let’s hard-code that value for a bit to something like 3003, just to test our bundle:

// const port = process.env.APP_PORT

const port = 3003

❗ We can’t test this simple change by just re-running docker run test_bundle node src/app.js, because the bundle was already built with the version of the app.js file where it reads the PORT value from an environment variable.

We will now have to rebuild the bundle from the template & run the application again:

docker build --no-cache --tag test_bundle . && docker run test_bundle node src/app.js

Success!

App listening on port 3003

Let’s see it in action. In any browser, go to localhost:3003. And it won’t work…

Checking the app inside the container

Everything’s alright, I promise. The app really does work on port 3003, but that’s inside the running container.

Let’s run a GET request from inside the container, similar to what the browser does when we access it from the host machine:

First, we need to be inside the container. For that, we need to know what the container ID is:

docker ps

docker exec -it CONTAINER_ID /bin/bash

A more user-dev-friendly way to get a terminal into a container running on your local machine is via Docker’s Dashboard.

Now that we have a shell terminal inside the container, let’s test our application:

curl localhost:3003

Success! We get a “Hello World!” back.

Exposing ports so we can use our app from outside the container

That’s not helpful, is it?

A web server is supposed to be accessed from a browser, not from inside a container through a terminal. What we need to do next is set up some port forwarding, using the -p flag of docker run:

-p HOST_PORT:CONTAINER_PORT

docker build --no-cache --tag test_bundle . && docker run -p 7007:3003 test_bundle node src/app.js

Finally, accessing localhost:7007 inside a browser works.
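The order of the two numbers in `-p` is easy to mix up, so here is a small illustrative sketch that spells it out. `parsePortMapping` is a hypothetical helper, not a Docker API, and it only handles the plain `HOST_PORT:CONTAINER_PORT` form used in this article.

```javascript
// Hypothetical helper: split "HOST_PORT:CONTAINER_PORT" into its two sides.
function parsePortMapping(mapping) {
  const parts = mapping.split(':')
  if (parts.length !== 2) {
    throw new Error(`Expected HOST_PORT:CONTAINER_PORT, got: ${mapping}`)
  }
  const hostPort = Number(parts[0])
  const containerPort = Number(parts[1])
  const isValid = (p) => Number.isInteger(p) && p >= 1 && p <= 65535
  if (!isValid(hostPort) || !isValid(containerPort)) {
    throw new Error(`Ports must be integers between 1 and 65535, got: ${mapping}`)
  }
  // Left side: the port opened on your machine. Right side: the port the app listens on.
  return { hostPort, containerPort }
}

const mapping = parsePortMapping('7007:3003')
console.log(`browser -> localhost:${mapping.hostPort} -> container:${mapping.containerPort}`)
```

Read left to right, `7007:3003` means “what I type in the browser” followed by “what the app listens on inside the container”.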

Ok, we tested our bundle. It works just fine, even though all we did was make sure we have some files inside of it.

A glance into the future - How containers are actually run

Let’s go back to the “hardcoded” app port. It isn’t an issue to hardcode your app port like this.

We can rely on the fact that the app will always listen on the 3003 port and then just set up the container to do the forwarding between CUSTOM_PORT<->3003.

I’m not a big fan of this solution, though, simply because if I want to understand why a bundle behaves as it does, I have to check its build command, its run command, and also the application code.

This is not a real issue now, but it will become one if we try to run this container inside Kubernetes, for example. Without going into too many details, in the future we will want to configure something like this:

spec:
  containers:
    - image: localhost:55000/project1
      name: project1
      ports:
        - containerPort: CONTAINER_PORT

Preparing for the real world

It will be a lot easier if we just set our container and app port binding right from the beginning.

The trick here is to make sure we keep the two values in sync:

- on one hand, we care about the PORT that the container exposes to the outer world

- on the other hand, we care about the PORT that the app listens on

So revert our app code to read the env variable instead of being hardcoded to 3003:

// const port = 3003

const port = process.env.APP_PORT

The end goal is to have complete control over:

- what APP_PORT gets defined at build time (when the bundle is created)

- how APP_PORT is kept in sync with the port exposed by the container

- what HOST_PORT is bound to the APP_PORT

If the two (APP_PORT, CONTAINER_EXPOSED_PORT) are the same, the exposing part of the run command will be -p HOST_PORT:APP_PORT.

The advantage of doing that is that it allows our run command to be as slim as:

docker run -p HOST_PORT:APP_PORT_EXPOSED_PORT test_bundle node src/app.js

And the good news is we can find the APP_PORT_EXPOSED_PORT without looking into the app.js file - we can just check the Dockerfile!

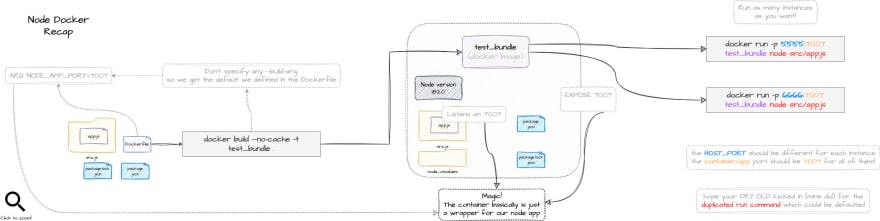

Build arguments

For that, we’re going to use a combination of build arguments and env variables.

# Dockerfile

# Set the BUILD_ARG variable with the default value of 7007;

# Can be overridden with `--build-arg`. Note: if overridden, the run command must also change...

# We rely on this build argument to be the same in the `ENV` and the `EXPOSE` commands

ARG ARG_WEB_SERVER_NODE_APP_PORT=7007

# Copy the build argument into APP_PORT, the env variable the app reads at runtime

ENV APP_PORT=$ARG_WEB_SERVER_NODE_APP_PORT

# Expose the port we define when we build the container or the default setting

EXPOSE $ARG_WEB_SERVER_NODE_APP_PORT

The complete Dockerfile looks like so:

# Dockerfile

FROM node:18.2.0

ARG ARG_WEB_SERVER_NODE_APP_PORT=7007

ENV APP_PORT=$ARG_WEB_SERVER_NODE_APP_PORT

WORKDIR /node-app

COPY package*.json ./

RUN npm install --omit=dev

COPY src/* ./src/

EXPOSE $ARG_WEB_SERVER_NODE_APP_PORT

The command for building the bundle now becomes:

docker build --no-cache -t test_bundle .

And we can run it using:

docker run -p 5555:7007 test_bundle node src/app.js

Since we didn’t specify any build arg, both the APP_PORT and the exposed port defaulted to the defined 7007. That means all we have to do is to choose what host port to forward to the container exposed port - in the example above, we forwarded 5555 to the default 7007.

What happens if we want to use something else for the APP_PORT?

docker build --no-cache --build-arg ARG_WEB_SERVER_NODE_APP_PORT=8008 -t test_bundle .

And then run:

docker run -p 5555:8008 test_bundle node src/app.js
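As a rough illustration of “just check the Dockerfile”, the sketch below reads Dockerfile text and resolves the `EXPOSE` value from the `ARG` default, or from a build-arg override. `resolveExposedPort` is a hypothetical helper that only understands the simple `ARG NAME=value` and `EXPOSE $NAME` forms used here; it is not a general Dockerfile parser.

```javascript
// Hypothetical helper: find the port a Dockerfile exposes, given optional build args.
function resolveExposedPort(dockerfileText, buildArgs = {}) {
  const argDefaults = {}
  let exposed = null
  for (const rawLine of dockerfileText.split('\n')) {
    const line = rawLine.trim()
    const argMatch = line.match(/^ARG\s+(\w+)(?:=(.*))?$/)
    if (argMatch) {
      argDefaults[argMatch[1]] = argMatch[2]
      continue
    }
    const exposeMatch = line.match(/^EXPOSE\s+(\S+)$/)
    if (exposeMatch) exposed = exposeMatch[1]
  }
  if (exposed === null) return null
  if (exposed.startsWith('$')) {
    // A value passed via --build-arg wins over the default declared by ARG.
    const name = exposed.slice(1)
    exposed = buildArgs[name] ?? argDefaults[name]
  }
  return Number(exposed)
}

const dockerfile = [
  'FROM node:18.2.0',
  'ARG ARG_WEB_SERVER_NODE_APP_PORT=7007',
  'ENV APP_PORT=$ARG_WEB_SERVER_NODE_APP_PORT',
  'EXPOSE $ARG_WEB_SERVER_NODE_APP_PORT',
].join('\n')

console.log(resolveExposedPort(dockerfile)) // prints 7007
console.log(resolveExposedPort(dockerfile, { ARG_WEB_SERVER_NODE_APP_PORT: '8008' })) // prints 8008
```

The two calls mirror the two builds above: without a build arg the container side of `-p` is 7007, and with `--build-arg ARG_WEB_SERVER_NODE_APP_PORT=8008` it becomes 8008.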

Short recap

So we know how to build our app and how to run it (using docker run ... node src/app.js).

However, that’s not a standard. That’s how I chose to run it. This is not ideal for multiple reasons:

- figuring out how to run the container requires some application-code investigation

- if I want to change the command, I should notify everyone using the container to run it using the new command; there’s also the chance that they will find out (surprise!) that their way of running it no longer works

So let’s define the default command inside the Dockerfile:

CMD ["node", "src/app.js"]

We can now rebuild & run the container in a much simpler way:

docker build --no-cache --tag test_bundle . && docker run -p 5555:7007 test_bundle

This doesn’t reduce our flexibility in any way, as the CMD can still be overridden at runtime by passing a different command after the image name:

docker run -p 5555:7007 test_bundle node --trace-warnings src/app.js
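The override rule can be modelled in a few lines. This is a simplified sketch of how the final command is chosen, not Docker’s implementation: `effectiveCommand` is a hypothetical function, and it assumes exec-form `ENTRYPOINT` and `CMD` arrays.

```javascript
// Simplified model: arguments given after the image name replace CMD;
// an ENTRYPOINT, when the image defines one, is always put in front.
function effectiveCommand({ entrypoint = [], cmd = [] }, runArgs = []) {
  const args = runArgs.length > 0 ? runArgs : cmd
  return [...entrypoint, ...args]
}

// The image as built in this article: only a default CMD is modelled here.
const image = { cmd: ['node', 'src/app.js'] }

console.log(effectiveCommand(image).join(' ')) // prints "node src/app.js"
console.log(effectiveCommand(image, ['node', '--version']).join(' ')) // prints "node --version"
```

The default covers the common case, while anyone who needs something else can still pass their own command.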

Build & run - aka Deliver

A final overview of what we achieved so far is this:

We went from “install node, run npm install, then run node src/app.js” to a single docker run.

It’s not much, but it’s honest work.

However, this only solves the delivery part.

Coming up - shareable dev setups with Docker

While this article covers the delivery part and some basic concepts of Docker, environment variables, and port binding… we’re just scratching the surface.

The real fun begins in the 2nd part of the article, where we start creating Docker configurations for the dev environment.

The next article covers:

- how to run the code and hot-reload it inside a container each time you hit “save”

- how to debug your code when it is run inside a container

- how to not rely on long, hard-to-remember docker run ... commands

And the good news is… it’s going to be easy since we’re going to piggyback on some of the concepts learned here.

Until the next one, bye-bye!


costica | Sciencx (2023-02-18T08:15:00+00:00) Backend Delivery – Hands-On Node & Docker 1/2. Retrieved from https://www.scien.cx/2023/02/18/backend-delivery-hands-on-node-docker-1-2/
