This content originally appeared on DEV Community and was authored by Kihara, Takuya

Sometimes I want to store Amazon DynamoDB records in Amazon S3 so that I can query them with Amazon Athena.

We can do this simply with Amazon Kinesis Data Stream and Amazon Kinesis Data Firehose.

This article explains, step by step, how to set them up.

Table of Contents

- Set up Amazon DynamoDB with Amplify Studio
  - Open AWS Console
  - Create Amplify Project and Amazon DynamoDB
  - Create Amazon DynamoDB table
- Create Amazon Kinesis in Amazon DynamoDB configuration
  - Show Amazon DynamoDB configuration
  - Create Amazon Kinesis Data Stream
  - Create Amazon Kinesis Data Firehose
  - Connect Amazon DynamoDB to Amazon Kinesis Data Stream
- Insert data to Amazon DynamoDB and check Amazon S3

1. Set up Amazon DynamoDB with Amplify Studio

I recommend using Amplify Studio to set up Amazon DynamoDB because its Data Manager is very helpful.

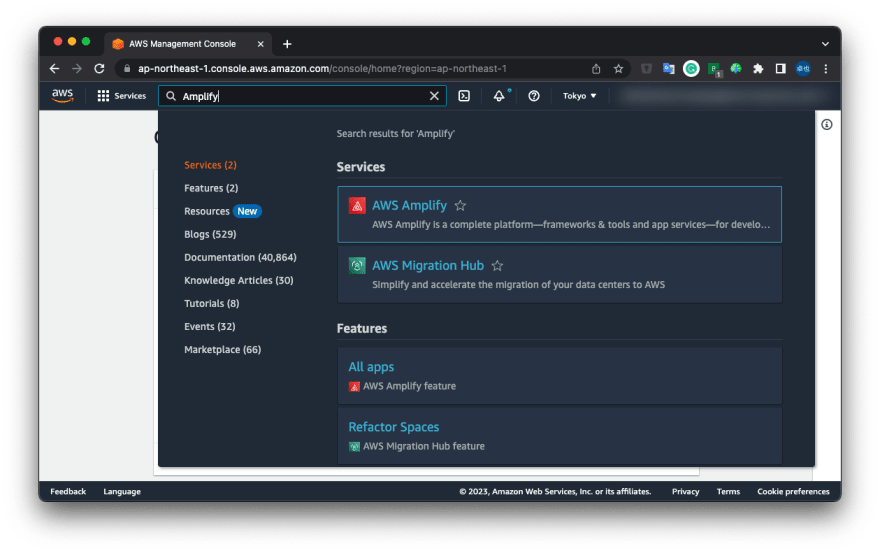

1.1. Open AWS Console

Open the AWS Console and choose "AWS Amplify".

1.2. Create Amplify Project and Amazon DynamoDB

Create a new Amplify Project.

Click "New app", then "Build an app".

Enter an "App name". This time I entered "SampleKinesis".

Click "Confirm deployment" and wait a few minutes.

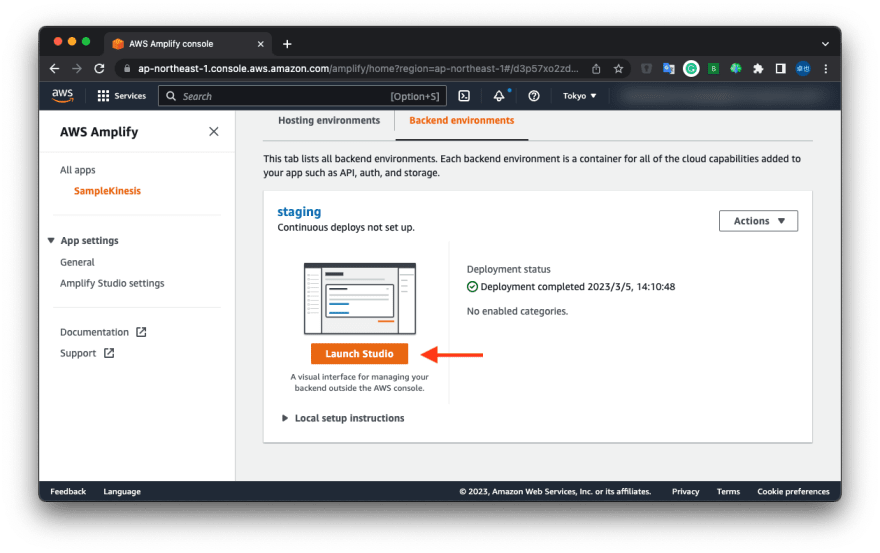

When the deployment finishes, a "Launch Studio" button appears. Click it.

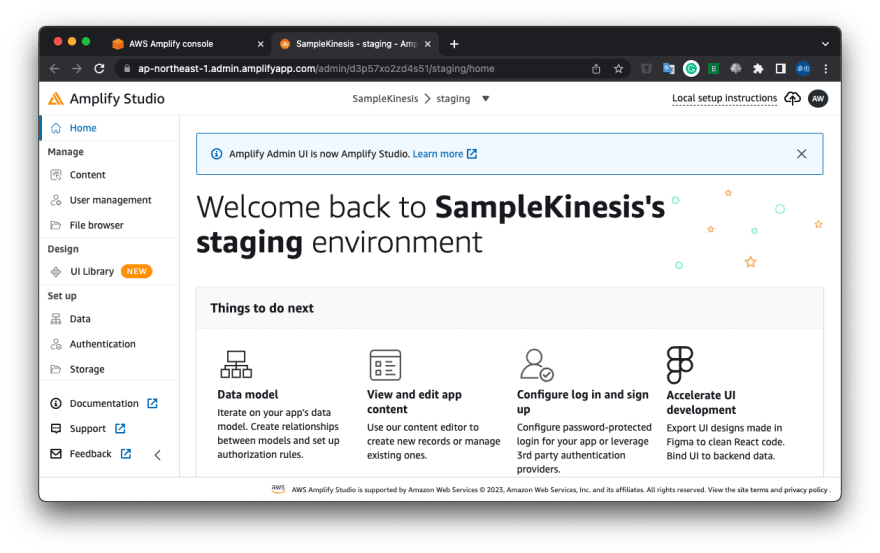

The "Amplify Studio" page then opens.

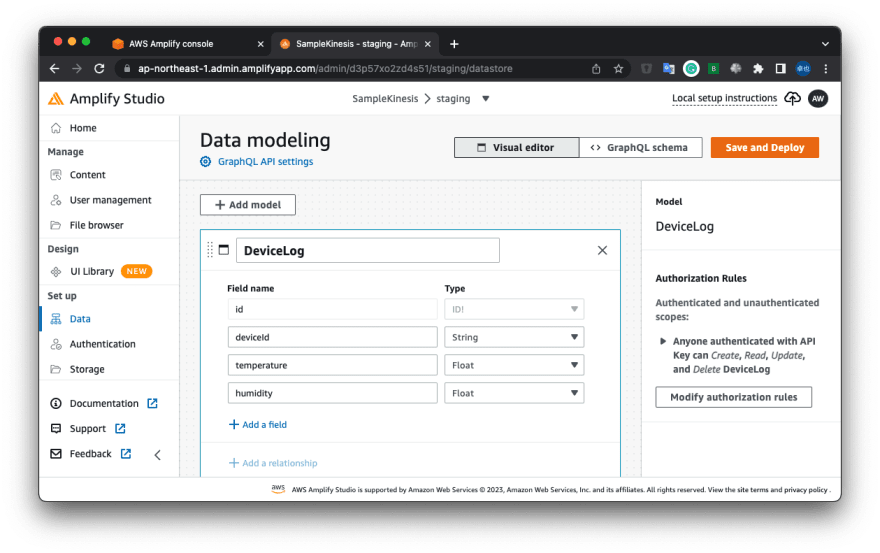

1.3. Create Amazon DynamoDB table

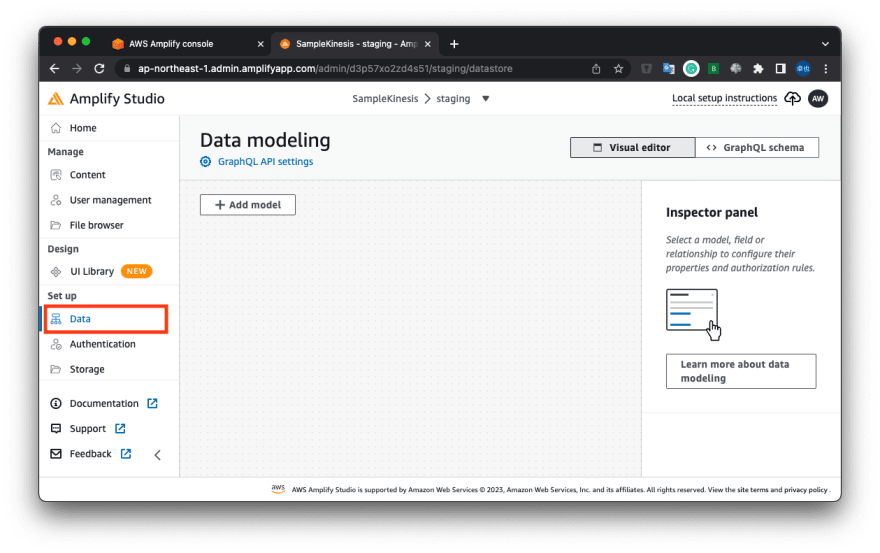

Click "Data" in the left-side menu.

The "Data modeling" page opens.

Next, click "Add model".

Enter a table name and add fields.

For example, I entered the following table name and added the following fields.

Table name

DeviceLog

Fields

| Field name | Type |
|---|---|
| deviceId | String |
| temperature | Float |
| humidity | Float |
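To illustrate how these fields end up in DynamoDB, the Python sketch below wraps plain values in DynamoDB's attribute-value form: a String field becomes an `S` attribute, and a Float field becomes an `N` attribute whose number is carried as a string. This matches the stream record shown in section 3, but it is an assumption-based sketch, not Amplify's own code. `FIELD_TYPES` and `to_dynamodb_image` are hypothetical names, and the fields Amplify adds automatically (such as `id`, `createdAt`, and `updatedAt`) are left out.

```python
from typing import Any, Dict

# Hypothetical mapping from the fields defined above to DynamoDB
# attribute-type descriptors ("S" = string, "N" = number).
FIELD_TYPES = {
    "deviceId": "S",     # String
    "temperature": "N",  # Float
    "humidity": "N",     # Float
}


def to_dynamodb_image(item: Dict[str, Any]) -> Dict[str, Dict[str, str]]:
    """Wrap plain values in DynamoDB attribute-value form.

    Numbers are encoded as strings under "N", as in the stream record
    in section 3. Fields missing from FIELD_TYPES raise KeyError.
    """
    image: Dict[str, Dict[str, str]] = {}
    for name, value in item.items():
        type_code = FIELD_TYPES[name]
        image[name] = {type_code: str(value)}
    return image


print(to_dynamodb_image({"deviceId": "abcd1234", "temperature": 20.4, "humidity": 60}))
# {'deviceId': {'S': 'abcd1234'}, 'temperature': {'N': '20.4'}, 'humidity': {'N': '60'}}
```

This is why the JQ expressions later in this article end with `.S`: they read the string value inside each attribute.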

Click "Save and Deploy" and wait a few minutes.

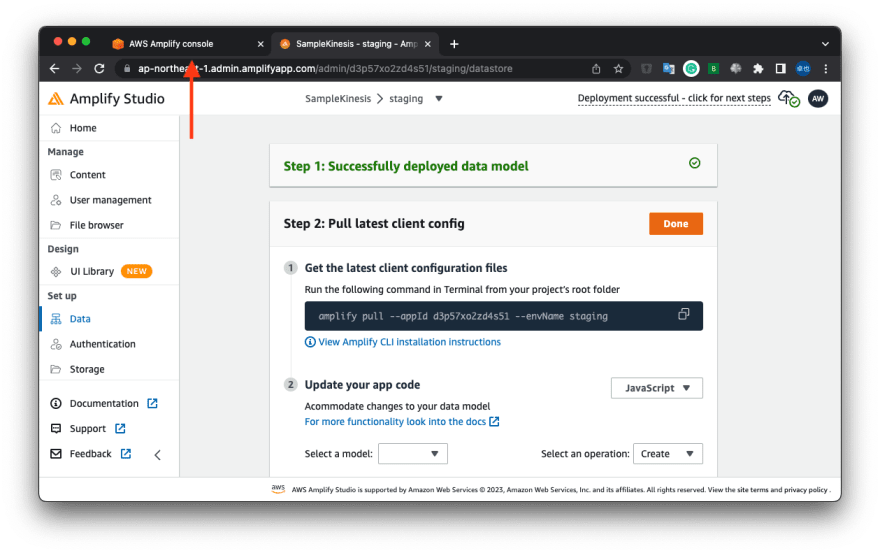

When you see "Successfully deployed data model", return to the "AWS Amplify console" browser tab.

2. Create Amazon Kinesis in Amazon DynamoDB configuration

2.1. Show Amazon DynamoDB configuration

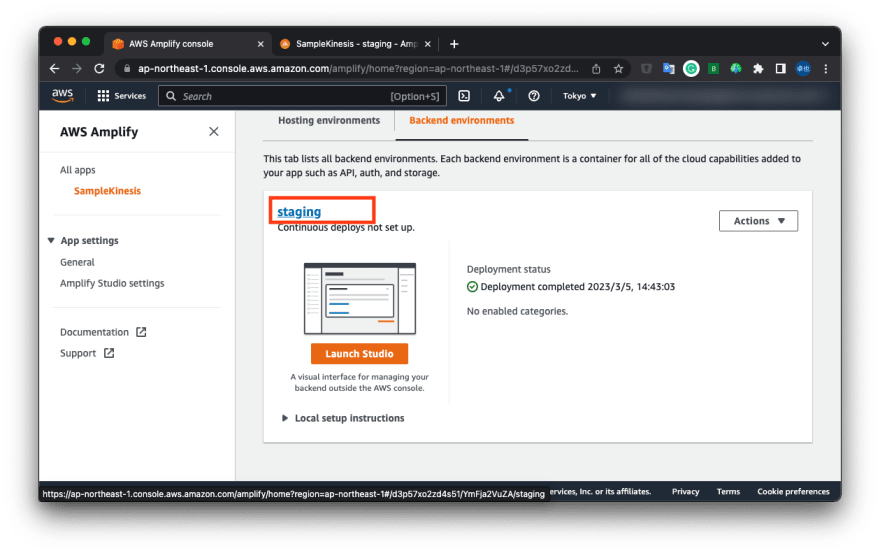

Go back to the AWS Amplify console and click the link labeled "staging".

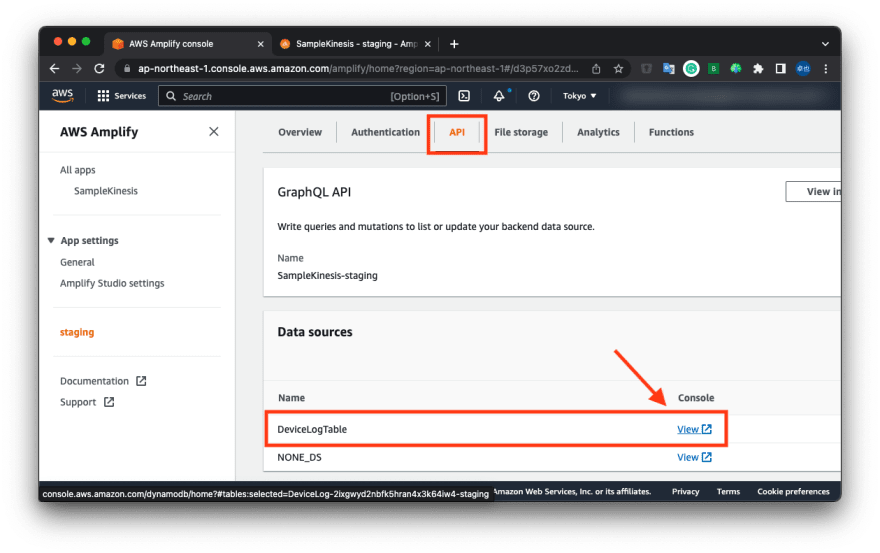

Click the "API" tab, then the "View" link.

The Amazon DynamoDB console opens.

2.2. Create Amazon Kinesis Data Stream

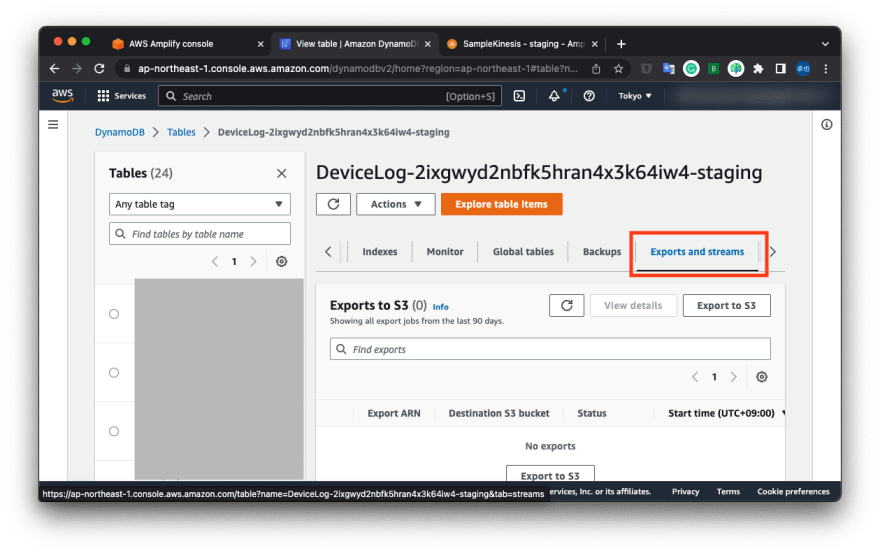

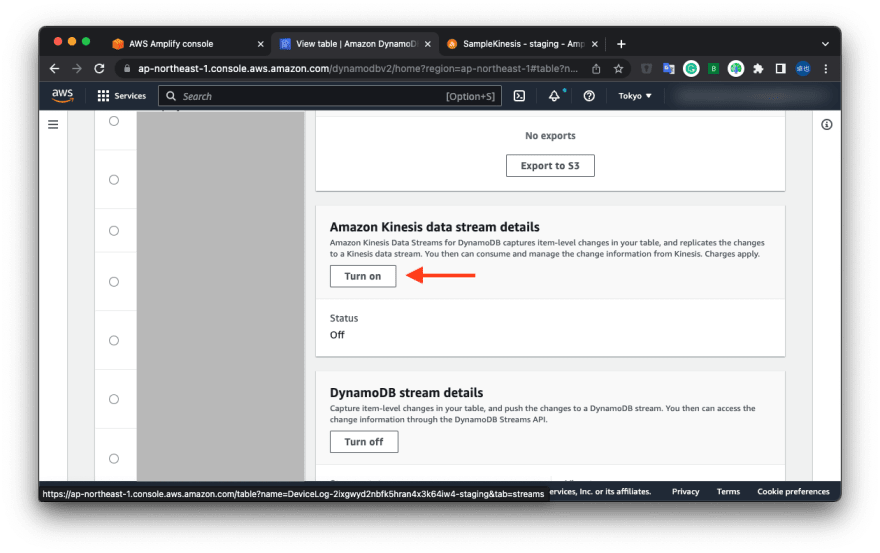

Next, click the "Exports and streams" tab.

Then click "Turn on" under "Amazon Kinesis data stream details".

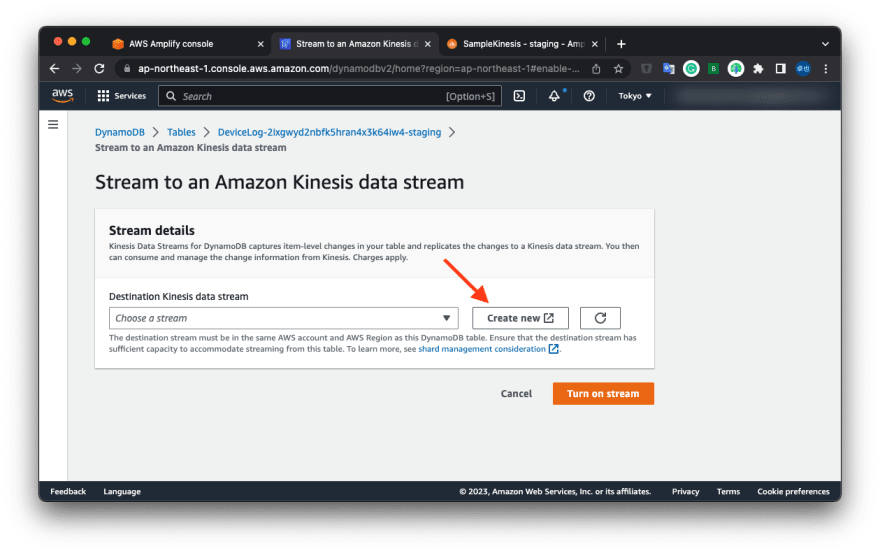

On the "Stream to an Amazon Kinesis data stream" page, click "Create new".

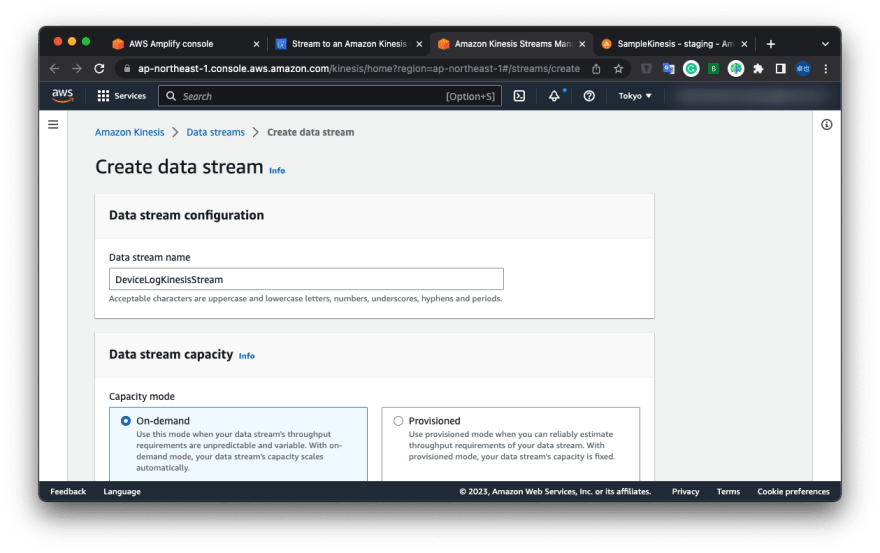

On the "Create data stream" page, enter a "Data stream name".

I entered "DeviceLogKinesisStream".

Then click "Create data stream" at the bottom of the page.

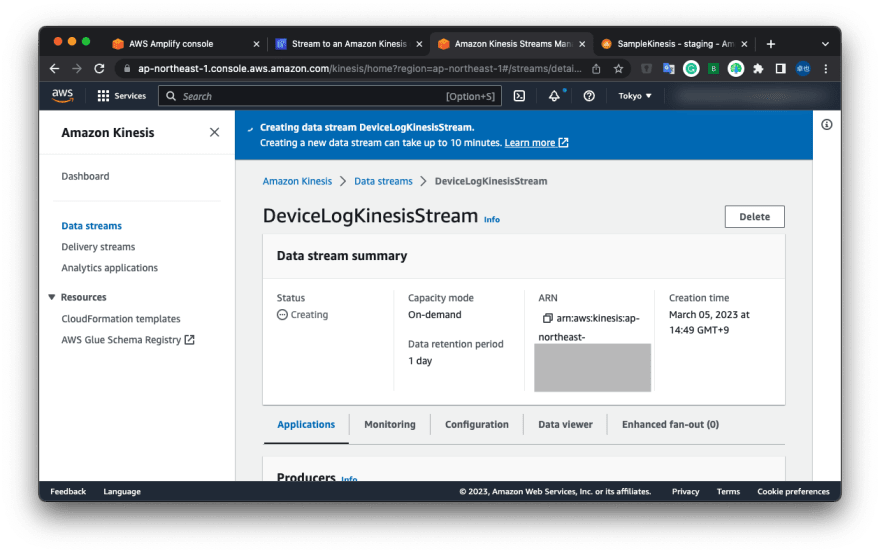

The "DeviceLogKinesisStream" page opens.

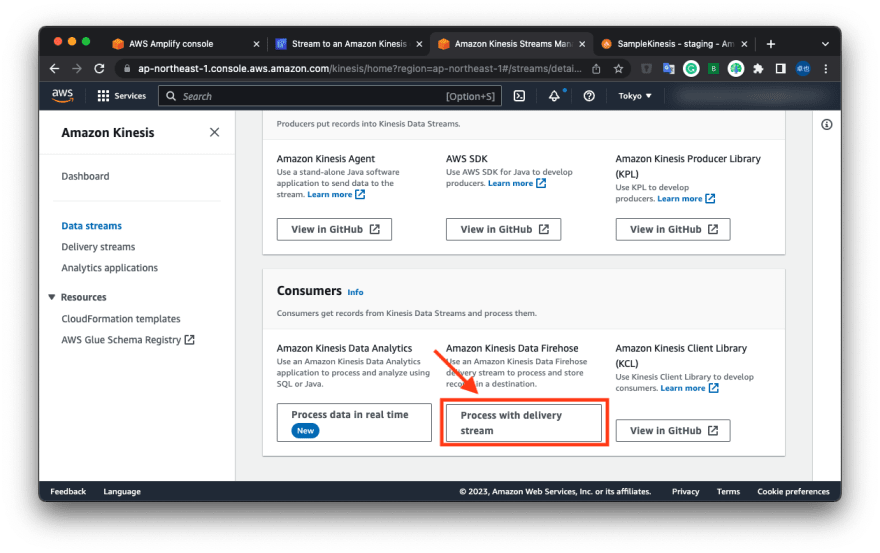

2.3. Create Amazon Kinesis Data Firehose

Scroll to the bottom of the page and click "Process with delivery stream" under "Amazon Kinesis Data Firehose".

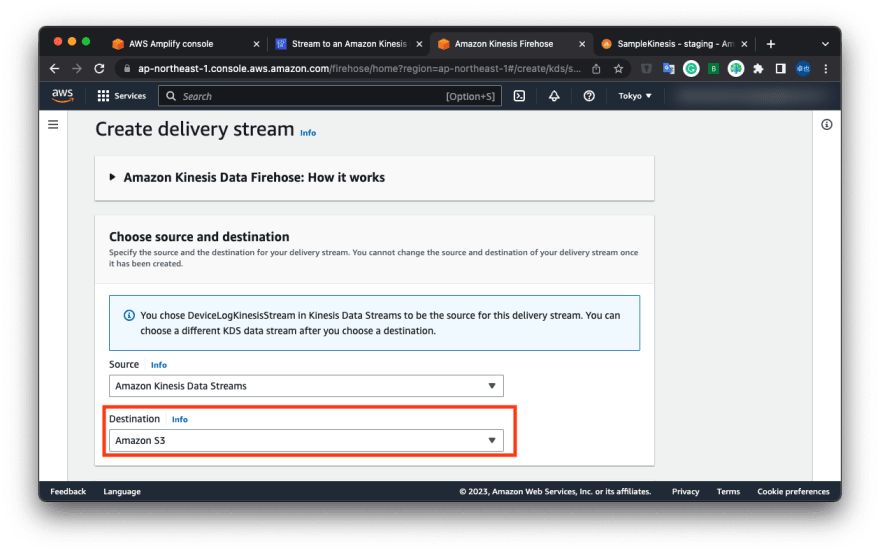

The "Create delivery stream" page opens.

Change the "Destination" to "Amazon S3".

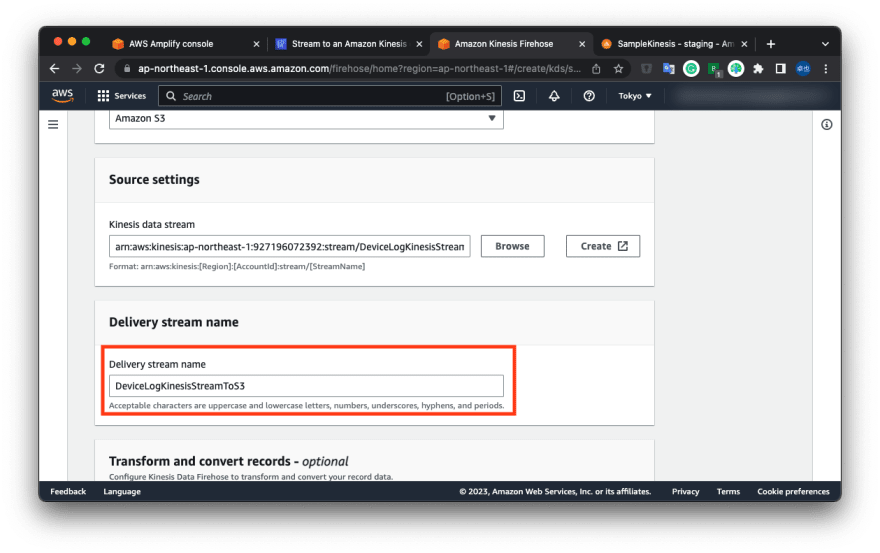

Change the "Delivery stream name" to "DeviceLogKinesisStreamToS3".

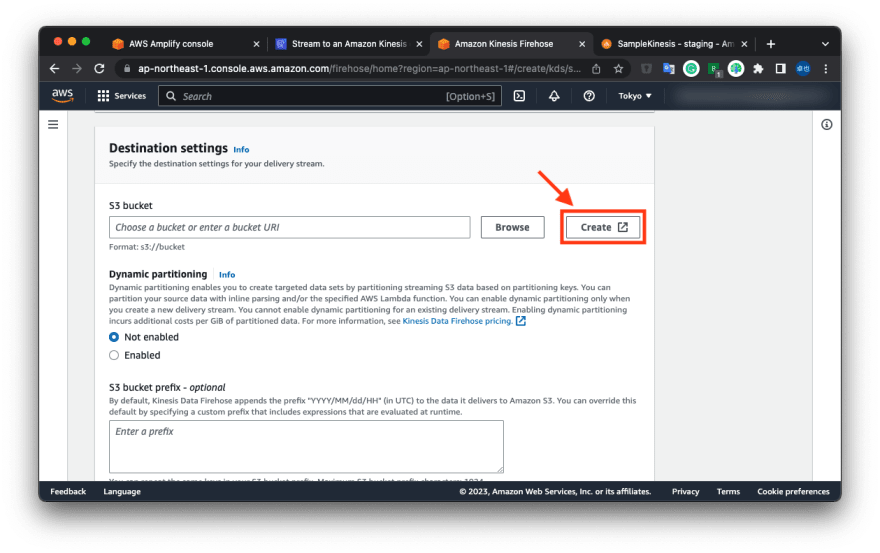

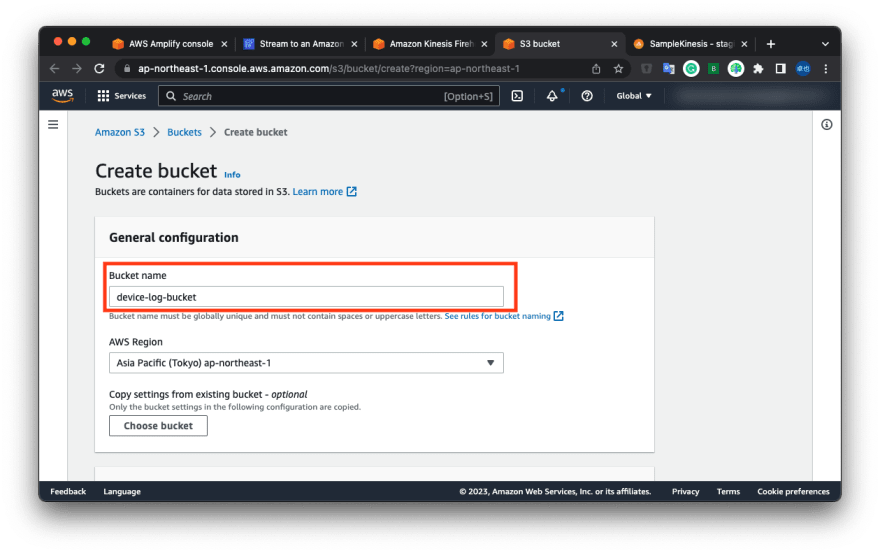

Next, create an Amazon S3 bucket from the "Destination settings" section.

Click "Create".

Enter a "Bucket name" and click "Create bucket" at the bottom of the page.

I entered "device-log-bucket". (S3 bucket names must be globally unique, so you may need to choose a different name.)

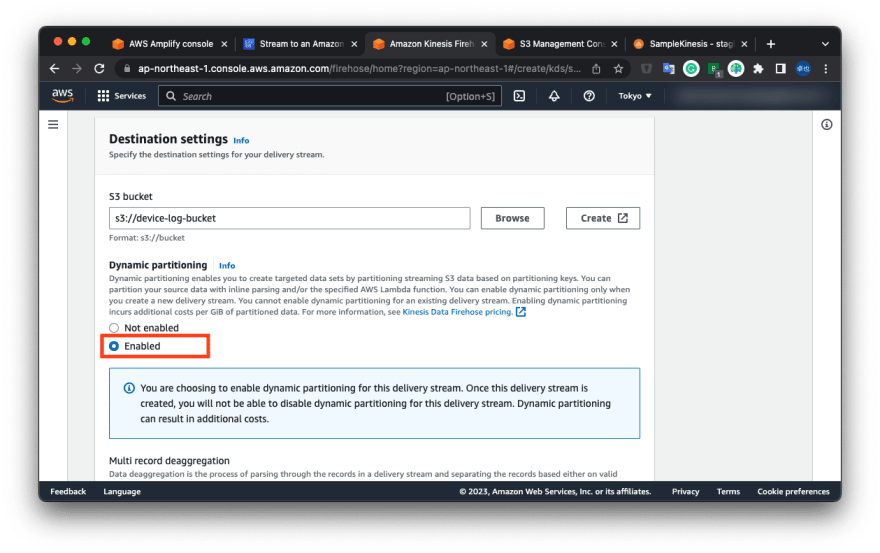

Return to the "Create delivery stream" page and click "Browse".

Click the reload button and select the bucket you just created.

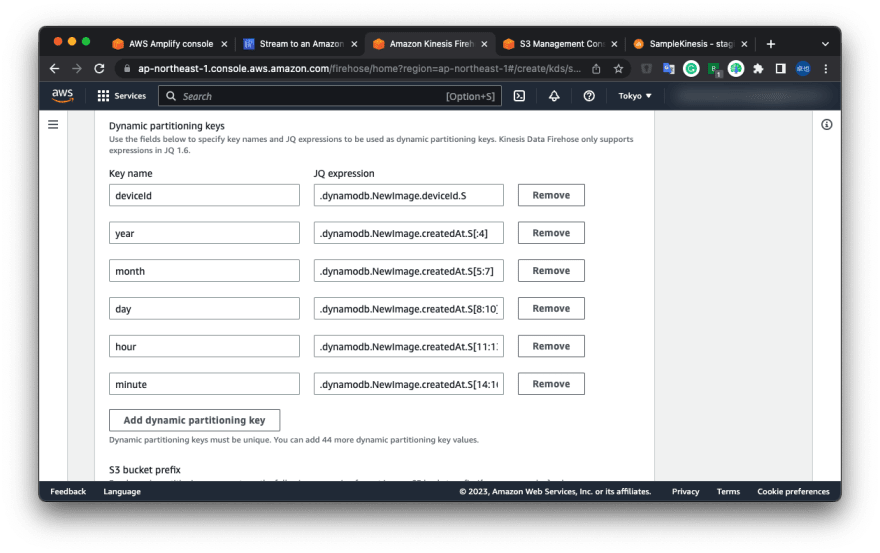

The following settings organize the output so that Amazon Athena can use it for partitioning.

Next, click "Enabled" under "Dynamic partitioning".

In "Dynamic partitioning keys", enter the following key names and JQ expressions.

| Key name | JQ expression |
|---|---|
| deviceId | .dynamodb.NewImage.deviceId.S |
| year | .dynamodb.NewImage.createdAt.S[:4] |
| month | .dynamodb.NewImage.createdAt.S[5:7] |
| day | .dynamodb.NewImage.createdAt.S[8:10] |
| hour | .dynamodb.NewImage.createdAt.S[11:13] |
| minute | .dynamodb.NewImage.createdAt.S[14:16] |
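To preview what these expressions produce, the Python sketch below applies the same slices to a record's `NewImage`. It only emulates the jq string slicing for illustration (Firehose evaluates the real jq expressions), and the function name `partition_keys` is hypothetical. It assumes `createdAt` is an ISO 8601 string such as `2023-03-05T06:03:47.846Z`; Amplify adds this field automatically, as the record in section 3 shows.

```python
from typing import Any, Dict


def partition_keys(record: Dict[str, Any]) -> Dict[str, str]:
    """Mirror the jq expressions in the table above with Python slices.

    A jq string slice such as .S[5:7] uses the same zero-based,
    end-exclusive semantics as Python's s[5:7].
    """
    image = record["dynamodb"]["NewImage"]
    created_at = image["createdAt"]["S"]  # e.g. "2023-03-05T06:03:47.846Z"
    return {
        "deviceId": image["deviceId"]["S"],
        "year": created_at[:4],
        "month": created_at[5:7],
        "day": created_at[8:10],
        "hour": created_at[11:13],
        "minute": created_at[14:16],
    }


sample = {
    "dynamodb": {
        "NewImage": {
            "deviceId": {"S": "abcd1234"},
            "createdAt": {"S": "2023-03-05T06:03:47.846Z"},
        }
    }
}
print(partition_keys(sample))
# {'deviceId': 'abcd1234', 'year': '2023', 'month': '03', 'day': '05', 'hour': '06', 'minute': '03'}
```

Note that the time parts come from `createdAt`, which Amplify stores in UTC (the trailing `Z`), so the partitions are UTC-based as well.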

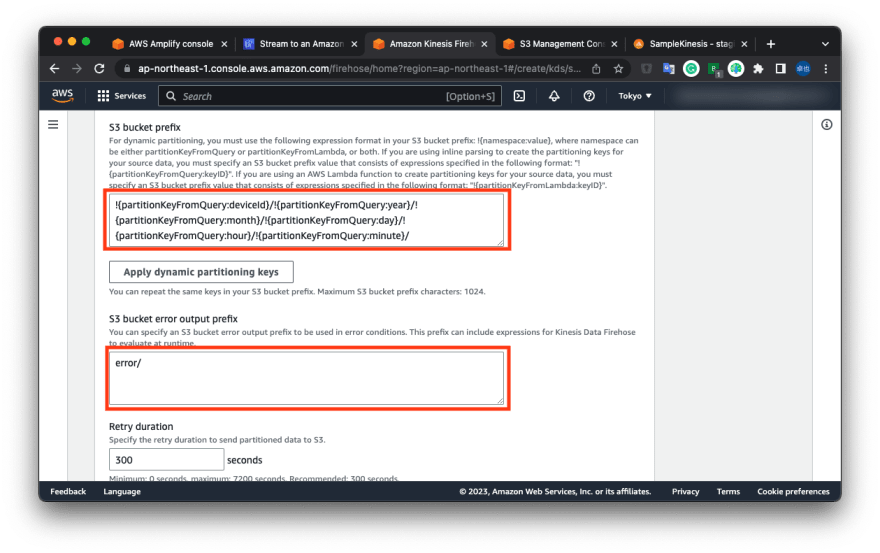

Enter an "S3 bucket prefix" and an "S3 bucket error output prefix".

I used the following values.

S3 bucket prefix

!{partitionKeyFromQuery:deviceId}/!{partitionKeyFromQuery:year}/!{partitionKeyFromQuery:month}/!{partitionKeyFromQuery:day}/!{partitionKeyFromQuery:hour}/!{partitionKeyFromQuery:minute}/

S3 bucket error output prefix

error/
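Firehose replaces each `!{partitionKeyFromQuery:NAME}` placeholder in the prefix with the value of the dynamic partitioning key of the same name. The Python sketch below imitates that substitution with a regular expression so you can preview the resulting S3 key prefix. `expand_prefix` is a hypothetical helper, not part of any AWS SDK, and the partition values are taken from the sample record in section 3.

```python
import re
from typing import Dict

# The "S3 bucket prefix" entered above, split across lines for readability.
PREFIX_TEMPLATE = (
    "!{partitionKeyFromQuery:deviceId}/!{partitionKeyFromQuery:year}/"
    "!{partitionKeyFromQuery:month}/!{partitionKeyFromQuery:day}/"
    "!{partitionKeyFromQuery:hour}/!{partitionKeyFromQuery:minute}/"
)

# Matches one placeholder and captures the key name.
PLACEHOLDER = re.compile(r"!\{partitionKeyFromQuery:(\w+)\}")


def expand_prefix(template: str, keys: Dict[str, str]) -> str:
    """Replace each placeholder with its key value (KeyError if a key is missing)."""
    return PLACEHOLDER.sub(lambda m: keys[m.group(1)], template)


keys = {"deviceId": "abcd1234", "year": "2023", "month": "03",
        "day": "05", "hour": "06", "minute": "03"}
print(expand_prefix(PREFIX_TEMPLATE, keys))
# abcd1234/2023/03/05/06/03/
```

Each device therefore gets its own top-level "folder", with one level per time unit beneath it.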

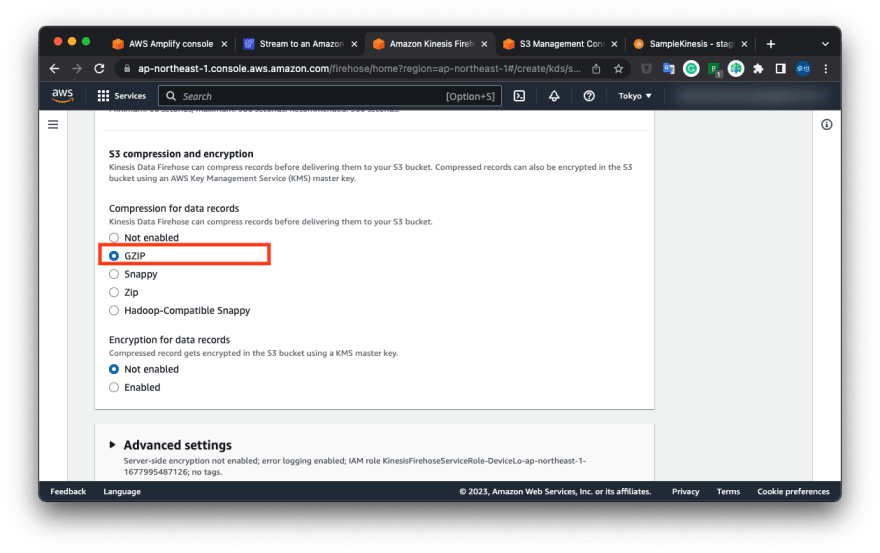

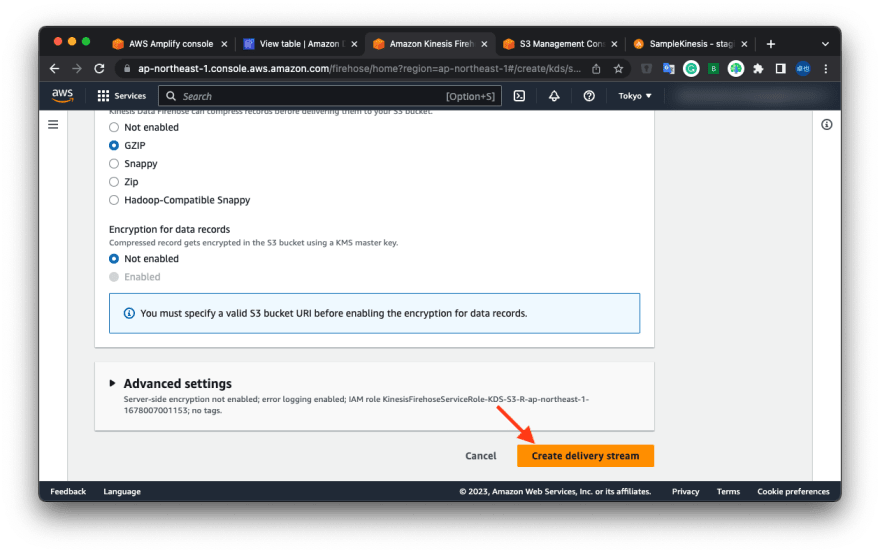

Then open "Buffer hints, compression, and encryption" and select "GZIP" under "Compression for data records".

Finally, click "Create delivery stream" at the bottom of the page.

2.4. Connect Amazon DynamoDB to Amazon Kinesis Data Stream

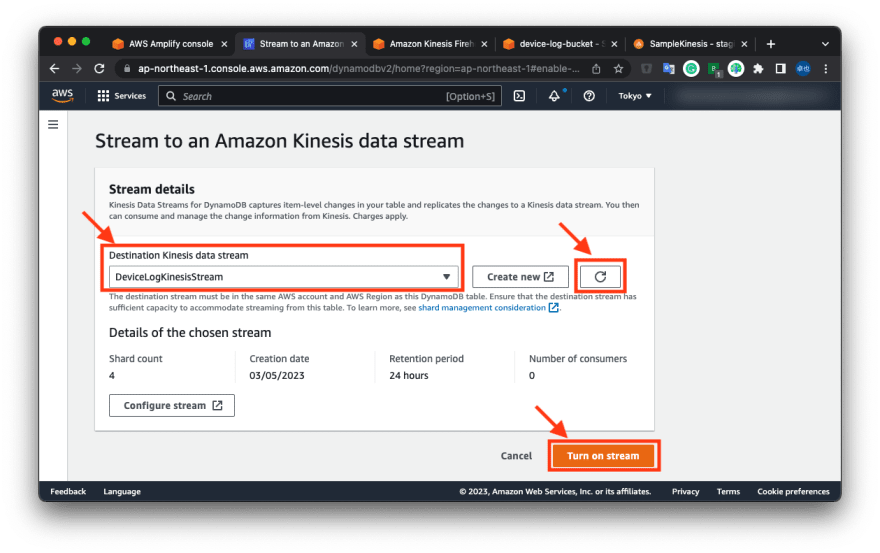

Return to the "Stream to an Amazon Kinesis data stream" page (the one from section 2.2).

Click the reload button and select "DeviceLogKinesisStream" as the "Destination Kinesis data stream".

Then click "Turn on stream" at the bottom of the page.

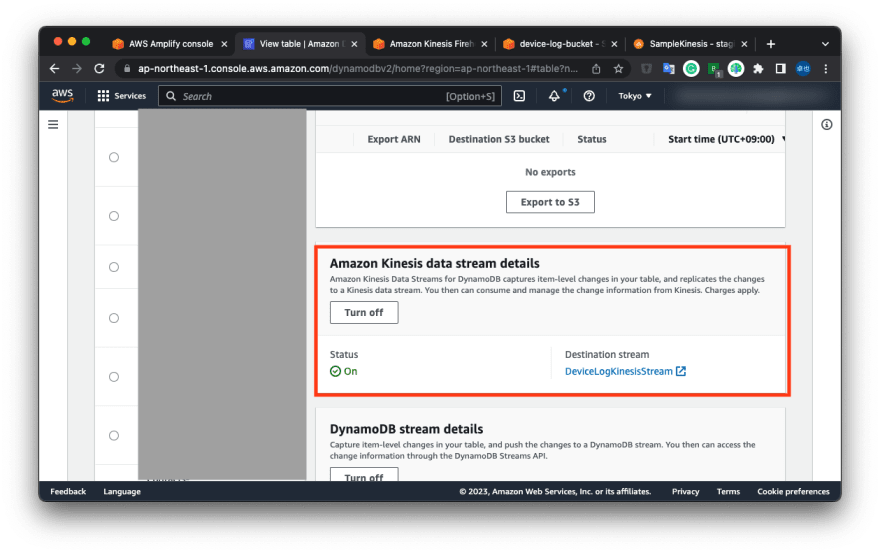

It has been a long journey.

You have now connected Amazon DynamoDB to Amazon Kinesis Data Stream, which feeds the Firehose delivery stream that writes to Amazon S3.

3. Insert data to Amazon DynamoDB and check Amazon S3

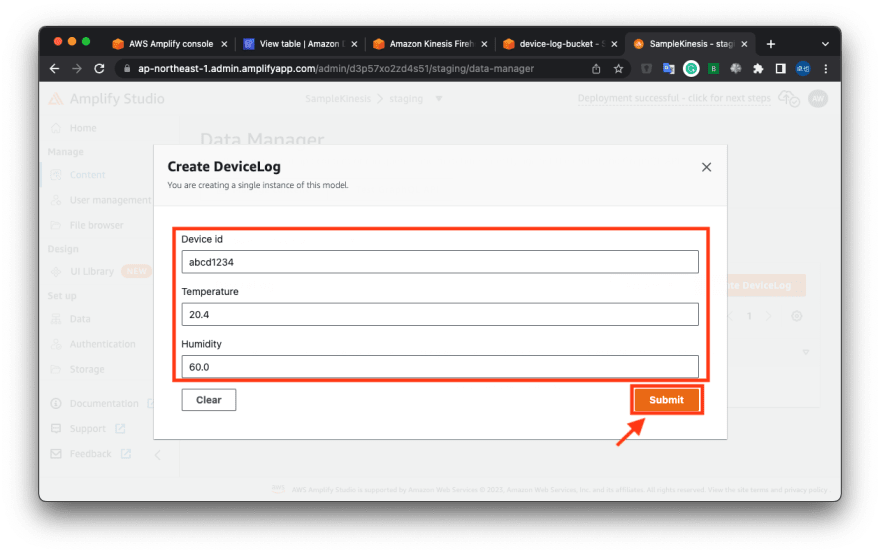

Go back to Amplify Studio and click "Content" in the left-side menu.

Then click "Create DeviceLog".

Fill in the fields and click "Submit".

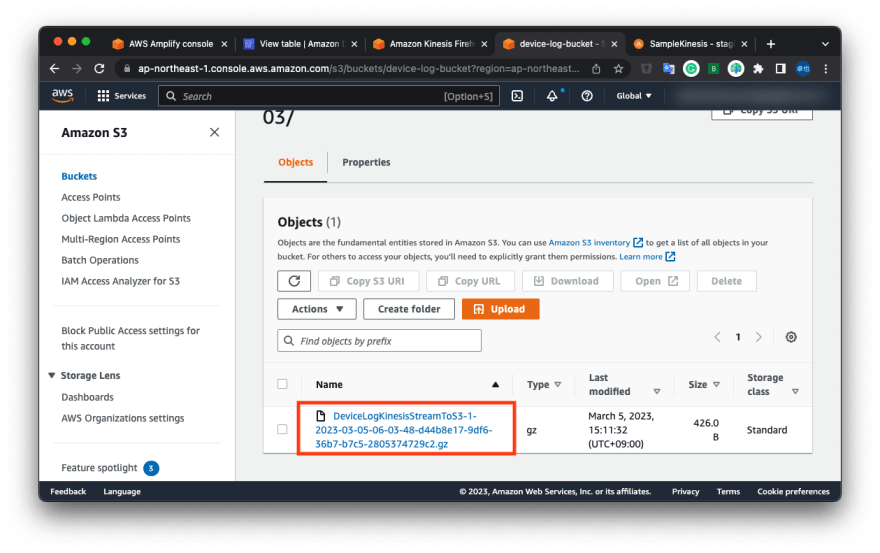

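Open the Amazon S3 console and check the objects in the bucket.

Wait 5 minutes or more: Firehose buffers incoming records before writing them to Amazon S3, so the data from Amazon DynamoDB does not appear immediately.

Download an object to check the stored data. A record looks like this: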

{
  "awsRegion": "ap-northeast-1",
  "eventID": "cce6ab95-2efd-4b24-b7a6-acf119a8a7b1",
  "eventName": "INSERT",
  "userIdentity": null,
  "recordFormat": "application/json",
  "tableName": "DeviceLog-2ixgwyd2nbfk5hran4x3k64iw4-staging",
  "dynamodb": {
    "ApproximateCreationDateTime": 1677996227900,
    "Keys": { "id": { "S": "c09657d6-54b0-4777-b274-6cf3036849d1" } },
    "NewImage": {
      "__typename": { "S": "DeviceLog" },
      "_lastChangedAt": { "N": "1677996227878" },
      "deviceId": { "S": "abcd1234" },
      "_version": { "N": "1" },
      "updatedAt": { "S": "2023-03-05T06:03:47.846Z" },
      "createdAt": { "S": "2023-03-05T06:03:47.846Z" },
      "humidity": { "N": "60" },
      "id": { "S": "c09657d6-54b0-4777-b274-6cf3036849d1" },
      "temperature": { "N": "20.4" }
    },
    "SizeBytes": 233
  },
  "eventSource": "aws:dynamodb"
}
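Because GZIP compression was selected in section 2.3, a downloaded object may still be GZIP-compressed, and a single object can hold several records written one after another. The Python sketch below (standard library only) decompresses the bytes when they start with the GZIP magic number, splits them into individual JSON records whether or not they are separated by newlines, and unwraps the `S`/`N` attribute values in `NewImage`. It is a sketch under these assumptions, not an AWS tool; `iter_records` and `unwrap_image` are hypothetical names.

```python
import gzip
import json
from decimal import Decimal
from typing import Any, Dict, Iterator


def iter_records(raw: bytes) -> Iterator[Dict[str, Any]]:
    """Yield each JSON record from a (possibly GZIP-compressed) Firehose object."""
    if raw[:2] == b"\x1f\x8b":  # GZIP magic number
        raw = gzip.decompress(raw)
    text = raw.decode("utf-8")
    decoder = json.JSONDecoder()
    pos = 0
    while True:
        # Skip whitespace (including newlines) between records.
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos >= len(text):
            return
        # raw_decode parses one JSON value and reports where it ended.
        record, consumed = decoder.raw_decode(text[pos:])
        pos += consumed
        yield record


def unwrap_image(image: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Convert S/N attribute values to plain values; other types are kept as-is."""
    plain: Dict[str, Any] = {}
    for name, attr in image.items():
        if "S" in attr:
            plain[name] = attr["S"]
        elif "N" in attr:
            plain[name] = Decimal(attr["N"])  # Decimal avoids float rounding
        else:
            plain[name] = attr
    return plain
```

For example, read a downloaded object's bytes, pass them to `iter_records`, and call `unwrap_image` on each record's `["dynamodb"]["NewImage"]`.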

Now you can store Amazon DynamoDB records in Amazon S3 with Amazon Kinesis Data Stream / Firehose.

You can use the Amazon S3 prefixes (folders) created by dynamic partitioning as Amazon Athena partitions.

The next step is setting up Amazon Athena with partitions.


Kihara, Takuya | Sciencx (2023-03-05T10:49:18+00:00) Step by step: Store Amazon DynamoDB records in AWS S3 with Amazon Kinesis Data Stream / Firehose. Retrieved from https://www.scien.cx/2023/03/05/step-by-step-store-amazon-dynamodb-records-in-aws-s3-with-amazon-kinesis-data-stream-firehose/
