This content originally appeared on DEV Community and was authored by amananandrai

OpenAI launched its new multimodal language model, GPT-4, on 14 March 2023. Multimodal means that it can take both images and text as input. It will power ChatGPT Plus, an upgraded version of the original ChatGPT tool that took the world by storm, and is available to users on a waitlist basis. GPT-4 already powers Bing search. It also works across multiple languages, including low-resource languages such as Latvian, Welsh, and Swahili.

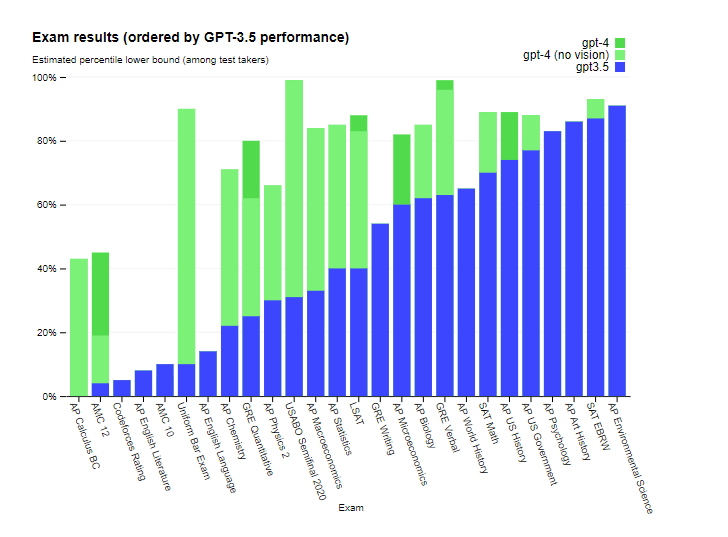

It performs as well as or better than humans on many academic examinations. A comparison of GPT-4 and GPT-3.5 on various academic exams is shown below.

Exams taken by GPT-4 include the Uniform Bar Exam (MBE+MEE+MPT), the LSAT, SAT Evidence-Based Reading & Writing, SAT Math, and the Graduate Record Examination (GRE). It can even solve LeetCode programming questions. The reasoning capability of GPT-4 has improved compared to the previous version, GPT-3.5.

The standout feature of GPT-4 is that it can recognise complex images given as input and produce output based on the instructions provided with them. Some companies have already partnered with OpenAI to use GPT-4. The best known of them are Stripe, Duolingo, Morgan Stanley, and Khan Academy. Duolingo launched a new feature, Duolingo Max, to help users learn new languages more easily. Stripe used GPT-4 to streamline its user experience and combat fraud.

To learn more about the model, follow the link below:

https://openai.com/research/gpt-4

amananandrai | Sciencx (2023-03-15T17:04:17+00:00) OpenAI launches GPT-4 a multimodal Language model. Retrieved from https://www.scien.cx/2023/03/15/openai-launches-gpt-4-a-multimodal-language-model/
