This content originally appeared on Level Up Coding - Medium and was authored by Tassilo Smola

Introduction

From time to time, I like browsing through the Kubernetes Dashboard UI instead of using kubectl commands, so that I get a quick overview of workloads, services and pods. But deploying it on a dev cluster is a manual effort, and as soon as you reset your dev cluster (which I often do to test things from scratch), you have to deploy the Dashboard again.

The second thing I don't like is the dashboard authentication, as you have to manually execute a kubectl get secret -o jsonpath command that returns the admin token used to authenticate. But I still wanted to have authentication. Because everyone loves authentication.

This was reason enough that I automated it.

When it comes to technology selection, a Helm chart containing all YAML definitions for the dashboard, with a post-install hook for retrieving the admin-user token, would have made way more sense. But this would also have been way too easy. As this is just for fun, we can totally overengineer this task without any guilt using Terraform.

Let me explain Terraform in a nutshell. It's an infrastructure-as-code tool that uses providers to manage resources, which you define in its own configuration language, HCL.

The last known state of the deployment is stored in the terraform.tfstate file and compared against the definitions written by us. Applying the same configuration again is therefore idempotent: you can run it against the cluster hundreds of times, and Terraform only changes what differs.

While running the apply command, Terraform loads all *.tf files in the working directory as one configuration, (mostly) automatically detects dependencies between the resources and creates them in the right order.

Terraform can do much more than that. If you want to have a look at the possibilities, check out the Terraform Registry.

Initialize the Terraform project

Let's start with the definition of the Terraform providers. In our case, we of course need Kubernetes and Docker. We can create a providers.tf file and adjust the Kubernetes configuration depending on our setup.

terraform {
  required_providers {
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "2.18.1"
    }
    docker = {
      source  = "kreuzwerker/docker"
      version = "3.0.1"
    }
  }
}

provider "kubernetes" {
  # adjust the config settings based on your k8s setup
  config_path    = "~/.kube/config"
  config_context = "docker-desktop"
}

provider "docker" {}

To download the providers in your .terraform folder, run an init command:

$ terraform init

Initializing the backend...

Initializing provider plugins...

- Finding kreuzwerker/docker versions matching "3.0.1"...

- Finding hashicorp/kubernetes versions matching "2.18.1"...

- Installing kreuzwerker/docker v3.0.1...

- Installed kreuzwerker/docker v3.0.1 (self-signed, key ID BD080C4571C6104C)

- Installing hashicorp/kubernetes v2.18.1...

- Installed hashicorp/kubernetes v2.18.1 (signed by HashiCorp)

Partner and community providers are signed by their developers.

If you'd like to know more about provider signing, you can read about it here:

https://www.terraform.io/docs/cli/plugins/signing.html

Terraform has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that Terraform can guarantee to make the same selections by default when

you run "terraform init" in the future.

Terraform has been successfully initialized!

That was nice. Now we need to convert the Kubernetes definitions provided in the documentation to Terraform's HCL. To get used to HCL, it is great to do this manually. For a lazy person like me, there is also a tool called k2tf, which will do the groundwork for you.

Once you have converted the definitions, store them in a .tf file called dashboard.tf.
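To give an idea of what a converted definition looks like, here is a minimal sketch of the dashboard Service in HCL. The values are taken from the plan and destroy output further down in this article; the exact result of a manual or k2tf conversion may contain more fields, so treat it as an illustration rather than the definition from the repository:

```hcl
# Sketch of the dashboard Service after conversion to HCL.
# Values mirror the plan/destroy output shown later in the article.
resource "kubernetes_service_v1" "kubernetes-dashboard" {
  metadata {
    name      = "kubernetes-dashboard"
    namespace = "kubernetes-dashboard"
    labels = {
      "k8s-app" = "kubernetes-dashboard"
    }
  }

  spec {
    # Routes traffic to the pods carrying the same label
    selector = {
      "k8s-app" = "kubernetes-dashboard"
    }

    port {
      port        = 443
      target_port = "8443"
    }
  }
}
```

Note how often the string "kubernetes-dashboard" and the label key "k8s-app" already show up in this single resource.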

As you can see in the definitions, there are a lot of duplicate values in there. I defined some variables in the variables.tf file and referenced them using the var.<VAR-NAME> syntax:

variable "kubernetes-dashboard-name" {
  type    = string
  default = "kubernetes-dashboard"
}

variable "app-selector" {
  type    = string
  default = "k8s-app"
}

Now we have to create an admin-user which is allowed to have full access to the Dashboard. To do this, we have to create a service account for the user, a secret for the token to authenticate and a cluster role binding which maps the cluster-admin role to the admin-user:

resource "kubernetes_secret_v1" "admin-user" {
  metadata {
    name      = "admin-user-token"
    namespace = var.kubernetes-dashboard-name
    annotations = {
      "kubernetes.io/service-account.name" = "admin-user"
    }
  }

  type = "kubernetes.io/service-account-token"

  depends_on = [
    kubernetes_namespace_v1.kubernetes-dashboard,
    kubernetes_service_account_v1.admin-user
  ]
}

resource "kubernetes_cluster_role_binding_v1" "admin-user" {
  metadata {
    name = "admin-user"
  }

  role_ref {
    api_group = "rbac.authorization.k8s.io"
    kind      = "ClusterRole"
    name      = "cluster-admin"
  }

  subject {
    kind      = "ServiceAccount"
    name      = "admin-user"
    namespace = var.kubernetes-dashboard-name
  }

  depends_on = [
    kubernetes_namespace_v1.kubernetes-dashboard,
    kubernetes_service_account_v1.admin-user
  ]
}

Notice the depends_on fields in the resource definitions. With them, you can explicitly define the order in which resources are deployed. Every resource that depends on another is only created after the dependency has been deployed successfully. You can specify dependencies using the <RESOURCE_TYPE>.<RESOURCE_NAME> syntax.
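The depends_on lists point at a namespace and a service account whose definitions aren't shown in this article. A minimal sketch of how those two resources might look, assuming the resource names from the depends_on entries and the variable from variables.tf (the actual definitions in the repository may differ):

```hcl
# Namespace that all dashboard resources live in.
resource "kubernetes_namespace_v1" "kubernetes-dashboard" {
  metadata {
    name = var.kubernetes-dashboard-name
  }
}

# Service account for the admin user (assumed shape, not copied from the repository).
resource "kubernetes_service_account_v1" "admin-user" {
  metadata {
    name = "admin-user"

    # Reading the name from the namespace resource instead of the variable
    # gives Terraform an implicit dependency on the namespace.
    namespace = kubernetes_namespace_v1.kubernetes-dashboard.metadata[0].name
  }
}
```

Because the service account reads its namespace from the namespace resource, Terraform picks up that dependency on its own, and no depends_on is needed there. This is the "mostly automatic" detection mentioned in the introduction; depends_on is for the cases where no such reference exists.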

We're almost done, but how do we retrieve the admin-token for authenticating via the UI?

Terraform also has output values, which return values after an apply. The cool thing about this is that we can reference fields of resources with the same syntax we used to specify the dependencies. So define the output admin-token:

output "admin-token" {
  value = kubernetes_secret_v1.admin-user.data.token
}

Now we can execute a terraform plan command to verify our configuration against the cluster:

$ terraform plan

...

│ Error: Output refers to sensitive values

│

│ on variables.tf line 13:

│ 13: output "admin-token" {

│

│ To reduce the risk of accidentally exporting sensitive data that was intended to be only internal, Terraform requires that any root module output containing sensitive data be explicitly marked as sensitive, to confirm your

│ intent.

│

│ If you do intend to export this data, annotate the output value as sensitive by adding the following argument:

│ sensitive = true

Oh no! We ran into an error!

This is because the token is a sensitive value, and Terraform refuses to expose sensitive values in a root module output unless the output is explicitly marked as sensitive, which makes perfect sense for production environments.
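For anything beyond a throwaway dev cluster, the fix suggested by the error message could look like the sketch below. It is an alternative to the admin-token output defined above, not an addition, since output names must be unique within a module:

```hcl
# Alternative: keep the token marked as sensitive, as the error message suggests.
output "admin-token" {
  value     = kubernetes_secret_v1.admin-user.data.token
  sensitive = true
}
```

With this variant, Terraform prints admin-token = <sensitive> after an apply, and the value can be read on demand with terraform output -raw admin-token. Keep in mind that the token is stored in the state file either way.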

However, as we set this up only for dev purposes, we can work around this by using the nonsensitive function:

output "admin-token" {
  value = nonsensitive(kubernetes_secret_v1.admin-user.data.token)
}

Now we can execute the plan command again and check if the configuration is valid. You should see something like this:

$ terraform plan

...

+ session_affinity = "None"

+ type = "ClusterIP"

+ port {

+ node_port = (known after apply)

+ port = 443

+ protocol = "TCP"

+ target_port = "8443"

}

}

}

Plan: 17 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ admin-token = (known after apply)

You can see all lines that will be applied in a git-diff fashion, ready to review, marked with a green plus. If resources get deleted, the lines are marked with a red minus. Also, some values like admin-token or node_port aren't displayed because they depend on the cluster deployment and are only known after applying the resources.

From the output we can also see that Terraform will add 17 resources, change 0 and destroy 0. If you change some values after apply and run the plan command again, only the changed resources will be displayed.

So run the terraform apply command to deploy the resources on the cluster. The console output will once again show the diff and ask you to accept the actions described above:

$ terraform apply

...

+ session_affinity = "None"

+ type = "ClusterIP"

+ port {

+ node_port = (known after apply)

+ port = 443

+ protocol = "TCP"

+ target_port = "8443"

}

}

}

Plan: 17 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ admin-token = (known after apply)

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: hell yeah!

If you're not as dumb as me and really enter yes in the command prompt, the output log displays the created resources and finally prints the admin-token as output:

...

kubernetes_namespace_v1.kubernetes-dashboard: Creating...

kubernetes_namespace_v1.kubernetes-dashboard: Creation complete after 0s [id=kubernetes-dashboard]

kubernetes_service_account_v1.kubernetes-dashboard: Creating...

kubernetes_cluster_role_binding_v1.kubernetes-dashboard: Creating...

kubernetes_service_account_v1.admin-user: Creating...

kubernetes_secret_v1.kubernetes-dashboard-key-holder: Creating...

kubernetes_config_map_v1.kubernetes-dashboard-settings: Creating...

kubernetes_cluster_role_v1.kubernetes-dashboard: Creating...

kubernetes_service_v1.kubernetes-dashboard: Creating...

kubernetes_role_v1.kubernetes-dashboard: Creating...

kubernetes_secret_v1.kubernetes-dashboard-key-holder: Creation complete after 0s [id=kubernetes-dashboard/kubernetes-dashboard-key-holder]

kubernetes_deployment.dashboard-metrics-scraper: Creating...

kubernetes_config_map_v1.kubernetes-dashboard-settings: Creation complete after 0s [id=kubernetes-dashboard/kubernetes-dashboard-settings]

kubernetes_deployment.kubernetes-dashboard: Creating...

kubernetes_role_binding_v1.kubernetes-dashboard: Creating...

kubernetes_cluster_role_v1.kubernetes-dashboard: Creation complete after 0s [id=kubernetes-dashboard]

kubernetes_secret_v1.kubernetes-dashboard-csrf: Creating...

kubernetes_service_account_v1.admin-user: Creation complete after 0s [id=kubernetes-dashboard/admin-user]

kubernetes_service_account_v1.kubernetes-dashboard: Creation complete after 0s [id=kubernetes-dashboard/kubernetes-dashboard]

kubernetes_cluster_role_binding_v1.kubernetes-dashboard: Creation complete after 0s [id=kubernetes-dashboard]

kubernetes_role_v1.kubernetes-dashboard: Creation complete after 0s [id=kubernetes-dashboard/kubernetes-dashboard]

kubernetes_service_v1.kubernetes-dashboard: Creation complete after 0s [id=kubernetes-dashboard/kubernetes-dashboard]

kubernetes_secret_v1.kubernetes-dashboard-certs: Creating...

kubernetes_service_v1.dashboard-metrics-scraper: Creating...

kubernetes_cluster_role_binding_v1.admin-user: Creating...

kubernetes_secret_v1.admin-user: Creating...

kubernetes_role_binding_v1.kubernetes-dashboard: Creation complete after 0s [id=kubernetes-dashboard/kubernetes-dashboard]

kubernetes_secret_v1.kubernetes-dashboard-csrf: Creation complete after 0s [id=kubernetes-dashboard/kubernetes-dashboard-csrf]

kubernetes_secret_v1.kubernetes-dashboard-certs: Creation complete after 0s [id=kubernetes-dashboard/kubernetes-dashboard-certs]

kubernetes_cluster_role_binding_v1.admin-user: Creation complete after 0s [id=admin-user]

kubernetes_service_v1.dashboard-metrics-scraper: Creation complete after 0s [id=kubernetes-dashboard/dashboard-metrics-scraper]

kubernetes_secret_v1.admin-user: Creation complete after 0s [id=kubernetes-dashboard/admin-user-token]

kubernetes_deployment.dashboard-metrics-scraper: Creation complete after 3s [id=kubernetes-dashboard/dashboard-metrics-scraper]

kubernetes_deployment.kubernetes-dashboard: Creation complete after 3s [id=kubernetes-dashboard/kubernetes-dashboard]

Apply complete! Resources: 17 added, 0 changed, 0 destroyed.

Outputs:

admin-token = "SomeCrypticStuffLikeeyJhbGciOiJSUzI1NiIs..."

All you have to do now is executing a proxy command to expose the Dashboard to localhost:

$kubectl proxy

Starting to serve on 127.0.0.1:8001

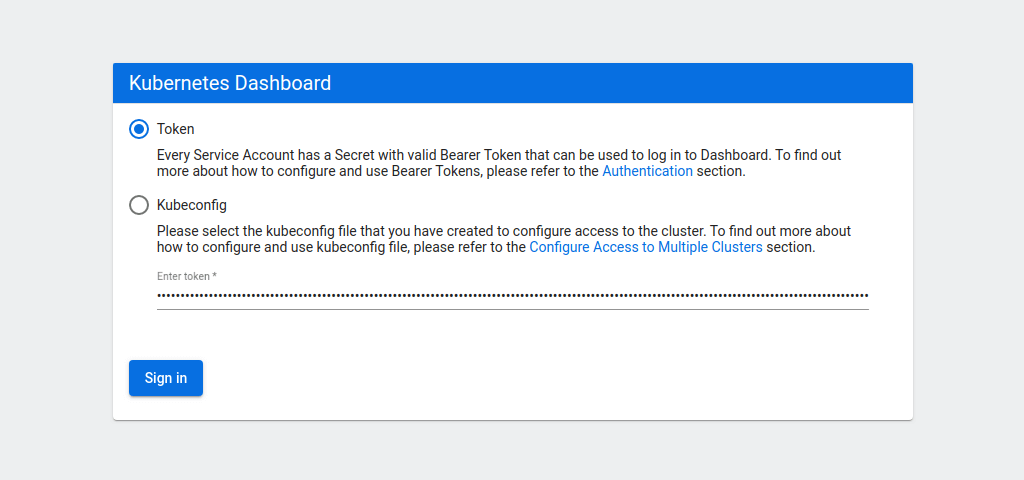

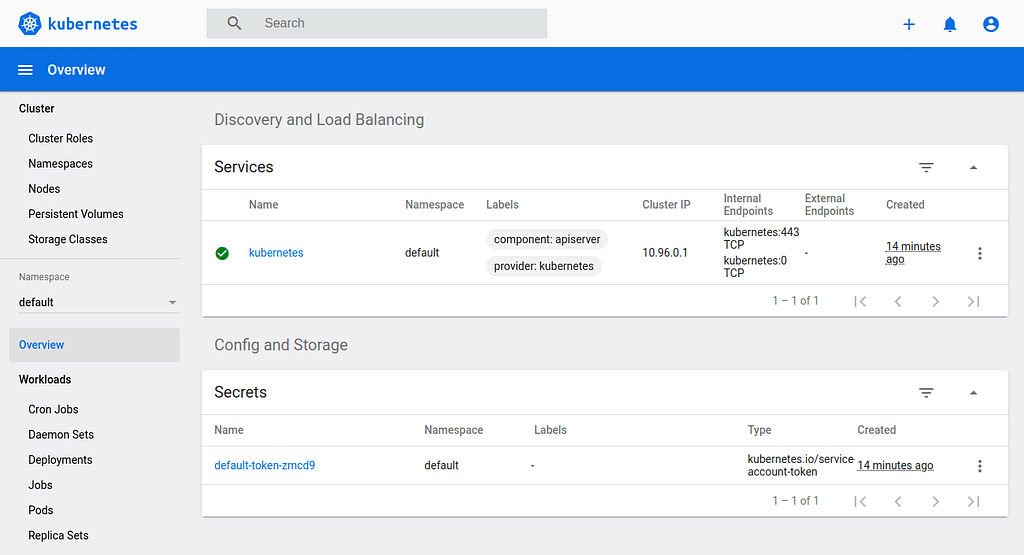

The dashboard should now be available, and you can authenticate with the token from the output:

If you want to delete the dashboard again, you can run a destroy command. This will again display the diff with red minus symbols, ask you to confirm the changes and output the deleted resources:

$ terraform destroy

...

# kubernetes_service_v1.kubernetes-dashboard will be destroyed

- resource "kubernetes_service_v1" "kubernetes-dashboard" {

- id = "kubernetes-dashboard/kubernetes-dashboard" -> null

- status = [

- {

- load_balancer = [

- {

- ingress = []

},

]

},

] -> null

- wait_for_load_balancer = true -> null

- metadata {

- annotations = {} -> null

- generation = 0 -> null

- labels = {

- "k8s-app" = "kubernetes-dashboard"

} -> null

- name = "kubernetes-dashboard" -> null

- namespace = "kubernetes-dashboard" -> null

- resource_version = "13745" -> null

- uid = "891bae3e-3633-4943-bb13-16bccda7201e" -> null

}

- spec {

- allocate_load_balancer_node_ports = true -> null

- cluster_ip = "10.101.155.31" -> null

- cluster_ips = [

- "10.101.155.31",

] -> null

- external_ips = [] -> null

- health_check_node_port = 0 -> null

- internal_traffic_policy = "Cluster" -> null

- ip_families = [

- "IPv4",

] -> null

- ip_family_policy = "SingleStack" -> null

- load_balancer_source_ranges = [] -> null

- publish_not_ready_addresses = false -> null

- selector = {

- "k8s-app" = "kubernetes-dashboard"

} -> null

- session_affinity = "None" -> null

- type = "ClusterIP" -> null

- port {

- node_port = 0 -> null

- port = 443 -> null

- protocol = "TCP" -> null

- target_port = "8443" -> null

}

}

}

Plan: 0 to add, 0 to change, 17 to destroy.

Changes to Outputs:

- admin-token = "eyJhbGciOiJSUzI1NiIsImtpZCI6ImUweTk5bzRlb2ZWLUxuY0RWczNPX2RQaWg0Q181NzZJaFktaDg1MEl5U00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2NTlmNWM0OC03MDQ3LTQ4ZDctOTExMS1lOGFiNTYzMWI3YTEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.FZr3tugAvdh49V4c5CFC8vWR_aqSaUohZoT-xUYer0Qljs_UrfMrHHJP2_4TGfHAsLBiq4pXmPNNcfj9ihsTI_ihhItoJv-74f1jubTRI97KgnAoFani-5nF-bF0IdquhOpvJO-3gLAoBcKtQeNQJ4i-pFM3k_-IGHXqVYXtr5RSZu54DXPDURIZVmWCRC53GHt-tsw6bGUkBixYfhBtpCneBGutth21PXTABfggVrPg-anKBzgEOH6C5RVjeZcvTtDPDtlCd1PeY_-dzsQ1rWKSYEEOGvMLU4Y9ipT1GA8xSQrEQPCFyRzWyJxy_KByAqmT1dBvkk10-Y0e2L2zKQ" -> null

Do you really want to destroy all resources?

Terraform will destroy all your managed infrastructure, as shown above.

There is no undo. Only 'yes' will be accepted to confirm.

Enter a value: yes

kubernetes_secret_v1.kubernetes-dashboard-certs: Destroying... [id=kubernetes-dashboard/kubernetes-dashboard-certs]

kubernetes_cluster_role_binding_v1.kubernetes-dashboard: Destroying... [id=kubernetes-dashboard]

kubernetes_secret_v1.kubernetes-dashboard-key-holder: Destroying... [id=kubernetes-dashboard/kubernetes-dashboard-key-holder]

kubernetes_cluster_role_binding_v1.admin-user: Destroying... [id=admin-user]

kubernetes_cluster_role_v1.kubernetes-dashboard: Destroying... [id=kubernetes-dashboard]

kubernetes_secret_v1.kubernetes-dashboard-csrf: Destroying... [id=kubernetes-dashboard/kubernetes-dashboard-csrf]

kubernetes_role_v1.kubernetes-dashboard: Destroying... [id=kubernetes-dashboard/kubernetes-dashboard]

kubernetes_service_v1.dashboard-metrics-scraper: Destroying... [id=kubernetes-dashboard/dashboard-metrics-scraper]

kubernetes_service_v1.kubernetes-dashboard: Destroying... [id=kubernetes-dashboard/kubernetes-dashboard]

kubernetes_deployment.dashboard-metrics-scraper: Destroying... [id=kubernetes-dashboard/dashboard-metrics-scraper]

kubernetes_cluster_role_v1.kubernetes-dashboard: Destruction complete after 0s

kubernetes_role_v1.kubernetes-dashboard: Destruction complete after 0s

kubernetes_secret_v1.kubernetes-dashboard-csrf: Destruction complete after 0s

kubernetes_secret_v1.kubernetes-dashboard-certs: Destruction complete after 0s

kubernetes_secret_v1.kubernetes-dashboard-key-holder: Destruction complete after 0s

kubernetes_secret_v1.admin-user: Destroying... [id=kubernetes-dashboard/admin-user-token]

kubernetes_cluster_role_binding_v1.admin-user: Destruction complete after 0s

kubernetes_cluster_role_binding_v1.kubernetes-dashboard: Destruction complete after 0s

kubernetes_role_binding_v1.kubernetes-dashboard: Destroying... [id=kubernetes-dashboard/kubernetes-dashboard]

kubernetes_deployment.kubernetes-dashboard: Destroying... [id=kubernetes-dashboard/kubernetes-dashboard]

kubernetes_deployment.dashboard-metrics-scraper: Destruction complete after 0s

kubernetes_service_v1.dashboard-metrics-scraper: Destruction complete after 0s

kubernetes_service_v1.kubernetes-dashboard: Destruction complete after 0s

kubernetes_secret_v1.admin-user: Destruction complete after 0s

kubernetes_config_map_v1.kubernetes-dashboard-settings: Destroying... [id=kubernetes-dashboard/kubernetes-dashboard-settings]

kubernetes_service_account_v1.kubernetes-dashboard: Destroying... [id=kubernetes-dashboard/kubernetes-dashboard]

kubernetes_deployment.kubernetes-dashboard: Destruction complete after 0s

kubernetes_config_map_v1.kubernetes-dashboard-settings: Destruction complete after 0s

kubernetes_role_binding_v1.kubernetes-dashboard: Destruction complete after 0s

kubernetes_service_account_v1.kubernetes-dashboard: Destruction complete after 0s

kubernetes_service_account_v1.admin-user: Destroying... [id=kubernetes-dashboard/admin-user]

kubernetes_service_account_v1.admin-user: Destruction complete after 1s

kubernetes_namespace_v1.kubernetes-dashboard: Destroying... [id=kubernetes-dashboard]

kubernetes_namespace_v1.kubernetes-dashboard: Destruction complete after 6s

Destroy complete! Resources: 17 destroyed.

Conclusion

That's it, we can now apply and destroy the resources with one command and also make the deployment replicable across multiple environments.

Terraform is a great tool for defining infrastructure related resources. You have built-in functions, variables, modules etc. and a really nice overview of what's going on during resource creation.

Of course, this covers only the very basics of using Terraform. In a production environment, you won't store the terraform.tfstate file locally but define, for example, an S3 backend to share it with other users across your organization. A backend that supports state locking also matters there, because applying changes simultaneously from multiple sources is error-prone without it.
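As a rough sketch, such a backend definition could look like the following. The bucket, key, region and table names are hypothetical placeholders, the bucket and the DynamoDB table have to exist before running terraform init, and the available locking options depend on your Terraform version:

```hcl
terraform {
  backend "s3" {
    # Hypothetical names; replace them with your own bucket, path and region
    bucket = "my-terraform-state"
    key    = "kubernetes-dashboard/terraform.tfstate"
    region = "eu-central-1"

    # DynamoDB table used for state locking (hypothetical name)
    dynamodb_table = "terraform-locks"
  }
}
```

After adding a backend block, terraform init has to be run again so that Terraform can set up the new backend and offer to migrate the existing local state.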

The complete code that I used can be found in this Git repository:

How to Deploy a Kubernetes Dashboard using Terraform was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

Tassilo Smola | Sciencx (2023-03-16T13:07:06+00:00) How to Deploy a Kubernetes Dashboard using Terraform. Retrieved from https://www.scien.cx/2023/03/16/how-to-deploy-a-kubernetes-dashboard-using-terraform/
