This content originally appeared on DEV Community and was authored by Daniele Frasca

Testing serverless applications can seem complicated because of their architecture, especially if you are relatively new to it. It does not help to hear that serverless computing requires a new mindset because it changes how developers build and deploy applications.

I can't entirely agree with this statement because even with the traditional server-based architecture, where I should have complete control over the environment in which my code runs, it is still complex to make it run perfectly in my local environment. I still remember tedious configurations and different software to install to run the entire application.

Unless I am developing a Hello World project, serverless testing is no more complicated than traditional testing. It is different and follows different rules.

Since the cloud provider manages the underlying infrastructure, it can be challenging to recreate the environment in which the code will run, which can lead to unexpected issues when deploying to production. For example, a typical serverless application is composed of a few Lambda functions and other services that glue them together, so developers need to adapt and move away from traditional monolithic test designs.

To mitigate these challenges, developers must adopt new testing strategies that consider the unique characteristics of serverless computing. This may include using specialised testing tools, building more monitoring and logging capabilities, and relying more heavily on automated testing and deployment pipelines.

Regardless of the type of project, a practical approach and strategy when testing a distributed serverless application involve following a clear process.

My serverless testing strategy is divided into the following steps:

unit tests

component tests

e2e tests

Unit tests aim for 100% coverage of the business logic, proving it logically. Assuming one assertion for each test, I can ensure that each line of code is tested thoroughly. For example, if the code contains an if statement, a unit test should cover each branch of the statement:

```
1 await myService.doSomething();
2 const result = await myService.doSomethingElse();
3 if (result?.items && something) {
4   await myService2.doSomething();
5   return "ok";
6 }
7
8 return "ciao";
```

I expect to see the following:

Asserting that line 1 is called with the expected parameters, if any

result is null or undefined

result is an object, but result.items is undefined or null

result is an object, and result.items is an empty array

result is an object, and result.items is a non-empty array, but something is false

result is an object, and result.items is a non-empty array, and something is true

result is an object, and result.items is a non-empty array, and something is true and assert line 4 with the expected parameters if any
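
The cases above can be sketched as a small table-driven test. This is a minimal sketch, not the author's test suite: the `Service1` and `Service2` interfaces, the `handle` wrapper and the hand-rolled fakes are assumptions made for illustration, and a real suite would more likely use Jest mocks. It also makes one detail visible: in JavaScript an empty array is truthy, so with the condition as written the empty-array case takes the same branch as the non-empty one whenever `something` is true.

```typescript
// Hypothetical shapes for the two services; the article does not define them.
type Result = { items?: unknown[] | null } | null | undefined;

interface Service1 {
  doSomething(): Promise<void>;
  doSomethingElse(): Promise<Result>;
}

interface Service2 {
  doSomething(): Promise<void>;
}

// The snippet under test, wrapped in a function so dependencies can be injected.
async function handle(myService: Service1, myService2: Service2, something: boolean): Promise<string> {
  await myService.doSomething();
  const result = await myService.doSomethingElse();
  if (result?.items && something) {
    await myService2.doSomething();
    return "ok";
  }
  return "ciao";
}

// Runs one case with fakes that count calls, so each branch can be asserted.
async function runCase(result: Result, something: boolean) {
  const calls = { line1: 0, line4: 0 };
  const myService: Service1 = {
    doSomething: async () => { calls.line1 += 1; },
    doSomethingElse: async () => result,
  };
  const myService2: Service2 = {
    doSomething: async () => { calls.line4 += 1; },
  };
  const output = await handle(myService, myService2, something);
  return { output, calls };
}

// One row per case: [result, something, expected output, expected calls to line 4].
const cases: Array<[Result, boolean, string, number]> = [
  [null, true, "ciao", 0],
  [undefined, true, "ciao", 0],
  [{}, true, "ciao", 0],
  [{ items: null }, true, "ciao", 0],
  [{ items: [] }, true, "ok", 1], // an empty array is truthy in JavaScript
  [{ items: [1] }, false, "ciao", 0],
  [{ items: [1] }, true, "ok", 1],
];

// Returns a description of every case that did not behave as expected.
async function runAllCases(): Promise<string[]> {
  const failures: string[] = [];
  for (const [result, something, expected, line4Calls] of cases) {
    const { output, calls } = await runCase(result, something);
    if (output !== expected || calls.line1 !== 1 || calls.line4 !== line4Calls) {
      failures.push(JSON.stringify({ result, something, output, calls }));
    }
  }
  return failures;
}
```

If the empty-array case is meant to return "ciao", the condition would have to check the length of `result.items` as well, which is exactly the kind of gap these tests are there to surface.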

Once unit tests give me a high degree of confidence, it is time to test the Lambda function and its connections. I test all my infrastructure in the cloud because it gives me the real experience of how the code behaves. Most simulators need extra configuration or lack support for some services, which forces me to compromise. Ultimately, I test everything in the cloud to make my life easier.

Based on my serverless testing strategy, I have now left the following:

component tests

e2e tests

For both of them, I use Cucumber.

Component tests and e2e:

Live in the same solution.

Have different tags

Have different setups

Component testing involves testing individual functions in complete isolation.

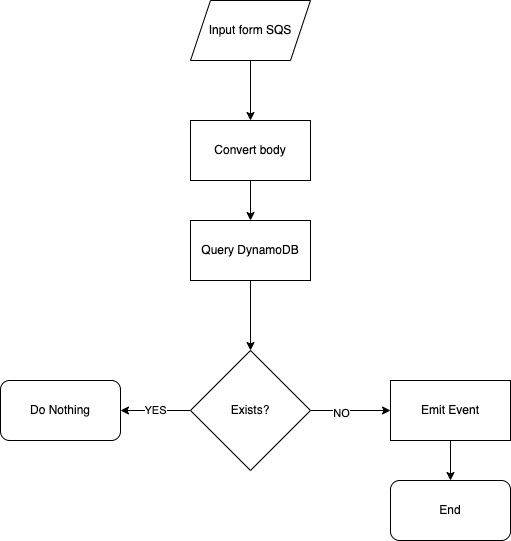

A function triggered by an SQS message does some processing, calls DynamoDB, and emits an event with EventBridge.

While the logical flow is tested with unit tests, the IAM roles for using services like DynamoDB or EventBridge still need confirmation. For example, I need to verify that EventBridge or SQS has the correct rule or filtering.

With the component test, I replace, for example, the Lambda event source mapping with my test SQS queue and subscribe to the EventBridge event with my new test target. Then, based on the business rule, I can place specific SQS messages as input and assert the event in the output target.
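
The input and output flow of such a component test can be sketched as below. This is only an illustration under assumptions: `sendInput` and `receiveOutputs` are hypothetical stand-ins for "put a message on the test SQS queue" and "read whatever reached the test target", and `waitForEvent`, `sendAndExpect` and the `OutputEvent` shape are names made up for this sketch. The point is the polling loop: because the flow is asynchronous, the assertion has to retry until the event arrives or a deadline passes.

```typescript
// Hypothetical shape of the event emitted by the component under test.
interface OutputEvent {
  detailType: string;
  detail: Record<string, unknown>;
}

const sleep = (ms: number): Promise<void> => new Promise((resolve) => setTimeout(resolve, ms));

// Polls the output target until an event satisfies the predicate or the timeout expires.
async function waitForEvent(
  receiveOutputs: () => Promise<OutputEvent[]>,
  matches: (event: OutputEvent) => boolean,
  timeoutMs = 30000,
  intervalMs = 500,
): Promise<OutputEvent> {
  const deadline = Date.now() + timeoutMs;
  do {
    const found = (await receiveOutputs()).find(matches);
    if (found) return found;
    await sleep(intervalMs);
  } while (Date.now() < deadline);
  throw new Error(`No matching event received within ${timeoutMs} ms`);
}

// A component test step: place the input, then wait for the expected output.
async function sendAndExpect(
  sendInput: (body: string) => Promise<void>,
  receiveOutputs: () => Promise<OutputEvent[]>,
  body: string,
  expectedDetailType: string,
  timeoutMs = 30000,
  intervalMs = 500,
): Promise<OutputEvent> {
  await sendInput(body);
  return waitForEvent(receiveOutputs, (event) => event.detailType === expectedDetailType, timeoutMs, intervalMs);
}
```

Injecting the two functions keeps the step independent of how the test queue and test target are actually read, so the same helper can serve several scenarios.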

Cucumber has conditional hooks. They have, for example, different scopes, and they are executed at different times.

What I can do before all testing starts:

The replacement of the Lambda event source mapping

The creation of the new EventBridge target

Replacing a third-party endpoint with my Mock service

All of this can happen inside the beforeAll. This function is executed once before all the tests in a test suite, and it is perfect for setting up the test environment, initialising services and so on.

I can have something similar:

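```typescript
export abstract class Steps {
  public hooks: StepDefinitions = () => {
    // Runs once before all the tests in the suite: the place to prepare the environment.
    beforeAll(async (): Promise<void> => {
      jest.setTimeout(Wait.JEST_TIMEOUT);
      // Load the environment variables for the current CI environment.
      require("custom-env").env(getEnvironmentVariable("CI_ENVIRONMENT_NAME"));

      const initActions = [];
      // Rewire the component only when no tag is set or a matching tag is being run.
      if (!getEnvironmentVariable("TEST_TAG") || ["@myservice", "@myservice2"].includes(getEnvironmentVariable("TEST_TAG"))) {
        initActions.push(setSqsAsTrigger(functionName));
        initActions.push(setSqsAsTarget(eventBridge));
        initActions.push(setMockService(functionName));
        ...
      }
      // The setup actions are independent, so they run in parallel.
      await Promise.all(initActions);
    });
  }
}
```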

The method setSqsAsTrigger could be as simple as:

```typescript
import Lambda, { CreateEventSourceMappingRequest, ListEventSourceMappingsResponse } from "aws-sdk/clients/lambda";

async function setSqsAsTrigger(functionName: string, sqsArn: string): Promise<void> {
  // Call listEventSourceMappings to see whether the mapping already exists
  // If it does not, create it with a CreateEventSourceMappingRequest
  // Wait for the event source mapping to be enabled
}
```
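
One possible shape for those three steps is sketched below. It is an illustration under assumptions rather than the author's implementation. To keep it self-contained, the AWS client is hidden behind a hypothetical `MappingClient` interface whose methods mirror the Lambda operations ListEventSourceMappings, CreateEventSourceMapping and GetEventSourceMapping; with the AWS SDK for JavaScript v2, each method would typically wrap the corresponding `lambda.<operation>(params).promise()` call. The extra `client` parameter, the returned UUID, the polling interval and the attempt limit are all choices made for this sketch.

```typescript
// Minimal view of an event source mapping; field names follow the Lambda API.
interface Mapping {
  UUID?: string;
  State?: string; // for example "Creating", "Enabling", "Enabled"
}

// Hypothetical wrapper around the Lambda client, so the sketch runs without AWS.
interface MappingClient {
  list(functionName: string, eventSourceArn: string): Promise<Mapping[]>;
  create(functionName: string, eventSourceArn: string): Promise<Mapping>;
  get(uuid: string): Promise<Mapping>;
}

const pause = (ms: number): Promise<void> => new Promise((resolve) => setTimeout(resolve, ms));

async function setSqsAsTrigger(
  client: MappingClient,
  functionName: string,
  sqsArn: string,
  pollMs = 2000,
  maxAttempts = 60,
): Promise<string> {
  // Reuse the mapping if the queue is already wired to the function, otherwise create it.
  const existing = await client.list(functionName, sqsArn);
  let mapping = existing.length > 0 ? existing[0] : await client.create(functionName, sqsArn);
  if (!mapping.UUID) throw new Error("Event source mapping has no UUID");
  const uuid = mapping.UUID;

  // Wait for the mapping to be enabled before any test sends messages.
  for (let attempt = 0; mapping.State !== "Enabled"; attempt += 1) {
    if (attempt >= maxAttempts) throw new Error(`Mapping ${uuid} was not enabled in time`);
    await pause(pollMs);
    mapping = await client.get(uuid);
  }
  return uuid;
}
```

An existing mapping that is disabled would never reach the "Enabled" state on its own, so a fuller version would also need to enable it through an update call, which is not shown here.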

While it could seem a bit overkill, achieving it with some modular code is simple. With this in place, I can dynamically replace my component's input and output services based on the test I run.

Component tests are usually handier than e2e tests because:

I limit the scope of testing, meaning they are faster to run.

I can replace things like timeout, batching, and other parameters to run the test more quickly.

I can run them automatically at each commit.
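
Replacing batching parameters for the duration of a test run could look like the sketch below. It rests on assumptions: the two field names follow the Lambda UpdateEventSourceMapping request, while `deployedSettings`, its values, and the `apply` callback (a stand-in for whatever call updates the mapping) are hypothetical. The idea is simply to shrink the batch and the batching window while tests run and to restore the deployed values afterwards.

```typescript
// Subset of the UpdateEventSourceMapping request fields that control batching.
interface BatchingSettings {
  BatchSize: number;
  MaximumBatchingWindowInSeconds: number;
}

// Hypothetical deployed values; the article does not state the real ones.
const deployedSettings: BatchingSettings = { BatchSize: 10, MaximumBatchingWindowInSeconds: 60 };

// Settings for component tests: deliver each message immediately and on its own.
function settingsForComponentTest(deployed: BatchingSettings): BatchingSettings {
  return { ...deployed, BatchSize: 1, MaximumBatchingWindowInSeconds: 0 };
}

// Applies the test settings, runs the tests, and always restores the deployed settings.
async function withTestSettings<T>(
  apply: (settings: BatchingSettings) => Promise<void>,
  deployed: BatchingSettings,
  run: () => Promise<T>,
): Promise<T> {
  await apply(settingsForComponentTest(deployed));
  try {
    return await run();
  } finally {
    await apply(deployed); // restore even when the test run fails
  }
}
```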

With component tests green and hooked into my CI, I can deploy to production, which enables scenarios such as Continuous Deployment (CD), or at least avoid wasting a lot of QA time when I hand my work over.

What is the difference with e2e testing?

While they are very similar to component tests, in this case, I do not change the services between the microservices or the batching, rules, or timeout.

Instead, based on my application business rules, I create inputs at the beginning of the chain and wait for the output at the very end, where I assert and validate my application.

One test could run, for example, for many minutes, so the overall suite could take hours to run, making it unsuitable for quick and continuous deployments.

I keep the option to mock third-party APIs using unique tags and environment variables. While I know the interface of a third-party team or service, I cannot rely on their system being up and healthy just for my test to run. So I primarily run with a mock service (API Gateway + DynamoDB) where I set up the data that I expect to be returned. If, instead, I need to test my application against the real dependency of another team or service, because an interface has changed or for some other reason, I trigger the e2e tests with the unique tag or environment variable.
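
The mock-by-default behaviour described above can be sketched as a small resolver. Everything named here is an assumption for illustration: the `USE_REAL_THIRD_PARTY` variable, the `@thirdparty` tag and the `EndpointConfig` shape are hypothetical, and only `TEST_TAG` appears earlier in the article.

```typescript
// Hypothetical configuration holding both endpoints of a third-party dependency.
interface EndpointConfig {
  mockUrl: string; // the mock service, used by default
  realUrl: string; // the real third-party service, used only on request
}

// Picks the endpoint for a test run: the mock unless the real one is explicitly requested.
function resolveThirdPartyUrl(
  env: Record<string, string | undefined>,
  config: EndpointConfig,
): string {
  const useReal = env["USE_REAL_THIRD_PARTY"] === "true" || env["TEST_TAG"] === "@thirdparty";
  return useReal ? config.realUrl : config.mockUrl;
}
```

In a Node.js test setup the first argument would typically be `process.env`. Keeping the mock as the default means a third-party outage cannot turn the regular e2e run red.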

Conclusion

The strategy above is working well so far. It has a steep learning curve initially because everything has to be set up, but in the end it gives you speed and a stable application, and the confidence to release at any time of the year. I hope you find this helpful and can reuse some parts in your own serverless testing strategy.


Daniele Frasca | Sciencx (2023-04-13T04:51:06+00:00) Serverless testing is not complicated. It just requires some effort. Retrieved from https://www.scien.cx/2023/04/13/serverless-testing-is-not-complicated-it-just-requires-some-effort/
