This content originally appeared on Level Up Coding - Medium and was authored by Kamini Kamal

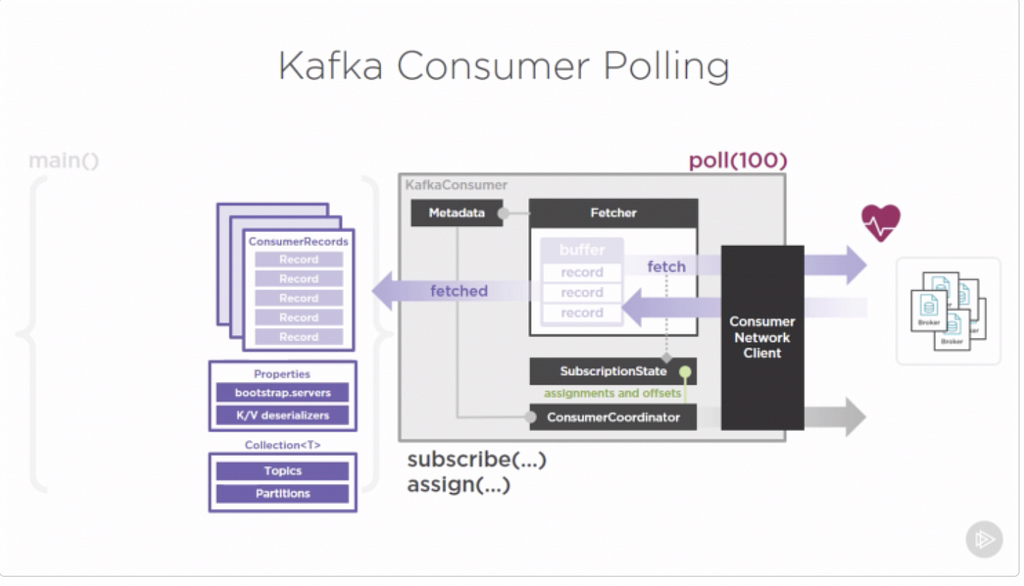

The poll loop is a critical component of the Kafka consumer API. It is responsible for fetching messages from the Kafka brokers and processing them by calling the user-defined message handler. The poll loop follows the following basic steps:

- The consumer sends a fetch request to the Kafka broker, requesting a batch of messages from the assigned partitions.

- The broker responds with a batch of messages, or a timeout message if no messages are available for consumption.

- The consumer processes the messages by calling the user-defined message handler, which can be a simple function or a more complex processing pipeline.

- The consumer commits the offset of the last message it has processed. This step is important to ensure that the consumer does not re-read the same messages in case of failure or restart.

- The consumer returns to step 1 to fetch the next batch of messages.

It is important to note that the poll loop is a blocking operation, meaning that it will block until it receives a batch of messages from the broker or a timeout occurs. Therefore, the poll loop must be run in a separate thread or event loop to avoid blocking the main application thread.

To optimize the performance of the poll loop, you can tune various configuration parameters such as the batch size, fetch size, and poll timeout. By setting these parameters appropriately, you can balance the trade-off between latency and throughput and achieve the desired level of performance for your Kafka consumer application.

Optimize the performance of the poll loop in Kafka Consumer

[Image src: https://dan.iftodi.com/2019/02/apache-kafka-consumer/]

To optimize the performance of the poll loop in a Kafka consumer, you can consider the following tips:

- Increase the batch size:

To increase the batch size in a Kafka consumer, you can use the max.poll.records configuration parameter. This parameter determines the maximum number of records returned in a single call to poll() method. By default, it is set to 500 records. You can increase this value to increase the batch size and reduce the number of network round-trips required to fetch messages.

Here’s an example of how to set max.poll.records to a larger value:

Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("group.id", "test-group");

props.put("enable.auto.commit", "true");

props.put("auto.commit.interval.ms", "1000");

props.put("max.poll.records", "1000"); // Set batch size to 1000 records

KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props, new StringDeserializer(), new StringDeserializer());

consumer.subscribe(Arrays.asList("test-topic"));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));

for (ConsumerRecord<String, String> record : records) {

System.out.println("Received message: " + record.value());

}

}

The batch size determines the maximum number of messages that the consumer will fetch in a single poll request. By increasing the batch size, you can reduce the number of network round-trips required to fetch messages, which can improve the overall throughput of the consumer. However, increasing the batch size too much can also increase the latency of the consumer.

NOTE: Increasing the batch size can also increase the memory usage of the consumer. You should monitor the memory usage of the consumer and ensure that it has enough memory available to handle the larger batch size.

2. Tune the fetch size:

To tune the fetch size of a Kafka consumer, you can use the fetch.max.bytes configuration parameter. This parameter determines the maximum number of bytes that the consumer will fetch in a single request. By default, it is set to 50 MB.

To optimize the fetch size, you can adjust this parameter to a value that balances the trade-off between network latency and memory usage. A larger fetch size can reduce the number of requests required to fetch a set of records, thereby reducing network overhead. However, a larger fetch size also means that more memory will be required to buffer the fetched records.

Here’s an example of how to set fetch.max.bytes to a larger value:

props.put("fetch.max.bytes", "10485760"); // Set fetch size to 10 MBThe fetch size determines the maximum number of bytes that the consumer will fetch in a single poll request. By increasing the fetch size, you can reduce the number of network round-trips required to fetch messages, which can also improve the overall throughput of the consumer. However, increasing the fetch size too much can increase the memory usage of the consumer.

3. Reduce the poll timeout:

The poll timeout determines how long the consumer will wait for messages before returning an empty response. By reducing the poll timeout, you can make the consumer more responsive to new messages, which can reduce the overall latency of the consumer. However, reducing the poll timeout too much can also increase the number of empty responses, which can reduce the overall throughput of the consumer.

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100)); // Set poll timeout to 100 milliseconds

for (ConsumerRecord<String, String> record : records) {

System.out.println("Received message: " + record.value());

}

}4. Use asynchronous processing:

If your message processing logic is CPU-bound or involves I/O operations, you can consider using asynchronous processing techniques such as threading or non-blocking I/O. By processing messages asynchronously, you can reduce the amount of time that the poll loop is blocked, which can improve the responsiveness and throughput of the consumer.

ExecutorService executorService = Executors.newFixedThreadPool(10);

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));

for (ConsumerRecord<String, String> record : records) {

executorService.submit(() -> {

processRecord(record); // Process the record asynchronously

});

}

}

public void processRecord(ConsumerRecord<String, String> record) {

// Process the record

System.out.println("Received message: " + record.value());

}

5. Use parallel processing:

If your message processing logic can be parallelized, you can consider using multiple threads or processes to process messages in parallel. By processing messages in parallel, you can take advantage of multiple CPU cores and increase the overall throughput of the consumer.

Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("group.id", "test-group");

props.put("enable.auto.commit", "true");

props.put("auto.commit.interval.ms", "1000");

KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props, new StringDeserializer(), new StringDeserializer());

consumer.subscribe(Arrays.asList("test-topic"));

int numThreads = 10;

int batchSize = 100;

List<ConsumerRecord<String, String>> records = new ArrayList<>();

while (true) {

ConsumerRecords<String, String> batchRecords = consumer.poll(Duration.ofMillis(100));

for (ConsumerRecord<String, String> record : batchRecords) {

records.add(record);

}

if (records.size() >= batchSize) {

List<List<ConsumerRecord<String, String>>> partitions = Lists.partition(records, batchSize / numThreads);

List<Callable<Void>> tasks = new ArrayList<>();

for (List<ConsumerRecord<String, String>> partition : partitions) {

tasks.add(() -> {

for (ConsumerRecord<String, String> record : partition) {

processRecord(record); // Process the record

}

return null;

});

}

ExecutorService executorService = Executors.newFixedThreadPool(numThreads);

try {

executorService.invokeAll(tasks);

} catch (InterruptedException e) {

// Handle the exception

} finally {

executorService.shutdown();

}

consumer.commitSync();

records.clear();

}

}

public void processRecord(ConsumerRecord<String, String> record) {

// Process the record

System.out.println("Received message: " + record.value());

}

6. Monitor the consumer performance:

It is important to monitor the performance of the consumer regularly to detect any bottlenecks or issues that may affect its performance. You can use tools such as JConsole or Kafka consumer lag monitoring tools to monitor the consumer performance and identify any performance issues.

By applying these tips, you can optimize the performance of the poll loop in a Kafka consumer and achieve the desired level of throughput and latency for your application.

Level Up Coding

Thanks for being a part of our community! Before you go:

- 👏 Clap for the story and follow the author 👉

- 📰 View more content in the Level Up Coding publication

- 💰 Free coding interview course ⇒ View Course

- 🔔 Follow us: Twitter | LinkedIn | Newsletter

🚀👉 Join the Level Up talent collective and find an amazing job

Optimize the performance of the poll loop in Kafka Consumer was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

This content originally appeared on Level Up Coding - Medium and was authored by Kamini Kamal

Kamini Kamal | Sciencx (2023-04-18T20:20:18+00:00) Optimize the performance of the poll loop in Kafka Consumer. Retrieved from https://www.scien.cx/2023/04/18/optimize-the-performance-of-the-poll-loop-in-kafka-consumer/

Please log in to upload a file.

There are no updates yet.

Click the Upload button above to add an update.