This content originally appeared on Level Up Coding - Medium and was authored by Mehran

It is no secret that companies, and even individuals, prefer to store their files in cloud storage such as AWS S3, which is one of the best cloud storage services at our disposal.

S3 provides many features that make it unique and attractive to use, and one of them is multipart upload. This feature lets a service break a big file into smaller chunks and upload them separately; once all parts are uploaded, S3 merges them into a single file. Because the parts are independent, you can also upload many chunks concurrently.
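The chunking arithmetic is easy to get wrong, so here is a minimal sketch of it. It assumes a fixed part size and encodes two S3 limits: every part except the last must be at least 5 MiB, and a single upload may have at most 10,000 parts. The names `partCount`, `minPartSize`, and `maxParts` are illustrative, not taken from the original code.

```go
package main

import "errors"

// minPartSize is S3's minimum size for every part except the last one (5 MiB).
const minPartSize int64 = 5 * 1024 * 1024

// maxParts is the largest number of parts S3 accepts in one multipart upload.
const maxParts int64 = 10000

// partCount returns how many chunks a file of fileSize bytes is split into
// when every chunk except the last holds exactly partSize bytes.
func partCount(fileSize, partSize int64) (int64, error) {
	if partSize < minPartSize {
		return 0, errors.New("part size is below the 5 MiB S3 minimum")
	}
	// Ceiling division: a partial final chunk still needs its own part.
	n := (fileSize + partSize - 1) / partSize
	if n > maxParts {
		return 0, errors.New("more than 10,000 parts needed; increase the part size")
	}
	return n, nil
}
```

For example, a 12 MiB file with 5 MiB parts needs three parts: two of 5 MiB and a final one of 2 MiB.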

In this post, I am going to show you how to achieve this with Go and goroutines!

I do not need any explanation.

If you do not need any further explanation and just want the code, you can go directly to the finished, runnable snippet here:

Could you explain it to me?

To make this happen, you need these packages.

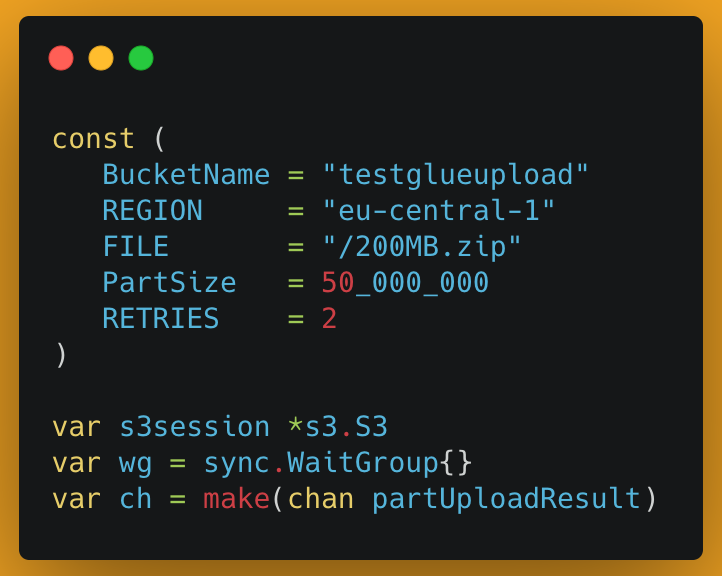

These are the only global variables needed; their names are self-explanatory!

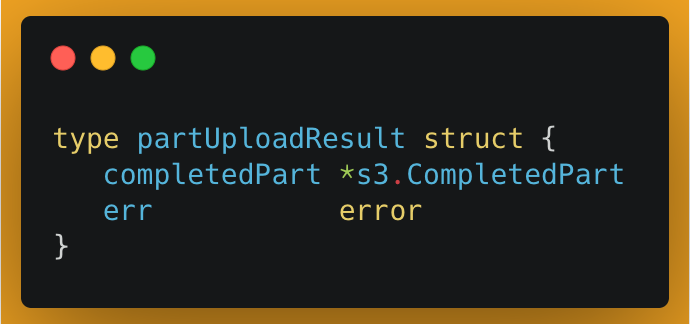

A custom type for the AWS SDK response:

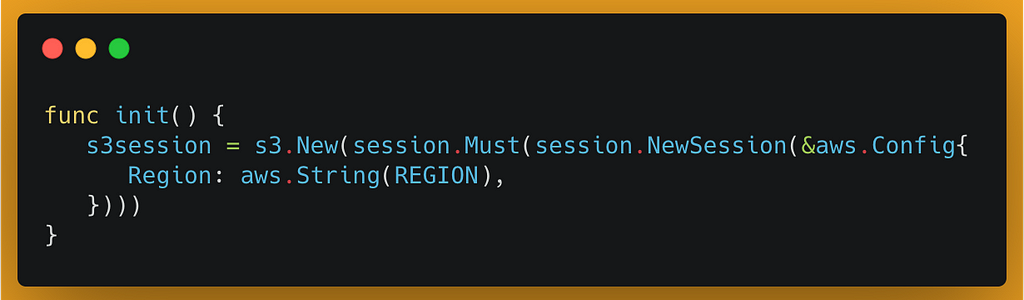
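The type itself only appears in the screenshot, so the following is a hypothetical reconstruction of a result type like `partUploadResult`. In the real code the first field would most likely be an `*s3.CompletedPart` from the AWS SDK for Go; a local `completedPart` struct with the same `ETag` and `PartNumber` fields stands in here so the sketch compiles without the SDK. The `ok` helper is an addition for illustration.

```go
package main

// completedPart mirrors the ETag and PartNumber fields of the SDK's
// s3.CompletedPart, which CompleteMultipartUpload needs for each part.
type completedPart struct {
	ETag       string
	PartNumber int64
}

// partUploadResult is what each upload goroutine sends over the channel:
// either a completed part or the error that stopped it.
type partUploadResult struct {
	completedPart *completedPart
	err           error
}

// ok reports whether the part was uploaded successfully.
func (r partUploadResult) ok() bool {
	return r.err == nil && r.completedPart != nil
}
```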

Once the variables and types are in place, you have to initialize the S3 client. The init function is a good place to initialize a library: Go runs it automatically, exactly once, before main starts.
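A small sketch of Go's init semantics, using a hypothetical `s3Region` setting in place of a real client so it runs without AWS credentials. With the AWS SDK for Go v1, the body of init would typically create a session with `session.NewSession` and pass it to `s3.New`; that is an assumption about the original, which shows this code only as an image.

```go
package main

import "os"

// s3Region is a hypothetical package-level setting standing in for the S3
// client that the article builds in init.
var s3Region string

// initRuns counts how often init has executed.
var initRuns int

// init runs automatically, once, after package-level variables are
// initialized and before main starts.
func init() {
	initRuns++
	s3Region = os.Getenv("AWS_REGION")
	if s3Region == "" {
		s3Region = "us-east-1" // hypothetical fallback for this sketch
	}
}
```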

Main function

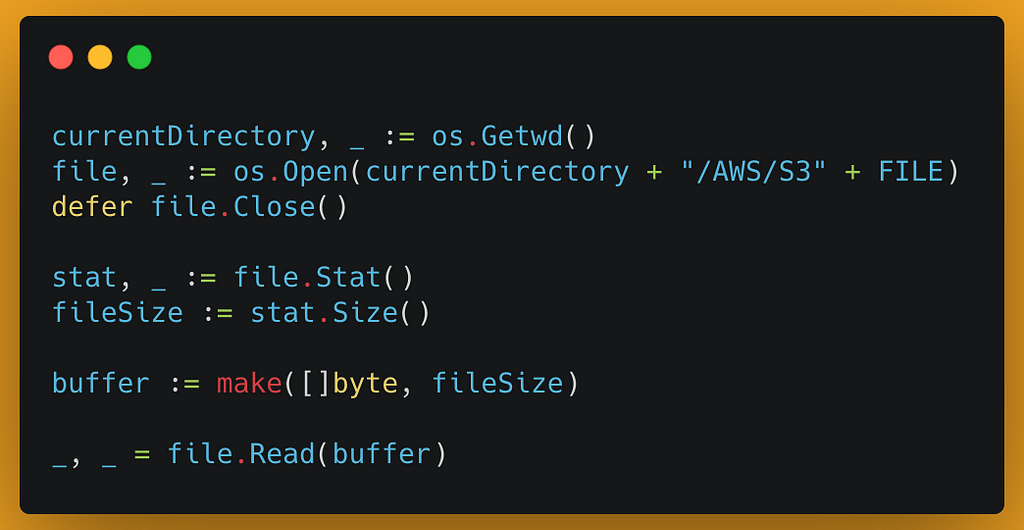

The first step is to read the file from the local machine into a byte slice so it is ready for upload.

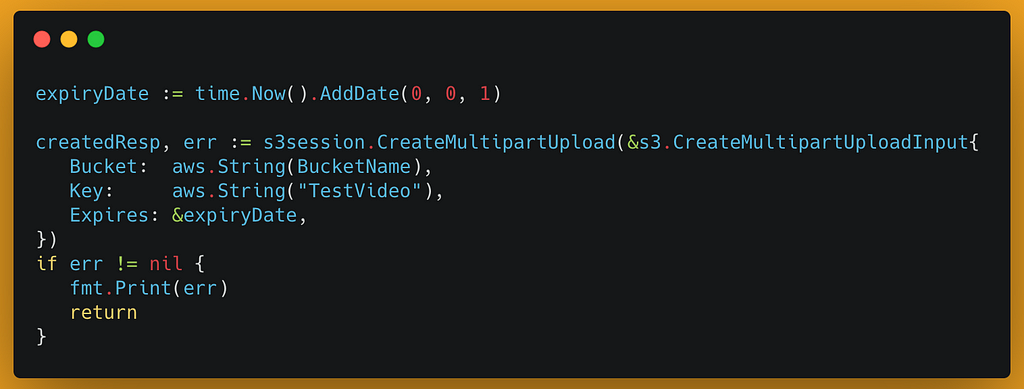
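As a rough sketch of this step and the chunking that follows it, the helpers below read a file with `os.ReadFile` and slice it into parts. `loadFile` and `splitIntoParts` are hypothetical names; the original may compute the chunk boundaries inside the upload loop instead.

```go
package main

import "os"

// loadFile reads the whole file into memory as one byte slice.
func loadFile(path string) ([]byte, error) {
	return os.ReadFile(path)
}

// splitIntoParts cuts data into consecutive chunks of at most partSize bytes.
// The chunks are sub-slices of data, so no bytes are copied.
func splitIntoParts(data []byte, partSize int) [][]byte {
	if partSize <= 0 {
		return nil
	}
	var parts [][]byte
	for start := 0; start < len(data); start += partSize {
		end := start + partSize
		if end > len(data) {
			end = len(data) // the last chunk may be shorter
		}
		parts = append(parts, data[start:end])
	}
	return parts
}
```

Reading the whole file into memory keeps the example simple; for very large files, reading each chunk on demand with `(*os.File).ReadAt` avoids holding the entire file at once.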

It is time to create a CreateMultipartUpload input object that holds the basic settings, such as the bucket and key; you then use it to start the actual upload:

Why do I need expiryDate?

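There is always a chance that one or more upload tasks fail, for example because of a network error. Because the file is never fully uploaded to the S3 bucket, you cannot see it, but you are still charged for the storage used by the parts that did arrive. To avoid paying for such leftovers, the code sets an expiry date, intending the unfinished file to be deleted after that date if the multipart operation stops in the middle. If that date is passed through the Expires field of CreateMultipartUpload, be aware that this field only sets the object's Expires (caching) header; S3 removes the parts of an abandoned upload automatically only through a bucket lifecycle rule with an AbortIncompleteMultipartUpload action, so such a rule is the reliable safeguard.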

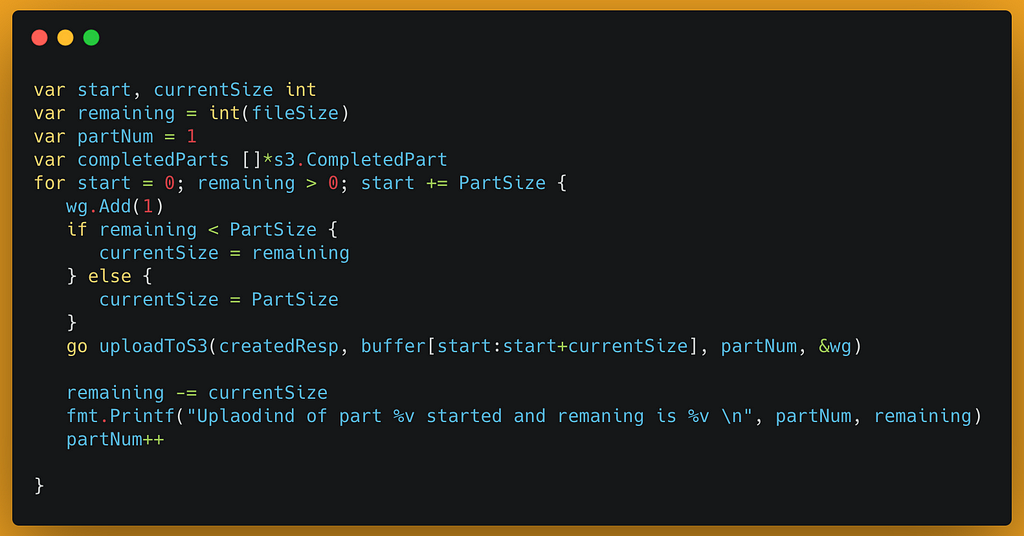

Preparing chunks for upload

To send the chunks, we use a straightforward loop that iterates over the number of chunks and, in each iteration, calls the upload function, which I explain later.

Goroutines are used in this loop so that chunks upload concurrently, which improves upload performance. In each iteration, the loop adds one item to the WaitGroup.

Then a new goroutine is started by putting the go keyword in front of the uploadToS3 call.

The next and most important part of the main function is handling the goroutines and their messages.

As you may know, wg.Wait() waits for all the started goroutines, but it blocks the calling goroutine, so main would never get to receive the channel messages from the uploadToS3 function; if the channel is unbuffered, the senders block as well and the program deadlocks. To avoid this problem, call Wait() inside another goroutine, and do not forget to close the channel once it returns, as below.

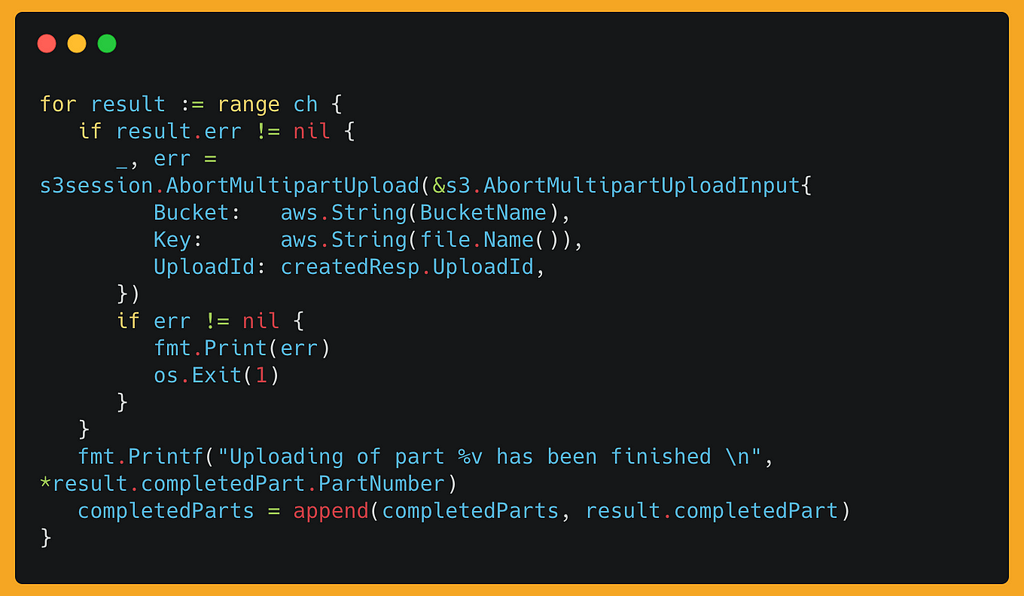
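Here is a minimal, SDK-free sketch of the fan-out pattern described above: one goroutine per chunk, one `wg.Add(1)` per goroutine, and `wg.Wait()` followed by `close` in a separate goroutine. The `uploadFunc` type is a hypothetical stand-in for the SDK's `UploadPart` call, and `completedPart` mirrors the `ETag` and `PartNumber` fields of `s3.CompletedPart`.

```go
package main

import "sync"

// completedPart mirrors the ETag and PartNumber fields of the SDK's s3.CompletedPart.
type completedPart struct {
	ETag       string
	PartNumber int64
}

// partUploadResult carries either a completed part or the error that stopped it.
type partUploadResult struct {
	completedPart *completedPart
	err           error
}

// uploadFunc is a hypothetical stand-in for one UploadPart call; it returns the part's ETag.
type uploadFunc func(partNum int64, data []byte) (string, error)

// uploadConcurrently starts one goroutine per chunk and returns a channel that
// yields one result per chunk and is closed after every goroutine has finished.
func uploadConcurrently(parts [][]byte, upload uploadFunc) <-chan partUploadResult {
	results := make(chan partUploadResult) // unbuffered
	var wg sync.WaitGroup
	for i, data := range parts {
		wg.Add(1) // one WaitGroup entry per goroutine, added before it starts
		go func(partNum int64, data []byte) {
			defer wg.Done()
			etag, err := upload(partNum, data)
			if err != nil {
				results <- partUploadResult{err: err}
				return
			}
			results <- partUploadResult{completedPart: &completedPart{ETag: etag, PartNumber: partNum}}
		}(int64(i+1), data) // S3 part numbers start at 1
	}
	// Calling wg.Wait() here would deadlock: every sender blocks on the
	// unbuffered channel until someone reads it. Wait in another goroutine
	// instead and close the channel once all senders are done.
	go func() {
		wg.Wait()
		close(results)
	}()
	return results
}
```

A caller simply ranges over the returned channel; the loop ends by itself once the closing goroutine runs.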

Now that Wait() is taken care of, you can get back to the channel messages sent by the upload function.

You already know that one goroutine is started for each chunk of the file, so expect to receive more than one message over the channel. Rather than counting messages, a for ... range loop reads each message from the channel as soon as it is ready. The benefit of range is that the loop ends on its own once the channel is closed and drained, so there is no special case to handle when no more messages will arrive.

Each message received from the channel has the partUploadResult type declared at the beginning of this file and tells whether that part's upload was successful. If any part fails, the whole upload process has to be aborted, because that part of the file could not be uploaded and the final file would be corrupted! To do that, check the error object, and if it is not nil, call AbortMultipartUpload.

How to inform AWS that the upload is finished?

AWS has no way to know the size of the file in advance, so it cannot tell on its own when the multipart upload is finished; the service must inform AWS explicitly. There are a few steps to take before doing so!

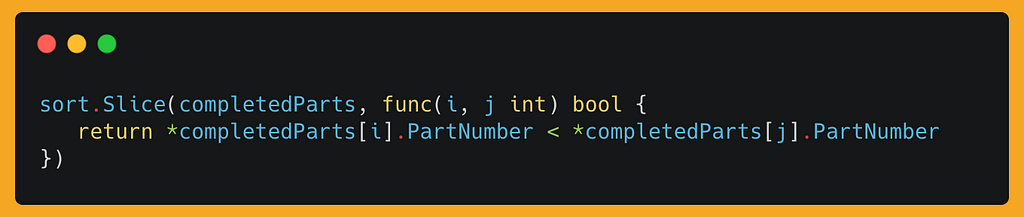

Why order the completedParts array?

Every time an upload response is received from AWS, it is appended to an array called completedParts. Because our code runs many uploadToS3 calls simultaneously in goroutines, there is no guarantee that the responses arrive in order. S3 expects the parts of a completion request in ascending order, so before marking the operation as complete, you have to sort the array by PartNumber.

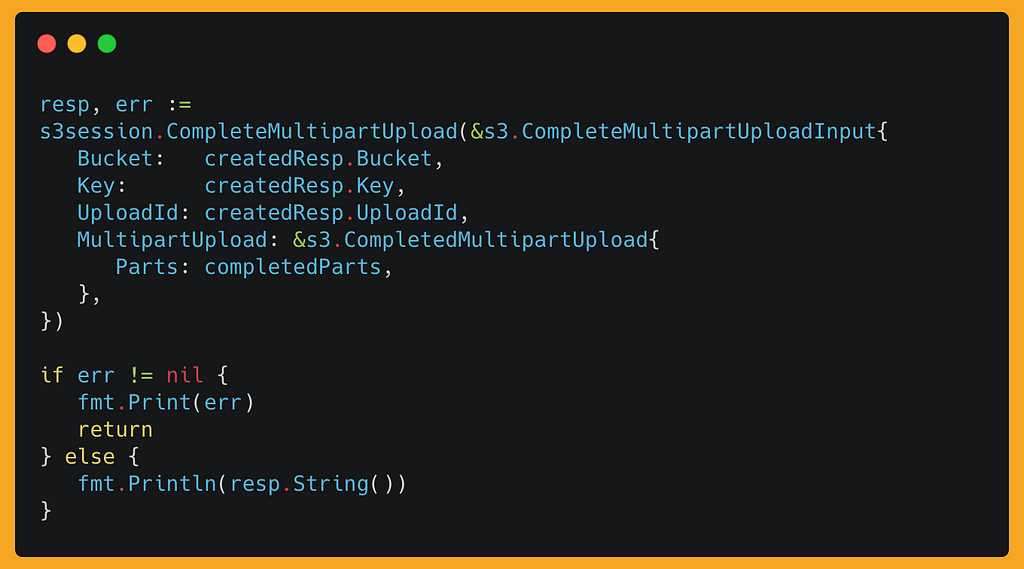
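A sketch of the sorting step with `sort.Slice`, using a local `completedPart` struct as a hypothetical stand-in for `s3.CompletedPart`. With the AWS SDK for Go v1 type, whose `PartNumber` is an `*int64`, the comparison would dereference both pointers: `*parts[i].PartNumber < *parts[j].PartNumber`.

```go
package main

import "sort"

// completedPart is a hypothetical stand-in for s3.CompletedPart.
type completedPart struct {
	ETag       string
	PartNumber int64
}

// sortParts orders parts by ascending PartNumber in place; goroutines may
// have reported them in any order, but S3 expects ascending order on completion.
func sortParts(parts []completedPart) {
	sort.Slice(parts, func(i, j int) bool {
		return parts[i].PartNumber < parts[j].PartNumber
	})
}
```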

Now that everything is in order, it is time to signal AWS that the operation is complete.

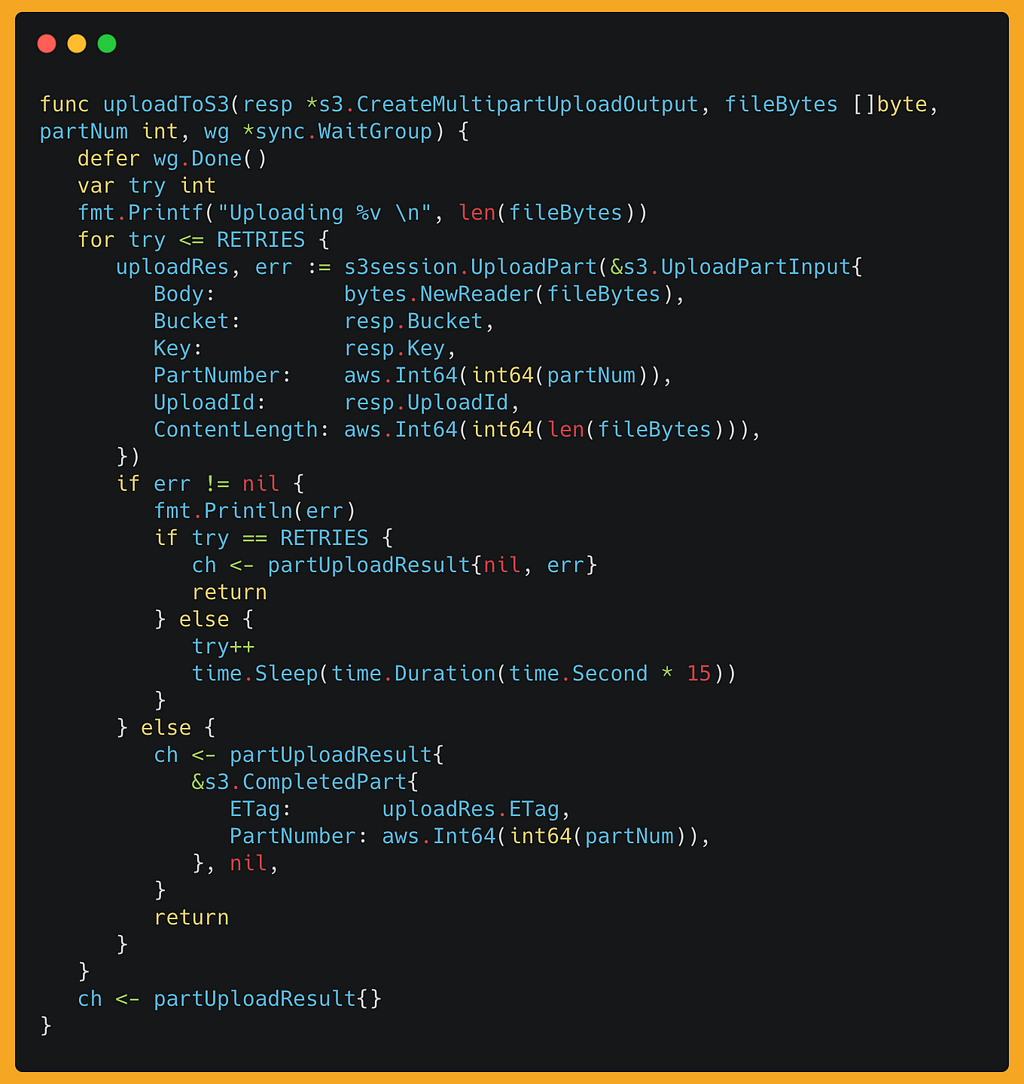

By now, you should have a better understanding of how multipart upload and goroutines work together, so let’s talk about the upload function, uploadToS3.

The upload function accepts four parameters:

- resp: a CreateMultipartUploadOutput object that carries the basic information about the upload, such as the bucket name, key, and upload ID.
- fileByte: a byte slice that holds just one portion of the file, which is uploaded to S3 as a single chunk.
- partNum: an integer with the number of the chunk being uploaded; it is a running number that starts at 1.
- wg: the WaitGroup that tracks the goroutines that have been started.

As explained earlier, each call to the uploadToS3 function runs in a separate goroutine, and each goroutine must report back to the WaitGroup once it is done. This is achieved with the following statement:

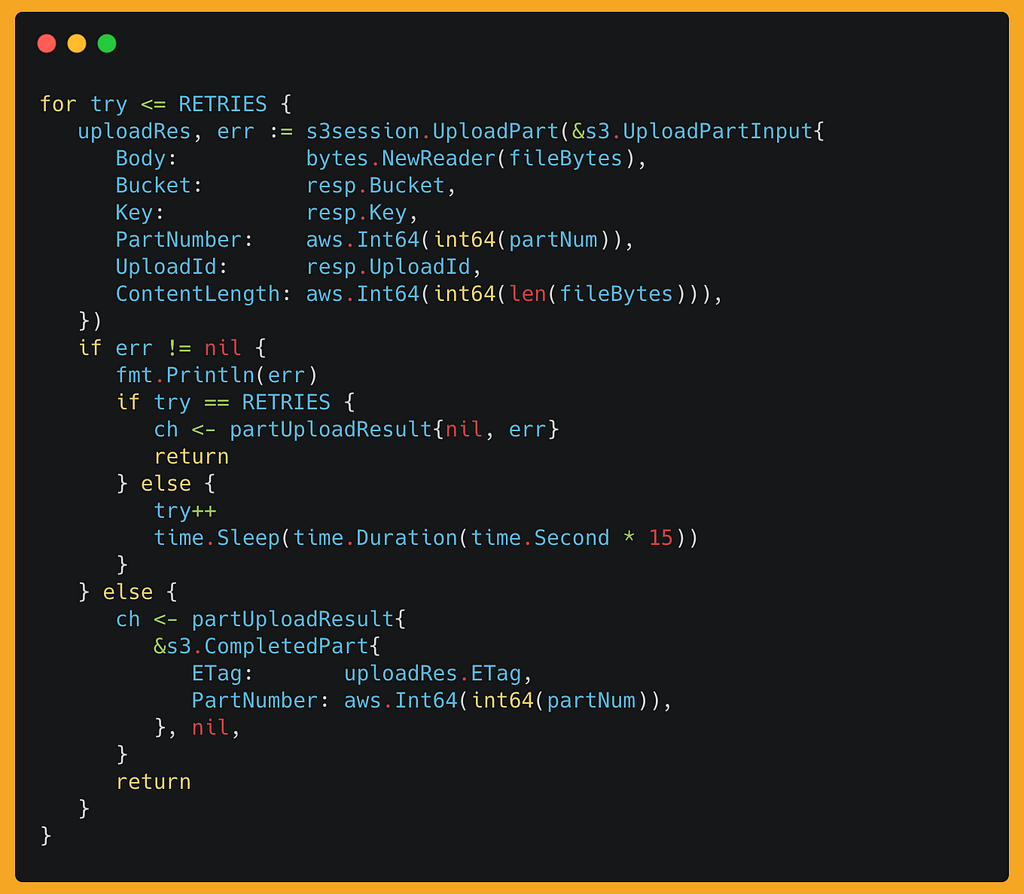

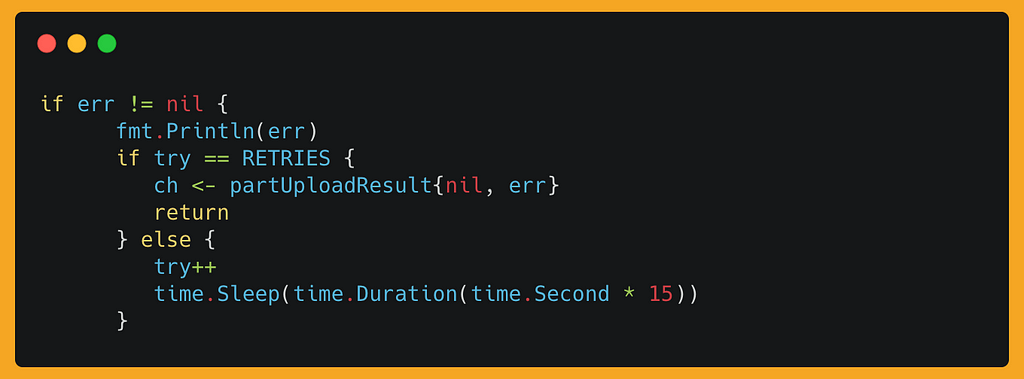

It is always good to have a retry mechanism in your code, especially when dealing with networks and APIs. In this example, a failed part is retried twice; you can easily change that by updating the value of the RETRIES variable.

Calling UploadPart with all the necessary parameters starts the upload of a part. If that call returns an error, the retry mechanism takes care of it. To improve the chance of success, sleep briefly instead of retrying immediately after a failure, since some network problems are temporary and resolve themselves!

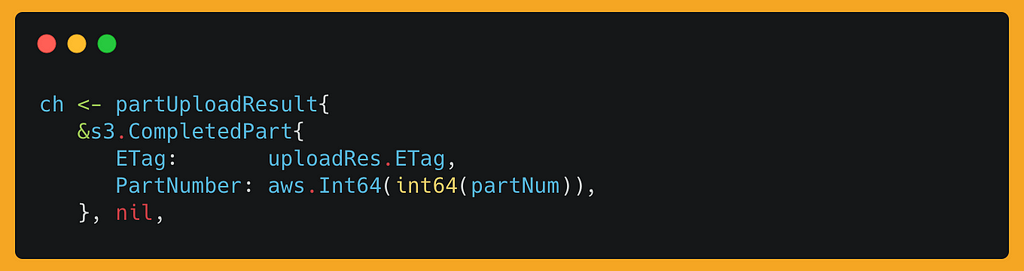

Channels are used to communicate with the outside world. There are three occasions on which the uploadToS3 function puts a message on the channel:

1. After two failed retries.
2. After successfully uploading the chunk.
3. If there is no value set for RETRIES.

I hope you enjoyed this post; please leave a comment below if you have any questions.


S3 Multipart Upload With Goroutines was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Mehran | Sciencx (2023-04-18T23:05:59+00:00) S3 Multipart Upload With Goroutines. Retrieved from https://www.scien.cx/2023/04/18/s3-multipart-upload-with-goroutines/
