This content originally appeared on Bits and Pieces - Medium and was authored by nairihar

How you can balance the socket server, what kind of problems may occur, and how you can solve them.

Server scaling and distribution are among the most exciting topics in back-end development.

There are many ways to scale your app and handle many requests and connections. This article will explain one of the most popular ways of scaling Node.js applications, especially Socket connections.

Imagine you have a Node application which receives 300 requests per second. It works fine, but one day the request count grows 10 or 100 times. Then you will have a big problem. A single Node process isn't meant to handle 30k requests per second (in some cases it can, but only given enough CPU and RAM).

As we know, Node runs your JavaScript on a single thread, so one process doesn't use much of your machine's resources (CPU, RAM). Either way, a single instance will be inefficient.

You have no guarantee that your application won't crash, and you can't update your server without stopping it. If you have only one instance, your application will most likely experience some downtime.

How can we reduce downtime? How can we make effective use of RAM and CPU? How can we update the application without stopping the whole system?

NGINX Load Balancer

One of the solutions is a Load Balancer. In some cases, you can also use Cluster, but we suggest not using Node Cluster because load balancers are more effective and offer more useful features.

In this article, we will use only the Load Balancer. In our case, it will be Nginx. Here is an article which will explain to you how to install Nginx.

So, let's go ahead.

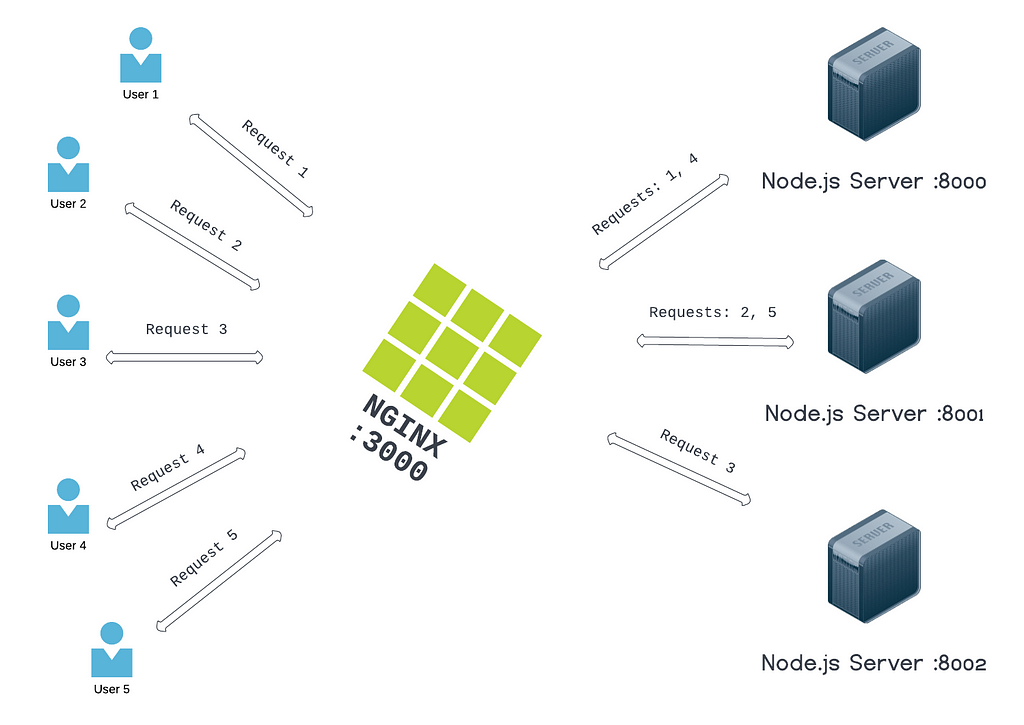

We can run multiple instances of a Node application and use an Nginx server to proxy all requests/connections to a Node server. By default, Nginx will use round robin logic to send requests to different servers in sequence.

As you can see, we have an Nginx server that receives all client requests and forwards them to different Node servers. As I have said, Nginx uses round robin logic by default, which is why the first request goes to server:8000, the second to 8001, the third to 8002, and so on.
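
To make the rotation concrete, here is a minimal sketch of round robin selection in plain JavaScript. It only illustrates the idea and is not how Nginx is implemented; the `upstreams` list and the `createRoundRobin` helper are names made up for this example.

```javascript
// Hypothetical upstream list matching the ports used in this article.
const upstreams = ['127.0.0.1:8000', '127.0.0.1:8001', '127.0.0.1:8002'];

// Returns a picker that cycles through the servers in order.
function createRoundRobin(servers) {
  let next = 0;
  return function pick() {
    const server = servers[next];
    next = (next + 1) % servers.length; // wrap around after the last server
    return server;
  };
}

const pick = createRoundRobin(upstreams);
const firstFour = [pick(), pick(), pick(), pick()];
console.log(firstFour); // the fourth pick wraps back to the first server
```

Note that the picker knows nothing about who sent the request, which matters later when we look at Polling.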

Nginx also has more features (e.g., backup servers: when a server crashes, Nginx automatically moves all requests to the backup server), but in this article, we will only use the proxy.

Here is a basic Express.js server, which we will use with Nginx.

Using an environment variable, we can pass the port number from the terminal, and the Express app will listen on that port.
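
A minimal sketch of such a server is shown here, assuming Node's built-in `http` module in place of Express so that it runs without installing anything. The function names, the response text, and the default port of 8000 are assumptions made for this example.

```javascript
const http = require('http');

// Responds with the process ID so we can tell which instance answered.
function handleRequest(req, res) {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end(`Hello from server with PID ${process.pid}\n`);
}

// Reads the port from the PORT environment variable; 8000 is an assumed default.
function resolvePort(env) {
  const port = Number.parseInt(env.PORT, 10);
  return Number.isInteger(port) ? port : 8000;
}

// Creates the server and starts listening on the resolved port.
function start() {
  const port = resolvePort(process.env);
  return http.createServer(handleRequest).listen(port, () => {
    console.log(`Server ${process.pid} is listening on port ${port}`);
  });
}
```

With a call to `start()` added at the bottom of the file, each instance prints its PID in the console and returns it in the response.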

Let's run and see what happens.

PORT=8000 node server.js

We can see the Server PID in the console and the browser, which will help identify which Server received our call.

Let's run two more servers on ports 8001 and 8002.

PORT=8001 node server.js

PORT=8002 node server.js

Now we have three Node servers on different ports.

Let's run the Nginx server.

Our Nginx server listens on port 3000 and proxies requests to the upstream Node servers.

Restart the Nginx server and go to http://127.0.0.1:3000/

Refresh multiple times, and you will see different PID numbers. We have just created a basic load balancer which forwards requests to several Node servers. This lets you handle more requests and make fuller use of your CPU and RAM.

Let's see how the Socket works and how we can balance the Socket server in this way.

Socket Server Load Balancing

First of all, let's see how the Socket works in browsers.

There are two ways a Socket opens connections and listens for events: Long Polling and WebSocket. These are called transports.

By default, the Socket.IO client starts the connection with Polling and then, if the browser supports WebSocket, switches to the WebSocket transport. We can also pass an optional transports option to specify which transport or transports we want to use for the connection. That way, we can open the connection directly over WebSocket or, conversely, use only Polling.

Let's see what the difference between Polling and WebSocket is.

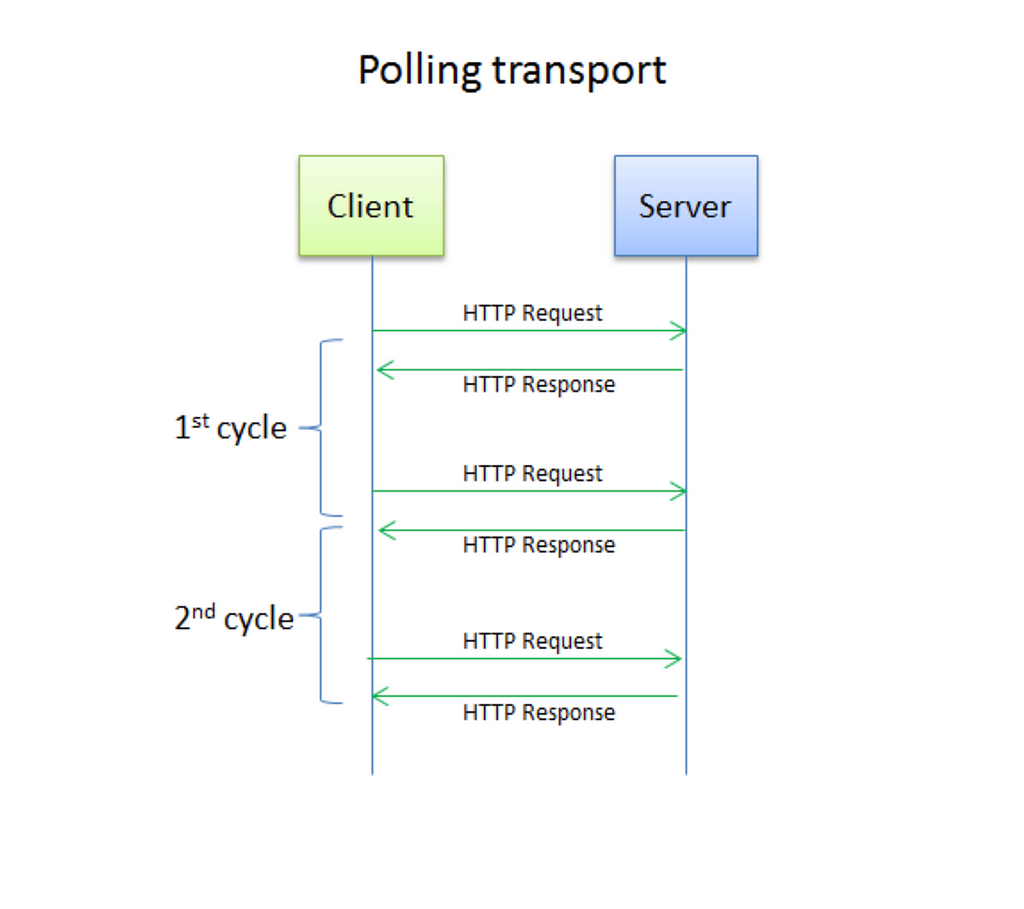

Long Polling

Sockets allow receiving events from the server without requesting anything, which is useful for games and messengers. You don't know when your friend will send you a message, so you can't know when to ask the server for it. With Sockets, the server sends the event to you automatically, and you receive the data.

How can we implement functionality which will use only HTTP requests and provide a layer which will be able to receive some data from the Server without requesting that data? In other words, how do we implement Sockets using only HTTP requests?

Imagine you have a layer which sends requests to the Server, but the Server doesn't respond to you at once — in other words, you wait. When the Server has something that needs to be sent to you, the Server will send that data to you using the same HTTP connection you opened a little while ago.

As soon as you receive the response, your layer will automatically send a new request to the Server and again will wait for another response without checking the response of the previous request.

This way, your application can receive data/events from the Server anytime because you always have an open request waiting for the Server's response.

This is how Polling works. Here is a visualization of its work.

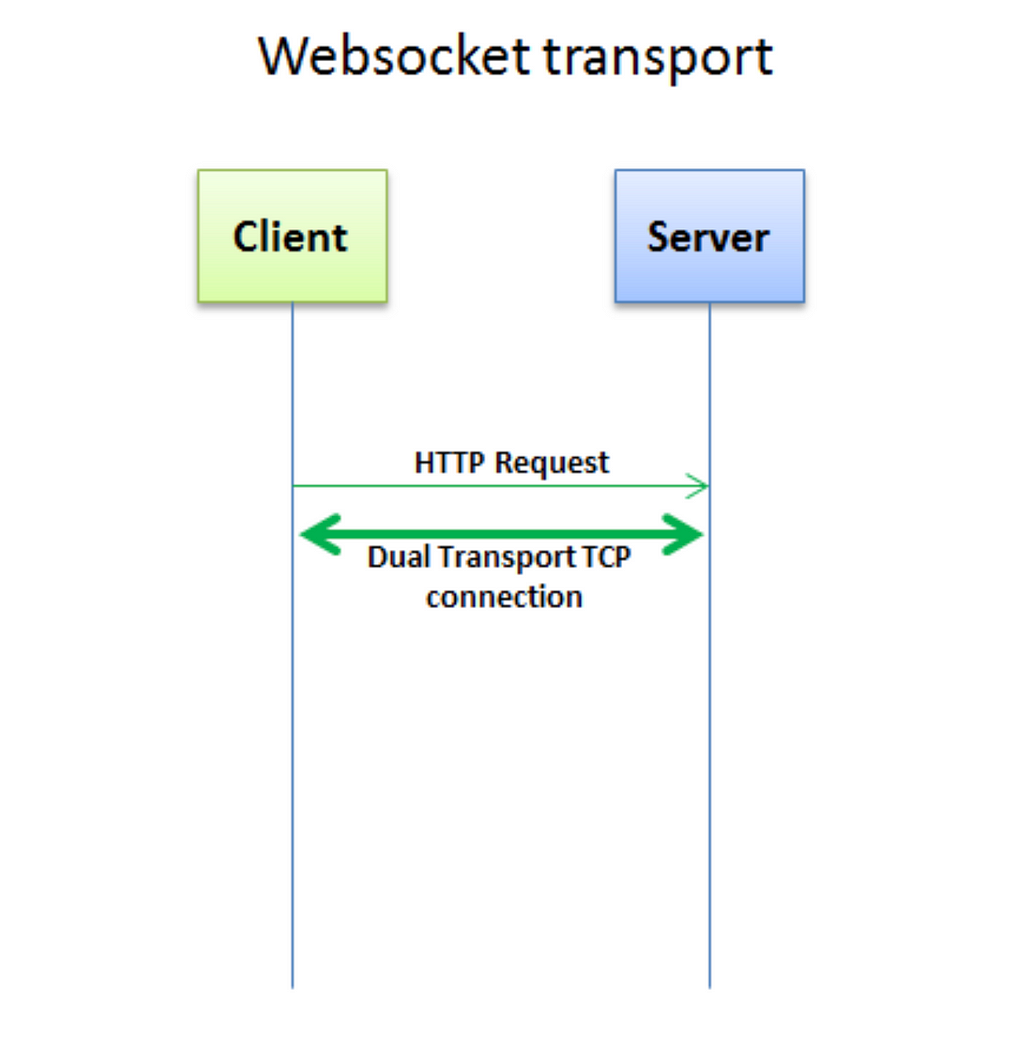
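
The request/hold/respond cycle described above can be sketched without any real HTTP. This is an in-memory stand-in, not a working transport: the `LongPollServer` class and the `startPolling` function are hypothetical names, and a callback plays the role of the open HTTP request.

```javascript
// In-memory stand-in for a long-polling endpoint (no real HTTP involved).
class LongPollServer {
  constructor() {
    this.waiting = null; // the single "open request" currently held by the server
    this.queue = [];     // events that arrived while no request was open
  }

  // The client "sends a request"; the server answers only when it has data.
  poll(respond) {
    if (this.queue.length > 0) {
      respond(this.queue.shift());
    } else {
      this.waiting = respond;
    }
  }

  // The server has something to send: answer the held request, or queue the event.
  push(event) {
    if (this.waiting) {
      const respond = this.waiting;
      this.waiting = null;
      respond(event);
    } else {
      this.queue.push(event);
    }
  }
}

// Client side: as soon as a response arrives, open the next request.
function startPolling(server, onEvent) {
  server.poll(function handle(event) {
    onEvent(event);
    server.poll(handle);
  });
}

const server = new LongPollServer();
const received = [];
startPolling(server, (event) => received.push(event));
server.push('hi');
server.push('hello world');
console.log(received);
```

Because the client re-polls inside the response handler, there is always one request waiting on the server, which is what lets the server deliver events at any time.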

WebSocket

WebSocket is a protocol that opens a single TCP connection and keeps it open for a long time. Here is another visualization image which shows how WebSocket works.

As I said, by default the client connects to the Socket server using the Polling transport (with XHR requests). Then the server offers to upgrade the transport to WebSocket. If the browser doesn't support WebSocket, as is the case for some old browsers, the application keeps using Polling and doesn't upgrade the transport layer.

Let's create a basic socket server and see how it works in Chrome's Inspect Network.

Run Node.js server on port 3000 and open index.html in Chrome.

As you can see, we're connecting to the Socket using polling, so it makes HTTP requests under the hood. If we open XHR on the Inspect Network page, we will see how it sends requests to the Server.

Open the Network tab from the browser's Inspect mode and look at the last XHR request in the network. It's always pending, with no response. After some time, that request ends and a new one is sent, because a request that the server leaves unanswered for too long would fail with a timeout error. So, if there is no response from the server, the client drops the request and sends a new one.

Also, pay attention to the server's response to the client's first request. The response data looks like this:

96:0{"sid":"EHCmtLmTsm_H8u3bAAAC","upgrades":["websocket"],"pingInterval":25000,"pingTimeout":5000}2:40

As I have said, the Server sends options to the Client to upgrade transport from "polling" to "websocket". However, as we have only "polling" transport in the options, it will not switch.
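
The handshake response shown above appears to use a simple framing: a character count, a colon, and then a packet of that length, repeated. The sketch below decodes it under that assumption, based only on this one sample. `decodePayload` is a name made up for this example, and treating the first character of each packet as a packet-type digit is my reading of the protocol rather than something the sample proves.

```javascript
// Splits a "<length>:<packet>" payload into its packets.
function decodePayload(payload) {
  const packets = [];
  let i = 0;
  while (i < payload.length) {
    const colon = payload.indexOf(':', i);
    if (colon === -1) throw new Error('Missing length prefix');
    const length = Number(payload.slice(i, colon));
    if (!Number.isInteger(length) || length < 0) {
      throw new Error('Invalid length prefix');
    }
    packets.push(payload.slice(colon + 1, colon + 1 + length));
    i = colon + 1 + length; // jump to the start of the next length prefix
  }
  return packets;
}

const sample =
  '96:0{"sid":"EHCmtLmTsm_H8u3bAAAC","upgrades":["websocket"],' +
  '"pingInterval":25000,"pingTimeout":5000}2:40';

const [openPacket, messagePacket] = decodePayload(sample);
// Drop the leading packet-type digit before parsing the JSON body.
const handshake = JSON.parse(openPacket.slice(1));
console.log(handshake.sid, handshake.upgrades, messagePacket);
```

The `upgrades` array is where the server advertises the transports the client may switch to, and `sid` is the session ID that every later polling request has to carry.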

Try to replace the connection line with this:

const socket = io('http://0.0.0.0:3000');

Open the console and choose "All" from the Inspect Network page.

When you refresh the page, you will notice that, after some XHR requests, the client upgrades to "websocket". Pay attention to the type of each item in the Network console. As you can see, "polling" items are plain XHR, and the WebSocket item has the type "websocket". When you click on it, you will see Frames. You will receive a new frame whenever the server emits a new event. There are also some events (just numbers, e.g., 2 and 3) that the client and server send to each other to keep the connection alive. Otherwise, we would get a timeout error.

Now you have basic knowledge of how Socket works. But what problems can we have when we try to balance a socket server using Nginx, as in the previous example?

Problems

There are two significant problems.

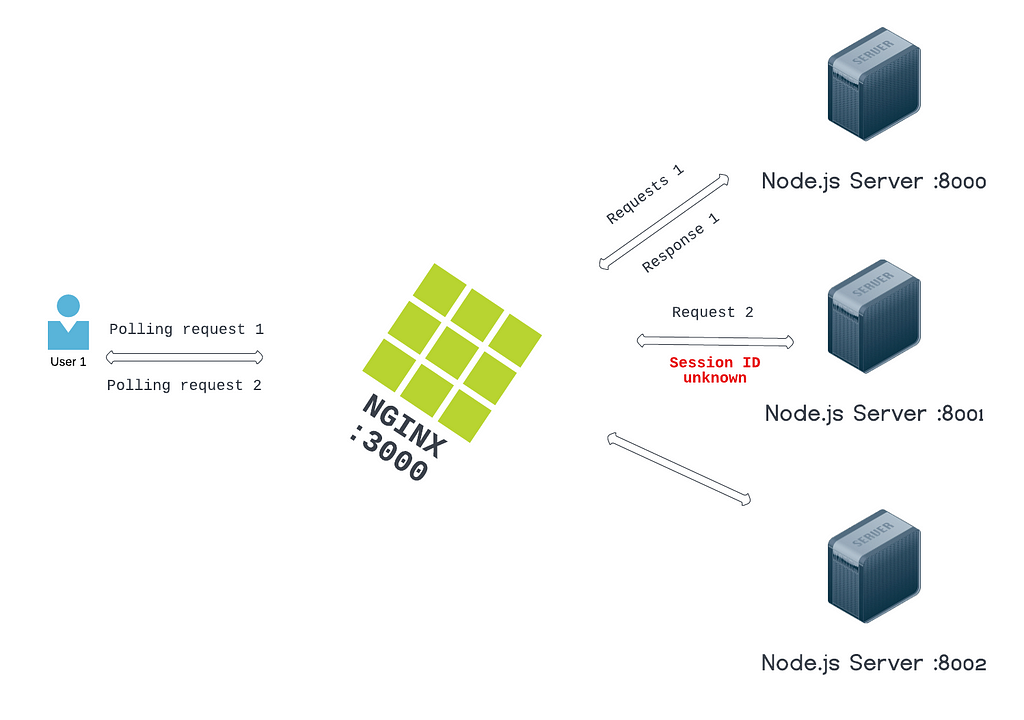

First, there is an issue when we have an Nginx load balancer with multiple Node servers, and the Client uses Polling.

As you may remember, Nginx uses round-robin logic to balance requests, so every request the Client sends to Nginx will be forwarded to a Node server.

Imagine you have three Node servers and an Nginx load balancer. A user asks to connect to the server using Polling (XHR requests), Nginx routes that request to Node:8000, and that server registers the client's Session ID so that it knows this client is connected to it. The second time, when the user performs any action, the client sends a new request, which Nginx forwards to Node:8001.

What should the second server do? It receives an event from a client that isn't connected to it. The server will return an error with a "Session ID unknown" message.
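
The failure can be reproduced in a few lines. This is a toy simulation, not real Socket.IO or Nginx code: the `NodeServer` class, the `nextServer` helper, and the session ID `'abc'` are all invented for the example.

```javascript
// Each hypothetical Node server only knows the sessions it created itself.
class NodeServer {
  constructor(port) {
    this.port = port;
    this.sessions = new Set();
  }

  // First request: the server registers the client's session ID.
  handshake(sid) {
    this.sessions.add(sid);
    return { ok: true, port: this.port };
  }

  // Later polling requests must reach the server that owns the session.
  handlePoll(sid) {
    if (!this.sessions.has(sid)) {
      return { ok: false, port: this.port, error: 'Session ID unknown' };
    }
    return { ok: true, port: this.port };
  }
}

const servers = [8000, 8001, 8002].map((port) => new NodeServer(port));

// Round robin: every request goes to the next server, whoever sent it.
let turn = 0;
const nextServer = () => servers[turn++ % servers.length];

const first = nextServer().handshake('abc');   // handled by the first server
const second = nextServer().handlePoll('abc'); // handled by the second server
console.log(first, second);
```

The second request fails because the session lives only in the memory of the server that handled the handshake.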

Balancing becomes a problem for clients that use Polling. With WebSocket, you will not get an error like this, because you connect once and then send and receive frames over that one connection.

Where should this problem be fixed: on the Client or Server?

Definitely in the Server! More specifically, in Nginx.

We should change the method Nginx uses to balance the load. Another method we can use is ip_hash.

Every Client has an IP address, so Nginx creates a hash using the IP address and forwards the client request to a Node server, which means that every request from the same IP address will always be forwarded to the same Server.
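
Here is a rough sketch of the idea in JavaScript. The hash below is a toy function chosen for readability and is not the one Nginx uses. Keying on only the first three octets of an IPv4 address follows my understanding of how the Nginx documentation describes ip_hash. `pickByIp` and `upstreamPorts` are hypothetical names.

```javascript
const upstreamPorts = [8000, 8001, 8002];

// Toy hash over the first three IPv4 octets; not Nginx's actual hash function.
function pickByIp(ip, servers) {
  const key = ip.split('.').slice(0, 3);
  const hash = key.reduce(
    (acc, octet) => (acc * 31 + Number(octet)) % 65521,
    17
  );
  return servers[hash % servers.length];
}

// The same address always maps to the same server.
const a = pickByIp('203.0.113.7', upstreamPorts);
const b = pickByIp('203.0.113.7', upstreamPorts);
// An address sharing the first three octets maps there as well.
const c = pickByIp('203.0.113.200', upstreamPorts);
console.log(a === b, a === c);
```

Since the choice depends only on the address and not on a rotating counter, every polling request from one client reaches the server that holds its session.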

This is the minimal solution to that problem; there are other possibilities. If you go deeper, you will find that this solution sometimes falls short, for example when many clients share one public IP address and therefore all land on the same server. You can research other balancing methods in Nginx/Nginx Plus or use other load balancers (e.g., HAProxy).

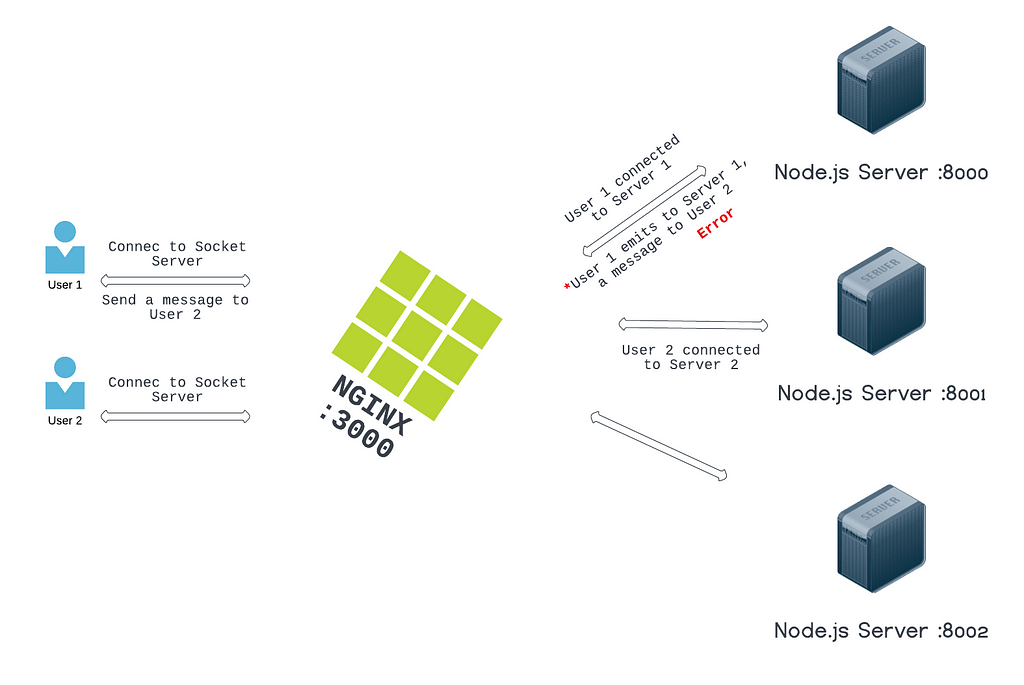

Moving on to the second problem: each user is connected to only one server.

Imagine a situation where you are connected to Node:8000, a friend of yours is connected to Node:8001, and you want to send them a message. You send it by Socket, and the Server receives an event and wants to send your message to another user (your friend). I think you already guessed what problem we can have: the Server wants to send data to the user who is not connected to it but is connected to another server in the system.

There is only one solution, which can be implemented in many ways.

Create an internal communication layer for servers.

It means each Server will be able to send requests to other servers.

This way, Node:8000 sends a request to Node:8001 and Node:8002, and each of them checks whether user2 is connected to it. If user2 is connected, that server emits the data provided by Node:8000.

Let's discuss one of the most popular technologies, which provides a communication layer that we can use in our Node servers.

Redis

As is written in the official documentation:

Redis is an in-memory data structure store used as a database

As such, it lets you create key-value pairs in memory. Redis also provides some other valuable features. Let's talk about one of the popular ones.

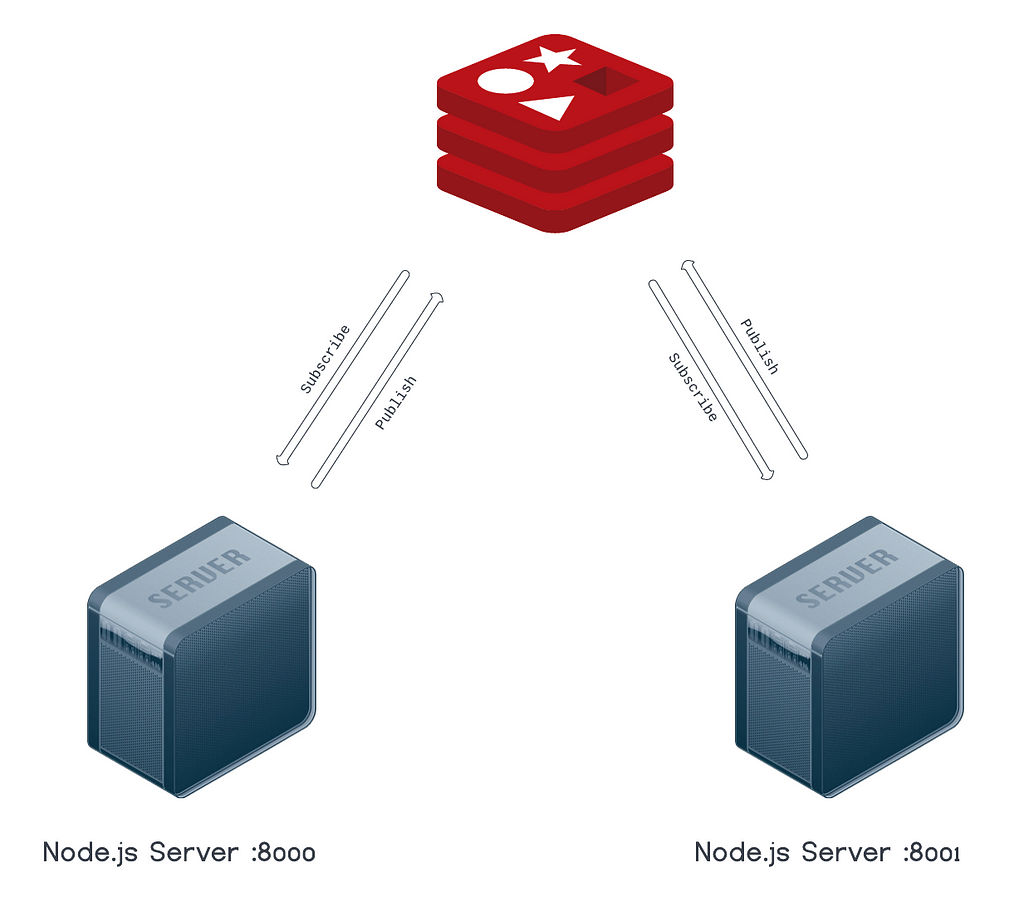

PUB/SUB

This messaging system gives us a Subscriber and a Publisher.

Using Subscriber in a Redis client, you can subscribe to a channel and listen for messages. With Publisher, you can send messages to a specific channel which the Subscriber will receive.

It's like Node.js EventEmitter. But EventEmitter won't help when one Node application needs to send data to another, because it only works inside a single process.
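
To show the shape of the pattern, here is the same subscribe/publish flow built on EventEmitter itself. This is an in-process stand-in and does not talk to Redis at all; the `subscribe` and `publish` helpers are names made up for this sketch.

```javascript
const { EventEmitter } = require('events');

// In-process stand-in for a message broker: channel names are event names.
const bus = new EventEmitter();
const output = [];

// "Subscriber": listen for messages on a channel.
function subscribe(channel) {
  bus.on(channel, (message) => {
    output.push(`Message "${message}" on channel "${channel}" arrived!`);
  });
}

// "Publisher": send a message to everyone listening on that channel.
function publish(channel, message) {
  return bus.emit(channel, message);
}

subscribe('my channel');
publish('my channel', 'hi');
publish('my channel', 'hello world');
output.forEach((line) => console.log(line));
```

Both sides here share one `bus` object in one process. Redis PUB/SUB keeps the same subscribe and publish roles but moves the bus into a separate server that any number of Node processes can connect to.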

Let's see how it works with Node.js.

To run the subscriber and publisher scripts, we need to install the redis module. Also, don't forget to install Redis itself on your local machine.

npm i redis --save

Let's run and see the results.

node subscriber.js

and

node publisher.js

In the subscriber window, you will see this output:

Message "hi" on channel "my channel" arrived!

Message "hello world" on channel "my channel" arrived!

Congratulations! You have just established communication between different Node applications.

This way, we can have subscribers and publishers in one application to receive and send data.

You can read more about Redis PUB/SUB in the official documentation. You can also check the node-redis-pubsub module, which provides a simple way to use Redis PUB/SUB.

💡 Using an OSS tool such as Bit, this part becomes much easier. You can encapsulate Redis functionality into a module that can be stored in your Bit repository, independently tested, documented, and versioned, and easily integrated into multiple projects.

One approach would be to define a Redis client configuration object that takes host, port, and auth credentials as input and creates a client instance with it, and export it as a module function with Bit. Then, to use it in other projects, you can simply install it with npm i @bit/your-username/your-redis-service, import it, and pass it the project specific config.

Connecting All These Pieces

Finally, we have come to one of the most exciting parts.

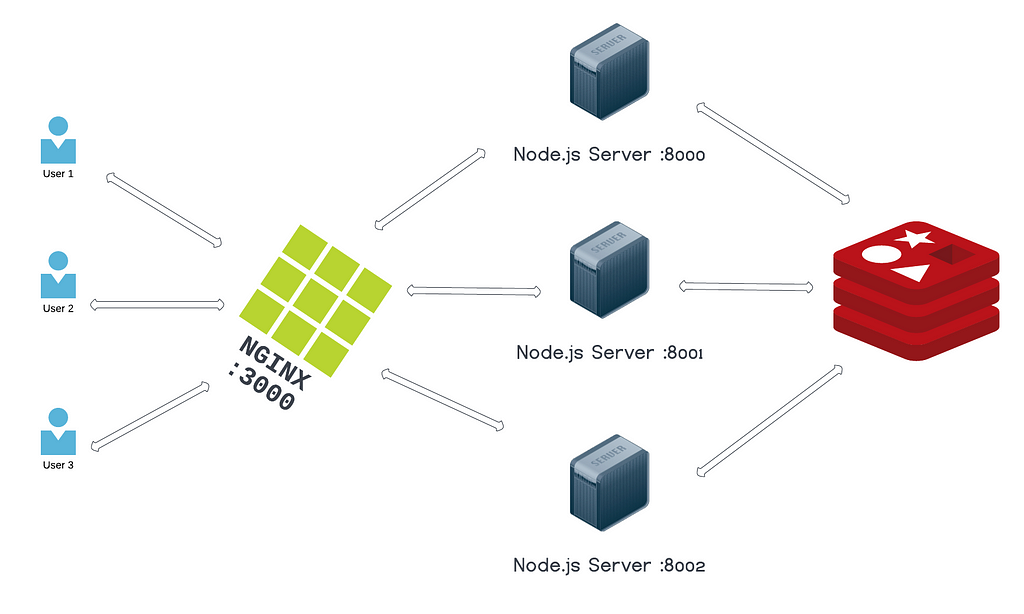

For handling lots of connections, we run multiple instances of the Node.js application and then balance the load to those servers using Nginx.

Using Redis PUB/SUB, we establish communication between the Node.js servers. Whenever a server wants to send data to a client that is not connected to it, the server publishes the data. Every server then receives it and checks whether that user is connected to it. Finally, the server that holds the connection sends the data to the client.
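
The routing logic can be sketched as follows, with an EventEmitter standing in for Redis so the example runs in one process. In a real deployment, each server would be a separate process with its own Redis subscriber and publisher. The `SocketServer` class, the channel name, and the user IDs are all hypothetical, and an array of delivered messages stands in for a real socket.

```javascript
const { EventEmitter } = require('events');

// Stand-in for Redis PUB/SUB: one shared in-process bus (an assumption of this sketch).
const broker = new EventEmitter();
const CHANNEL = 'socket-events'; // hypothetical channel name

class SocketServer {
  constructor(name) {
    this.name = name;
    this.clients = new Map(); // userId -> delivered messages (stands in for a socket)
    // Every server subscribes to the same channel.
    broker.on(CHANNEL, (event) => this.deliverIfLocal(event));
  }

  connect(userId) {
    this.clients.set(userId, []);
  }

  // Delivers the event only if the target user is connected to this server.
  deliverIfLocal({ to, data }) {
    const inbox = this.clients.get(to);
    if (inbox) inbox.push(data);
    return Boolean(inbox);
  }

  // Deliver directly when the user is local; otherwise publish for the other servers.
  send(to, data) {
    if (!this.deliverIfLocal({ to, data })) {
      broker.emit(CHANNEL, { to, data });
    }
  }
}

const node8000 = new SocketServer('Node:8000');
const node8001 = new SocketServer('Node:8001');
node8000.connect('you');
node8001.connect('friend');

node8000.send('friend', 'hello');
console.log(node8001.clients.get('friend'));
```

Every subscriber sees the published event, but only the server that actually holds the connection acts on it, so no server needs to know where any user lives.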

Here is the big picture of how the Back-end architecture is organized:

Let's see how we can implement this in Node.js. You won't need to create all this from scratch. There already are packages which do some of that work.

These two packages work for socket.io.

One interesting thing the socket.io-emitter dependency provides is emitting events to users from outside the socket server. If you can publish valid data to Redis, which the servers will receive, then one of the servers can send the socket event to the client. This means you don't need to run a Socket server to send an event to a user. You can run a custom server that connects to the same Redis and, using PUBLISH, sends socket events to the clients.

Also, there is another package named SocketCluster. SocketCluster is more advanced — it uses a cluster with brokers and workers. Brokers help us with the Redis PUB/SUB part; workers are our Node.js applications.

There is also Pusher, which helps to build big scalable apps. It provides an API to its hosted PUB/SUB messaging system and has SDKs for several platforms (e.g., Android, iOS, Web). However, note that this is a paid service.

Conclusion

In this article, we explained how you can balance the socket server, what kind of problems may occur, and how you can solve them.

We used Nginx to balance server load to multiple nodes. There are many load balancers, but we recommend Nginx/Nginx Plus or HAProxy. Also, we saw how the Socket works and the difference between polling and WebSocket transport layers. Finally, we saw how we can establish communication between Node.js instances and use all of them together.

As a result, we have a load balancer which forwards requests to multiple Node.js servers. Note that you must configure the load balancer logic to avoid any problems. We also have a communication layer for the Node.js Server. We used Redis PUB/SUB in our case, but you can use other communication tools.

I have worked with Socket.io (with Redis) and SocketCluster, and I recommend them for both small and big projects. With these strategies, it is possible to run a game on SocketCluster which can handle 30k socket connections. The SocketCluster library is a little bit old, and its community isn't so big, but that likely won't pose any issues.

Many tools will help you balance your load or distribute your system. We advise that you also learn about Docker and Kubernetes. Start researching about them ASAP!

Thank you, feel free to ask any questions or tweet me @nairihar.

How to Scale Node.js Socket Server with Nginx and Redis was originally published in Bits and Pieces on Medium, where people are continuing the conversation by highlighting and responding to this story.

nairihar | Sciencx (2023-04-26T07:07:46+00:00) How to Scale Node.js Socket Server with Nginx and Redis. Retrieved from https://www.scien.cx/2023/04/26/how-to-scale-node-js-socket-server-with-nginx-and-redis/
