This content originally appeared on Level Up Coding - Medium and was authored by Vivien Chua

This is the fourth article in a series that will guide you through the process of creating and fine-tuning a GPT-3.5-Turbo chatbot on an AWS EC2 instance.

OpenAI released the GPT-3.5-Turbo model through its API in March 2023; it is the same model that powers the popular ChatGPT product. At launch, OpenAI priced it at one-tenth the cost of its earlier GPT-3.5 models, which makes it a cost-effective choice for chat applications. Unlike earlier completion models, which processed unstructured text as a single sequence of tokens, GPT-3.5-Turbo consumes a sequence of messages in a format called Chat Markup Language (“ChatML”).

In this article, we will explore how to build a customized chatbot using GPT-3.5-Turbo on AWS. By following the steps outlined in this article, you can create a fully functional chatbot that can understand natural language and engage in meaningful conversations.

Before we begin, you will need to deploy Python on AWS, sign up for an OpenAI API key and install the openai and gradio Python packages.

Import OpenAI and Gradio

We will pair the OpenAI model with Gradio, a Python library for building web-based user interfaces for machine learning demos and applications.

For this step, you need to replace “sk-xxxx” with your own OpenAI API key.

import openai
import gradio as gr

openai.api_key = "sk-xxxx"
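Hardcoding the key is convenient for a first test, but on a shared EC2 instance it may be safer to read it from the environment. The sketch below is one way to do that; the helper name `load_api_key` and the choice of the `OPENAI_API_KEY` variable are assumptions for illustration, not part of the original tutorial.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    The helper and its default variable name are illustrative assumptions.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return key


# Usage (assumes the key was exported in the shell beforehand):
# openai.api_key = load_api_key()
```

With this in place, the key never needs to appear in the script itself.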

Use Messages for Chat Completions

Before calling the GPT-3.5-Turbo API, we need to set the messages parameter. This is an array of message objects, each with a role (“system”, “user” or “assistant”) and its corresponding content.

In this example, I created a chatbot instructed to answer only questions related to myself (“Vivien Chua”). This makes a suitable test scenario because the model has no knowledge of me, so any correct answer must come from the information I supply.

Note that the total tokens (both input and output) must fit within the model’s maximum limit, which is 4096 tokens for gpt-3.5-turbo.

messages = [
    {"role": "system", "content": "This is a chatbot that only answers questions related to Vivien Chua. For questions not related to Vivien Chua, reply with Sorry, I do not know."},
    {"role": "user", "content": "Who is Vivien Chua?"},
    {"role": "assistant", "content": "Vivien Chua is Chief Investment Officer and co-founder at Meadowfield Capital. Her professional experience includes private equity, data analytics, and risk management. Vivien started her career as an Assistant Professor at the National University of Singapore, after returning from a Singapore National Research Foundation Ph.D. scholarship. Vivien graduated with a Ph.D. and M.S. in Engineering from Stanford University, and a B.S. in Engineering from Georgia Institute of Technology. She was awarded MIT Technology Review Innovators Under 35 Asia Pacific, SG 100 Women In Tech and Insurance Business Elite Women."}
]
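Because every turn is added to the conversation, a long chat can eventually exceed the token limit noted above. The sketch below shows one possible way to stay within budget: it keeps the first few seed messages (which carry the custom facts) and drops the oldest later turns. The function names, the four-characters-per-token estimate and the per-message overhead are all rough assumptions; an exact count would need a real tokenizer such as OpenAI's tiktoken package.

```python
CHARS_PER_TOKEN = 4       # rough rule of thumb for English text, not exact
PER_MESSAGE_OVERHEAD = 4  # assumed allowance for role and formatting tokens


def estimate_tokens(messages):
    """Roughly estimate how many tokens a list of chat messages uses."""
    total = 0
    for message in messages:
        total += len(message["content"]) // CHARS_PER_TOKEN + PER_MESSAGE_OVERHEAD
    return total


def trim_messages(messages, pinned=3, max_tokens=4096, reserve_for_reply=500):
    """Keep the first `pinned` messages and drop the oldest later ones
    until the estimated size fits within the budget."""
    budget = max_tokens - reserve_for_reply
    head = list(messages[:pinned])
    tail = list(messages[pinned:])
    while tail and estimate_tokens(head + tail) > budget:
        tail.pop(0)  # discard the oldest unpinned message
    return head + tail
```

Calling `trim_messages(messages)` before each API request would return a shortened copy and leave the original list untouched. Setting `pinned=3` matches the three seed messages in this example.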

Call OpenAI API

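An example API call for GPT-3.5-Turbo is shown below. It uses the openai.ChatCompletion interface, which is available in versions of the openai Python package before 1.0.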

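def generate_response(prompt):
    # Nothing to send if the prompt is empty.
    if not prompt:
        return ""
    # Record the user's turn, then send the whole conversation to the model.
    messages.append({"role": "user", "content": prompt})
    chat = openai.ChatCompletion.create(
        model="gpt-3.5-turbo", messages=messages
    )
    # Extract the assistant's reply and store it so later turns have context.
    reply = chat.choices[0].message.content
    messages.append({"role": "assistant", "content": reply})
    return reply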

Here we defined a function generate_response() that takes a prompt as an input, generates a response using the GPT-3.5-Turbo model from OpenAI, and returns the response.

The function first checks whether a prompt is provided. If so, the prompt is appended to messages with the role "user", and openai.ChatCompletion.create() is called with the model and messages parameters to generate a response from the full conversation. The assistant’s reply is extracted with chat.choices[0].message.content and appended to messages with the role "assistant", so that later calls include it as context.
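The function above keeps the conversation in a module-level list, which means every visitor to the app shares one history. As a possible alternative, the sketch below passes the history in and returns an updated copy. The name `generate_response_stateless` and the `complete` callable are hypothetical; `complete` stands in for the real API call so that the logic can be exercised without a network connection.

```python
def generate_response_stateless(prompt, messages, complete):
    """Return (reply, updated_messages) without mutating the input list.

    `complete` is a hypothetical callable that takes a list of messages and
    returns the assistant's reply text. In the article, that job is done by
    openai.ChatCompletion.create(...).choices[0].message.content.
    """
    if not prompt:
        return "", list(messages)  # nothing to send for an empty prompt
    updated = list(messages) + [{"role": "user", "content": prompt}]
    reply = complete(updated)
    updated.append({"role": "assistant", "content": reply})
    return reply, updated


def fake_complete(messages):
    """Stand-in for the real API call, used only to exercise the function."""
    return f"echo: {messages[-1]['content']}"


seed = [{"role": "system", "content": "Answer briefly."}]
reply, history = generate_response_stateless("Hello", seed, fake_complete)
```

After this call, `history` holds the system message plus the new user and assistant turns, while `seed` is unchanged.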

Integrate with User Interface

In the following step, we will combine GPT-3.5-Turbo with the user interface that was created using the Gradio library. The chatbot takes user input through a textbox and responds with generated text using the generate_response() function mentioned earlier.

You can find an explanation of the functions my_chatbot() and gr.Blocks() in Hands-On Tutorial: Using OpenAI and Gradio to Create a Chatbot on AWS.

def my_chatbot(input, history):
    history = history or []
    # generate_response() already keeps the full conversation in messages,
    # so only the new input is sent; re-joining the history would duplicate it.
    output = generate_response(input)
    history.append((input, output))
    return history, history

with gr.Blocks() as demo:
    gr.Markdown("""<h1><center>My Chatbot</center></h1>""")
    chatbot = gr.Chatbot()
    state = gr.State()
    text = gr.Textbox(placeholder="Hello. Ask me a question.")
    submit = gr.Button("SEND")
    submit.click(my_chatbot, inputs=[text, state], outputs=[chatbot, state])

demo.launch(share=True)
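The Gradio state above stores the conversation as (user, assistant) tuples, whereas the chat API expects role-tagged messages. A minimal sketch of converting one into the other is shown below; the helper name `history_to_messages` is hypothetical and not part of Gradio or the OpenAI library.

```python
def history_to_messages(system_prompt, history):
    """Convert [(user_text, assistant_text), ...] into role-tagged messages."""
    messages = [{"role": "system", "content": system_prompt}]
    for user_text, assistant_text in history or []:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    return messages


example = history_to_messages(
    "Only answer questions about Vivien Chua.",
    [("Who is Vivien Chua?", "Vivien Chua is a co-founder at Meadowfield Capital.")],
)
```

A conversion like this would let each browser session rebuild its own messages list from its Gradio state, rather than relying on a shared global list.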

Test the Model

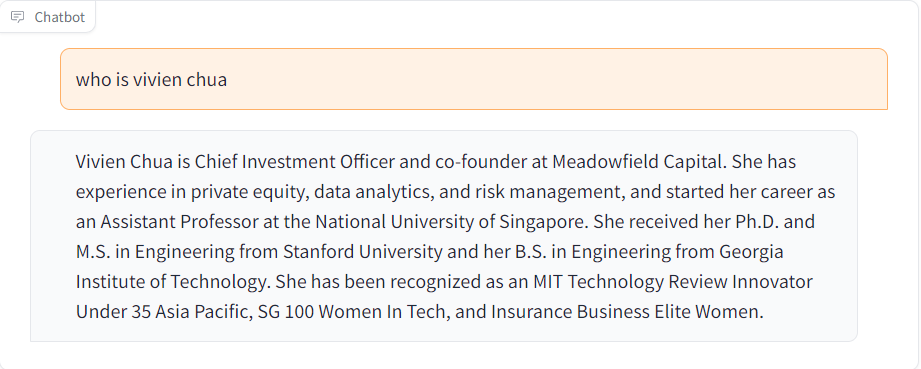

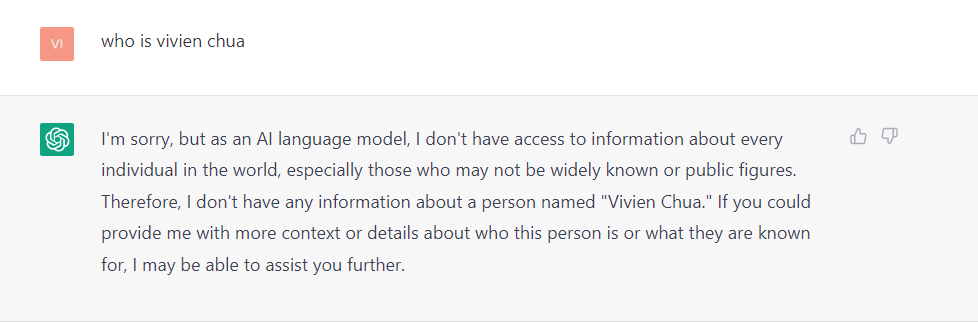

We are ready to see the chatbot in action. Our customized chatbot possesses knowledge that is beyond the scope of regular ChatGPT. We have successfully trained the GPT-3.5-Turbo model using information that was not in the ChatGPT training material.

Output from trained GPT-3.5-Turbo is shown below:

Output from ChatGPT is shown below:

Final Thoughts

Because all custom information must fit within the model’s token limit, this approach has limitations when a chatbot needs large amounts of data. However, there are techniques that can be employed to overcome these challenges and create highly effective and customized chatbots.

If you are interested in learning more, feel free to email me at vivien@meadowfieldcapital.com.

With the right approach and resources, building a customized chatbot using GPT-3.5-Turbo and other advanced tools and platforms can be a valuable investment that can provide significant benefits to users and businesses alike.

Also read:

- A Beginners Guide to Deploying Python on AWS

- Hands-On Tutorial: Using OpenAI and Gradio to Create a Chatbot on AWS

- Fine-tuning GPT-3 with a Custom Dataset on AWS

Thank you for reading!

If you liked the article and would like to see more, consider following me. I post regularly on topics related to on-chain analysis, artificial intelligence and machine learning. I try to keep my articles simple but precise, providing code, examples and simulations whenever possible.

Building a Customized Chatbot from Scratch with GPT-3.5-Turbo and AWS was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

Vivien Chua | Sciencx (2023-04-28T14:19:14+00:00) Building a Customized Chatbot from Scratch with GPT-3.5-Turbo and AWS. Retrieved from https://www.scien.cx/2023/04/28/building-a-customized-chatbot-from-scratch-with-gpt-3-5-turbo-and-aws/
