This content originally appeared on Bits and Pieces - Medium and was authored by Tuhin Banerjee

Real-time Collaboration Made Easy

Microservices architecture has emerged as a popular approach for developing large, complex applications that can be broken down into smaller, independently deployable services. And when it comes to building high-performance microservices, Node.js, gRPC, and TypeScript make for a powerful combination.

In my previous blog post, we explored the fundamentals of building microservices with Node.js, gRPC, and TypeScript. Now, it’s time to take things up a notch and dive deeper into some advanced techniques.

In this blog post, we’ll cover some essential strategies for building real-time collaboration services.

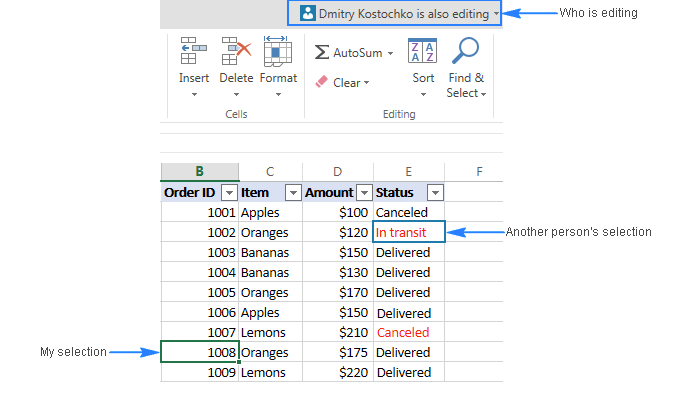

Real-time collaboration is an essential feature of many modern applications, especially in real-time communication, online gaming, and collaborative document editing. In this write-up, we’ll explore how to build it using gRPC, Node.js, and TypeScript.

💡 For Node.js-based microservices, Bit can be a good fit. You can set it up as your main package and dependency manager; it abstracts away the other tools in the workflow, so you can compose and deploy different versions with a single tool. An extra benefit is that you can easily share internal components (i.e., the libraries and functions you create for your use case) with other microservices.

Learn more:

Component-Driven Microservices with NodeJS and Bit

Bit can also help you share reusable types across multiple microservices to reduce the amount of code that needs to be written.

Find out more:

Sharing Types Between Your Frontend and Backend Applications

Let's go over the basics again

What is gRPC?

gRPC is a high-performance, open-source, universal RPC framework developed by Google. It allows you to define the service interfaces and generate the server-side and client-side code in multiple languages, including JavaScript and TypeScript.

gRPC uses the Protocol Buffers (protobuf) serialization format by default, which is a language-agnostic binary format that is compact, fast, and extensible. gRPC supports both unary and streaming RPCs, which means that you can send and receive single values or a stream of values in real time.

What is Node.js?

Node.js is a popular server-side JavaScript runtime that allows you to build scalable, high-performance, and event-driven applications. Node.js uses an event loop architecture that enables non-blocking I/O operations, making it suitable for real-time applications.

Node.js provides many built-in modules and packages, as well as a large ecosystem of third-party libraries and tools that simplify web development.

What is TypeScript?

TypeScript is a superset of JavaScript that adds static type checking and other features on top of the language. TypeScript compiles to JavaScript and can run on any platform that supports JavaScript.

TypeScript provides many benefits, including better code readability, easier maintenance, and improved code quality. It also allows you to catch type-related errors at compile-time, rather than at runtime.

Prerequisites

Before we begin, make sure you have the following prerequisites installed:

- Node.js (version 12 or higher)

- NPM (Node.js package manager)

- Protocol Buffer compiler (protoc)

Step 1: Define the Protocol Buffer File

syntax = "proto3";

import "google/protobuf/empty.proto";

package spreadsheet;

message Cell {

string id = 1;

string value = 2;

Location location = 3;

bool locked = 4;

string error = 5; // Add error field

repeated Collaborator collaborators = 6;

}

message Collaborator {

string id = 1;

string name = 2;

}

message Location {

int32 row = 1;

int32 column = 2;

}

service Spreadsheet {

rpc AddCell(Cell) returns (google.protobuf.Empty) {}

rpc UpdateCell(Cell) returns (google.protobuf.Empty) {}

rpc GetCells(google.protobuf.Empty) returns (stream Cell) {}

rpc UpdateCellStream(stream Cell) returns (stream Cell) {}

rpc realtimeCollaboration(stream Collaborator) returns (stream Cell) {}

}

In this file, we have three message types: Cell, Collaborator, and Location. Cell represents a cell in the spreadsheet, Collaborator represents a user collaborating on the spreadsheet, and Location represents the location of a cell in the spreadsheet.

We also have a Spreadsheet service that defines the methods we'll be using to communicate between the client and server. We have five methods defined: AddCell, UpdateCell, GetCells, UpdateCellStream, and realtimeCollaboration.

AddCell and UpdateCell are used to add or update a cell in the spreadsheet. GetCells is a server-streaming method that returns all cells in the spreadsheet. UpdateCellStream is a bidirectional stream of cell updates. And finally, realtimeCollaboration is a bidirectional stream used for real-time collaboration: clients send Collaborator messages and receive the resulting Cell updates in real time.
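For orientation, here is a rough sketch of the plain-object shapes these messages take once decoded. The interface names and the `makeCell` helper are hypothetical; they mirror what the `toObject()` method of the JavaScript protobuf classes typically returns, where a repeated field such as `collaborators` is exposed as `collaboratorsList`. Treat it as an illustration of the schema rather than the exact generated code.

```typescript
// Plain-object view of the messages in the proto file (illustrative names).
interface LocationShape {
  row: number;
  column: number;
}

interface CollaboratorShape {
  id: string;
  name: string;
}

interface CellShape {
  id: string;
  value: string;
  location?: LocationShape; // message-typed fields may be unset
  locked: boolean;
  error: string;
  collaboratorsList: CollaboratorShape[]; // repeated field
}

// Hypothetical helper: build a cell with proto3 default values filled in.
function makeCell(id: string, value: string, location?: LocationShape): CellShape {
  return { id, value, location, locked: false, error: "", collaboratorsList: [] };
}

const a1 = makeCell("A1", "Hello", { row: 1, column: 1 });
console.log(a1);
```

Scalar fields that are never set come back with their proto3 defaults (empty string, `false`, `0`), which is why the helper fills them in explicitly.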

Step 2: Generate Code from the Protocol Buffer File

Once we’ve defined the protocol buffer file, we need to generate code from it so we can use it in our server and client applications. To do this, we’ll use the Protocol Buffer compiler (protoc) and the gRPC Node.js plugin.

Refer to my previous blog post for how to generate the TypeScript files:

Building High-Performance Microservices with Node.js, gRPC, and TypeScript

Step 3: Define functions in the server.ts

function addCell(call: grpc.ServerUnaryCall<Cell>, callback: grpc.sendUnaryData<Empty>) {

const cell = call.request;

if (locks.has(cell.getId())) {

return callback(new Error('Cell is currently locked'), null);

}

locks.set(cell.getId(), call);

cells.set(cell.getId(), cell);

locks.delete(cell.getId());

// Simulate a long-running operation to test locks

// setTimeout(() => {

// locks.delete(cell.getId());

// callback(null, new Empty());

// }, 5000);

console.log(`-----added cell`, cells);

callback(null, new Empty());

}

function updateCell(call: grpc.ServerUnaryCall<Cell>, callback: grpc.sendUnaryData<Empty>) {

const cell = call.request;

if (locks.has(cell.getId())) {

return callback(new Error('Cell is currently locked'), null);

}

locks.set(cell.getId(), call);

cells.set(cell.getId(), cell);

locks.delete(cell.getId());

console.log(`-----updateCell`, cells);

callback(null, new Empty());

}

function getCells(call: grpc.ServerWritableStream<Empty>) {

for (const cell of cells.values()) {

call.write(cell);

}

call.end();

}

function startServer() {

const server: any = new grpc.Server();

server.addService(SpreadsheetService, { addCell, updateCell, getCells, updateCellStream, realtimeCollaboration });

server.bind('0.0.0.0:50051', grpc.ServerCredentials.createInsecure());

server.start();

console.log('Server started on port 50051');

}

startServer();

The server implements the gRPC service and maintains the state of the spreadsheet using a Map data structure. The addCell and updateCell functions handle incoming requests from clients to add or update cells, while the getCells function streams every cell currently held in that Map.

How to handle concurrency?

import grpc from 'grpc';

import { Spreadsheet, Cell } from './proto/spreadsheet_pb';

import { Empty } from 'google-protobuf/google/protobuf/empty_pb';

const cells = new Map<string, Cell>();

const locks = new Map<string, grpc.ServerUnaryCall<Cell>>();

function addCell(call: grpc.ServerUnaryCall<Cell>, callback: grpc.sendUnaryData<Empty>) {

const cell = call.request;

if (locks.has(cell.getId())) {

return callback(new Error('Cell is currently locked'));

}

locks.set(cell.getId(), call);

cells.set(cell.getId(), cell);

locks.delete(cell.getId());

callback(null, new Empty());

}

function updateCell(call: grpc.ServerUnaryCall<Cell>, callback: grpc.sendUnaryData<Empty>) {

const cell = call.request;

if (locks.has(cell.getId())) {

return callback(new Error('Cell is currently locked'));

}

locks.set(cell.getId(), call);

cells.set(cell.getId(), cell);

locks.delete(cell.getId());

callback(null, new Empty());

}

function getCells(call: grpc.ServerWritableStream<Empty>) {

for (const cell of cells.values()) {

call.write(cell);

}

call.end();

}

function startServer() {

const server = new grpc.Server();

server.addService(Spreadsheet.service, { addCell, updateCell, getCells });

server.bind('0.0.0.0:50051', grpc.ServerCredentials.createInsecure());

server.start();

console.log('Server started on port 50051');

}

startServer();

We add a locks map to keep track of which cells are currently locked by a client. When a client wants to add or update a cell, we first check whether the cell is locked. If it is, we return an error indicating that the cell is currently locked. Otherwise, we take the lock, write the cell to the cells map, and release the lock. Because Node.js runs each of these handlers to completion before picking up the next request, the lock is taken and released within the same synchronous block, so in this version no other request ever sees it held.

Note that this is a very simple lock mechanism and may not be sufficient for handling more complex concurrency scenarios. In a real-world application, you may need a more advanced locking mechanism, such as distributed locks built on a tool like Redis or etcd.
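As a middle ground between the bare Map above and a distributed lock, here is a minimal single-process sketch of a lock table that records who holds each lock and when it expires. The `CellLocks` class, the owner strings, and the 5-second TTL are all assumptions made for illustration; they are not part of the article's repository.

```typescript
// In-memory lock table with owners and expiry (a sketch, not production code).
interface LockEntry {
  owner: string;     // hypothetical client or request identifier
  expiresAt: number; // epoch milliseconds after which the lock is stale
}

class CellLocks {
  private readonly entries = new Map<string, LockEntry>();
  private readonly ttlMs: number;

  constructor(ttlMs: number) {
    this.ttlMs = ttlMs;
  }

  // Returns true if `owner` now holds the lock on `cellId`.
  tryAcquire(cellId: string, owner: string, now: number = Date.now()): boolean {
    const current = this.entries.get(cellId);
    if (current && current.expiresAt > now && current.owner !== owner) {
      return false; // held by someone else and not yet expired
    }
    this.entries.set(cellId, { owner, expiresAt: now + this.ttlMs });
    return true;
  }

  // Only the current owner may release; returns whether a lock was removed.
  release(cellId: string, owner: string): boolean {
    const current = this.entries.get(cellId);
    if (!current || current.owner !== owner) return false;
    this.entries.delete(cellId);
    return true;
  }
}

const cellLocks = new CellLocks(5000);
const first = cellLocks.tryAcquire("A1", "alice", 0);        // lock is free
const second = cellLocks.tryAcquire("A1", "bob", 1000);      // alice still holds it
const afterExpiry = cellLocks.tryAcquire("A1", "bob", 6000); // alice's lock has expired
console.log(first, second, afterExpiry);
```

The expiry guards against a client that takes a lock and then disconnects without releasing it. This still only protects a single server process; with several server instances the same idea would have to live in a shared store.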

Let's add client.ts and test the current implementation.

import grpc from 'grpc';

import { SpreadsheetClient } from './generated/proto/spreadsheet_grpc_pb';

import { Cell, Location, Collaborator } from './generated/proto/spreadsheet_pb';

import { Empty } from 'google-protobuf/google/protobuf/empty_pb';

const client = new SpreadsheetClient('localhost:50051', grpc.credentials.createInsecure());

function addCell(location: Location, value: string, id: string) {

const cell = new Cell();

cell.setLocation(location);

cell.setValue(value);

cell.setId(id);

client.addCell(cell, (error, response) => {

if (error) {

console.error('Failed to add cell:', error);

} else {

console.log('Cell added successfully');

}

});

}

function updateCell(location: Location, value: string, id: string) {

const cell = new Cell();

cell.setLocation(location);

cell.setValue(value);

cell.setId(id);

client.updateCell(cell, (error, response) => {

if (error) {

console.error('Failed to update cell:', error);

} else {

console.log('Cell updated successfully');

}

});

}

function getCells() {

const call = client.getCells(new Empty());

call.on('data', (cell) => {

console.log('Received cell:', cell.toObject());

});

call.on('end', () => {

console.log('Finished receiving cells');

});

}

// Usage examples:

const location = new Location();

location.setRow(1);

location.setColumn(1);

addCell(location, 'Hello', 'A1');

addCell(location, 'Foo', 'A2');

updateCell(location, 'World', 'A1');

updateCell(location, 'World2', 'A1');

updateCell(location, 'World23', 'A1');

getCells();

How can I test if the locks are working?

You can modify the addCell and updateCell functions in server.ts to add a delay before releasing the lock. This simulates a long-running operation and lets you check that a second request for the same cell is rejected while the first one still holds the lock.

function addCell(call: grpc.ServerUnaryCall<Cell>, callback: grpc.sendUnaryData<Empty>) {

const cell = call.request;

if (locks.has(cell.getId())) {

return callback(new Error('Cell is currently locked'), null);

}

locks.set(cell.getId(), call);

cells.set(cell.getId(), cell);

// Simulate a long-running operation

setTimeout(() => {

locks.delete(cell.getId());

callback(null, new Empty());

}, 5000);

}

function updateCell(call: grpc.ServerUnaryCall<Cell>, callback: grpc.sendUnaryData<Empty>) {

const cell = call.request;

if (locks.has(cell.getId())) {

return callback(new Error('Cell is locked by another request'), null);

}

// Take the lock so that requests arriving during the delay are rejected

locks.set(cell.getId(), call);

cells.set(cell.getId(), cell);

// Simulate a long-running operation

setTimeout(() => {

locks.delete(cell.getId());

callback(null, new Empty());

}, 5000);

}

Now that we can send and receive multiple cell updates while maintaining the state of the cells, it’s time to take things to the next level and introduce real-time collaboration. By tracking collaborators and broadcasting cell updates from the server to all connected clients, users can work on the same document in real time, making for a more seamless and efficient experience.

realtimeCollaboration handler on the server

function realtimeCollaboration(call: grpc.ServerDuplexStream<Collaborator, Cell>) {

call.on('data', (collaborator: Collaborator) => {

console.log(`Adding collaborator ${collaborator.getName()}`);

clients.add(call);

console.log(`Received cell update: ${JSON.stringify(collaborator.toObject())}`);

if (!hasCell(collaborator.getId())) {

console.log(`Cell ${collaborator.getId()} not found`);

return;

}

// // Make changes to the local copy of the document

// const newDoc = Automerge.change(sharedDoc, (doc: any) => {

// // Add a new item to the document

// doc.cards.push({ title: 'Rewrite everything in Clojure', done: false })

// })

// let binary = Automerge.save(newDoc)

// console.log(`binary`, binary)

const broadcastCell = cells.get(collaborator.getId());

broadcastCell.setValue(collaborator.getName() + `_value`);

cells.set(collaborator.getId(), broadcastCell);

console.log(`Broadcasting cell update`);

broadcast(broadcastCell);

});

call.on('end', () => {

console.log('Client disconnected');

clients.delete(call);

});

call.on('error', () => {

console.log('Client disconnected due to error');

clients.delete(call);

});

call.on('close', () => {

console.log('Client closed connection');

clients.delete(call);

});

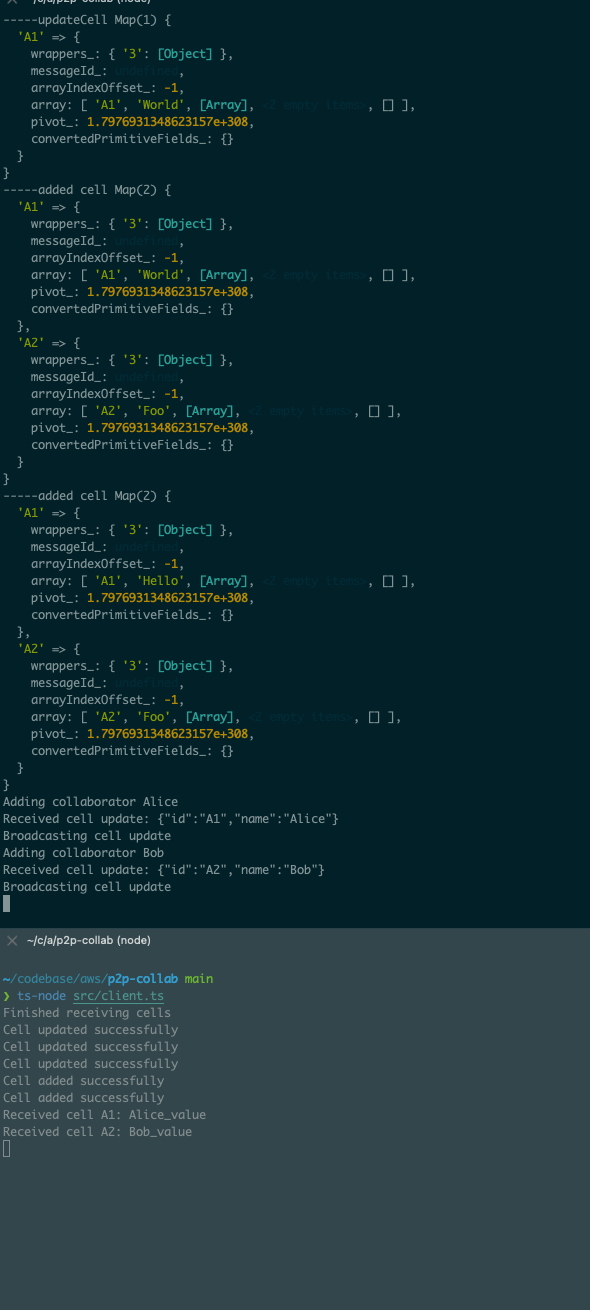

}This is a function that handles real-time collaboration updates sent from the client to the server. It takes a gRPC duplex stream as its input, which allows for bi-directional communication between the client and server.

The function registers a listener for the ‘data’ event, which is triggered whenever the client sends a new Collaborator object over the stream. When a Collaborator object is received, the function first logs a message indicating that a new collaborator is being added. Then, it adds the stream to a set of active client streams called ‘clients’. Next, it checks to see if the cell ID of the collaborator is present in the ‘cells’ Map object by calling the ‘hasCell’ function. If the cell ID is not present, the function logs a message indicating that the cell was not found and returns.

If the cell ID is present in the ‘cells’ Map object, the function retrieves the corresponding Cell object and updates its value to be the name of the collaborator plus “_value”. The updated Cell object is then stored back in the ‘cells’ Map. Finally, the function calls the ‘broadcast’ function to send the updated Cell object to all connected clients.

The function also registers listeners for the ‘end’, ‘error’, and ‘close’ events on the stream, which allow for the server to clean up the ‘clients’ set when a client disconnects or encounters an error.
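The handler relies on a `clients` set, a `hasCell` check, and a `broadcast` function that are not shown in the listing. A minimal sketch of how they could look follows. The `CellSink` and `CellLike` interfaces are stand-ins invented here so the example runs without gRPC; in the real server the set would hold the duplex streams and the map would hold generated Cell objects.

```typescript
// Minimal stand-in for the write side of a gRPC server stream.
interface CellSink<T> {
  write(message: T): boolean;
}

// Simplified cell used only for this sketch.
interface CellLike {
  id: string;
  value: string;
}

const cells = new Map<string, CellLike>();
const clients = new Set<CellSink<CellLike>>();

function hasCell(id: string): boolean {
  return cells.has(id);
}

// Send one cell to every registered stream; drop streams whose write throws.
function broadcast(cell: CellLike): number {
  let delivered = 0;
  for (const client of clients) {
    try {
      client.write(cell);
      delivered += 1;
    } catch {
      clients.delete(client); // assume a failed write means the stream is gone
    }
  }
  return delivered;
}

// Usage with two fake clients that just record what they receive.
const inboxA: CellLike[] = [];
const inboxB: CellLike[] = [];
clients.add({ write: (c) => inboxA.push(c) > 0 });
clients.add({ write: (c) => inboxB.push(c) > 0 });
cells.set("A1", { id: "A1", value: "Alice_value" });

const delivered = hasCell("A1") ? broadcast(cells.get("A1")!) : 0;
console.log(delivered, inboxA, inboxB);
```

Note that this broadcasts to every connected stream, including the one that sent the update; whether the sender should receive its own change back is a design decision the article leaves open.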

Note that there are commented-out lines of code that reference Automerge, a library for building collaborative applications. These lines are not currently used in the function; we will come back to their use case later in this post.

Test real-time collaboration with the client

// real time collaboration testing

const collaborator1 = new Collaborator();

collaborator1.setId('A1');

collaborator1.setName('Alice');

const collaborator2 = new Collaborator();

collaborator2.setId('A2');

collaborator2.setName('Bob');

const call = client.realtimeCollaboration();

call.on('data', (cell: Cell) => {

console.log(`Received cell ${cell.getId()}: ${cell.getValue()}`);

});

call.on('end', () => {

console.log('Connection ended');

});

call.on('error', (err) => {

console.error(err);

});

setTimeout(() => {

const cell = new Cell();

cell.setId('A1');

cell.setValue('Hello from Alice!');

cell.addCollaborators(collaborator1);

cell.addCollaborators(collaborator2);

call.write(collaborator1);

call.write(collaborator2);

}, 1000);

Woo-hoo, we did it!

GitHub link to play with the above code: https://github.com/geektuhin123/p2p-collab

Further thoughts

IMO, debugging gRPC code is tougher than debugging any other communication mode.

So the question is: is gRPC really worth it when it comes to real-time collaboration?

Well, my answer is yes.

Both gRPC and WebSockets can be used for a real-time collaboration project, but they have some differences in terms of performance, ease of use, and flexibility.

gRPC is designed to be a high-performance, low-latency, and efficient remote procedure call framework. It uses the HTTP/2 protocol and binary serialization to achieve fast and efficient communication between client and server. gRPC also provides support for various programming languages and platforms, which makes it a popular choice for building microservices and distributed systems.

WebSockets, on the other hand, is a standard protocol for creating real-time, bidirectional, and event-driven communication between a client and a server over a single TCP connection. It is widely used in web applications and provides a simpler and more flexible API than gRPC.

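In terms of performance, gRPC often has an edge over a typical WebSocket setup that exchanges JSON text, thanks to its compact binary serialization and HTTP/2 multiplexing, although the difference depends on the workload and WebSockets can carry binary frames too. WebSockets, however, can be more flexible and easier to use for certain types of applications, such as web-based collaboration tools, since browsers support them natively.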
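To make the serialization point concrete, the sketch below hand-encodes the `id` and `value` fields of a Cell in the protobuf wire format and compares the size with the equivalent JSON. It is only an illustration: the `encodeStringField` and `encodeCell` helpers are written for this example, handle only short strings, and ignore the framing that gRPC and HTTP/2 add on top of the payload.

```typescript
// Hand-rolled protobuf wire encoding for two string fields (id = 1, value = 2).
// Assumes each string is shorter than 128 bytes so its length fits in one byte.
function encodeStringField(fieldNumber: number, text: string): Uint8Array {
  const body = new TextEncoder().encode(text);
  if (fieldNumber > 15 || body.length > 127) {
    throw new Error("sketch only handles small field numbers and short strings");
  }
  const tag = (fieldNumber << 3) | 2; // wire type 2 = length-delimited
  const out = new Uint8Array(2 + body.length);
  out[0] = tag;
  out[1] = body.length;
  out.set(body, 2);
  return out;
}

function encodeCell(id: string, value: string): Uint8Array {
  const idBytes = encodeStringField(1, id);
  const valueBytes = encodeStringField(2, value);
  const out = new Uint8Array(idBytes.length + valueBytes.length);
  out.set(idBytes, 0);
  out.set(valueBytes, idBytes.length);
  return out;
}

const binarySize = encodeCell("A1", "Hello").length;
const jsonSize = new TextEncoder().encode(
  JSON.stringify({ id: "A1", value: "Hello" })
).length;
console.log({ binarySize, jsonSize });
```

The binary form skips field names and quoting, which is where most of the saving comes from. The same encoding could be sent over a WebSocket as a binary frame, so this is an argument about the message format more than about the transport.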

Now it’s time for some real scalability using the local-first approach.

Remember the commented-out code in the realtimeCollaboration function above? With gRPC as the transport, we can bring in the power of Automerge.

What is Automerge?

Automerge is a JavaScript library that allows for real-time collaboration by automatically merging changes made by multiple users into a single shared document. Here are the steps to use Automerge for real-time collaboration:

- Install Automerge: You can install Automerge by running the following command in your terminal:

- npm install automerge

- Create a shared document: In order to collaborate on a document, you first need to create a shared document that all users can access. You can do this by creating a new Automerge document using the Automerge.init() function.

- Connect to a network: In order for multiple users to collaborate on a document, they need to be connected to a network that allows them to communicate with each other in real-time. There are various ways to achieve this, such as using WebSockets or a peer-to-peer network.

- Synchronize the document: Once multiple users are connected to the network, they can synchronize the document by sharing the document’s state with each other. This is done using the Automerge.applyChanges() function, which applies the changes made by other users to the local copy of the document.

- Collaborate on the document: Once the document is synchronized, all users can collaborate on it in real-time by making changes to their local copy of the document. When a user makes a change, Automerge automatically merges the change with the changes made by other users, ensuring that everyone has the latest version of the document.

- Handle conflicts: In some cases, conflicts can arise when multiple users make changes to the same part of the document at the same time. Automerge provides a conflict resolution mechanism that allows you to resolve conflicts in a deterministic way.

Overall, using Automerge for real-time collaboration involves creating a shared document, connecting to a network, synchronizing the document, collaborating on the document, and handling conflicts as they arise. With Automerge, you can build applications that allow multiple users to collaborate on a single document in real-time, making it ideal for use cases such as collaborative editing, real-time chat, and collaborative task management.
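The key property in the last step is that every replica resolves a conflict the same way, regardless of the order in which it sees the changes. The sketch below illustrates that idea with a much simpler technique, a last-writer-wins register with a logical counter and a writer id as tie-breaker. It is not Automerge's algorithm or API, which is considerably more elaborate; all names here are made up for the example.

```typescript
// One cell value tagged with a logical clock and the id of the writer.
interface Versioned {
  value: string;
  counter: number; // logical clock, incremented on every local write
  actor: string;   // unique writer id, used only to break ties
}

// Deterministic merge: higher counter wins; equal counters fall back to actor id.
function merge(a: Versioned, b: Versioned): Versioned {
  if (a.counter !== b.counter) return a.counter > b.counter ? a : b;
  return a.actor >= b.actor ? a : b;
}

function write(current: Versioned, value: string, actor: string): Versioned {
  return { value, counter: current.counter + 1, actor };
}

const base: Versioned = { value: "", counter: 0, actor: "init" };

// Alice and Bob edit the same cell concurrently from the same starting state.
const fromAlice = write(base, "Hello from Alice", "alice");
const fromBob = write(base, "Hello from Bob", "bob");

// Each side merges the other's edit; the argument order does not matter.
const onAlice = merge(fromAlice, fromBob);
const onBob = merge(fromBob, fromAlice);
console.log(onAlice.value === onBob.value, onAlice.value);
```

Both replicas end up with the same value without any coordination. The price of this simple scheme is that one of the concurrent edits is silently discarded, which is one reason to reach for a library that tracks conflicts instead.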

Final Thoughts

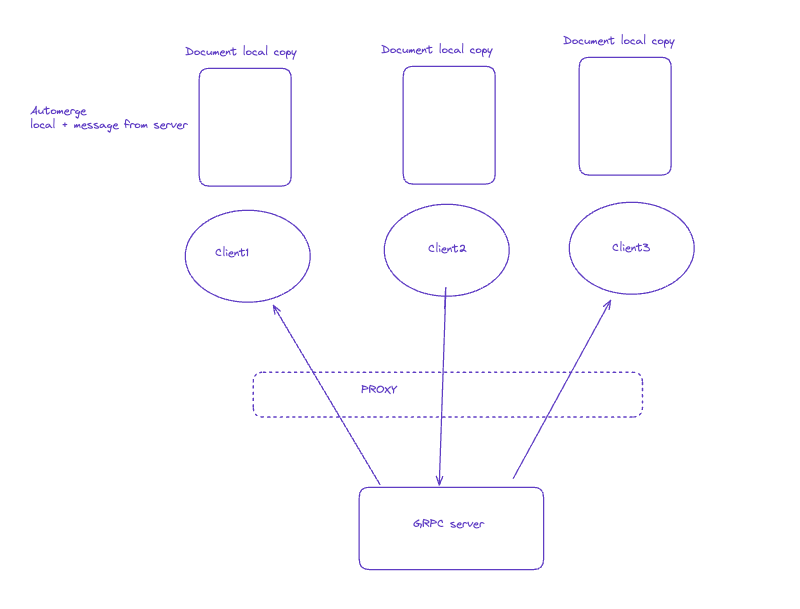

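Automerge + gRPC looks really promising, but with a central server in the middle it is not truly a local-first model.

IMO, local-first is good but comes with a lot of challenges. Taken all the way, it means clients exchange changes directly with each other, which is often called peer-to-peer (P2P) networking.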

One way to implement P2P networking in a real-time collaborative application is to use a technique called “flooding.” In this approach, when a client makes a change to the shared state, it broadcasts the change to all other connected clients. Each client then applies the change to its local copy of the state and forwards it to all other clients. This process continues until all clients have received and applied the change.

To implement this approach, you would need to establish a direct communication channel between the clients. This could be done using WebRTC data channels, which allow for low-latency, peer-to-peer communication in the browser.

Here’s a high-level overview of how you could use Automerge with WebRTC to implement P2P networking:

- Each client creates a local Automerge document to represent the shared state of the application. The initial state of the document could be loaded from a server or generated locally.

- When a client makes a change to the document, it creates a patch using Automerge’s getChanges function and broadcasts the patch to all other connected clients using the WebRTC data channel.

- When a client receives a patch, it applies the patch to its local copy of the document using Automerge’s applyChanges function, and then broadcasts the patch to all other connected clients using the WebRTC data channel.

- Each client listens for incoming patches on the WebRTC data channel and applies them to its local copy of the document as they arrive.

- When a client connects to the network, it requests the current state of the document from one of the other clients. This could be done by sending a message over the WebRTC data channel or by using some other signalling mechanism.

Note that this approach has some limitations and trade-offs. For example, it can be difficult to guarantee that all clients will eventually converge in the same state due to network delays, message loss, and other factors. Additionally, the more clients that are connected to the network, the more bandwidth and processing power will be required to keep the state in sync. However, for small-scale applications with a relatively small number of clients, this approach can be effective and efficient.
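The flooding scheme described above can be simulated in a few lines without any networking. In this sketch the `Peer` class, the change ids, and the fully connected three-peer topology are assumptions for illustration; direct method calls stand in for WebRTC data channel messages, and a plain Map stands in for the Automerge document.

```typescript
interface Change {
  id: string; // unique per change, used to detect duplicates
  cellId: string;
  value: string;
}

class Peer {
  readonly name: string;
  readonly state = new Map<string, string>();
  readonly neighbours: Peer[] = [];
  private readonly seen = new Set<string>();
  received = 0; // counts every delivery, including duplicates

  constructor(name: string) {
    this.name = name;
  }

  // Apply a change once, then forward it to every neighbour.
  receive(change: Change): void {
    this.received += 1;
    if (this.seen.has(change.id)) return; // already applied: stop the flood here
    this.seen.add(change.id);
    this.state.set(change.cellId, change.value);
    for (const peer of this.neighbours) peer.receive(change);
  }
}

// Fully connected network of three peers.
const alice = new Peer("alice");
const bob = new Peer("bob");
const carol = new Peer("carol");
const peers = [alice, bob, carol];
for (const p of peers) {
  for (const q of peers) {
    if (p !== q) p.neighbours.push(q);
  }
}

// Alice makes a local change and the flood carries it to everyone.
alice.receive({ id: "alice-1", cellId: "A1", value: "Hello" });
const totalDeliveries = peers.reduce((sum, p) => sum + p.received, 0);
console.log(peers.map((p) => p.state.get("A1")), totalDeliveries);
```

The `seen` set is what makes the flood terminate: without it, peers would forward the same change to each other forever. Even with it, every peer forwards each change to all of its neighbours once, so the number of messages per change grows roughly with the square of the number of peers in a fully connected network, which is the bandwidth trade-off mentioned above.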

Are you interested in a detailed working model that uses Automerge and gRPC to handle real-time collaboration? Stay tuned for updates on the progress and implementation of this model.


Building a Real-time Collaboration Service with Node.js, gRPC, and Automerge was originally published in Bits and Pieces on Medium, where people are continuing the conversation by highlighting and responding to this story.


Tuhin Banerjee | Sciencx (2023-05-04T14:16:50+00:00) Building a Real-time Collaboration Service with Node.js, gRPC, and Automerge. Retrieved from https://www.scien.cx/2023/05/04/building-a-real-time-collaboration-service-with-node-js-grpc-and-automerge/
