This content originally appeared on DEV Community and was authored by Robert Johnson

There's plenty of advice around on how to create small Docker containers, but what is often missed is the value of making your main application layer as small as possible. In this article I'm going to show you how to do this for a Java application, and why it matters.

I'm assuming that you're already familiar with Docker layer caching; if not, then the official docs will give you a good overview.

TL;DR

- Instead of a single uberjar creation build step, split it into two steps:

  - Copy dependencies to a lib folder (e.g. via Maven's copy-dependencies plugin)
  - Create a jar, ensuring that the lib folder is added to its manifest

- The resulting docker image is much the same size - but Docker can now cache the dependencies, meaning that each subsequent build requires only a few extra kilobytes to store & transfer, rather than tens of MBs

- As an added benefit to splitting out the libraries, it makes it easier to trim down our dependency jars, such as removing useless alternate native libraries from JNI jars

The downsides of uberjars for Docker

For a long time the standard way to deploy Java applications has been to deploy the humble uberjar, which contains both our compiled code and all our application's dependencies. Packaging Java apps in this way made sense back when deploying an application meant transferring files to and from servers directly, but it's not so useful in this age of containerized apps.

The reason for this is that for every single change to your application's source, all those dependencies bundled inside the uberjar end up inside the same docker layer as your app - and so each subsequent build effectively duplicates all of those third-party dependencies within your Docker repository. This is especially wasteful when we consider that your application code is what is going to change the vast majority of the time.

Suppose we have a nicely slimmed-down Dockerfile, using an Alpine base image, and even creating a tiny "custom" JRE using jlink. However, suppose we have a lot of dependencies - 77MB in this example.

The key part of our Dockerfile will look like

WORKDIR /app

CMD ["java", "-jar", "/app/my-app.jar"]

COPY --from=app-build /app/target/my-app-1.0-SNAPSHOT-jar-with-dependencies.jar /app/my-app.jar

And the docker layers created will look something like

$ docker history my-app

IMAGE CREATED CREATED BY SIZE COMMENT

548d9d7dc060 28 seconds ago COPY /app/target/my-app-1.0-SNAPSHOT-jar-wit… 77.7MB buildkit.dockerfile.v0

<missing> About a minute ago CMD ["java" "-jar" "/app/my-app.jar"] 0B buildkit.dockerfile.v0

<missing> About a minute ago WORKDIR /app 0B buildkit.dockerfile.v0

<missing> About a minute ago RUN /bin/sh -c apk add --no-cache libstdc++ … 2.55MB buildkit.dockerfile.v0

<missing> About a minute ago COPY /javaruntime /opt/java/openjdk # buildk… 37.1MB buildkit.dockerfile.v0

<missing> About a minute ago ENV PATH=/opt/java/openjdk/bin:/usr/local/sb… 0B buildkit.dockerfile.v0

<missing> About a minute ago ENV JAVA_HOME=/opt/java/openjdk 0B buildkit.dockerfile.v0

<missing> 3 months ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B

<missing> 3 months ago /bin/sh -c #(nop) ADD file:40887ab7c06977737… 7.05MB

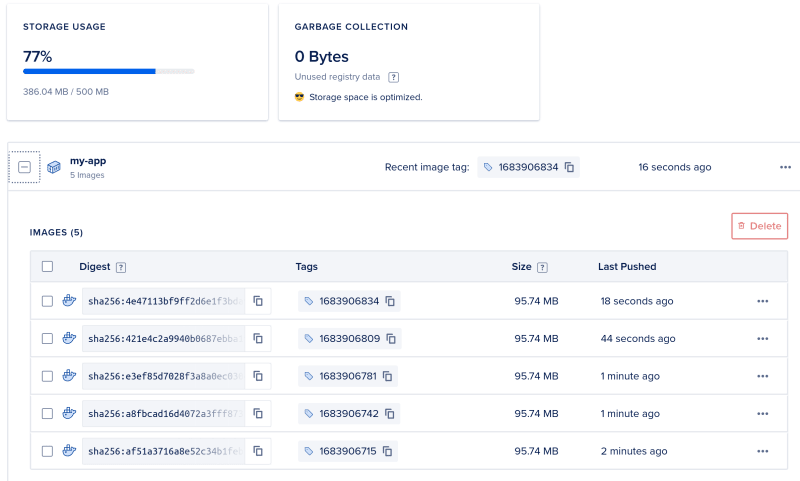

The total image size is 128MB, and after pushing the first version, this takes up 96MB in my container registry:

On each subsequent build & push of the new image, we're pushing up a whole layer including all our dependencies all over again:

The push refers to repository [registry.digitalocean.com/my-repo/my-app]

f78ba99c16ec: Pushing 77.74MB/77.74MB

76d6a32df414: Layer already exists

a7b85e634de7: Layer already exists

0eea560269c8: Layer already exists

7cd52847ad77: Layer already exists

So after just 5 pushes, we've already used up 386MB (!)
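To make the growth concrete, here is a rough back-of-the-envelope sketch in Java. It uses the figures quoted above (96MB after the first push, 386MB after five) and assumes every later push stores the same amount, which works out to (386 - 96) / 4 = 72.5MB per push. The thin-jar figure of 16.9kB per push is taken from the push log later in the article, and assuming the registry stores roughly that much is my own simplification. The class and method names are made up for illustration.

```java
public class RegistryGrowth {

    /**
     * Storage used after a number of pushes: the first push stores every layer,
     * each later push stores only the layers that changed.
     */
    static double totalMb(double firstPushMb, double changedPerPushMb, int pushes) {
        return firstPushMb + (pushes - 1) * changedPerPushMb;
    }

    public static void main(String[] args) {
        double firstPushMb = 96.0;                     // quoted in the article
        double uberjarPerPushMb = (386.0 - 96.0) / 4;  // derived from the article's figures: 72.5
        double thinJarPerPushMb = 16.9 / 1000;         // 16.9kB app layer; stored size assumed similar

        for (int pushes : new int[] {1, 5, 10}) {
            System.out.printf("%2d pushes: uberjar %.1f MB, thin jar %.2f MB%n",
                    pushes,
                    totalMb(firstPushMb, uberjarPerPushMb, pushes),
                    totalMb(firstPushMb, thinJarPerPushMb, pushes));
        }
    }
}
```

The point of the sketch is only the shape of the two curves: one grows by the size of the dependency layer on every push, the other by the size of the application jar.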

Splitting apart our dependencies from our application code

Using Maven, we simply replace any uberjar creating step(s) with the following 2 steps:

- use the maven-dependency-plugin's copy-dependencies goal to copy the dependencies to target/lib
- use the maven-jar-plugin to create the jar containing our compiled code only, but set up to look for its dependencies in ./lib:

<plugins>
  <!-- (other plugins...) -->

  <!-- Copy runtime dependencies to lib folder -->
  <plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-dependency-plugin</artifactId>
    <executions>
      <execution>
        <id>copy-dependencies</id>
        <phase>prepare-package</phase>
        <goals>
          <goal>copy-dependencies</goal>
        </goals>
        <configuration>
          <outputDirectory>${project.build.directory}/lib</outputDirectory>
          <includeScope>runtime</includeScope>
        </configuration>
      </execution>
    </executions>
  </plugin>

  <!-- Creates the jar and configures classpath to use lib folder -->
  <plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-jar-plugin</artifactId>
    <configuration>
      <archive>
        <manifest>
          <addClasspath>true</addClasspath>
          <classpathPrefix>lib/</classpathPrefix>
          <mainClass>path.to.MainClass</mainClass>
        </manifest>
      </archive>
    </configuration>
  </plugin>
</plugins>

All we need to do to our Dockerfile is add an extra build step to copy the libs:

WORKDIR /app

CMD ["java", "-jar", "/app/my-app.jar"]

COPY --from=app-build /app/target/lib /app/lib

COPY --from=app-build /app/target/my-app-1.0-SNAPSHOT.jar /app/my-app.jar

(NB It's important here that the lib directory copy happens before the jar copy; if not, then application updates would invalidate the layer cache for the lib directory, thus losing the benefit!)
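Why the order matters can be sketched with a deliberately simplified model of the build cache, written here in Java. It assumes only the documented rule that once one instruction misses the cache, every instruction after it is rebuilt as well. It does not model how Docker actually computes cache keys, and the instruction labels and class name are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;

public class LayerCacheModel {

    /**
     * For each instruction, in order, reports whether it would be served from the cache.
     * The first instruction whose input changed invalidates itself and everything after it.
     */
    static List<Boolean> cacheHits(List<String> instructions, List<String> changedInputs) {
        List<Boolean> hits = new ArrayList<>();
        boolean invalidated = false;
        for (String instruction : instructions) {
            if (changedInputs.contains(instruction)) {
                invalidated = true;
            }
            hits.add(!invalidated);
        }
        return hits;
    }

    public static void main(String[] args) {
        // Only the application jar changed between the two builds.
        List<String> changed = List.of("COPY jar");

        // Libs first: the lib layer is reused, only the jar layer is rebuilt.
        System.out.println(cacheHits(List.of("COPY lib", "COPY jar"), changed));

        // Jar first: the changed jar invalidates the lib layer that follows it.
        System.out.println(cacheHits(List.of("COPY jar", "COPY lib"), changed));
    }
}
```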

Benefits of the split

Upon pushing the first version of this build, there's not much difference from what we had before - the overall image size stored in the repository is almost the same. The benefit comes when we push subsequent new builds where we've changed only our source code; after 5 builds (where only our app source has changed), the total size in our image repo is only a few kB larger.

During each push, we only have to push a few more kb each time; now that the libs are in their own layer, which remains unchanged, the image repository is able to re-use the dependencies pushed in previous builds:

b54d889308d2: Pushing 16.9kB

8a9ec9b0baaa: Layer already exists

76d6a32df414: Layer already exists

a7b85e634de7: Layer already exists

0eea560269c8: Layer already exists

7cd52847ad77: Layer already exists

We can now push hundreds of new image builds without getting anywhere near to the limit of the free tier of this image repository, whereas before we'd hit the limit after not even 10 builds!

How the thin jar works

All we're doing with the above build configuration is creating a jar with just our compiled code in it, but telling it where to find the dependencies. If you're not familiar with the structure of a jar file, it's basically a zip file that contains

- a load of compiled java classes (.class files)
- a "manifest" file, there to tell java things like where your main class is and where any external dependencies are, when you try to run it via java -jar

Uberjars simplify things by putting all the dependencies' compiled code into the jar along with your own app. But as described in the official docs for jar manifests, all we need to do to split out the dependencies is to specify them in a Class-Path entry in our manifest. With the split in place, our manifest file will look something like:

Manifest-Version: 1.0
Created-By: Maven JAR Plugin 3.3.0
Build-Jdk-Spec: 20
Class-Path: lib/kafka-clients-3.4.0.jar lib/zstd-jni-1.5.2-1.jar lib/lz4
 -java-1.8.0.jar lib/slf4j-api-2.0.6.jar lib/slf4j-simple-2.0.6.jar
Main-Class: my.app.Main

Notice that annoyingly we have to manually specify each jar file explicitly in the manifest; thankfully, the maven-jar-plugin does this work for us (as should other build tools).
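If you want to check what ended up in the Class-Path, the JDK's own java.util.jar.Manifest class can read it back. The sketch below parses a copy of the manifest shown above from a string rather than from a real jar, so it runs on its own. Note that a long manifest value is wrapped onto a continuation line that starts with a single space, which is why lib/lz4 and -java-1.8.0.jar are joined back into one entry. The class name, the SAMPLE constant and the helper method are made up for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;
import java.util.jar.Attributes;
import java.util.jar.Manifest;

public class ManifestClassPath {

    // Copy of the example manifest; the line starting with a space continues the Class-Path value.
    static final String SAMPLE =
            "Manifest-Version: 1.0\n"
            + "Class-Path: lib/kafka-clients-3.4.0.jar lib/zstd-jni-1.5.2-1.jar lib/lz4\n"
            + " -java-1.8.0.jar lib/slf4j-api-2.0.6.jar lib/slf4j-simple-2.0.6.jar\n"
            + "Main-Class: my.app.Main\n";

    /** Returns the space-separated Class-Path entries of a manifest, or an empty list if there are none. */
    static List<String> classPathEntries(String manifestText) {
        try {
            Manifest manifest = new Manifest(
                    new ByteArrayInputStream(manifestText.getBytes(StandardCharsets.UTF_8)));
            String value = manifest.getMainAttributes().getValue(Attributes.Name.CLASS_PATH);
            if (value == null || value.trim().isEmpty()) {
                return List.of();
            }
            return Arrays.asList(value.trim().split("\\s+"));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Prints one dependency path per line, relative to the jar's own location.
        classPathEntries(SAMPLE).forEach(System.out::println);
    }
}
```

For a real jar, the same Manifest object is available from java.util.jar.JarFile via getManifest().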

Bonus - Slimming down JNI dependencies

An added bonus to splitting out our library jars is that it makes it easier to further trim down the contents of JNI (Java Native Interface) dependencies. JNI dependencies often contain native libraries for multiple platforms - more waste! With an extra command in our Dockerfile, we can remove these unwanted native libraries from within those jars to trim down the image size even further.

The example application I'm working with is a Kafka Streams app, and so has a transitive dependency on rocksdbjni. This is a whopping 53MB! Within our Dockerfile we can remove the unwanted libraries from it using zip --delete (recall that jar files are just zip files), like so:

RUN zip --delete ./target/lib/rocksdbjni-7.1.2.jar \
    librocksdbjni-linux32-musl.so \
    librocksdbjni-linux32.so \
    librocksdbjni-linux64.so \
    librocksdbjni-linux-ppc64le.so \
    librocksdbjni-linux-ppc64le-musl.so \
    librocksdbjni-linux-aarch64.so \
    librocksdbjni-linux-aarch64-musl.so \
    librocksdbjni-linux-s390x.so \
    librocksdbjni-linux-s390x-musl.so \
    librocksdbjni-win64.dll \
    librocksdbjni-osx-arm64.jnilib \
    librocksdbjni-osx-x86_64.jnilib

This trimmed-down rocksdbjni jar is only 4MB - nice!
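To find out which native libraries a dependency jar carries before deciding what to delete, you can list its entries. Here is a minimal Java sketch using the JDK's zip classes. The list of file suffixes is my own assumption about what counts as a native library, and the demo jar it builds in memory is a made-up stand-in (not rocksdbjni) so that the example runs without any files on disk. Pass a path to a real jar as the first argument to inspect it instead.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

public class NativeLibLister {

    // Assumed suffixes for native libraries; extend this list as needed.
    private static final List<String> NATIVE_SUFFIXES = List.of(".so", ".dll", ".jnilib", ".dylib");

    /** Returns the names of entries in the jar (zip) stream that look like native libraries. */
    static List<String> nativeEntries(InputStream jarBytes) {
        List<String> names = new ArrayList<>();
        try (ZipInputStream zip = new ZipInputStream(jarBytes)) {
            for (ZipEntry entry = zip.getNextEntry(); entry != null; entry = zip.getNextEntry()) {
                String name = entry.getName();
                if (NATIVE_SUFFIXES.stream().anyMatch(name::endsWith)) {
                    names.add(name);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return names;
    }

    /** Builds a tiny in-memory jar with made-up entries, purely for the demo. */
    static byte[] demoJar() {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (ZipOutputStream zip = new ZipOutputStream(out)) {
            for (String name : List.of("org/example/Demo.class", "libdemo-linux64.so", "libdemo-win64.dll")) {
                zip.putNextEntry(new ZipEntry(name));
                zip.write(new byte[] {1, 2, 3});
                zip.closeEntry();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        try (InputStream in = args.length > 0
                ? Files.newInputStream(Path.of(args[0]))
                : new ByteArrayInputStream(demoJar())) {
            nativeEntries(in).forEach(System.out::println);
        }
    }
}
```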

Conclusion

We get a lot of bang for our buck here: storage & network costs converted from megabytes to kilobytes with just a few tweaks to our app & container builds. This might sound rather academic, but as we've seen here it can make the difference between hitting your repository storage limit and having headroom for hundreds of builds.

Give it a try, and let me know what you think!

Robert Johnson | Sciencx (2023-05-12T22:30:09+00:00) How to deploy thin jars for better Docker caching. Retrieved from https://www.scien.cx/2023/05/12/how-to-deploy-thin-jars-for-better-docker-caching/
