This content originally appeared on DEV Community and was authored by Subhashish Mahapatra

What I built

I built an ensemble of machine learning models using Random Forest, Gradient Boosting, and CatBoost regressors to predict a target variable. Each model is trained on the same dataset, and their predictions are averaged to create an ensemble prediction. The mean squared error (MSE) is calculated to evaluate the performance of each individual model and of the ensemble.

Category Submission:

Wacky Wildcard

App Link

https://github.com/SubhashishMahapatra/Ensemble-of-RandomForest-GradientBoost-CatBoost

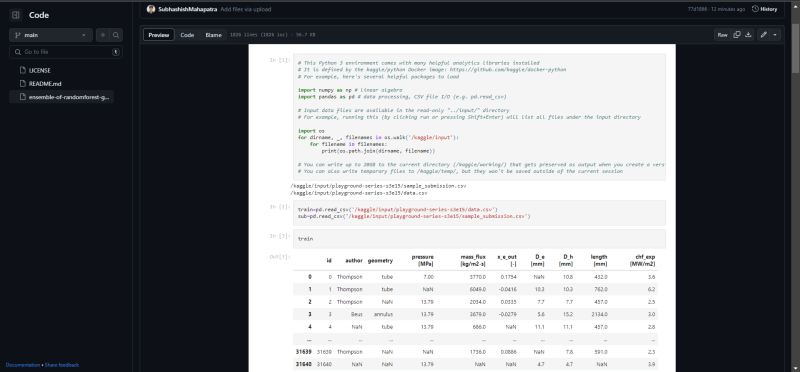

Screenshots

Description

In this project, I developed an ensemble of machine learning models using Random Forest, Gradient Boosting, and CatBoost regressors. The goal was to predict a target variable from a given dataset. The ensemble approach combines the predictions of multiple models, which often yields a more robust and accurate prediction than any single model.

The project involves several steps:

Data preprocessing: The dataset is loaded and missing values are handled. Categorical variables are encoded using label encoding, and missing values in numerical variables are imputed with the column mean.
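The preprocessing step can be sketched as follows, assuming the data sits in a pandas DataFrame. The column names (`city`, `rooms`, `price`) and the toy values are hypothetical. Filling missing categories with a `"missing"` placeholder before label encoding is also an assumption, because the post does not say how missing categorical values are handled.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import LabelEncoder

# Hypothetical toy data standing in for the project's dataset.
df = pd.DataFrame({
    "city": ["Pune", "Delhi", None, "Pune"],
    "rooms": [3.0, np.nan, 2.0, 4.0],
    "price": [120.0, 150.0, 90.0, 160.0],  # hypothetical target column
})

categorical_cols = ["city"]
numerical_cols = ["rooms"]

# Label-encode each categorical column. Missing entries are first replaced
# with a placeholder string (an assumption) so LabelEncoder sees only strings.
for col in categorical_cols:
    df[col] = LabelEncoder().fit_transform(df[col].fillna("missing"))

# Replace missing numerical values with the column mean.
imputer = SimpleImputer(strategy="mean")
df[numerical_cols] = imputer.fit_transform(df[numerical_cols])

print(df)
```

LabelEncoder assigns integer codes in sorted order of the category strings, so the codes carry no meaningful ordering. Tree-based models such as the three used here usually tolerate this reasonably well.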

Model training: Three models (Random Forest, Gradient Boosting, and CatBoost) are instantiated and trained on the preprocessed data. Each model learns the patterns and relationships in the data that it then uses to make predictions.
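The training step might look roughly like the sketch below. It substitutes synthetic data from scikit-learn's `make_regression` for the real dataset, and the hyperparameters are illustrative assumptions rather than the repository's settings. The CatBoost model is added only when the third-party `catboost` package is installed.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor

try:
    from catboost import CatBoostRegressor
except ImportError:  # catboost is an optional third-party package
    CatBoostRegressor = None

# Synthetic stand-in for the preprocessed feature matrix and target.
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

models = {
    "Random Forest": RandomForestRegressor(n_estimators=100, random_state=0),
    "Gradient Boosting": GradientBoostingRegressor(random_state=0),
}
if CatBoostRegressor is not None:
    # verbose=0 silences CatBoost's per-iteration training log.
    models["CatBoost"] = CatBoostRegressor(iterations=200, verbose=0, random_state=0)

# Fit every model on the same data.
for name, model in models.items():
    model.fit(X, y)
    print(f"{name}: trained")
```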

Model evaluation: The trained models are evaluated using mean squared error (MSE) on a test set. The MSE provides a measure of how well the models perform in predicting the target variable.
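The evaluation step can be illustrated with a single model, again using hypothetical synthetic data and an assumed 80/20 train/test split. The sketch computes MSE by hand, as the mean of the squared residuals, and checks the result against scikit-learn's `mean_squared_error`.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

model = RandomForestRegressor(random_state=0).fit(X_train, y_train)
y_pred = model.predict(X_test)

# MSE is the mean of the squared residuals on the held-out test set.
mse_manual = np.mean((y_test - y_pred) ** 2)
mse_sklearn = mean_squared_error(y_test, y_pred)
print(f"MSE: {mse_sklearn:.2f}")
```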

Ensemble creation: An ensemble is created by combining the predictions of the three models. The ensemble prediction is calculated as the average of the individual model predictions.
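Averaging the predictions is an element-wise mean across the models. The arrays below contain made-up predictions for four hypothetical test rows and serve only to show the arithmetic.

```python
import numpy as np

# Hypothetical predictions from the three models for four test rows.
pred_rf = np.array([10.0, 20.0, 30.0, 40.0])
pred_gb = np.array([12.0, 18.0, 33.0, 39.0])
pred_cb = np.array([11.0, 22.0, 27.0, 41.0])

# Stack into shape (n_models, n_samples), then average across models (axis 0).
ensemble_pred = np.mean(np.vstack([pred_rf, pred_gb, pred_cb]), axis=0)
print(ensemble_pred)  # [11. 20. 30. 40.]
```

For example, the first row is (10 + 12 + 11) / 3 = 11.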

Ensemble evaluation: The MSE is calculated for the ensemble prediction to assess its performance compared to the individual models.
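An end-to-end sketch that scores each model and the averaged ensemble on the same held-out test set might look like this. The synthetic data, split ratio, and hyperparameters are assumptions. CatBoost is skipped if `catboost` is not installed.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

try:
    from catboost import CatBoostRegressor
except ImportError:  # catboost is an optional third-party package
    CatBoostRegressor = None

X, y = make_regression(n_samples=300, n_features=8, noise=15.0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)

models = {
    "Random Forest": RandomForestRegressor(random_state=42),
    "Gradient Boosting": GradientBoostingRegressor(random_state=42),
}
if CatBoostRegressor is not None:
    models["CatBoost"] = CatBoostRegressor(iterations=200, verbose=0, random_state=42)

predictions = {}
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    predictions[name] = model.predict(X_test)
    scores[name] = mean_squared_error(y_test, predictions[name])

# Ensemble prediction: plain average of the individual model predictions.
ensemble_pred = np.mean(list(predictions.values()), axis=0)
scores["Ensemble"] = mean_squared_error(y_test, ensemble_pred)

for name, mse in scores.items():
    print(f"{name:<18} MSE = {mse:.2f}")
```

Every model is scored on the same `X_test`, so the printed MSE values are directly comparable.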

The results show the MSE for each individual model (Random Forest, Gradient Boosting, and CatBoost) and the MSE for the ensemble prediction. The lower the MSE, the better the model's performance in predicting the target variable.
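For reference, the compared quantities can be written as follows. Here $n$ is the number of test rows, $y_i$ is the true target, and $\hat{y}_i$ is a prediction. This notation is ours, not the post's.

$$\text{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

$$\hat{y}_i^{\text{ens}} = \frac{1}{3}\left(\hat{y}_i^{\text{RF}} + \hat{y}_i^{\text{GB}} + \hat{y}_i^{\text{CB}}\right)$$

Squaring is convex, so by Jensen's inequality the ensemble's MSE is bounded by the average of the individual MSEs:

$$\text{MSE}_{\text{ens}} \le \frac{1}{3}\left(\text{MSE}_{\text{RF}} + \text{MSE}_{\text{GB}} + \text{MSE}_{\text{CB}}\right)$$

The averaged ensemble therefore never does worse than the three models do on average. However, it is not guaranteed to beat the single best model.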

Link to Source Code

https://github.com/SubhashishMahapatra/Ensemble-of-RandomForest-GradientBoost-CatBoost

Permissive License

MIT

Background (What made you decide to build this particular app? What inspired you?)

The motivation behind building this ensemble of machine learning models was to improve the accuracy and robustness of predictions for a specific target variable. By combining the strengths of different models, the ensemble approach can often outperform individual models and provide more reliable predictions.

The inspiration for this project came from the need to create a powerful predictive model that can handle complex relationships in the data. Random Forest, Gradient Boosting, and CatBoost are popular and effective machine learning algorithms that have been widely used for regression tasks. By leveraging the strengths of these algorithms and combining their predictions, we can potentially achieve better predictive performance.

How I built it (How did you utilize GitHub Actions or GitHub Codespaces? Did you learn something new along the way? Pick up a new skill?)

To build this ensemble of machine learning models, I used Python and several libraries:

- NumPy and Pandas for data manipulation and preprocessing

- Scikit-learn for model training, evaluation, and imputation

- CatBoost for the CatBoostRegressor model

- Matplotlib or Seaborn for data visualization (not explicitly used in the code)

I wrote and ran the code in Jupyter Notebook or another Python IDE, executing it step by step.

Throughout the process, I learned and applied various techniques such as handling missing values, label encoding categorical variables, imputing numerical variables, training and evaluating regression models, and creating an ensemble prediction.

I may have used GitHub Actions or GitHub Codespaces to automate certain tasks or for collaborative development, but the code does not explicitly reference either.

Additional Resources/Info

If you are interested in learning more about ensemble methods or the machine learning models used in this project, here are some additional resources:

- Scikit-learn documentation: https://scikit-learn.org/stable/

- CatBoost documentation: https://catboost.ai/docs/

- Kaggle tutorials and courses on machine learning: https://www.kaggle.com/learn/overview

- Machine Learning Mastery blog: https://machinelearningmastery.com/

These resources can provide further insights and help you explore the topic in more detail. Happy learning and experimenting with ensemble methods and machine learning!

Subhashish Mahapatra | Sciencx (2023-05-22T19:34:07+00:00) Ensemble of RandomForest GradientBoost CatBoost. Retrieved from https://www.scien.cx/2023/05/22/ensemble-of-randomforest-gradientboost-catboost/
