This content originally appeared on HackerNoon and was authored by Auto Encoder: How to Ignore the Signal Noise

:::info Authors:

(1) Bobby He, Department of Computer Science, ETH Zurich (Correspondence to: bobby.he@inf.ethz.ch.);

(2) Thomas Hofmann, Department of Computer Science, ETH Zurich.

:::

Table of Links

Simplifying Transformer Blocks

Discussion, Reproducibility Statement, Acknowledgements and References

A Duality Between Downweighted Residual and Restricting Updates In Linear Layers

A DUALITY BETWEEN DOWNWEIGHTED RESIDUALS AND RESTRICTING UPDATES IN LINEAR LAYERS

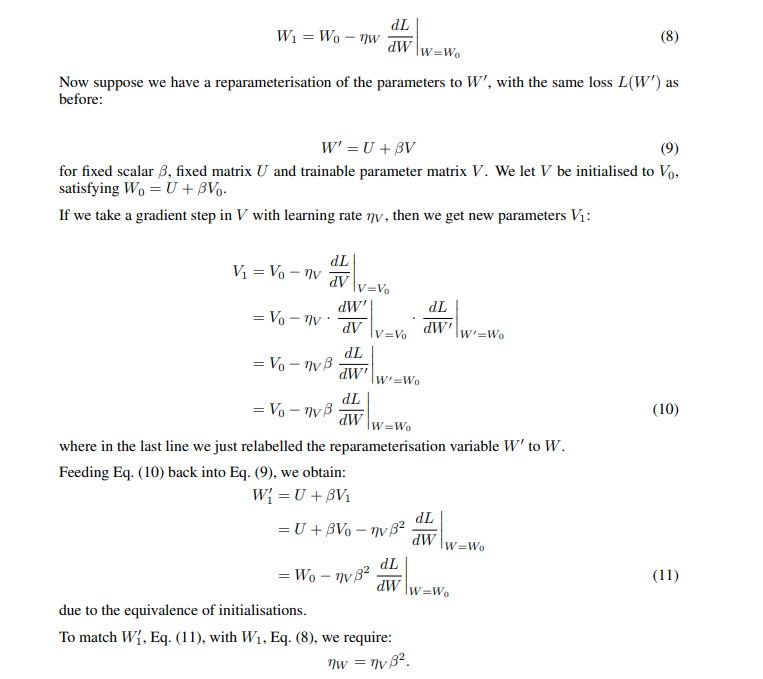

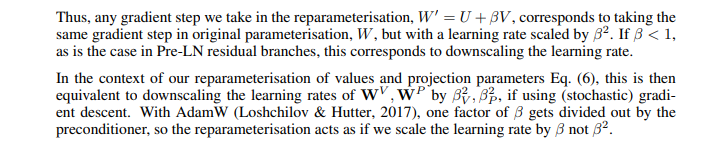

In Sec. 4.1, we motivated our reparameterisation of the value and projection parameters, Eq. (6), through a duality between downweighted residual branches and restricting parameter updates (materialised through smaller learning rates) in linear layers. This is a relatively simple argument, found elsewhere in the literature (e.g. Ding et al. (2023)), which we outline here for completeness.
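As a minimal chain-rule sketch of this duality, assume (for illustration only; this notation is ours and not necessarily the exact form of Eq. (6)) that a weight matrix is reparameterised as $W = \alpha I + \beta W'$, with fixed scalars $\alpha, \beta$ and a trainable matrix $W'$, so that $\beta$ downweights the trainable term. Under plain gradient descent, a step of size $\eta$ on $W'$ moves $W$ exactly as a step of size $\beta^2 \eta$ on $W$ would:

```latex
\begin{aligned}
\frac{\partial L}{\partial W'} &= \beta \, \frac{\partial L}{\partial W}, \\
W'_1 &= W'_0 - \eta \, \beta \left.\frac{\partial L}{\partial W}\right|_{W = W_0}, \\
W_1 = \alpha I + \beta W'_1 &= W_0 - \beta^2 \eta \left.\frac{\partial L}{\partial W}\right|_{W = W_0}.
\end{aligned}
```

In this sketch, downweighting the trainable term by $\beta$ is equivalent, for a single step, to training $W$ directly with the smaller learning rate $\eta_W = \beta^2 \eta$.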

We suppose we have a (differentiable) loss function $L(W)$, which is a function of some parameter matrix $W$. We consider taking a gradient step to minimise $L$, with learning rate $\eta_W$, from initialisation $W_0$. This would give new parameters $W_1$:

$$
W_1 = W_0 - \eta_W \left.\frac{\partial L}{\partial W}\right|_{W = W_0}
$$
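The gradient step described above, and the duality it supports, can be checked numerically. The sketch below assumes numpy and a hypothetical quadratic loss $L(W) = \tfrac{1}{2}\lVert Wx - y\rVert^2$ for a single linear layer; it also assumes a reparameterisation $W = \alpha I + \beta W'$ with fixed scalars $\alpha, \beta$ (none of these choices come from the paper). It verifies that one plain gradient step of size $\eta$ on $W'$ yields the same $W_1$ as a step of size $\beta^2 \eta$ taken directly on $W$.

```python
import numpy as np


def loss_grad(W, x, y):
    """Gradient of the hypothetical loss L(W) = 0.5 * ||W x - y||^2, i.e. (W x - y) x^T."""
    residual = W @ x - y
    return np.outer(residual, x)


def gd_step(W0, lr, x, y):
    """One plain gradient step on W: W1 = W0 - lr * dL/dW evaluated at W0."""
    return W0 - lr * loss_grad(W0, x, y)


def reparam_step(W_prime0, alpha, beta, lr, x, y):
    """One gradient step on W' under the assumed reparameterisation W = alpha*I + beta*W'.

    By the chain rule dL/dW' = beta * dL/dW, so W' moves by -lr * beta * dL/dW.
    Returns the resulting effective matrix W1 = alpha*I + beta*W'_1.
    """
    d = W_prime0.shape[0]
    W0 = alpha * np.eye(d) + beta * W_prime0
    grad_W_prime = beta * loss_grad(W0, x, y)
    W_prime1 = W_prime0 - lr * grad_W_prime
    return alpha * np.eye(d) + beta * W_prime1


rng = np.random.default_rng(0)
d = 4
x, y = rng.normal(size=d), rng.normal(size=d)
alpha, beta, lr = 1.0, 0.1, 0.5
W_prime0 = rng.normal(size=(d, d))
W0 = alpha * np.eye(d) + beta * W_prime0

# Duality: a step of size lr on W' equals a step of size beta**2 * lr on W.
W1_reparam = reparam_step(W_prime0, alpha, beta, lr, x, y)
W1_direct = gd_step(W0, beta**2 * lr, x, y)
print(np.allclose(W1_reparam, W1_direct))  # True
```

Swapping `beta**2 * lr` for `lr` in the direct step breaks the equality, which is the sense in which a small $\beta$ acts like a reduced learning rate. The $\beta^2$ factor relies on plain gradient descent; an adaptive optimiser that normalises gradient scale would give a different factor.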

:::info This paper is available on arxiv under CC 4.0 license.

:::


Auto Encoder: How to Ignore the Signal Noise | Sciencx (2024-06-19T13:00:19+00:00) A Duality Between Downweighted Residual and Restricting Updates In Linear Layers. Retrieved from https://www.scien.cx/2024/06/19/a-duality-between-downweighted-residual-and-restricting-updates-in-linear-layers/
