This content originally appeared on HackerNoon and was authored by Auto Encoder: How to Ignore the Signal Noise

:::info Authors:

(1) Bobby He, Department of Computer Science, ETH Zurich (Correspondence to: bobby.he@inf.ethz.ch.);

(2) Thomas Hofmann, Department of Computer Science, ETH Zurich.

:::

Table of Links

Simplifying Transformer Blocks

Discussion, Reproducibility Statement, Acknowledgements and References

A Duality Between Downweighted Residual and Restricting Updates In Linear Layers

3 PRELIMINARIES

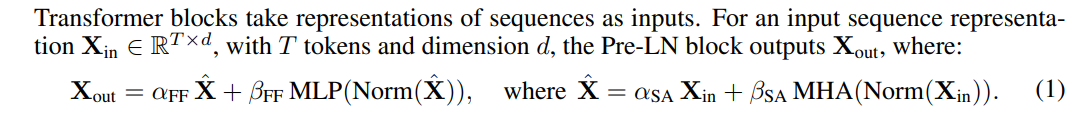

A deep transformer architecture of depth L is formed by sequentially stacking L transformer blocks. The most common block is Pre-LN, depicted in Fig. 1 (left), which we treat as a baseline for comparing training speed, both per update and in runtime. It differs from the original Post-LN block only in the position of the normalisation layers relative to the skip connections, but is more popular because the Post-LN block suffers from poor training stability and signal propagation in deep layers (Xiong et al., 2020; Liu et al., 2020; Noci et al., 2022; He et al., 2023).
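The difference in normalisation placement can be sketched in a few lines of NumPy. This is a minimal illustration, not code from the paper: `layer_norm` omits the learned scale and bias, and `f` is a hypothetical stand-in for either sub-block (attention or MLP).

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalise each token (row) to zero mean and unit variance; no learned scale or bias."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def pre_ln(x, f):
    # Norm sits inside the residual branch; the skip path carries x unchanged.
    return x + f(layer_norm(x))

def post_ln(x, f):
    # Norm is applied after the skip connection, so it also rescales the skip path.
    return layer_norm(x + f(x))

x = np.arange(12, dtype=float).reshape(3, 4)   # 3 tokens, width 4

def zero_branch(h):
    # A sub-block that contributes nothing, used to isolate the skip path.
    return np.zeros_like(h)

pre_out = pre_ln(x, zero_branch)    # the input passes through untouched
post_out = post_ln(x, zero_branch)  # the input is normalised even with an empty branch
```

With an empty branch, the Pre-LN form returns its input exactly, while the Post-LN form still renormalises it. That is the sense in which the two blocks differ only in where the normalisation sits relative to the skip connection.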

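For a layer input sequence representation $X_{\text{in}} \in \mathbb{R}^{T \times d}$, with T tokens and dimension d, the Pre-LN block outputs $X_{\text{out}}$, where:

$$X_{\text{out}} = \alpha_{\text{FF}}\,\hat{X} + \beta_{\text{FF}}\,\text{MLP}(\text{Norm}(\hat{X})), \quad \text{where} \quad \hat{X} = \alpha_{\text{SA}}\,X_{\text{in}} + \beta_{\text{SA}}\,\text{MHA}(\text{Norm}(X_{\text{in}})),$$

with scalar gain weights $\alpha_{\text{FF}}, \beta_{\text{FF}}, \alpha_{\text{SA}}, \beta_{\text{SA}}$ fixed to 1 by default. Here, “MHA” stands for Multi-Head Attention (detailed below), and “Norm” denotes a normalisation layer (Ba et al., 2016; Zhang & Sennrich, 2019). In words, the Pre-LN transformer block consists of two sequential sub-blocks (one attention and one MLP), each with a normalisation layer and a residual connection. Crucially, the normalisation layers are placed within the residual branch. The MLP usually has a single hidden layer whose dimension is some multiple of d (e.g. 4 (Vaswani et al., 2017) or 8/3 (Touvron et al., 2023)), and acts on each token in the sequence independently.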
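As a hedged sketch of how the two sub-blocks and the four gain weights fit together, the NumPy code below assumes the usual Pre-LN update: each sub-block acts on the normalised input, and its output, scaled by a β gain, is added to the skip path scaled by an α gain. The function names, the ReLU activation, the RMS-style normalisation without a learned scale, and the uniform token-mixing stand-in for MHA are all illustrative assumptions rather than details from the paper.

```python
import numpy as np

def rms_norm(x, eps=1e-6):
    # RMS-style normalisation per token, without a learned scale (assumption).
    return x / np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps)

def mlp(x, w_in, w_out):
    # Single hidden layer with ReLU (assumed activation), applied to each token independently.
    return np.maximum(x @ w_in, 0.0) @ w_out

def pre_ln_block(x_in, mha, w_in, w_out,
                 alpha_sa=1.0, beta_sa=1.0, alpha_ff=1.0, beta_ff=1.0):
    # Attention sub-block: gain-scaled skip path plus gain-scaled residual branch.
    x_hat = alpha_sa * x_in + beta_sa * mha(rms_norm(x_in))
    # MLP sub-block, with the same structure.
    return alpha_ff * x_hat + beta_ff * mlp(rms_norm(x_hat), w_in, w_out)

rng = np.random.default_rng(0)
T, d = 5, 8                                   # tokens, model width
x = rng.normal(size=(T, d))
w_in = rng.normal(size=(d, 4 * d)) / np.sqrt(d)        # hidden width 4d
w_out = rng.normal(size=(4 * d, d)) / np.sqrt(4 * d)

def uniform_mix(h):
    # Hypothetical stand-in for MHA: every token receives the mean of all tokens.
    return np.full((T, T), 1.0 / T) @ h

y = pre_ln_block(x, uniform_mix, w_in, w_out)
```

Setting both β gains to 0 in this sketch reduces the block to the identity, and permuting the input tokens permutes the MLP output in the same way, which matches the description of the MLP acting on each token independently.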

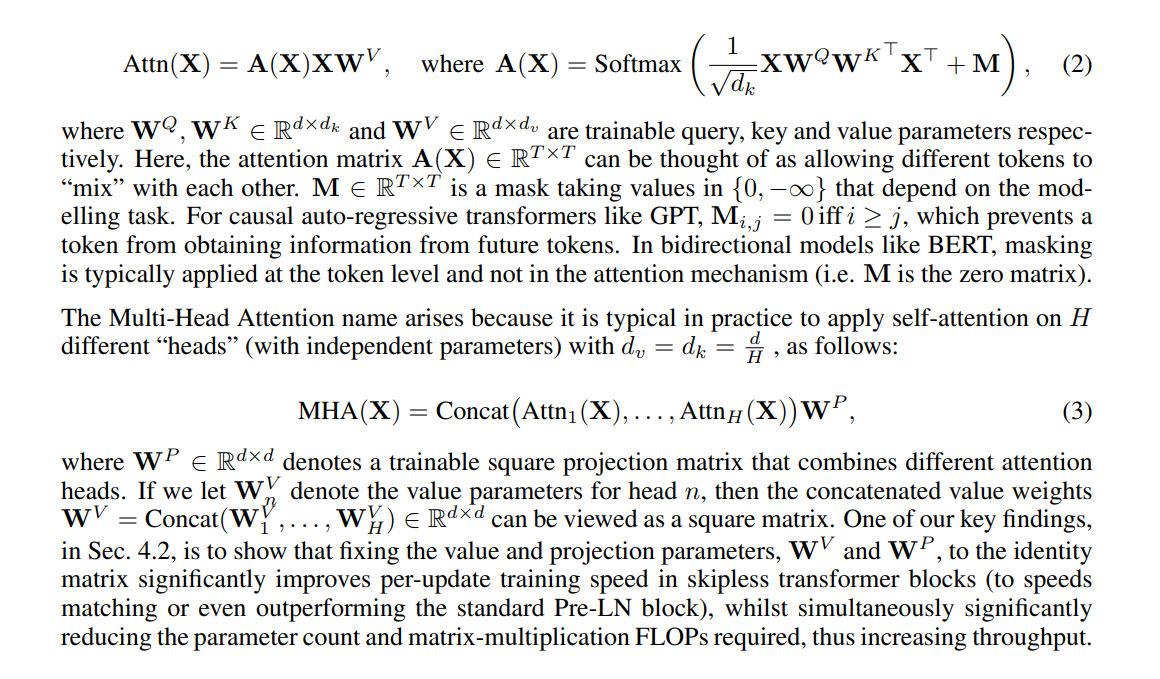

The MHA sub-block allows tokens to share information between one another using self-attention. For input sequence X, the self-attention mechanism outputs:
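For orientation, the sketch below implements standard single-head scaled dot-product self-attention in the style of Vaswani et al. (2017). It is an illustration under stated assumptions and not necessarily the notation or exact form used by the authors: the projection matrices `w_q`, `w_k`, `w_v`, the optional causal mask, and all function names are hypothetical.

```python
import numpy as np

def softmax(z):
    # Row-wise softmax, shifted by the row maximum for numerical stability.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(x, w_q, w_k, w_v, causal=False):
    """Single-head scaled dot-product self-attention over a (T, d) sequence."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])          # (T, T) pairwise similarities
    if causal:
        # Each token may only attend to itself and earlier tokens.
        allowed = np.tril(np.ones(scores.shape, dtype=bool))
        scores = np.where(allowed, scores, -np.inf)
    attn = softmax(scores)                           # each row sums to 1
    return attn @ v, attn

rng = np.random.default_rng(0)
T, d, d_k = 4, 8, 8
x = rng.normal(size=(T, d))
w_q, w_k, w_v = (rng.normal(size=(d, d_k)) / np.sqrt(d) for _ in range(3))

out, attn = self_attention(x, w_q, w_k, w_v, causal=True)
```

Each output token is a weighted average of the value vectors, with the weights given by a row of `attn`. This is how information moves between tokens, in contrast to the MLP, which processes every token separately.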

:::info This paper is available on arXiv under CC 4.0 license.

:::


Auto Encoder: How to Ignore the Signal Noise | Sciencx (2024-06-19T09:00:16+00:00) Improving Training Stability in Deep Transformers: Pre-LN vs. Post-LN Blocks. Retrieved from https://www.scien.cx/2024/06/19/improving-training-stability-in-deep-transformers-pre-ln-vs-post-ln-blocks/
