This content originally appeared on HackerNoon and was authored by Vijay Murganoor


Introduction

Generative artificial intelligence (generative AI) has captured the imagination of organizations and is transforming the customer experience across industries and organizations of every size around the globe. This leap in AI capability, fueled by multi-billion-parameter large language models (LLMs) and transformer neural networks, has opened the door to new productivity improvements, creative capabilities, and more.

As organizations evaluate and adopt generative AI for their employees and customers, cybersecurity practitioners must assess the risks, governance, and controls for this evolving technology at a rapid pace. As a security leader working with the largest, most complex customers at various cloud providers, I’m regularly consulted on trends, best practices, and the rapidly evolving landscape of generative AI and the associated security and privacy implications. In that spirit, I’d like to share key strategies that you can use to accelerate your own generative AI security journey.

This post, the first in a series on securing generative AI, establishes a mental model that will help you approach the risk and security implications based on the type of generative AI workload you are deploying. It then highlights key considerations for security leaders and practitioners to prioritize when securing generative AI workloads. Follow-on posts will dive deep into developing generative AI solutions that meet customers’ security requirements, best practices for threat modeling generative AI applications, approaches for evaluating compliance and privacy considerations, and ways to use generative AI to improve your own cybersecurity operations.


Where to Start

As with any emerging technology, a strong grounding in the foundations of that technology is critical to helping you understand the associated scopes, risks, security, and compliance requirements. To learn more about the foundations of generative AI, I recommend reading about what generative AI is and its unique terminology and nuances, then exploring examples of how organizations are using it to innovate for their customers.

If you’re just starting to explore or adopt generative AI, you might imagine that an entirely new security discipline will be required. While there are unique security considerations, the good news is that generative AI workloads are, at their core, another data-driven computing workload, and they inherit much of the same security regimen. If you’ve invested in cloud cybersecurity best practices over the years and embraced prescriptive advice from established security frameworks, you’re well on your way!

Core security disciplines like identity and access management, data protection, privacy and compliance, application security, and threat modeling are still critically important for generative AI workloads, just as they are for any other workload. For example, if your generative AI application is accessing a database, you’ll need to know what the data classification of the database is, how to protect that data, how to monitor for threats, and how to manage access. But beyond emphasizing long-standing security practices, it’s crucial to understand the unique risks and additional security considerations that generative AI workloads bring. This post highlights several security factors, both new and familiar, for you to consider.
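As one illustration of carrying a familiar control over to a generative AI workload, the sketch below checks a data source's classification before its contents are included in a prompt. Everything here is hypothetical: the classification levels, the `DataSource` type, and the `may_send_to_model` function are illustrative names, and the policy (a per-application classification ceiling) is an assumption rather than a recommendation from any specific framework.

```python
from dataclasses import dataclass
from enum import IntEnum


class Classification(IntEnum):
    """Hypothetical data classification levels, least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class DataSource:
    """A database or other store the generative AI application reads from."""
    name: str
    classification: Classification


def may_send_to_model(source: DataSource, ceiling: Classification) -> bool:
    """Return True if data from `source` may be included in a prompt.

    `ceiling` is the highest classification the application is approved
    to pass to the model (an assumed, organization-defined policy value).
    """
    return source.classification <= ceiling


# Example: an application approved for data up to INTERNAL.
product_docs = DataSource("product_docs", Classification.PUBLIC)
customer_db = DataSource("customer_db", Classification.CONFIDENTIAL)

allowed = [s.name for s in (product_docs, customer_db)
           if may_send_to_model(s, Classification.INTERNAL)]
print(allowed)  # ['product_docs']
```

The check itself is the same kind of access decision you would make for any other workload; the only generative AI-specific part is where it is applied, namely before data reaches the model.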

Determine Your Scope

Your organization has made the decision to move forward with a generative AI solution; now what do you do as a security leader or practitioner? As with any security effort, you must understand the scope of what you’re tasked with securing. Depending on your use case, you might choose a managed service where the service provider takes more responsibility for the management of the service and model, or you might choose to build your own service and model.

Let’s look at how you might use various generative AI solutions in a generic cloud environment. Security is a top priority, and providing customers with the right tool for the job is critical. For example, you can use serverless, API-driven services with simple-to-consume, pre-trained foundation models (FMs) provided by various vendors. Managed AI services provide you with additional flexibility while still using pre-trained FMs, helping you to accelerate your AI journey securely. You can also build and train your own models using cloud-based machine learning platforms. Maybe you plan to use a consumer generative AI application through a web interface or API such as a chatbot or generative AI features embedded into a commercial enterprise application your organization has procured. Each of these service offerings has different infrastructure, software, access, and data models and, as such, will result in different security considerations. To establish consistency, I’ve grouped these service offerings into logical categorizations, which I’ve named scopes.

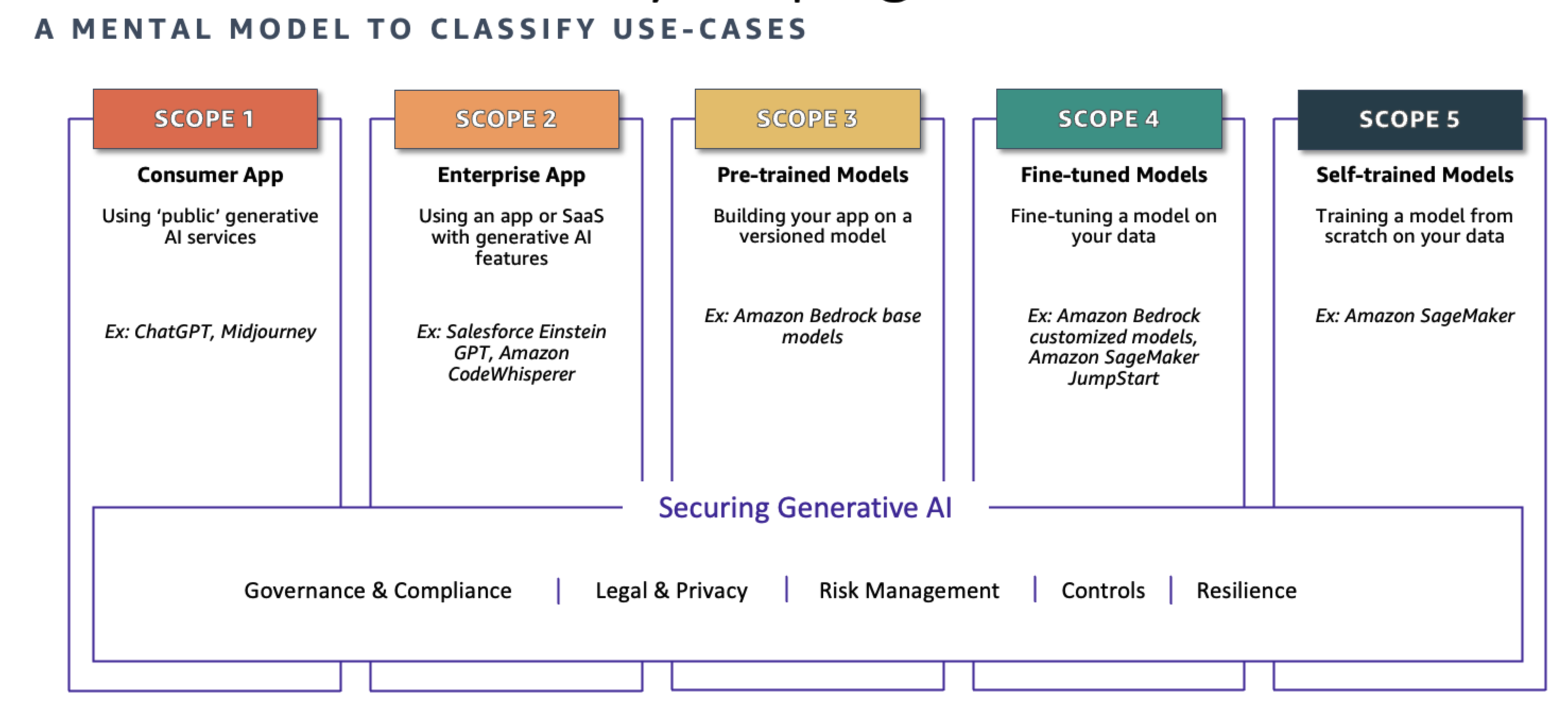

To help simplify your security scoping efforts, I’ve created a matrix that summarizes the key security disciplines you should consider, depending on which generative AI solution you select. This is called the Generative AI Security Scoping Matrix, shown in Figure 1.


The first step is to determine which scope your use case fits into. The scopes are numbered 1–5, representing least ownership to greatest ownership.

Buying Generative AI:

- Scope 1: Consumer app – Your business consumes a public third-party generative AI service, either at no cost or paid. At this scope, you don’t own or see the training data or the model, and you cannot modify or augment it. You invoke APIs or directly use the application according to the terms of service of the provider.
  - Example: An employee interacts with a generative AI chat application to generate ideas for an upcoming marketing campaign.

- Scope 2: Enterprise app – Your business uses a third-party enterprise application that has generative AI features embedded within, and a business relationship is established between your organization and the vendor.
  - Example: You use a third-party enterprise scheduling application that has a generative AI capability embedded within to help draft meeting agendas.

Building Generative AI:

- Scope 3: Pre-trained models – Your business builds its own application using an existing third-party generative AI foundation model. You directly integrate it with your workload through an application programming interface (API).
  - Example: You build an application to create a customer support chatbot that uses a foundation model through cloud provider APIs.

- Scope 4: Fine-tuned models – Your business refines an existing third-party generative AI foundation model by fine-tuning it with data specific to your business, generating a new, enhanced model that’s specialized to your workload.
  - Example: Using an API to access a foundation model, you build an application for your marketing teams that enables them to build marketing materials specific to your products and services.

- Scope 5: Self-trained models – Your business builds and trains a generative AI model from scratch using data that you own or acquire. You own every aspect of the model.
  - Example: Your business wants to create a model trained exclusively on deep, industry-specific data to license to companies in that industry, creating a completely novel LLM.
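The scoping step described above can be sketched as a small decision function. This is a minimal illustration and not part of the Generative AI Security Scoping Matrix itself: the `Scope` enum, the `WorkloadProfile` fields, and the `determine_scope` and `is_buying` functions are hypothetical names, and the yes/no questions are one assumed way to encode the five scope definitions.

```python
from dataclasses import dataclass
from enum import IntEnum


class Scope(IntEnum):
    """Scopes 1-5, ordered from least to greatest ownership."""
    CONSUMER_APP = 1
    ENTERPRISE_APP = 2
    PRETRAINED_MODEL = 3
    FINE_TUNED_MODEL = 4
    SELF_TRAINED_MODEL = 5


@dataclass(frozen=True)
class WorkloadProfile:
    """Hypothetical yes/no answers describing a generative AI use case."""
    has_vendor_business_relationship: bool = False
    builds_own_application: bool = False
    fine_tunes_with_own_data: bool = False
    trains_model_from_scratch: bool = False


def determine_scope(profile: WorkloadProfile) -> Scope:
    """Pick the scope by checking the greatest degree of ownership first."""
    if profile.trains_model_from_scratch:
        return Scope.SELF_TRAINED_MODEL
    if profile.fine_tunes_with_own_data:
        return Scope.FINE_TUNED_MODEL
    if profile.builds_own_application:
        return Scope.PRETRAINED_MODEL
    if profile.has_vendor_business_relationship:
        return Scope.ENTERPRISE_APP
    return Scope.CONSUMER_APP


def is_buying(scope: Scope) -> bool:
    """Scopes 1-2 are 'buying' generative AI; scopes 3-5 are 'building'."""
    return scope <= Scope.ENTERPRISE_APP


# Example: the marketing-materials application from Scope 4.
marketing_app = WorkloadProfile(builds_own_application=True,
                                fine_tunes_with_own_data=True)
scope = determine_scope(marketing_app)
print(scope.name, int(scope))  # FINE_TUNED_MODEL 4
```

Checking from Scope 5 downward reflects the ordering of the scopes: a workload that meets the definition of a higher-ownership scope is classified there, even if it also uses capabilities described in a lower one.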


Conclusion

We outlined how well-established cloud security principles provide a solid foundation for securing generative AI solutions. While you will use many existing security practices and patterns, you must also learn the fundamentals of generative AI and the unique threats and security considerations that must be addressed. Use the Generative AI Security Scoping Matrix to help determine the scope of your generative AI workloads and the associated security dimensions that apply. With your scope determined, you can then prioritize solving for your critical security requirements to enable the secure use of generative AI workloads by your business. By approaching generative AI security with a structured, informed strategy, you can harness the transformative potential of this technology while safeguarding your organization’s data, compliance, and operational integrity.


Vijay Murganoor | Sciencx (2024-06-19T16:56:41+00:00) Mental Model for Generative AI Risk and Security Framework. Retrieved from https://www.scien.cx/2024/06/19/mental-model-for-generative-ai-risk-and-security-framework/
