This content originally appeared on DEV Community and was authored by Douglas Makey Mendez Molero

In my previous article, we discussed endianness, its importance, and how to work with it. Understanding endianness is crucial for dealing with data at the byte level, especially in network programming. We examined several examples that highlighted how endianness affects data interpretation.

One key area we focused on was the relationship between endianness and TCP stream sockets. We explained why the bind syscall expects certain information for the INET family to be in a specific endianness, known as network byte order. This ensures that data is correctly interpreted across different systems, which might use different native endianness.

One of the next interesting topics after binding the socket is putting it into listening mode with the listen syscall. In this article, we are going to dive a little deeper into this next phase.

Listen: Handshake and SYN/Accept Queues

Recall that TCP is a connection-oriented protocol. This means the sender and receiver need to establish a connection, agreeing on its parameters, through a three-way handshake. The server must be listening for connection requests from clients before a connection can be established, and this is done using the listen syscall.

We won't delve into the details of the three-way handshake here, as many excellent articles cover this topic in depth. Additionally, there are related topics like TFO (TCP Fast Open) and syncookies that modify its behavior. Instead, we'll provide a brief overview. If you're interested, we can explore these topics in greater detail in future articles.

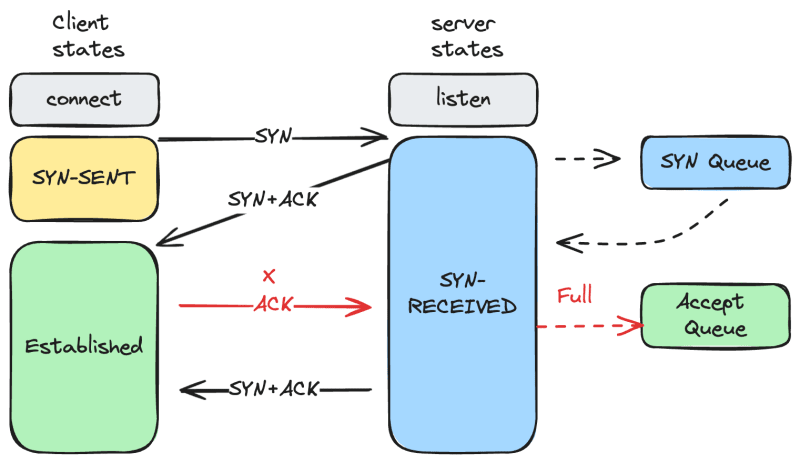

The three-way handshake involves the following steps:

- The client sends a SYN (synchronize) packet to the server, indicating a desire to establish a connection.

- The server responds with a SYN-ACK (synchronize-acknowledge) packet, indicating a willingness to establish a connection.

- The client replies with an ACK (acknowledge) packet, confirming it has received the SYN-ACK message, and the connection is established.

In the first article, we mentioned that the listen operation causes the kernel to create two queues for the socket. The kernel uses these queues to store information about the state of each connection.

The kernel creates these two queues:

- SYN Queue: Its size is determined by a system-wide setting. Although referred to as a queue, it is actually a hash table.

- Accept Queue: Its size is specified by the application. We will discuss this further later. It functions as a FIFO (First In, First Out) queue for established connections.

As shown in the previous diagram, these queues play a crucial role in the process. When the server receives a SYN request from the client, the kernel stores the connection in the SYN queue. After the server receives an ACK from the client, the kernel moves the connection from the SYN queue to the accept queue. Finally, when the server makes the accept system call, the connection is taken out of the accept queue.
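To make these transitions concrete, here is a toy model in Rust. It is only a sketch under simplifying assumptions: the `ListenQueues` type, its methods, and the (address, port) connection key are hypothetical names chosen for illustration, and the real kernel tracks far more state. The SYN queue is modeled as a hash set, echoing the note above that it is really a hash table, and the accept queue as a FIFO `VecDeque`.

```rust
use std::collections::{HashSet, VecDeque};

/// Hypothetical key for a connection attempt: the client's address and port.
type ConnId = (&'static str, u16);

/// Toy model of a listening socket's two queues (not the kernel's data structures).
struct ListenQueues {
    syn_queue: HashSet<ConnId>,     // half-open connections, looked up by peer
    accept_queue: VecDeque<ConnId>, // fully established connections, FIFO
}

impl ListenQueues {
    fn new() -> Self {
        Self { syn_queue: HashSet::new(), accept_queue: VecDeque::new() }
    }

    /// SYN received: the server answers with SYN-ACK and records the attempt.
    fn on_syn(&mut self, id: ConnId) {
        self.syn_queue.insert(id);
    }

    /// Final ACK received: move the connection from the SYN queue to the accept queue.
    /// Returns false if there was no matching half-open connection.
    fn on_ack(&mut self, id: ConnId) -> bool {
        if self.syn_queue.remove(&id) {
            self.accept_queue.push_back(id);
            true
        } else {
            false
        }
    }

    /// accept(): hand the oldest established connection to the application.
    fn accept(&mut self) -> Option<ConnId> {
        self.accept_queue.pop_front()
    }
}

fn main() {
    let mut queues = ListenQueues::new();
    queues.on_syn(("10.0.0.1", 50000));
    queues.on_syn(("10.0.0.2", 50001));
    queues.on_ack(("10.0.0.2", 50001)); // the second client finishes its handshake first
    queues.on_ack(("10.0.0.1", 50000));
    // Connections leave the accept queue in the order their handshakes completed.
    println!("{:?}", queues.accept()); // Some(("10.0.0.2", 50001))
}
```

Note that `accept()` only ever sees connections that completed the handshake; a half-open entry never reaches the application.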

Accept Queue Size

As mentioned, these queues have size limits, which act as thresholds for managing incoming connections. If a queue's limit is exceeded, the kernel may drop the incoming connection request or send an RST (Reset) packet to the client, indicating that the connection cannot be established.

If you recall our previous examples where we created a socket server, you'll notice that we specified a backlog value and passed it to the listen function. This backlog value determines the length of the queue for completely established sockets waiting to be accepted, known as the accept queue.

```rust
// Listen for incoming connections
let backlog = Backlog::new(1).expect("Failed to create backlog");
listen(&socket_fd, backlog).expect("Failed to listen for connections");
```

If we inspect the Backlog::new function, we will uncover some interesting details. Here's the function definition:

```rust
...
pub const MAXCONN: Self = Self(libc::SOMAXCONN);
...
/// Create a `Backlog`, an `EINVAL` will be returned if `val` is invalid.
pub fn new<I: Into<i32>>(val: I) -> Result<Self> {
    cfg_if! {
        if #[cfg(any(target_os = "linux", target_os = "freebsd"))] {
            const MIN: i32 = -1;
        } else {
            const MIN: i32 = 0;
        }
    }

    let val = val.into();

    if !(MIN..Self::MAXCONN.0).contains(&val) {
        return Err(Errno::EINVAL);
    }

    Ok(Self(val))
}
```

We can notice a few things:

- On Linux and FreeBSD systems, the minimum acceptable value is -1, while on other systems it is 0. We will explore the behavior associated with these values later.
- The value must stay below MAXCONN, the default maximum number of established connections that can be queued. Unlike in C, where the kernel silently caps a larger value at net.core.somaxconn, the nix crate's Backlog::new returns EINVAL. Note that the range MIN..MAXCONN is half-open, so MAXCONN itself is rejected as well.
- Interestingly, MAXCONN is defined as libc::SOMAXCONN, which the libc crate hardcodes to 4096. Therefore, even if we raise net.core.somaxconn, Backlog::new still rejects anything at or above 4096, so we won't be able to use a greater value.
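To make the range check concrete, here is a minimal, std-only sketch that re-implements it outside nix. The function name `backlog_is_valid` is hypothetical, and the constants (-1 or 0 as the minimum, 4096 as MAXCONN) are assumptions that mirror the snippet above on Linux; this is not nix's API.

```rust
/// Hypothetical re-implementation of the range check in nix's `Backlog::new`.
/// `min` is -1 on Linux/FreeBSD and 0 elsewhere; `maxconn` stands in for
/// `libc::SOMAXCONN` (assumed to be 4096, as on Linux).
fn backlog_is_valid(val: i32, min: i32, maxconn: i32) -> bool {
    // Half-open range: `min` is allowed, `maxconn` itself is not.
    (min..maxconn).contains(&val)
}

fn main() {
    const LINUX_MIN: i32 = -1;
    const OTHER_MIN: i32 = 0;
    const MAXCONN: i32 = 4096;

    for val in [-2, -1, 0, 1, 4095, 4096, 5000] {
        println!(
            "{val:>5}: linux={} other={}",
            backlog_is_valid(val, LINUX_MIN, MAXCONN),
            backlog_is_valid(val, OTHER_MIN, MAXCONN)
        );
    }
}
```

Running it shows -1 accepted only with the Linux minimum, and both 4096 and 5000 rejected everywhere.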

The SOMAXCONN Parameter

The net.core.somaxconn kernel parameter sets the maximum number of established connections that can be queued for a socket; the kernel caps any backlog passed to listen at this value. This parameter helps prevent a flood of connection requests from overwhelming the system. Its default value comes from the kernel's SOMAXCONN constant, which is 4096 on recent kernels.

You can view and modify this value using the sysctl command:

```shell
$ sudo sysctl net.core.somaxconn
net.core.somaxconn = 4096

$ sudo sysctl -w net.core.somaxconn=8000
net.core.somaxconn = 8000
```
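Since sysctl names map directly onto files under /proc/sys (the dots become slashes), a program can also read the current limit without shelling out. The sketch below assumes a Linux /proc filesystem; `sysctl_path`, `parse_sysctl_u32`, and `read_sysctl_u32` are hypothetical helpers, and on other systems the read simply yields `None`. Reading does not require root, whereas writing, as with `sysctl -w` above, does.

```rust
use std::fs;
use std::path::PathBuf;

/// Map a sysctl name such as `net.core.somaxconn` to its file under /proc/sys.
fn sysctl_path(name: &str) -> PathBuf {
    PathBuf::from("/proc/sys").join(name.replace('.', "/"))
}

/// Parse the contents of a single-integer sysctl file (e.g. "4096\n").
fn parse_sysctl_u32(contents: &str) -> Option<u32> {
    contents.trim().parse().ok()
}

/// Read an integer sysctl; returns None if the file is missing or malformed.
fn read_sysctl_u32(name: &str) -> Option<u32> {
    fs::read_to_string(sysctl_path(name))
        .ok()
        .and_then(|s| parse_sysctl_u32(&s))
}

fn main() {
    match read_sysctl_u32("net.core.somaxconn") {
        Some(v) => println!("net.core.somaxconn = {v}"),
        None => println!("net.core.somaxconn is not available here"),
    }
}
```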

Analyzing the Accept Queue with an Example

If we run the example listen-not-accept, which sets the backlog to 1 without calling accept, and then use telnet to connect to it, we can use the ss command to inspect the queue for that socket.

```shell
# server
$ cargo run --bin listen-not-accept
Socket file descriptor: 3

# telnet
$ telnet 127.0.0.1 6797
Trying 127.0.0.1...
Connected to 127.0.0.1.
Escape character is '^]'.
```

Using ss:

```
State   Recv-Q  Send-Q  Local Address:Port  Peer Address:Port  Process
LISTEN  1       1       127.0.0.1:6797      0.0.0.0:*
```

- Recv-Q: The current size of the accept queue, that is, the number of completed connections waiting for the application to call accept(). Since we have one connection from telnet and the application is not calling accept, we see a value of 1.
- Send-Q: The maximum length of the accept queue, which corresponds to the backlog size we set. In this case, it is 1.
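For monitoring, these two columns can also be extracted programmatically. This is a minimal sketch that assumes the default `ss` column order for a LISTEN line, exactly as in the output above; `parse_ss_listen_line` and `ListenQueueStats` are hypothetical names.

```rust
/// Accept-queue figures for one listening socket, as printed by `ss`.
#[derive(Debug, PartialEq)]
struct ListenQueueStats<'a> {
    local: &'a str,
    recv_q: u32, // current accept-queue length
    send_q: u32, // accept-queue limit (the backlog)
}

/// Parse a line such as "LISTEN 1 1 127.0.0.1:6797 0.0.0.0:*".
/// Assumes the column order State, Recv-Q, Send-Q, Local, Peer;
/// returns None for non-LISTEN lines or malformed input.
fn parse_ss_listen_line(line: &str) -> Option<ListenQueueStats<'_>> {
    let mut cols = line.split_whitespace();
    if cols.next()? != "LISTEN" {
        return None;
    }
    let recv_q = cols.next()?.parse().ok()?;
    let send_q = cols.next()?.parse().ok()?;
    let local = cols.next()?;
    Some(ListenQueueStats { local, recv_q, send_q })
}

fn main() {
    let line = "LISTEN 1 1 127.0.0.1:6797 0.0.0.0:*";
    match parse_ss_listen_line(line) {
        Some(s) => println!("{}: accept queue {} (backlog {})", s.local, s.recv_q, s.send_q),
        None => println!("not a LISTEN line"),
    }
}
```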

Testing Backlog Behavior on Linux

We are going to execute the following code to dynamically test the backlog configuration:

```rust
use std::os::fd::AsRawFd;
use std::str::FromStr;

use nix::sys::socket::{
    bind, connect, listen, socket, AddressFamily, Backlog, SockFlag, SockProtocol, SockType,
    SockaddrIn,
};

fn main() {
    println!("Testing backlog...");

    for backlog_size in -1..5 {
        // A fresh listening socket for each backlog value.
        let server_socket = socket(
            AddressFamily::Inet,
            SockType::Stream,
            SockFlag::empty(),
            SockProtocol::Tcp,
        )
        .expect("Failed to create server socket");

        let server_address = SockaddrIn::from_str("127.0.0.1:8080").expect("...");
        bind(server_socket.as_raw_fd(), &server_address).expect("...");
        listen(&server_socket, Backlog::new(backlog_size).unwrap()).expect("...");

        // Keep every connected client alive so its connection stays queued.
        let mut successful_connections = vec![];
        let mut attempts = 0;

        loop {
            let client_socket = socket(
                AddressFamily::Inet,
                SockType::Stream,
                SockFlag::empty(),
                SockProtocol::Tcp,
            )
            .expect("...");

            attempts += 1;

            // Nobody calls accept(), so connect() eventually fails once the queue is full.
            match connect(client_socket.as_raw_fd(), &server_address) {
                Ok(_) => successful_connections.push(client_socket),
                Err(_) => break,
            }
        }

        println!(
            "Backlog {} successful connections: {} - attempts: {}",
            backlog_size,
            successful_connections.len(),
            attempts
        );
    }
}
```

The key functions of the code are:

- It iterates over a range of backlog values, creating a server socket and putting it in listen mode with each backlog value.
- It then enters a loop, attempting to connect client sockets to the server socket. If a connection succeeds, it adds the client socket to the successful_connections vector; if it fails, it breaks out of the loop.

This allows us to determine the exact number of successful connections for each backlog configuration.

When we run the program, we will see output similar to the following. Note that this process may take a few minutes:

```shell
$ cargo run --bin test-backlog
Backlog -1 successful connections: 4097 - attempts: 4098
Backlog 0 successful connections: 1 - attempts: 2
Backlog 1 successful connections: 2 - attempts: 3
Backlog 2 successful connections: 3 - attempts: 4
Backlog 3 successful connections: 4 - attempts: 5
Backlog 4 successful connections: 5 - attempts: 6
```

The output above reveals two things:

- When listen receives the minimum acceptable value, -1, it falls back to the system limit: the kernel caps the backlog at net.core.somaxconn, which is 4096 here. We already know that Backlog::new returns an error if we pass MAXCONN or anything larger.
- More surprisingly, the number of successful connections is always one more than the backlog. For example, with a backlog of 3 there are 4 connections.
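The -1 case follows from how Linux treats the backlog: the kernel compares it against net.core.somaxconn as an unsigned integer and keeps the smaller of the two, so -1 wraps around to the largest possible value and ends up clamped to the system limit. Here is a std-only model of that rule; `effective_backlog` is a hypothetical name, and the somaxconn value of 4096 is an assumption matching the run above.

```rust
/// Model of Linux's backlog clamp in listen(): the requested value is treated
/// as unsigned, so negative values wrap to huge numbers, then capped at somaxconn.
fn effective_backlog(requested: i32, somaxconn: u32) -> u32 {
    let as_unsigned = requested as u32; // -1 becomes u32::MAX
    as_unsigned.min(somaxconn)
}

fn main() {
    let somaxconn = 4096; // assumed value of net.core.somaxconn
    for requested in [-1, 0, 3, 10_000] {
        let limit = effective_backlog(requested, somaxconn);
        // As observed above, Linux lets one more connection in than the limit.
        println!("listen({requested}) -> limit {limit}, up to {} queued", limit + 1);
    }
}
```

A C program passing 10000 would be silently clamped this way, whereas the nix wrapper rejects the value before listen is ever called.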

This extra connection comes from the way Linux decides whether the accept queue is full, in the sk_acceptq_is_full function shown below. sk_ack_backlog is the current number of connections in the accept queue, while sk_max_ack_backlog is our backlog value. With a backlog of 3, once three connections are queued, the check 3 > 3 evaluates to false, so the queue is not considered full and a fourth connection is allowed. Only after that does 4 > 3 mark the queue as full.

```c
static inline bool sk_acceptq_is_full(const struct sock *sk)
{
    return READ_ONCE(sk->sk_ack_backlog) > READ_ONCE(sk->sk_max_ack_backlog);
}
```
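We can replay this check in a few lines to confirm that it explains the Backlog + 1 pattern. The sketch assumes no application calls accept() and every handshake completes; `acceptq_is_full` and `connections_admitted` are hypothetical helpers that mirror the kernel expression above, not kernel APIs.

```rust
/// Mirrors the kernel check `sk_ack_backlog > sk_max_ack_backlog`.
fn acceptq_is_full(ack_backlog: u32, max_ack_backlog: u32) -> bool {
    ack_backlog > max_ack_backlog
}

/// Count how many connections get queued when nobody calls accept():
/// a new connection is admitted only while the queue is not "full".
fn connections_admitted(max_ack_backlog: u32) -> u32 {
    let mut ack_backlog = 0;
    while !acceptq_is_full(ack_backlog, max_ack_backlog) {
        ack_backlog += 1; // one more connection lands in the accept queue
    }
    ack_backlog
}

fn main() {
    for backlog in 0..5 {
        println!("backlog {backlog}: {} connections admitted", connections_admitted(backlog));
    }
}
```

For backlogs 0 through 4 it admits 1 through 5 connections, the same numbers as the Linux run above, and a limit of 4096 gives the 4097 seen for -1.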

Handling a Full Accept Queue

As our program's output shows, Linux admits Backlog + 1 connections, and the next attempt fails. For example, with a backlog of 4, five connections succeed and the sixth attempt fails. Now consider a client whose handshake completes just as the accept queue fills up: the client sends the SYN, the server responds with SYN-ACK, and the client sends an ACK back, thinking the connection is established.

Unfortunately, if the server's accept queue is full, it cannot move the connection from the SYN queue to the accept queue. The server's behavior when the accept queue overflows is primarily determined by the net.ipv4.tcp_abort_on_overflow option.

Normally, this value is set to 0, meaning that when there is an overflow, the server will discard the client's ACK packet from the third handshake, acting as if it never received it. The server will then retransmit the SYN-ACK packet. The number of retransmissions the server will attempt is determined by the net.ipv4.tcp_synack_retries option.

We can reduce or increase the tcp_synack_retries value. By default, it is set to 5 on Linux.
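The two knobs can be summarized in a small model. It is a sketch under stated assumptions: tcp_abort_on_overflow only selects between dropping the ACK and resetting the connection, and the SYN-ACK timer starts at 1 second and doubles on each retry without reaching the kernel's upper bound. `OverflowAction`, `on_full_accept_queue`, and `synack_timeline` are hypothetical names.

```rust
/// What the server does with the final ACK when the accept queue is full.
#[derive(Debug, PartialEq)]
enum OverflowAction {
    /// tcp_abort_on_overflow = 0: ignore the ACK and later retransmit the SYN-ACK.
    DropAckAndRetry,
    /// tcp_abort_on_overflow = 1: reset the connection immediately.
    SendRst,
}

fn on_full_accept_queue(tcp_abort_on_overflow: u8) -> OverflowAction {
    if tcp_abort_on_overflow == 0 {
        OverflowAction::DropAckAndRetry
    } else {
        OverflowAction::SendRst
    }
}

/// Seconds after the first SYN-ACK at which each retransmission is sent, plus
/// the time the attempt is abandoned. ASSUMES a 1 s initial timeout that
/// doubles after every retransmission, with no upper cap.
fn synack_timeline(tcp_synack_retries: u32) -> (Vec<u64>, u64) {
    let mut elapsed = 0u64;
    let mut timeout = 1u64;
    let mut retransmissions = Vec::new();
    for _ in 0..tcp_synack_retries {
        elapsed += timeout; // timer expires without an accepted ACK
        retransmissions.push(elapsed);
        timeout *= 2; // exponential backoff
    }
    (retransmissions, elapsed + timeout) // one final timeout, then give up
}

fn main() {
    println!("{:?}", on_full_accept_queue(0));
    let (sends, give_up) = synack_timeline(5); // 5 is the Linux default
    println!("SYN-ACK retransmitted at {sends:?} s, abandoned at {give_up} s");
}
```

With the default of 5 retries, the model retransmits at 1, 3, 7, 15, and 31 seconds and gives up at 63 seconds, which lines up with the figures in the kernel's ip-sysctl documentation.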

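In this scenario, once the retransmission limit is reached, the connection is removed from the SYN queue, and the client receives an error. The failing attempt in our test probably never gets that far: on recent Linux kernels, a SYN that arrives while the accept queue is already full is usually dropped before any SYN-ACK is sent, so the client keeps retransmitting its SYN until net.ipv4.tcp_syn_retries is exhausted, which would explain why the test takes minutes. Again, this is an oversimplified overview of the process. The actual process is much more complex and involves additional steps and scenarios. However, for our purposes, we can keep things simpler.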

By running the command netstat -s | grep "overflowed", we can check how often socket listen queues have overflowed. In my case, the output reports "433 times the listen queue of a socket overflowed", meaning that on 433 occasions the system's accept queue could not take an incoming connection, which clients experience as delayed or failed connections.

```shell
$ netstat -s | grep "overflowed"
433 times the listen queue of a socket overflowed
```
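On Linux, the same counter can also be read from /proc/net/netstat, where, as far as we know, it appears as ListenOverflows in the TcpExt section (next to ListenDrops). The sketch below assumes that layout of paired name and value lines sharing the `TcpExt:` prefix; `tcp_ext_counter` is a hypothetical helper.

```rust
use std::fs;

/// Look up a counter in the `TcpExt:` section of /proc/net/netstat.
/// The section is a header line of field names followed by a line of values.
fn tcp_ext_counter(netstat: &str, field: &str) -> Option<u64> {
    let mut lines = netstat.lines().filter(|l| l.starts_with("TcpExt:"));
    let names = lines.next()?;
    let values = lines.next()?;
    names
        .split_whitespace()
        .zip(values.split_whitespace())
        .find(|(name, _)| *name == field)
        .and_then(|(_, value)| value.parse().ok())
}

fn main() {
    match fs::read_to_string("/proc/net/netstat") {
        Ok(text) => {
            for field in ["ListenOverflows", "ListenDrops"] {
                println!("{field}: {:?}", tcp_ext_counter(&text, field));
            }
        }
        Err(e) => println!("cannot read /proc/net/netstat: {e}"),
    }
}
```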

Testing Backlog Behavior on Mac

If we execute the same program on a Mac, we will observe some differences:

- As mentioned, on other systems the minimum acceptable value is 0. Using this value gives us the default setting.
- The default value of SOMAXCONN is 128, which can be retrieved using the command sysctl kern.ipc.somaxconn.
- Lastly, on a Mac, we don't see Backlog + 1 connections. Instead, the server accepts exactly the number of connections specified by the backlog.

```shell
$ cargo run --bin test-backlog
Backlog 0 successful connections: 128 - attempts: 129
Backlog 1 successful connections: 1 - attempts: 2
Backlog 2 successful connections: 2 - attempts: 3
Backlog 3 successful connections: 3 - attempts: 4
Backlog 4 successful connections: 4 - attempts: 5
```

SYN Queue Size Considerations

As the listen man page states, the maximum length of the queue for incomplete sockets (the SYN queue) can be set using the tcp_max_syn_backlog parameter (/proc/sys/net/ipv4/tcp_max_syn_backlog). However, when syncookies are enabled, there is no logical maximum length, and this setting is ignored. Other factors also influence the queue's effective size, which is why we said earlier that its size is determined by system-wide settings rather than by the application.

You can find the code for this example and future ones in this repo.

To Conclude

In this article, we explored the role of TCP queues in connection management, focusing on the SYN queue and the accept queue. We tested various backlog configurations and examined how different settings impact connection handling. Additionally, we discussed system parameters like tcp_max_syn_backlog and net.ipv4.tcp_abort_on_overflow, and their effects on queue behavior.

Understanding these concepts is crucial for optimizing server performance and managing high traffic scenarios. By running practical tests and using system commands, we gained insights into how to handle connection limits effectively.

As we move forward, keeping these fundamentals in mind will help ensure robust and efficient network communication.

Thank you for reading along. This blog is a part of my learning journey and your feedback is highly valued. There's more to explore and share about sockets and networking, so stay tuned for upcoming posts. Your insights and experiences are welcome as we learn and grow together in this domain. Happy coding!


Douglas Makey Mendez Molero | Sciencx (2024-06-21T22:31:03+00:00) Networking and Sockets: Syn and Accept queue. Retrieved from https://www.scien.cx/2024/06/21/networking-and-sockets-syn-and-accept-queue/
