This content originally appeared on Level Up Coding - Medium and was authored by Benjamin Dornel

Turn your web app into a versatile desktop application

Introduction

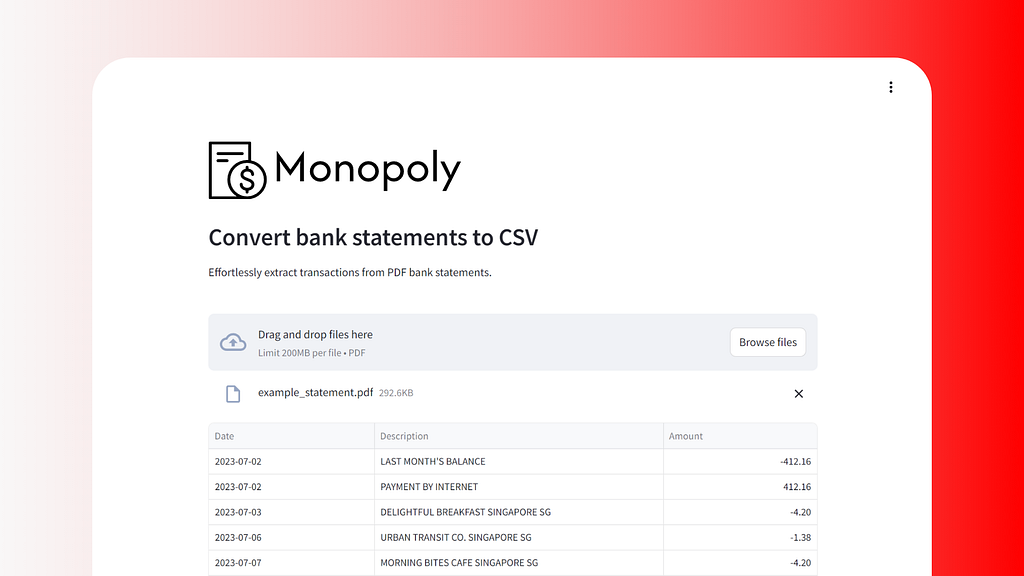

So, imagine that you have a Streamlit application. It’s nice, sleek and works well online. There’s only one small problem — for some reason or other, you need to run it offline.

There are several good reasons for this. For example, your application might be a bank statement parser, which deals with sensitive financial information.

At this point, you might feel like there’s no other solution but to rewrite your application from scratch with JavaScript and React (which is tough unless you have frontend experience). Luckily, there’s another path forward.

In this article, I’m going to cover:

- How to turn your Streamlit app into an executable using PyInstaller.

- How to use Tauri with your PyInstaller executable to create an offline, desktop application.

Creating an Executable

The first step is to create an executable file from your Streamlit application. This process is the equivalent of creating a Python virtual environment, installing your dependencies, and then running:

streamlit run app.py

This launches a local Streamlit server at http://localhost:8501.

Sounds simple enough, but this step can be deceptively tricky.

I’ll go ahead and cover some approaches that you might commonly find online.

The Nativefier/Pake Approach

If you’re unfamiliar with Nativefier, it’s a command-line tool that allows you to easily create a “desktop app” for any website.

Usage is fairly straightforward:

npm install -g nativefier

nativefier --name 'monopoly' 'https://monopoly.streamlit.app/'

However, this still requires an internet connection, and is simply a wrapper for a Chromium browser that accesses your application online.

It’s also deprecated. A good drop-in replacement is Pake, which has the same functionality but uses Rust + Tauri (more on this later) instead of JavaScript + Electron, leading to a vastly smaller executable.

TL;DR: this approach gives you an “online” desktop app.

The Stlite Approach

Stlite is a version of Streamlit that can run directly in your web browser thanks to WebAssembly (a technology that allows code to run in the browser at near-native speed) and Pyodide (a tool that brings Python to the browser).

This approach uses npm and electron under the hood and allows you to add both system dependencies and Python dependencies. You can find the guide to do this here.

I think it’s a good solution that I would recommend to users. There’s quite a bit of customizability built in, and a focus on security which I like (although this means that you can’t link to external resources outside your app).

However, this approach might not work if you have Python dependencies that are not “pure” Python.

For example, my application uses PyMuPDF¹ and pdftotext, which are Python wrappers around C/C++ libraries. To get this to work with Pyodide, these dependencies need to be manually compiled to get a wheel that is compatible with WASM (more details here).

Other “Python to X” approaches (Pyoxidizer, Nuitka, etc)

There are several other projects that take a different route to a standalone binary: Pyoxidizer embeds a Python interpreter and your code inside a Rust-built executable, while Nuitka translates your Python code to C and compiles it.

This theoretically should lead to performance improvements, especially for Nuitka which focuses heavily on areas like statically inferring types, constant folding and propagation (calculating constant expressions at compile time and replacing variables with their constant values), and removing dead code (anti-bloat).

I’d recommend Nuitka over Pyoxidizer, since Pyoxidizer appears to be inactive. If you don’t have complex dependencies to manage, this might be a sound approach. Unfortunately, this didn’t work for me, since the compiler kept timing out during the compilation process.

The PyInstaller Approach

PyInstaller is similar to projects like Nuitka and Pyoxidizer, but doesn’t try to convert your Python code to any other language. It simply analyzes your application to determine all dependencies and recursively identifies all modules and packages used. It then “freezes” these dependencies, and converts them to Python byte code (.pyc files), which are accessed at runtime.

This approach is great since beyond a frozen copy of a Python interpreter, it also includes your Python scripts and modules, as well as third-party libraries that require external binaries.

To create an executable for your Streamlit application, you’ll need to follow these steps.

First, you’ll need to create an entry point for PyInstaller:

import os
import sys
from pathlib import Path

import streamlit.web.cli as stcli


def resolve_path(path: str) -> str:
    base_path = getattr(sys, "_MEIPASS", os.getcwd())
    return str(Path(base_path) / path)


if __name__ == "__main__":
    sys.argv = [
        "streamlit",
        "run",
        resolve_path("app.py"),
        "--global.developmentMode=false",
        "--server.headless=true",
    ]
    sys.exit(stcli.main())

This entrypoint allows you to run Streamlit programmatically and pass arguments that do things like turning off Streamlit’s development mode. This is important, because when running your executable, Streamlit will automatically default to development mode since PyInstaller doesn’t create regular Python directories like site-packages, dist-packages, or __pypackages__. If development mode is enabled, Streamlit will not launch a webserver for your application.

You’ll also want to enable headless mode, to prevent a message like this from blocking your application during startup:

Welcome to Streamlit!

If you are one of our development partners or are interested in

getting personal technical support, please enter your email address

below. Otherwise, you may leave the field blank.

Email:

Using PyInstaller also means that your bundled files will not be stored at their usual paths. A file that would normally live at <venv>/site-packages/streamlit/runtime/... instead lives under sys._MEIPASS, which stores the location of the unpacked files at runtime. This is why the entry point resolves app.py through resolve_path().
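
To make the path handling concrete, here is a minimal sketch of how the base directory can be chosen at runtime. It relies on the sys.frozen and sys._MEIPASS attributes that PyInstaller’s bootloader sets; bundle_root is a hypothetical helper name, not something from the original entry point.

```python
import os
import sys
from pathlib import Path


def bundle_root() -> Path:
    """Return the directory that bundled files are resolved against."""
    # PyInstaller's bootloader sets sys.frozen and sys._MEIPASS at runtime.
    if getattr(sys, "frozen", False) and hasattr(sys, "_MEIPASS"):
        return Path(sys._MEIPASS)
    # Not frozen: fall back to the working directory, as resolve_path() does.
    return Path(os.getcwd())


def resolve_path(relative: str) -> str:
    """Resolve a bundled file relative to the bundle root."""
    return str(bundle_root() / relative)


if __name__ == "__main__":
    print("frozen:", getattr(sys, "frozen", False))
    print("app.py resolves to:", resolve_path("app.py"))
```

Run as a normal script, this resolves app.py against the current directory; inside the frozen executable it should resolve against the temporary unpack directory instead.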

With the entry point out of the way, you’ll then need to create a PyInstaller hook for Streamlit:

# hooks/hook-streamlit.py
import site

from PyInstaller.utils.hooks import (
    collect_data_files,
    collect_submodules,
    copy_metadata,
)

site_packages_dir = site.getsitepackages()[0]

# Bundle Streamlit's runtime directory alongside the frozen app.
datas = [(f"{site_packages_dir}/streamlit/runtime", "./streamlit/runtime")]
# Use += here: a plain assignment would discard the runtime entry above.
datas += copy_metadata("streamlit")
datas += collect_data_files("streamlit")
hiddenimports = collect_submodules("streamlit")

This tells PyInstaller to add Streamlit to the executable, and also ensures that static files that Streamlit needs to run, like streamlit/static/index.html, are copied over. This should also deal with strange errors like ModuleNotFoundError: No module named 'streamlit.runtime.scriptrunner.magic_funcs' that might otherwise appear.

With that done, you can then generate the PyInstaller .spec file:

pyinstaller --additional-hooks-dir=./hooks --clean entrypoint.py

The .spec file tells PyInstaller how to process your script. You might have to add additional hiddenimports if PyInstaller isn’t able to find certain dependencies, or otherwise import extra files/metadata for certain dependencies.
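
One way to find out which hiddenimports you still need is to ask the import system directly. The sketch below uses only the standard library; missing_modules is a hypothetical helper, and calling it from a debug build of your frozen entry point is my assumption about how you might use it, not part of the original setup.

```python
import importlib.util


def missing_modules(names):
    """Return the module names that the import system cannot locate."""
    missing = []
    for name in names:
        try:
            # For dotted names, find_spec imports the parent package first.
            spec = importlib.util.find_spec(name)
        except (ImportError, ValueError):
            # Raised when a parent package of a dotted name is itself missing.
            spec = None
        if spec is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Example names taken from the article; adjust to your own dependencies.
    print(missing_modules(["streamlit.runtime.scriptrunner.magic_funcs", "pybadges"]))
```

Any name it reports is a candidate for hiddenimports or for a collect_submodules() call in the .spec file.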

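You can generate a single executable by removing the coll = COLLECT(...) block, dropping exclude_binaries=True, and passing a.binaries and a.datas to EXE(...) directly. This is the layout that PyInstaller’s --onefile option generates.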

For reference, my .spec file looks something like this:

# -*- mode: python ; coding: utf-8 -*-
from PyInstaller.utils.hooks import collect_submodules

hiddenimports = []
hiddenimports += collect_submodules("monopoly_streamlit")
hiddenimports += collect_submodules("pybadges")

a = Analysis(
    ['entrypoint.py'],
    pathex=[],
    binaries=[],
    datas=[],
    hiddenimports=hiddenimports,
    hookspath=['./hooks'],
    hooksconfig={},
    runtime_hooks=[],
    excludes=[],
    noarchive=False,
    optimize=0,
)
pyz = PYZ(a.pure)

exe = EXE(
    pyz,
    a.scripts,
    [],
    exclude_binaries=True,
    name='entrypoint',
    debug=False,
    bootloader_ignore_signals=False,
    strip=False,
    upx=True,
    console=True,
    disable_windowed_traceback=False,
    argv_emulation=False,
    target_arch=None,
    codesign_identity=None,
    entitlements_file=None,
)
coll = COLLECT(
    exe,
    a.binaries,
    a.datas,
    strip=False,
    upx=True,
    upx_exclude=[],
    name='entrypoint',
)

You can then build the executable with:

pyinstaller entrypoint.spec

Then run your application from your terminal:

chmod +x dist/entrypoint

./dist/entrypoint

An important caveat is that this executable is actually a self-extracting archive, which unpacks its files to a temporary directory on disk at runtime. This makes it slower to launch than PyInstaller’s --onedir mode².

This executable will also only work on the operating system that it was created on. For example, if you created the executable on Windows, it will only run on Windows and not on macOS or Linux.

You should now have an executable that launches a Streamlit webserver on port 8501. However, this is not ideal since your user will need to open their browser, and navigate to http://localhost:8501 which may not be intuitive for someone not tech-savvy.

But what if you want the application to automatically open in a browser window?

Enter Tauri

Tauri is a framework for building cross-platform desktop applications, written in Rust. Unlike Electron, which bundles its own Chromium engine, Tauri uses the system’s native web renderer (WebView2 on Windows, WebKit on macOS and Linux). This allows for more efficient memory and CPU usage, and results in smaller applications since no browser engine is shipped with them.

If you tried out Nativefier earlier, you’ll see that the executable easily goes up to 100–200 megabytes — and this is before any external binaries or dependencies are bundled.

To get started, you’ll need to install pnpm:

curl -fsSL https://get.pnpm.io/install.sh | sh -

You can then create a Tauri project in the directory where your Streamlit project is located with:

pnpm create tauri-app --beta

To run the example application:

cd tauri-app

pnpm install

pnpm tauri dev

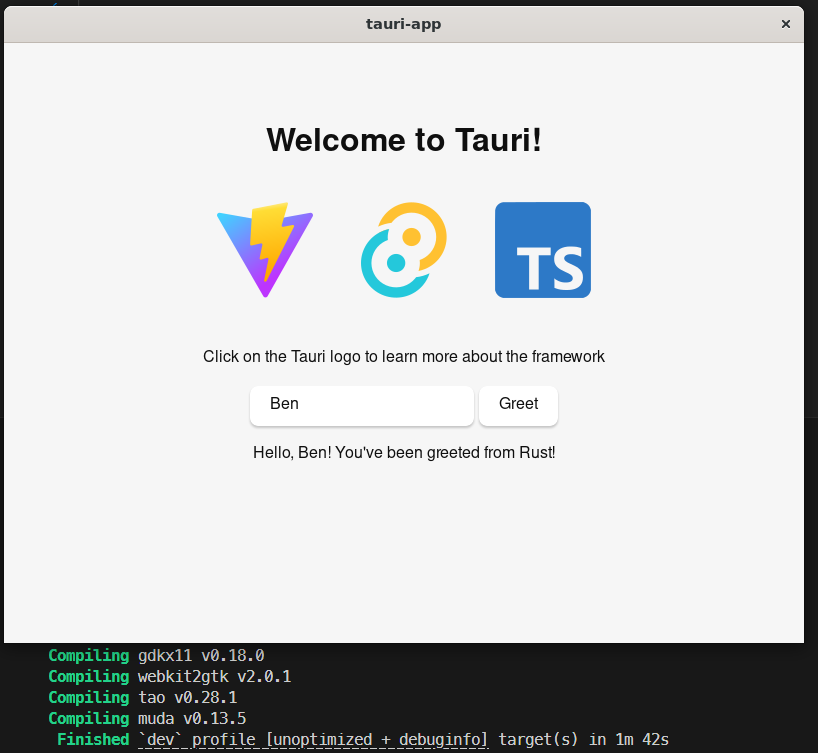

If you’ve done these steps correctly, you should see a screen like this:

To give a quick breakdown of the files you’ll now see in the project:

- index.html: Entry point for your web application; initializes rendering of web content.

- node_modules: Directory containing Node.js packages and dependencies.

- package.json: Project manifest with metadata, scripts, and dependencies.

- pnpm-lock.yaml: pnpm lock file that ensures consistent dependency versions across environments.

- src: Stores the source code for the front-end application (JavaScript/TypeScript, CSS etc.)

- src-tauri: Tauri-specific source code, configurations, and Rust backend.

- tsconfig.json: TypeScript configuration file; sets compiler options.

- vite.config.ts: Configuration for Vite, a frontend bundler and build tool that provides various quality-of-life features like hot reloading, and also converts your source code into optimized HTML, CSS and JavaScript when building for production.

If you’re coming from Python and aren’t familiar with Rust, don’t worry. The backend code itself isn’t very complex. If you look at the code powering the example application at tauri-app/src-tauri/src/main.rs, it’s actually fairly simple:

#[tauri::command]
fn greet(name: &str) -> String {
    format!("Hello, {}! You've been greeted from Rust!", name)
}

fn main() {
    tauri::Builder::default()
        .plugin(tauri_plugin_shell::init())
        .invoke_handler(tauri::generate_handler![greet])
        .run(tauri::generate_context!())
        .expect("error while running tauri application");
}

The above code simply defines a greet function, then spins up Tauri and then registers the greet() command to handle frontend invocations.

The rest of the greet() logic is stored in a TypeScript file at tauri-app/src/main.ts. I’ll skip explaining this in detail since we don’t need this functionality to launch our Streamlit app. Basically, this file contains TypeScript code that links the frontend to the Rust backend, and invokes the greet() command in the backend when the greet button is clicked.

So, you’re probably wondering where Streamlit fits into this picture.

It turns out that Tauri has a feature that allows you to embed external binaries or a “sidecar”. This allows us to use Tauri’s Rust backend to call the executable we created earlier, which essentially spins up a webserver. Neat, huh?

You’ll need to add an externalBin array to the bundle key in your tauri.conf.json file which tells Tauri where to find your executable.

For reference, my configuration file looks something like this:

{
  "productName": "monopoly",
  "version": "0.3.4",
  ...
  "bundle": {
    "active": true,
    "targets": "all",
    "icon": [
      "icons/32x32.png",
      "icons/128x128.png",
      "icons/128x128@2x.png",
      "icons/icon.icns",
      "icons/icon.ico"
    ],
    "externalBin": [
      "binaries/monopoly"
    ]
  }
}

Tauri then expects a binary with your host triplet appended as a suffix to be stored in the binaries folder.

To find your host triplet:

# install rust

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

# find host triplet

export HOST_TRIPLET=$(rustc -Vv | grep host | cut -f2 -d' ')
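
If grep and cut are not available (for example in a plain Windows shell), the same lookup can be done from Python. This is a minimal sketch that parses the "host:" line of rustc -Vv; parse_host_triple and host_triple are hypothetical helper names, and rustc is assumed to be on your PATH.

```python
import subprocess


def parse_host_triple(rustc_verbose: str) -> str:
    """Extract the value of the 'host:' line from `rustc -Vv` output."""
    for line in rustc_verbose.splitlines():
        if line.startswith("host:"):
            return line.split(":", 1)[1].strip()
    raise ValueError("no 'host:' line found in rustc output")


def host_triple() -> str:
    """Run `rustc -Vv` (rustc must be on PATH) and return the host triple."""
    output = subprocess.run(
        ["rustc", "-Vv"], capture_output=True, text=True, check=True
    ).stdout
    return parse_host_triple(output)
```

Calling host_triple() on the machine from the article would be expected to give the same value as $HOST_TRIPLET above.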

Then copy your executable from earlier:

export APP_NAME=<this should be whatever your app is called>

mkdir tauri-app/src-tauri/binaries

cp dist/entrypoint tauri-app/src-tauri/binaries/$APP_NAME-$HOST_TRIPLET

This should result in a file tree like this:

├── src-tauri

│ ├── binaries

│ │ └── monopoly-x86_64-unknown-linux-gnu
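
The naming rule can be written down as a small helper. This is a sketch under one assumption that is not stated in the article: on Windows targets, the sidecar keeps its .exe extension after the triplet, which is my understanding of Tauri’s sidecar convention. sidecar_path is a hypothetical name.

```python
from pathlib import Path


def sidecar_path(binaries_dir: str, app_name: str, triple: str) -> Path:
    """Build the file name Tauri looks up for a sidecar on a given target."""
    # Assumption: Windows executables keep their .exe extension after the triplet.
    extension = ".exe" if "windows" in triple else ""
    return Path(binaries_dir) / f"{app_name}-{triple}{extension}"


if __name__ == "__main__":
    print(sidecar_path("tauri-app/src-tauri/binaries", "monopoly", "x86_64-unknown-linux-gnu"))
```

The returned path is where the PyInstaller executable would be copied for that target.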

You can then invoke the binary in your code, and get the Tauri browser window to access your Streamlit application:

// src-tauri/src/main.rs
use std::env;
use std::time::Duration;

use tauri::Manager;
use tauri_plugin_shell::ShellExt;
use tokio::time::sleep;

pub fn run() {
    tauri::Builder::default()
        .plugin(tauri_plugin_shell::init())
        .setup(|app| {
            let main_window = app.get_webview_window("main").unwrap();
            let sidecar = app.shell().sidecar("monopoly").unwrap();

            tauri::async_runtime::spawn(async move {
                let (mut _rx, mut _child) = sidecar.spawn().expect("Failed to spawn sidecar");

                // wait for the streamlit server to start
                sleep(Duration::from_secs(5)).await;

                main_window
                    .eval("window.location.replace('http://localhost:8501');")
                    .expect("Failed to load the URL in the main window");
            });

            Ok(())
        })
        .run(tauri::generate_context!())
        .expect("error while running tauri application");
}

This is pretty similar to the example code, except that we’re loading in our external binary as a sidecar. Tauri is smart enough to look for the correct binary, so when you call app.shell().sidecar("monopoly"), it detects that my host triplet is x86_64-unknown-linux-gnu and looks for monopoly-x86_64-unknown-linux-gnu in the binaries folder.

To avoid a premature HTTP 404 error, we wait 5 seconds for the server to spin up, then tell the browser window to navigate to http://localhost:8501.

Great! We’re done. You can then build your project with pnpm tauri build, and then run your application at src-tauri/target/release/bundle/<target>/<app>.

Naturally, there are further improvements that could be made to the above code. Instead of waiting for a pre-determined amount of time, you could also poll localhost to check for a response, and then load the server when ready.
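
The polling idea is sketched below in Python for brevity; in the Tauri app the same loop would live in the Rust setup code in place of the fixed sleep. wait_for_server and its defaults are hypothetical, and a successful TCP connection only shows that the port is accepting connections, not that Streamlit has finished loading your script.

```python
import socket
import time


def wait_for_server(host: str = "localhost", port: int = 8501,
                    timeout: float = 30.0, interval: float = 0.25) -> bool:
    """Poll until a TCP connection to host:port succeeds or timeout elapses."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: wait briefly and try again.
            time.sleep(interval)
    return False
```

Compared to a fixed five-second wait, this returns as soon as the server is reachable and gives you an explicit failure case to handle if it never comes up.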

You’ll also want to build a way to clean up the sidecar process. Theoretically, this should be handled by Tauri already, but it is common for the sidecar process to keep running even after the app is closed. If your user opens and closes the application several times, they would end up with multiple processes, which iterate over ports (8501, 8502, 8503 etc.) since Streamlit uses the next available port if the current one is taken.

You could also add a loading splash screen, which is slightly involved and therefore something I won’t get into in this article.

If you’re interested in the polling, automated process cleanup, or splash screen, I have something here that you can refer to.

Here’s what a complete iteration of a Streamlit + Tauri application might look like:

Cross-Platform Compilation

Unfortunately, cross-platform compilation isn’t possible with PyInstaller and Tauri.

A simple way around this is to use a CI/CD pipeline like GitHub Actions that gives you access to runners with different operating systems.

Specifically, GitHub Actions has a matrix strategy that lets you use variables in a single job definition to automatically create multiple job runs that are based on the combinations of the variables.

This could look something like the following:

name: Build App & Release

on:
  push:
    tags:
      - "v*.*.*"
  workflow_dispatch:

defaults:
  run:
    shell: bash

jobs:
  build:
    runs-on: ${{ matrix.os-target.os }}
    strategy:
      matrix:
        os-target:
          - os: ubuntu-latest
            target: x86_64-unknown-linux-gnu
          - os: windows-latest
            target: x86_64-pc-windows-msvc
          - os: macos-latest
            target: x86_64-apple-darwin
          - os: macos-latest
            target: aarch64-apple-darwin

    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Setup Conda (windows)
        if: matrix.os-target.os == 'windows-latest'
        uses: s-weigand/setup-conda@v1
        with:
          activate-conda: false

      - name: Install pdftotext dependencies (windows)
        if: matrix.os-target.os == 'windows-latest'
        run: |
          conda install -c conda-forge poppler

      - name: Setup brew (mac)
        if: matrix.os-target.os == 'macos-latest'
        uses: Homebrew/actions/setup-homebrew@master

      - name: Install pdftotext dependencies (mac)
        if: matrix.os-target.os == 'macos-latest'
        run: brew install poppler

      - name: Install pdftotext dependencies (ubuntu)
        if: matrix.os-target.os == 'ubuntu-latest'
        uses: daaku/gh-action-apt-install@v4
        with:
          packages: build-essential libpoppler-cpp-dev pkg-config

      - name: Install Rust
        uses: dtolnay/rust-toolchain@stable
        with:
          toolchain: stable
          target: ${{ matrix.os-target.target }}

      - name: Install Monopoly dependencies
        uses: ./.github/actions/setup-python-poetry
        with:
          python-version: "3.11"
          poetry-version: "1.8.3"

      - name: Create executable with pyinstaller
        run: poetry run pyinstaller entrypoint.spec

      - name: Copy executable to tauri binaries
        run: cp ./dist/entrypoint ./tauri/src-tauri/binaries/monopoly-${{ matrix.os-target.target }}

      - uses: Swatinem/rust-cache@v2
        with:
          cache-on-failure: true
          workspaces: tauri/src-tauri

      - name: Install tauri dependencies (ubuntu)
        if: matrix.os-target.os == 'ubuntu-latest'
        run: |
          sudo apt-get update
          sudo apt-get install -y javascriptcoregtk-4.1 libsoup-3.0 webkit2gtk-4.1

      - name: Install pnpm
        uses: pnpm/action-setup@v4
        with:
          version: 9.0.6
          run_install: false

      - name: Install tauri
        working-directory: tauri
        run: pnpm install

      - name: Build tauri app
        working-directory: tauri/src-tauri/
        run: pnpm tauri build -t ${{ matrix.os-target.target }}

      - name: Upload tauri app
        id: artifact_upload
        uses: actions/upload-artifact@v4
        with:
          name: monopoly-${{ matrix.os-target.target }}
          path: |
            tauri/src-tauri/target/${{ matrix.os-target.target }}/release/bundle/appimage/*.AppImage
            tauri/src-tauri/target/${{ matrix.os-target.target }}/release/bundle/nsis/
            tauri/src-tauri/target/${{ matrix.os-target.target }}/release/bundle/dmg/
          compression-level: 9

That’s it for this article! I hope it was helpful for you. If you have any feedback or questions, feel free to reach out to me on LinkedIn. Otherwise, if you’re looking for my Streamlit + Tauri app, you can find it here.

Notes

1: PyMuPDF does have some support for Pyodide, but you still need to build it yourself. Unfortunately, micropip.install() doesn’t work because PyMuPDF also uses Emscripten. This means that at any point in time, the versions of Emscripten used by Pyodide and PyMuPDF will most likely differ, leading to build-time errors when you try to compile the wheel.

2: You can also use PyInstaller’s --onedir mode with Tauri, but you’ll need to embed the internal files and the executable, which is doable but not as straightforward as using a single binary.

Building an Offline Streamlit Application with Tauri was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

Benjamin Dornel | Sciencx (2024-06-30T18:08:49+00:00) Building an Offline Streamlit Application with Tauri. Retrieved from https://www.scien.cx/2024/06/30/building-an-offline-streamlit-application-with-tauri/