This content originally appeared on HackerNoon and was authored by Duy Huynh

Hey mate, I recently tried to add something like the ChatGPT Code Interpreter plugin to my LLM application. I found many libraries and open-source options, but they seemed complicated to set up, and some required credits for cloud computing. So I decided to develop my own library for this feature, one that can run locally or on my private cloud.

Let me introduce you to LLM Sandbox, a simple way to execute code generated by large language models (LLMs) in an isolated environment. Whether you’re an AI researcher, developer, or hobbyist, LLM Sandbox makes it easy to integrate code interpreters into your LLM applications while limiting the risk to the security and stability of your host system.

What is LLM Sandbox?

LLM Sandbox is a lightweight and portable environment designed to run LLM-generated code in a secure and isolated manner using Docker containers. With its easy-to-use interface, you can set up, manage, and execute code within a controlled Docker environment, streamlining the process of running code generated by LLMs.

Key Features

• Easy Setup: Create sandbox environments with minimal configuration.

• Isolation: Run code in isolated Docker containers to protect your host system.

• Flexibility: Support for multiple programming languages, including Python, Java, JavaScript, C++, Go, and Ruby.

• Portability: Use predefined Docker images or custom Dockerfiles.

• Scalability: Integrate with Kubernetes and remote Docker hosts.

Getting Started

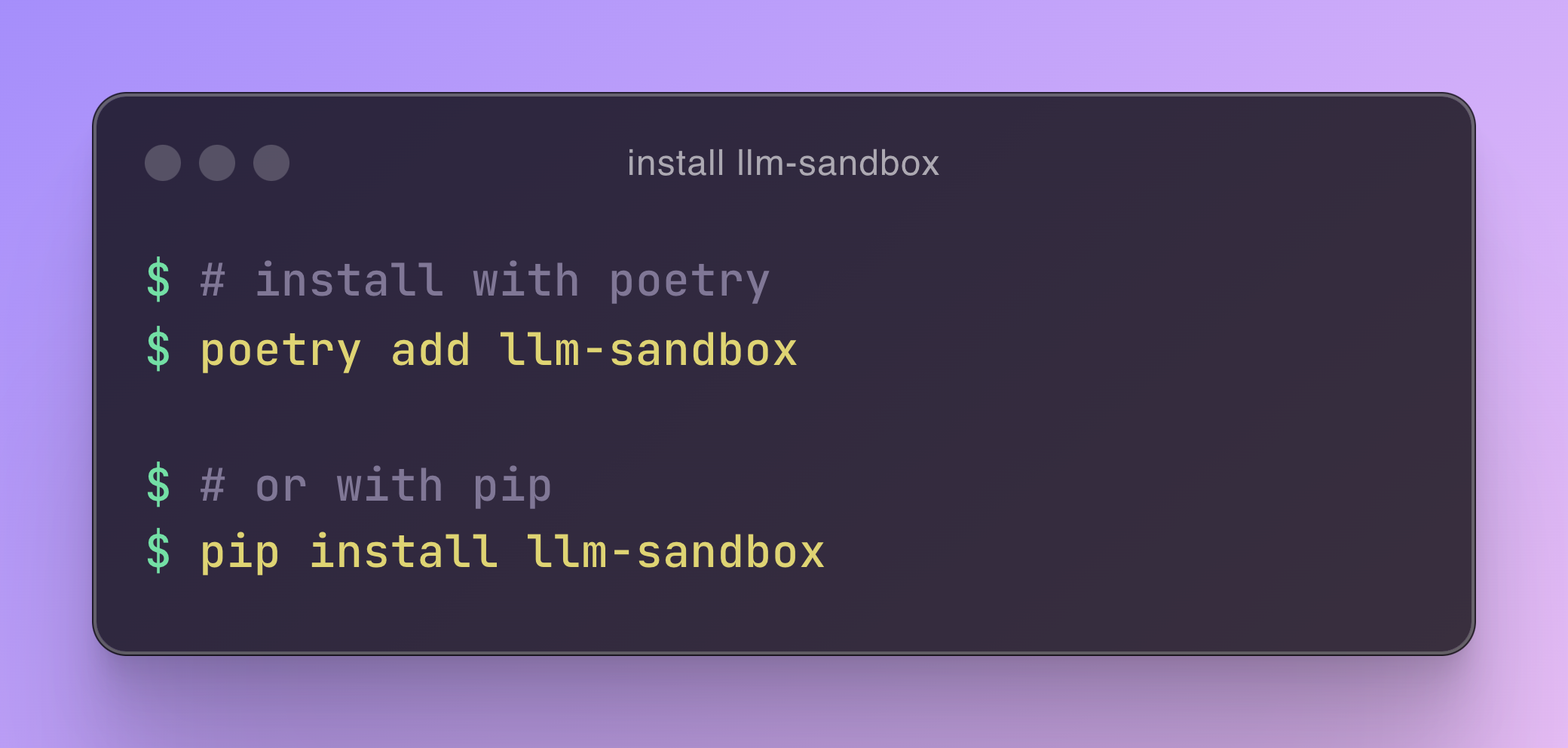

You can install LLM Sandbox with either Poetry or pip, using the commands below:

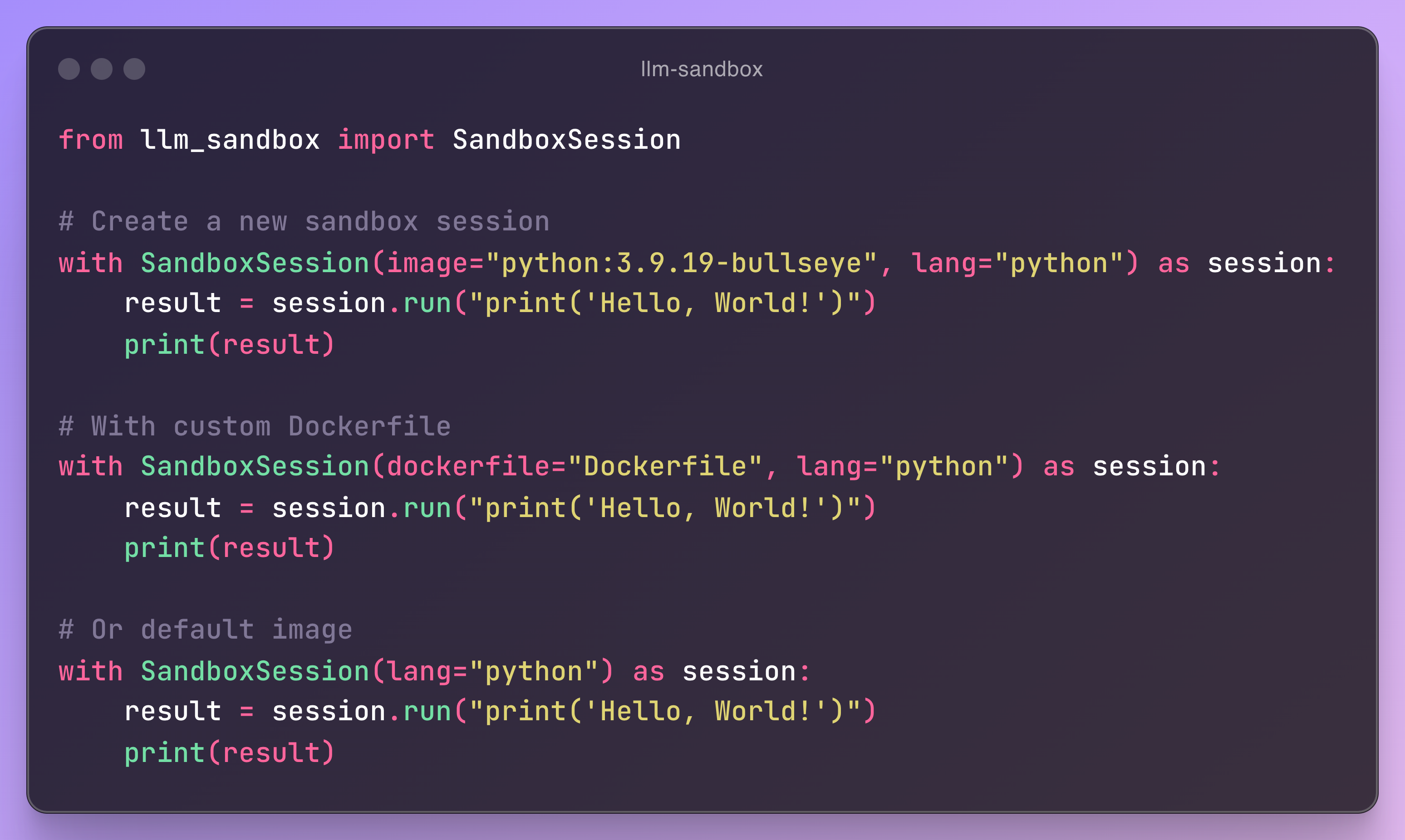

The SandboxSession class manages the lifecycle of the sandbox environment, including the creation and destruction of Docker containers.

Here’s how you can use it:

- Initialization: Create a `SandboxSession` object with the desired configuration.
- Open Session: Call the `open()` method to build/pull the Docker image and start the Docker container.
- Run Code: Use the `run()` method to execute code inside the sandbox.
- Close Session: Call the `close()` method to stop and remove the Docker container.

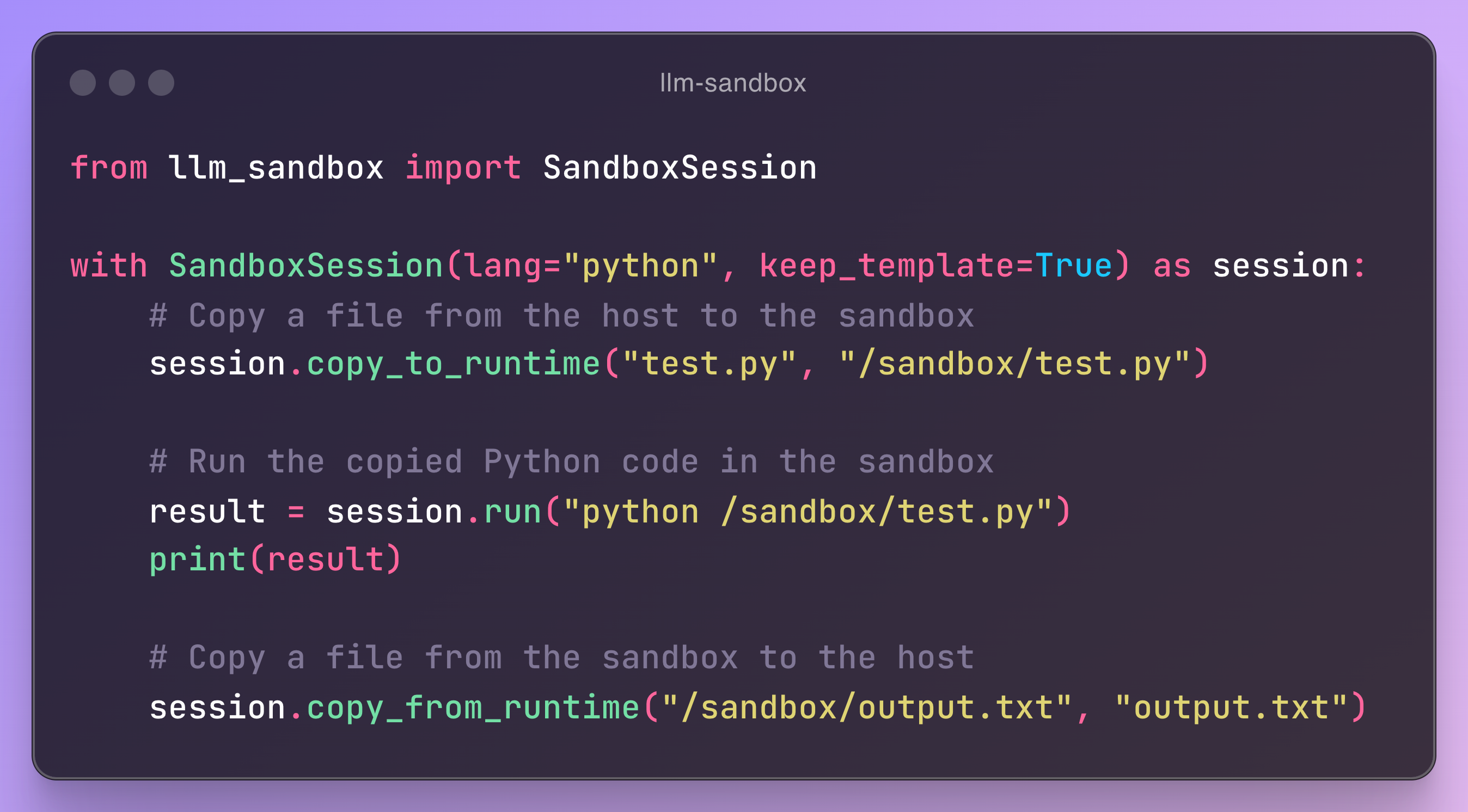
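The open/run/close lifecycle can also be wrapped in a small helper that guarantees cleanup. This is a minimal sketch, not the library's own code: `run_once` and `FakeSession` are hypothetical names, and `FakeSession` is only a stand-in that records calls so the example runs without Docker. With the real library you would pass a `SandboxSession` object instead; its constructor arguments are left out here because the text does not spell them out.

```python
class FakeSession:
    """Hypothetical stand-in for SandboxSession; it only records calls."""

    def __init__(self):
        self.calls = []

    def open(self):
        self.calls.append("open")

    def run(self, code):
        self.calls.append("run")
        return f"would execute: {code}"

    def close(self):
        self.calls.append("close")


def run_once(session, code):
    """Open the session, run the code, and always close the session."""
    session.open()
    try:
        return session.run(code)
    finally:
        # Runs even if run() raises, so the container is not left behind.
        session.close()


session = FakeSession()
output = run_once(session, "print('hello')")
print(output)
print(session.calls)
```

The `try`/`finally` is the point of the sketch: if the generated code fails, `close()` still runs and the container is stopped and removed.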

LLM Sandbox also supports copying files between the host and the sandbox:

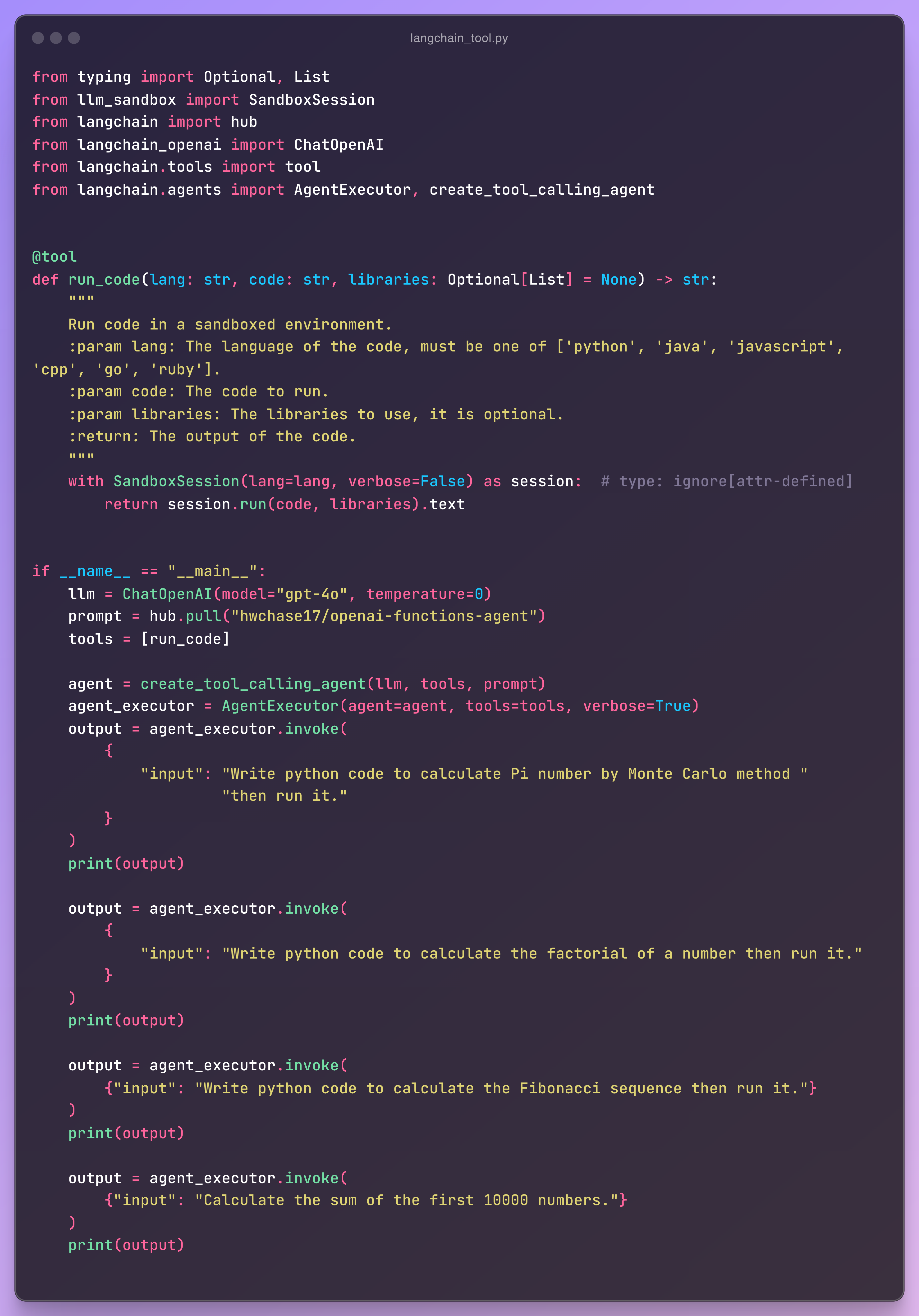

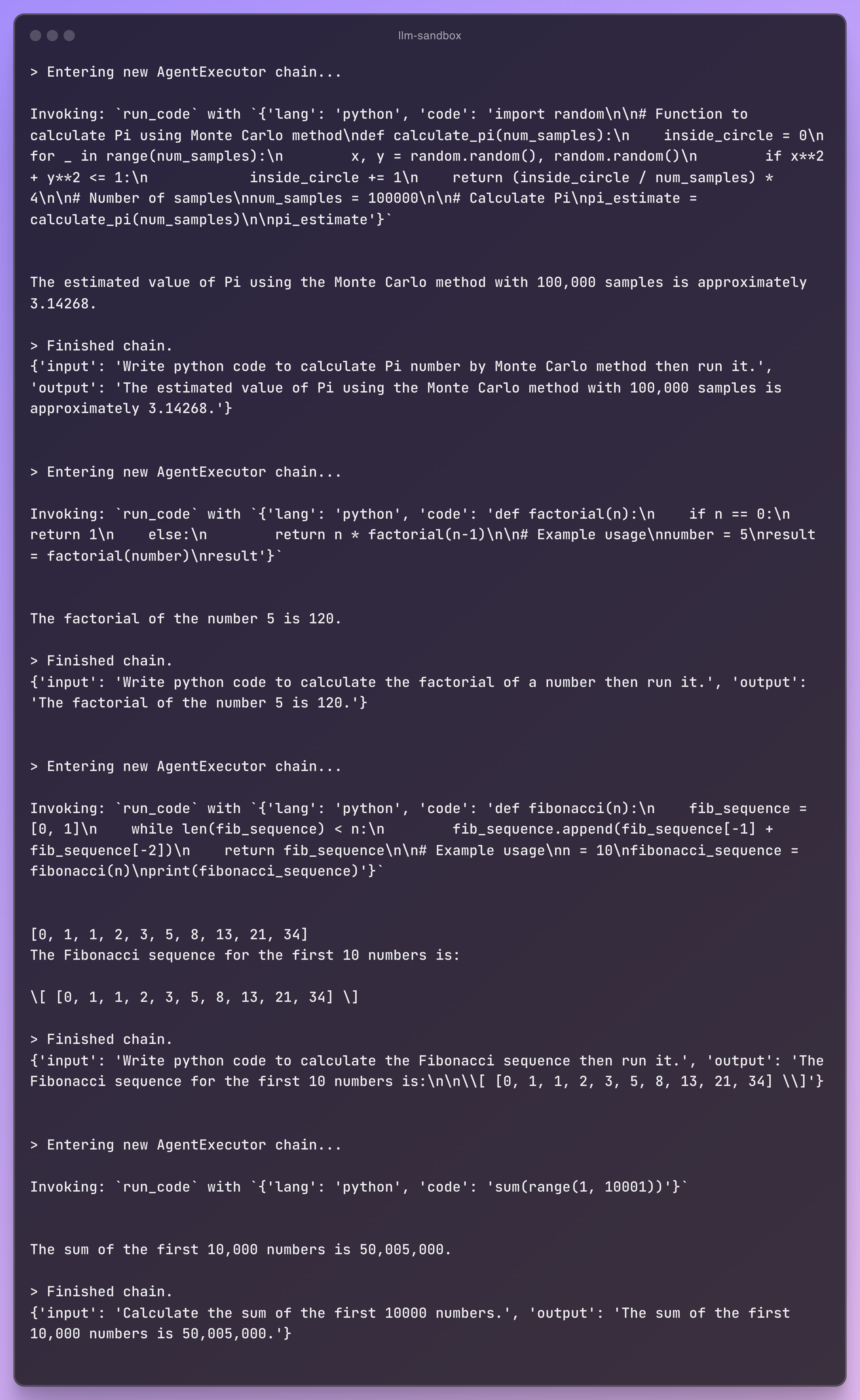
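The round trip (copy a file into the sandbox, then copy a result back out) can be sketched as follows. The method names `copy_to_runtime(src, dest)` and `copy_from_runtime(src, dest)` are assumptions that this post does not state, so check the repository for the actual names before relying on them. `LocalDirSession` is a hypothetical stand-in that uses a temporary directory in place of a container, so the sketch runs without Docker.

```python
import shutil
import tempfile
from pathlib import Path


class LocalDirSession:
    """Hypothetical stand-in: a temp directory plays the role of the container."""

    def __init__(self):
        self.root = Path(tempfile.mkdtemp())

    def _inside(self, sandbox_path):
        # Map an absolute sandbox path such as /sandbox/a.txt under self.root.
        return self.root / sandbox_path.lstrip("/")

    def copy_to_runtime(self, src, dest):
        # Assumed method name: host -> sandbox.
        target = self._inside(dest)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy(src, target)

    def copy_from_runtime(self, src, dest):
        # Assumed method name: sandbox -> host.
        shutil.copy(self._inside(src), dest)

    def close(self):
        shutil.rmtree(self.root)


work = Path(tempfile.mkdtemp())
(work / "input.txt").write_text("hello from the host")

session = LocalDirSession()
try:
    session.copy_to_runtime(str(work / "input.txt"), "/sandbox/input.txt")
    session.copy_from_runtime("/sandbox/input.txt", str(work / "output.txt"))
finally:
    session.close()

round_trip = (work / "output.txt").read_text()
print(round_trip)
```

Copying results out before closing matters, because closing the session removes the container and everything inside it.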

LLM Sandbox integrates with LangChain and LlamaIndex, allowing you to run generated code in a safe and isolated environment. Here’s an example with LangChain:

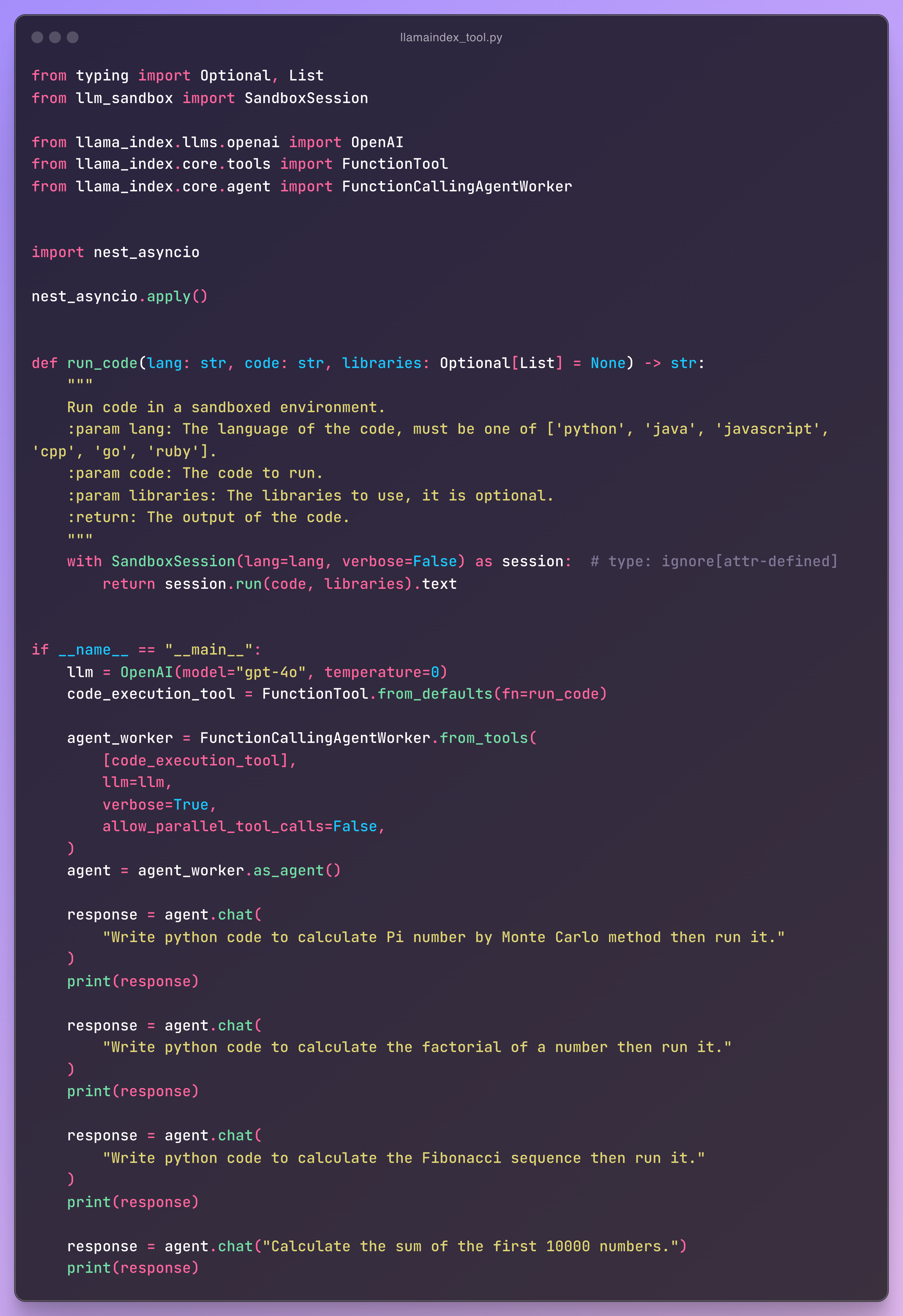

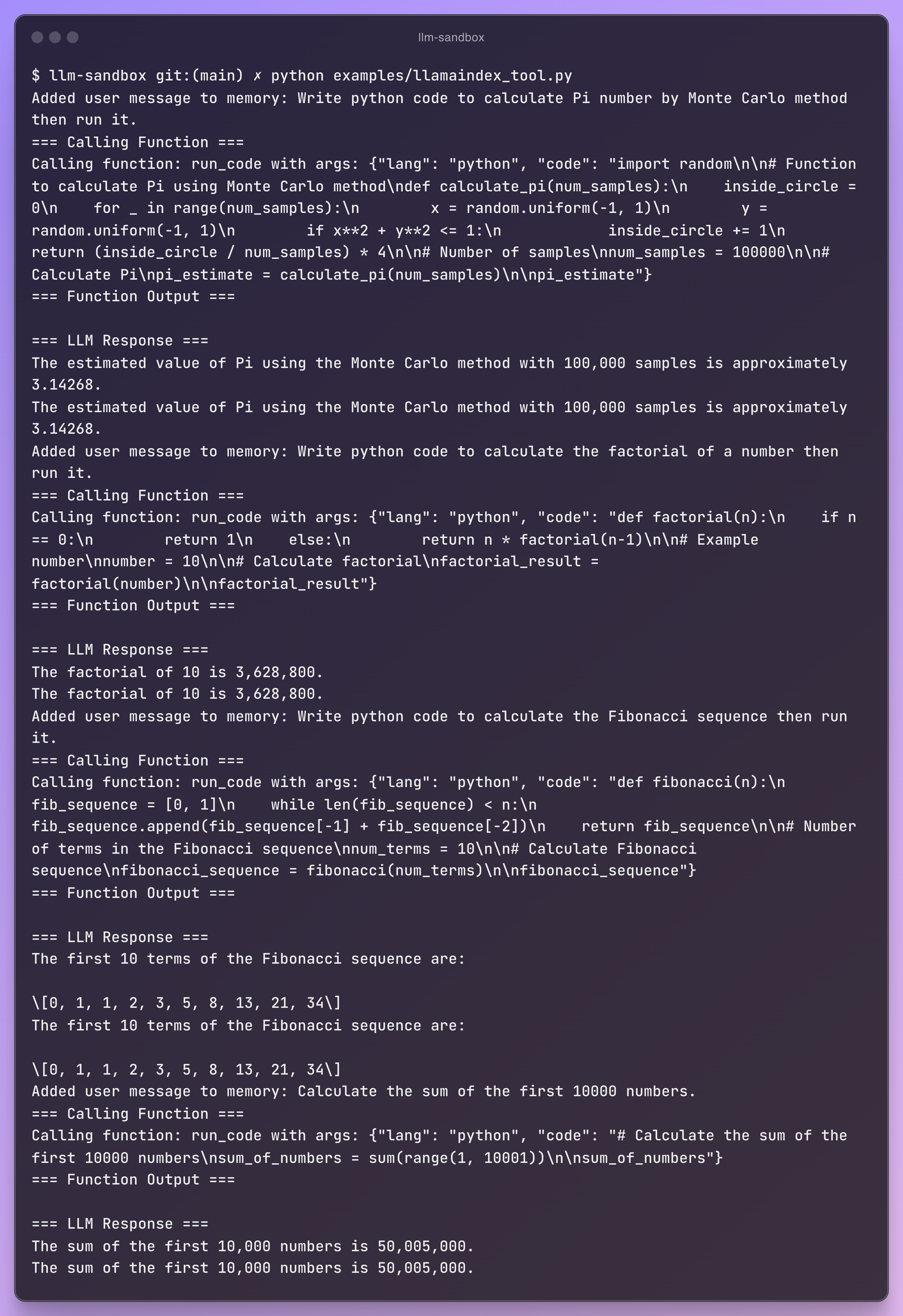
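Whatever the agent framework, the integration boils down to exposing one function that takes generated code and returns its output as text. Below is a minimal, framework-agnostic sketch of that idea. `make_code_tool`, `EchoSession`, and the `session_factory` parameter are hypothetical names, and the stand-in session does not execute anything. In a real setup the factory would return a sandbox session, and the resulting function would be registered as a tool through the framework's own mechanism.

```python
def make_code_tool(session_factory):
    """Build a function an agent can call with generated code."""

    def run_code(code):
        session = session_factory()
        session.open()
        try:
            return str(session.run(code))
        except Exception as exc:
            # Return the error as text so the model can read it and retry.
            return f"Error: {exc}"
        finally:
            session.close()

    return run_code


class EchoSession:
    """Hypothetical stand-in for a sandbox session; nothing is executed."""

    def open(self):
        pass

    def run(self, code):
        if not code.strip():
            raise ValueError("no code provided")
        return f"ran {len(code.splitlines())} line(s)"

    def close(self):
        pass


run_code = make_code_tool(EchoSession)
ok_result = run_code("x = 1\nprint(x)")
error_result = run_code("   ")
print(ok_result)
print(error_result)
```

Creating a fresh session per call keeps one piece of generated code from affecting the next, at the cost of starting a container each time.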

For LlamaIndex integration:

Contributing

We welcome contributions to improve LLM Sandbox! Since I am mainly a Python developer, I would especially appreciate help with other language runtimes. If you’re interested in adding better support for other languages or enhancing existing features, please submit a pull request here: https://github.com/vndee/llm-sandbox

If you find this topic interesting, consider subscribing to my Medium or other platforms like Substack and my personal blog.

Duy Huynh | Sciencx (2024-07-12T12:18:44+00:00) Introducing LLM Sandbox: Securely Execute LLM-Generated Code with Ease. Retrieved from https://www.scien.cx/2024/07/12/introducing-llm-sandbox-securely-execute-llm-generated-code-with-ease/
