This content originally appeared on HackerNoon and was authored by Miguel Rodriguez

In the same way that a young city slicker impresses the folks back home with stories of life in the metropolis, AI seems creative to those who encounter it. One has to admit that the breadth of knowledge AI appears to have is impressive, and its ability to transfer writing styles and connect concepts feels unlimited.

To debunk the creativity of AI, we need to take a peek under the hood at how LLM (large language model) algorithms work. LLMs are large, multilayered neural networks that relate tokens to each other. Their design loosely mimics the structure of our brain: the nodes play the role of our neurons, and the relations between tokens play the role of the synapses between them.

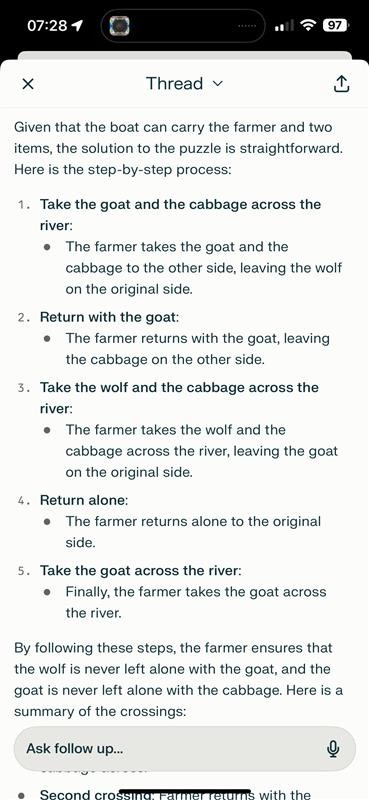

As Hebb's rule is often summarized: neurons that fire together wire together. In LLMs, this is done through a system of weights that represent the relationships between tokens. Tokens that represent similar concepts end up a smaller distance apart than tokens that represent unrelated concepts. As the image illustrates, concepts related to time sit closer to each other than to other concepts.
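The idea of tokens being a smaller or larger distance apart is commonly made concrete with embedding vectors: each token maps to a list of numbers, and a similarity measure compares them. The sketch below assumes cosine distance as that measure and uses three made-up, three-dimensional vectors; the words, values, and function names are hypothetical and not taken from any real model.

```python
import math

# Hypothetical 3-dimensional embeddings, invented for illustration only.
# Real models learn vectors with hundreds or thousands of dimensions.
EMBEDDINGS = {
    "hour": [0.9, 0.8, 0.1],
    "minute": [0.8, 0.9, 0.2],
    "cabbage": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cosine_distance(a, b):
    """Smaller distance means the two tokens are treated as more closely related."""
    return 1.0 - cosine_similarity(a, b)


if __name__ == "__main__":
    for word in ("minute", "cabbage"):
        d = cosine_distance(EMBEDDINGS["hour"], EMBEDDINGS[word])
        print(f"distance(hour, {word}) = {d:.3f}")
```

With these toy values, `hour` lands much closer to `minute` than to `cabbage`. Real models learn their vectors during training, and the geometry is far richer than three dimensions.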

Intelligence is the ability to look at a novel problem and come up with a solution.

One important breakthrough in the early design of LLMs was increasing the number of layers. As in the brain, this allows complex concepts to be stored and related to each other. If you think about it, words can be defined by other words. Defining new concepts in terms of a set of basic ones is something we humans do in all our activities.

When you ask an LLM a question, it translates your input into tokens and searches for concepts related to that set of tokens. It then transforms the result back into a textual answer. Because these models were trained on such a large amount of information, they appear to be omniscient.
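The loop described above (turn the input into tokens, pick likely continuations, turn them back into text) can be illustrated with a deliberately crude stand-in: a bigram table that only records which word followed which in a tiny, hypothetical training text, plus greedy decoding that always picks the most frequent continuation. This is an assumption-laden toy, not how a real LLM works; real models use learned neural networks over embeddings and generalize far beyond a lookup table. It only shows the shape of the loop and why this toy, lacking relevant data, has nothing to add.

```python
from collections import Counter, defaultdict

# Hypothetical "training data" for the toy model.
TRAINING_TEXT = "a kilogram of iron weighs the same as a kilogram of feathers"


def train_bigrams(text):
    """Count how often each word is immediately followed by each other word."""
    words = text.split()  # crude whitespace "tokenizer"
    table = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table


def generate(table, prompt, max_words=10):
    """Greedily extend the prompt with the most frequent continuation seen in training."""
    words = prompt.split()
    if not words:
        return ""
    for _ in range(max_words):
        candidates = table.get(words[-1])  # .get does not add keys to the defaultdict
        if not candidates:  # this word was never followed by anything: stop
            break
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams(TRAINING_TEXT)
    print(generate(model, "weighs", max_words=3))
    print(generate(model, "two kilograms"))  # unseen words: nothing to add
```

Asked to continue `weighs`, the toy reproduces `weighs the same as` from its training text; given `two kilograms`, which it never saw, it returns the prompt unchanged.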

On top of that, the models can transform their answers in multiple ways. You want the answer in German? No problem. Want it as a haiku in the style of Shakespeare? No problem again.

Yet the models can only answer from their large pool of training data. Their algorithms are not capable of being creative.

On Sean Carroll's Mindscape podcast, Francois Chollet brilliantly exposed this characteristic of generative AI. You have probably heard the trick question:

"What is heavier: A kilogram of iron or a kilogram of feathers?"

Ask this question and every LLM will answer it correctly. But if you modify the question to something like:

"What is heavier: A kilogram of iron or two kilograms of feathers?"

Some LLMs will be fooled and still answer that they weigh the same. The reason is that this case does not appear in the data used to train them. Strangely, LLMs are like a student who memorizes facts for an exam but cannot deduce anything new from everything she has learned.

So I decided to test this hypothesis by asking four LLMs that I currently use a modified version of the riddle of the farmer crossing a river with a wolf, a goat, and a box of cabbage.

The original riddle goes like this:

A farmer wants to cross a river and take with him a wolf, a goat, and a cabbage.

There is a boat that can fit him plus either the wolf, the goat, or the cabbage.

If the wolf and the goat are alone on one shore, the wolf will eat the goat. If the goat and the cabbage are alone on the shore, the goat will eat the cabbage.

How can the farmer bring the wolf, the goat, and the cabbage across the river?

I changed the riddle so that the boat could fit the farmer plus two items instead of one.

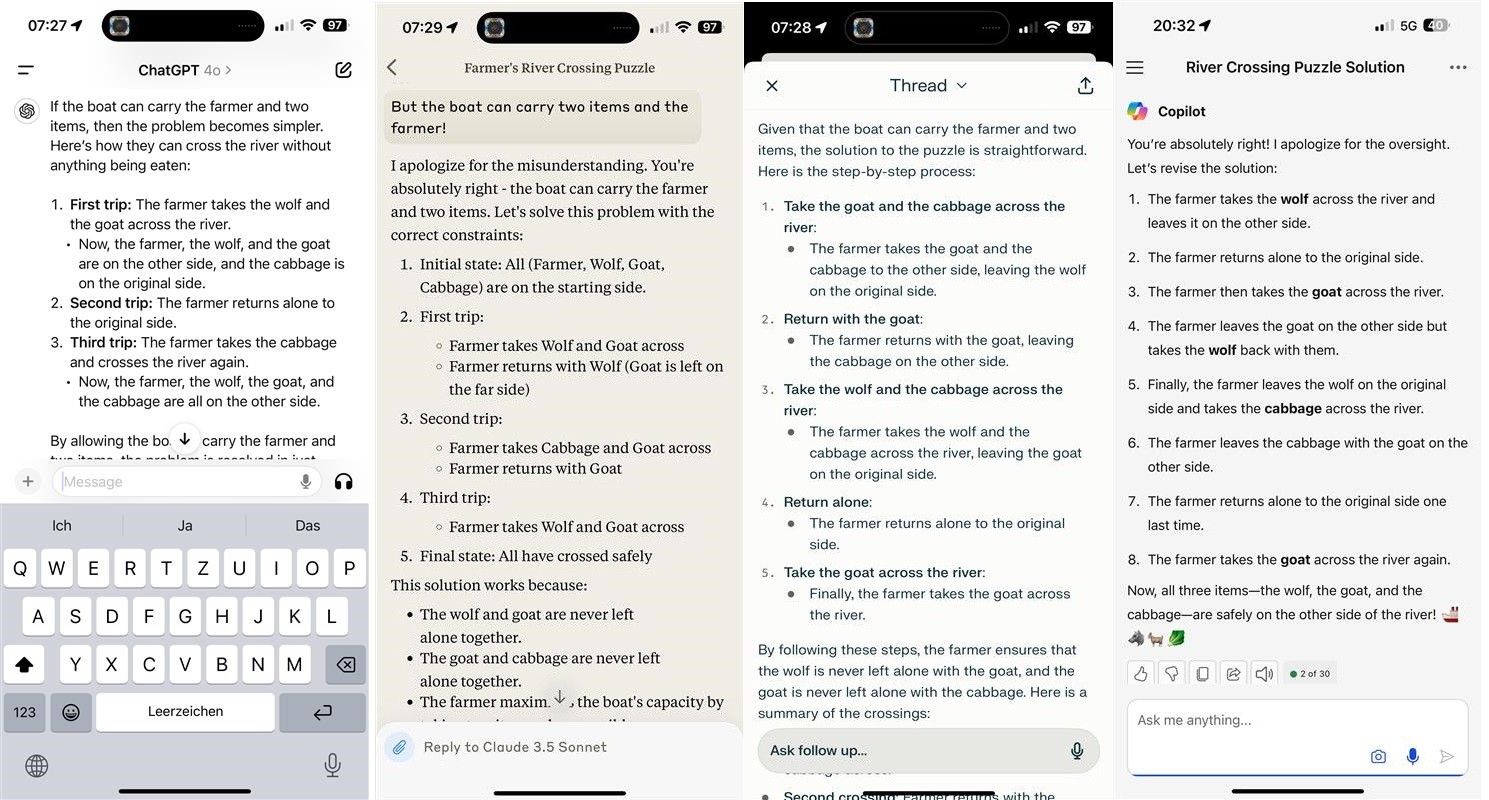

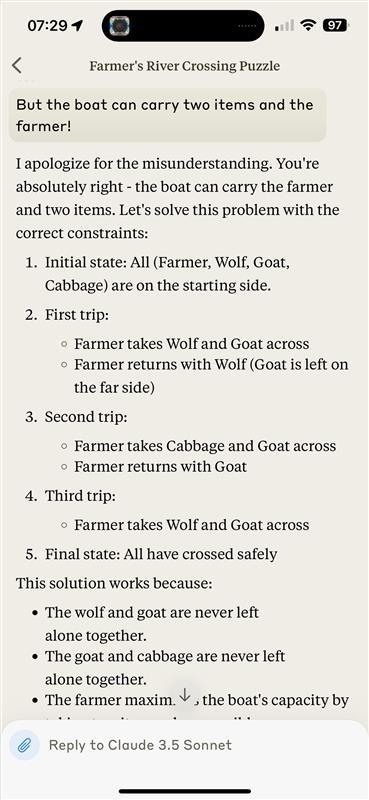
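The modified riddle is small enough to solve mechanically, by exploring every legal move rather than recalling a familiar answer. A minimal sketch, assuming breadth-first search over states of the form (items still on the starting shore, farmer's side), with the boat capacity as a parameter; the function and variable names are hypothetical, chosen for illustration.

```python
from collections import deque
from itertools import combinations

ITEMS = frozenset({"wolf", "goat", "cabbage"})
# Pairs that must never be left on a shore without the farmer.
UNSAFE_PAIRS = (frozenset({"wolf", "goat"}), frozenset({"goat", "cabbage"}))


def is_safe(shore):
    """A shore the farmer has just left is safe if it holds no forbidden pair."""
    return not any(pair <= shore for pair in UNSAFE_PAIRS)


def solve(capacity):
    """Breadth-first search for the shortest sequence of crossings.

    A state is (items on the starting shore, farmer's side), where side 0 is the
    starting shore and side 1 the far shore. Returns a list of
    (cargo, direction) moves, or None if the riddle has no solution.
    """
    start = (ITEMS, 0)
    goal = (frozenset(), 1)
    parent = {start: None}  # state -> (previous state, move that led here)
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            moves = []
            while parent[state] is not None:
                state, move = parent[state]
                moves.append(move)
            return moves[::-1]
        near, side = state
        here = near if side == 0 else ITEMS - near  # items on the farmer's shore
        for k in range(capacity + 1):  # k = 0 means the farmer crosses alone
            for cargo in map(frozenset, combinations(sorted(here), k)):
                if not is_safe(here - cargo):
                    continue  # something would get eaten on the shore he leaves
                new_near = near - cargo if side == 0 else near | cargo
                nxt = (new_near, 1 - side)
                if nxt not in parent:
                    direction = "across" if side == 0 else "back"
                    parent[nxt] = (state, (sorted(cargo), direction))
                    queue.append(nxt)
    return None


if __name__ == "__main__":
    for capacity in (1, 2):
        plan = solve(capacity)
        print(f"capacity {capacity}: {len(plan)} crossings")
        for cargo, direction in plan:
            print("  ", direction, cargo or "(farmer alone)")
```

With `capacity=1` the search returns the familiar seven-crossing solution; with `capacity=2` it finds a plan of just three crossings. One such plan: take the wolf and the cabbage across, return alone, then take the goat.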

Initially, all the LLMs confused the riddle with the original version. I had to point out to them that the conditions were different, and I got quite amusing answers.

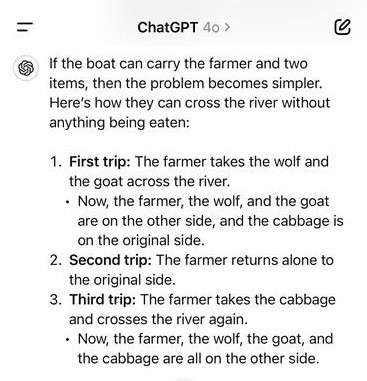

ChatGPT (GPT-4o)

The GPT-4o model does understand the number of trips required to cross the river, yet it commits the capital mistake of leaving the wolf and the goat together on the other side. The farmer ends up taking the wolf and the cabbage home, but no goat.

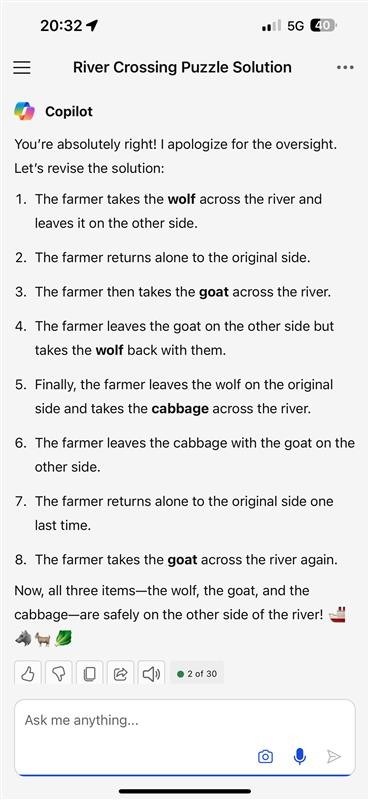

Copilot, through Bing, plays it safe and crosses with one item at a time. Better safe than sorry, but it results in an unnecessary number of trips. As consolation for his extra effort, the farmer takes all three items home.

Perplexity starts well, returning with the goat after the first crossing and saving its cabbage. Yet somehow a second goat magically appears on the other side, and the farmer has to cross alone again to fetch it. This, of course, risks coming back to an empty box of cabbage and a wolf with a full belly.

Finally, Claude 3.5 creates a second imaginary wolf. At least the farmer knows better than to leave the goat alone and takes it along on all his crossings.
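The failure modes above, an animal left alone with its predator or an animal ferried from a shore it is not on, are exactly what a simple simulation catches. A minimal sketch of a plan checker, assuming a plan is written as a list of cargo lists for alternating crossings that start from the original shore; the function name and the sample plans are hypothetical illustrations, not transcriptions of the models' actual answers.

```python
ITEMS = {"wolf", "goat", "cabbage"}
# Pairs that must never be left on a shore without the farmer.
UNSAFE_PAIRS = [{"wolf", "goat"}, {"goat", "cabbage"}]


def check_plan(plan, capacity):
    """Simulate a crossing plan; return a description of the first problem, or None.

    `plan` lists the cargo of each crossing. Crossings alternate direction,
    starting from the original shore; an empty list means the farmer rows alone.
    """
    shores = [set(ITEMS), set()]  # index 0 = starting shore, 1 = far shore
    side = 0  # the farmer starts on the starting shore
    for step, cargo in enumerate(plan, start=1):
        cargo = set(cargo)
        if len(cargo) > capacity:
            return f"crossing {step}: the boat holds at most {capacity} items"
        missing = cargo - shores[side]
        if missing:  # e.g. a "second goat" that is not actually there
            return f"crossing {step}: {sorted(missing)} not on the farmer's shore"
        shores[side] -= cargo
        for pair in UNSAFE_PAIRS:
            if pair <= shores[side]:
                return f"crossing {step}: {sorted(pair)} left alone together"
        side = 1 - side
        shores[side] |= cargo
    if side != 1 or shores[1] != ITEMS:
        return "the farmer and all three items do not end up on the far shore"
    return None


if __name__ == "__main__":
    one_at_a_time = [["goat"], [], ["wolf"], ["goat"], ["cabbage"], [], ["goat"]]
    print(check_plan(one_at_a_time, capacity=2))  # None: valid, just long
    print(check_plan([["wolf", "goat"], []], capacity=2))
    print(check_plan([["goat"], [], ["goat"]], capacity=2))
```

The one-item-at-a-time plan passes the check but uses seven crossings where three suffice with a two-item boat.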

Francois Chollet argues that current LLM algorithms are boxed in and not really capable of original thinking. He has created a challenge, the $1M ARC-AGI prize. Its premise is a set of novel riddles that have not been solved publicly, and are therefore absent from any training data, which competing systems try to solve.

The riddles are known only to the organizers, and the competing systems have to run on a laptop with no internet access. In other words, if you want to use an LLM, it needs to run locally.

I find these arguments compelling. But maybe that is because I was never good at memorization, and because I love riddles. On the way to ARC-AGI, I will keep peppering the models at my disposal with questions to see whether they are getting closer to reasoning.

Miguel Rodriguez | Sciencx (2024-07-15T20:52:00+00:00) Here’s How to Prove AI Is Not Creative. Retrieved from https://www.scien.cx/2024/07/15/heres-how-to-prove-ai-is-not-creative/
