This content originally appeared on Telerik Blogs and was authored by Dany Paredes

Get started with an LLM to create your own Angular chat app. We will use Ollama, Gemma and Kendo UI for Angular for the UI.

The wave of AI is real. Every day, more companies build or adapt their products around chats and contextual actions that let us interact with large language models (LLMs). But have you asked yourself how hard it is to build an application that talks to LLMs like Gemini or Llama3?

Well, nowadays it is surprisingly easy, because we have all the tools we need: Angular and Kendo UI to build chat apps with contextual actions for interacting with LLMs, and Ollama to run open LLMs like Gemma or Llama3 on our own computers.

So, if we have the tools, why not build something nice? Today we are going to learn how to run LLMs locally, connect with Gemma, and create a chat using Progress Kendo UI for Angular! Let’s do it!

Setup

First, visit https://ollama.com/download and download Ollama, the tool that runs models on our computers. Once the download is complete, install the app and open it; Ollama is now ready to use.

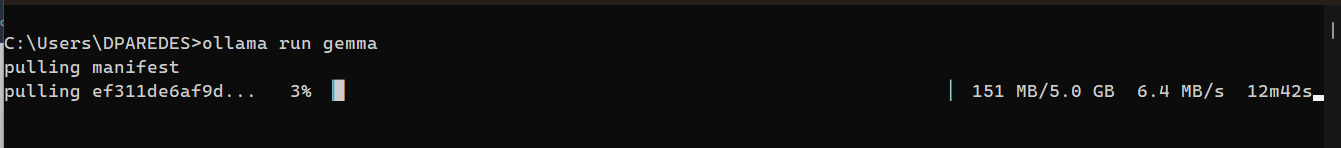

Next, we need to run Ollama and start using the Gemma model. In the terminal, run the command ollama run gemma:

```shell
ollama run gemma
```

It will start downloading the Gemma model, which might take a while.

When the download finishes, we can chat with Gemma on our machine directly from the terminal.
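To confirm from code that the download finished, one option is Ollama's local HTTP API (served on http://localhost:11434 by default), whose /api/tags endpoint lists the models installed on the machine. The sketch below assumes that endpoint and its { models: [{ name }] } reply shape; hasModel and the injected getJson parameter are hypothetical helpers, and getJson is a parameter so that the real fetch, or a fake in tests, can be passed in.

```typescript
// Minimal fetch signature used here; the global fetch in browsers and Node 18+ fits it.
type GetJson = (url: string) => Promise<{ ok: boolean; json(): Promise<unknown> }>;

// Shape of the /api/tags reply, reduced to the one field this sketch reads.
interface TagsReply {
  models: { name: string }[];
}

// Returns true when a model such as "gemma" (stored as "gemma:latest") is installed locally.
// Returns false when the server answers with an HTTP error.
async function hasModel(
  getJson: GetJson,
  model: string,
  baseUrl = 'http://localhost:11434',
): Promise<boolean> {
  const res = await getJson(`${baseUrl}/api/tags`);
  if (!res.ok) {
    return false;
  }
  const { models } = (await res.json()) as TagsReply;
  // Accept an exact name or any tag of the model, e.g. "gemma:latest" or "gemma:7b".
  return models.some((m) => m.name === model || m.name.startsWith(`${model}:`));
}
```

In a browser or in Node 18+, hasModel(fetch, 'gemma').then(console.log) should print true once ollama run gemma has finished pulling the model.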

OK, perfect. We have Gemma running in the terminal, but I want to build a chat like ChatGPT, with a fancy interface and the typing effect.

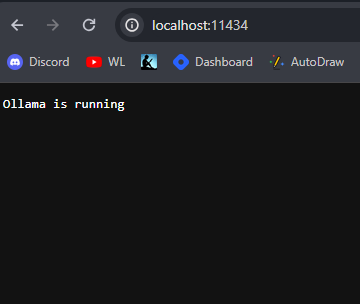

One great thing about Ollama is that it exposes an HTTP API by default, so we can use it to interact with the models. Navigate to http://localhost:11434 in your browser and you should see the response "Ollama is running".
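To see what talking to that API looks like without any library, here is a minimal sketch that sends one prompt to Ollama's POST /api/chat endpoint with streaming turned off and reads the reply from message.content. It assumes the default port and the gemma model pulled above; askOllama and FetchLike are hypothetical names, and the fetch function is passed in so the sketch can be exercised with a fake.

```typescript
// Minimal shape of the fetch function we need; the real global fetch satisfies it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Non-streaming /api/chat reply, reduced to the fields used here.
interface OllamaChatReply {
  message: { role: string; content: string };
  done: boolean;
}

// Sends one user prompt to Ollama and returns the assistant's full answer.
async function askOllama(
  prompt: string,
  fetchFn: FetchLike,
  baseUrl = 'http://localhost:11434',
  model = 'gemma',
): Promise<string> {
  const res = await fetchFn(`${baseUrl}/api/chat`, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({
      model,
      messages: [{ role: 'user', content: prompt }],
      stream: false, // ask for a single JSON object instead of a stream of chunks
    }),
  });
  if (!res.ok) {
    throw new Error(`Ollama returned HTTP ${res.status}`);
  }
  const reply = (await res.json()) as OllamaChatReply;
  return reply.message.content;
}
```

With Ollama running, askOllama('Why is the sky blue?', fetch).then(console.log) should print Gemma's answer. The ollama npm package used later in this article wraps this same API.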

There is also the ollama npm package, which makes it easy to interact with this API. We'll install it after creating our application. With all the tools ready, let's build our chat with Angular and Kendo UI!

Build the Chat

First, set up your Angular application with the command ng new gemma-kendo.

```
C:\Users\DPAREDES\Desktop>ng new gemma-kendo
? Which stylesheet format would you like to use? Sass (SCSS) [ https://sass-lang.com/documentation/syntax#scss ]
? Do you want to enable Server-Side Rendering (SSR) and Static Site Generation (SSG/Prerendering)? No

cd gemma-kendo
```

Install the Ollama library by running the command npm i ollama:

```
C:\Users\DPAREDES\Desktop\gemma-kendo>npm i ollama

added 2 packages, and audited 929 packages in 3s

118 packages are looking for funding
  run `npm fund` for details

found 0 vulnerabilities
```

Next, install the Kendo UI for Angular Conversational UI package with its ng add schematic, which installs the package and registers it in the project:

```
C:\Users\DPAREDES\Desktop\gemma-kendo>ng add @progress/kendo-angular-conversational-ui
i Using package manager: npm
√ Found compatible package version: @progress/kendo-angular-conversational-ui@16.1.0.
√ Package information loaded.

The package @progress/kendo-angular-conversational-ui@16.1.0 will be installed and executed.
Would you like to proceed? Yes
√ Packages successfully installed.
UPDATE package.json (1674 bytes)
UPDATE angular.json (3022 bytes)
√ Packages installed successfully.
UPDATE src/main.ts (301 bytes)
UPDATE tsconfig.app.json (310 bytes)
UPDATE tsconfig.spec.json (315 bytes)
```

We’re all set up, so let’s proceed to code!

Interact with Ollama API

Before writing the service, we need to prepare the data for the Angular Chat component. The chat needs two participants, the user and the AI, each represented as a Kendo User. So create two User constants, gemmaIA and user, in a new file src/app/entities/entities.ts:

```typescript
import { User } from '@progress/kendo-angular-conversational-ui';

export const gemmaIA: User = {
  id: crypto.randomUUID(),
  name: 'KendoIA',
};

export const user: User = {
  id: crypto.randomUUID(),
  name: 'Dany',
};
```
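Why two users with distinct ids? The user passed to kendo-chat identifies the local participant: messages authored by that user are shown as sent, and everything else appears as incoming. The sketch below models that idea with simplified stand-in types (ChatUser, ChatMessage and isOwnMessage are hypothetical names, not Kendo's API) and assumes the match is done by comparing author ids; it is an illustration, not Kendo's actual implementation.

```typescript
// Simplified stand-ins for the Kendo User/Message types (only the fields used here).
interface ChatUser {
  id: string;
  name: string;
}

interface ChatMessage {
  author: ChatUser;
  text?: string;
}

// Decides whether a message belongs to the local user by comparing author ids.
function isOwnMessage(message: ChatMessage, currentUser: ChatUser): boolean {
  return message.author.id === currentUser.id;
}
```

Because each constant gets its own crypto.randomUUID() value, the two ids never collide, so the user's messages and KendoIA's replies always end up on different sides of the conversation.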

Next, generate the ollama service with the Angular CLI command ng g s services/ollama.

```
C:\Users\DPAREDES\Desktop\gemma-kendo>ng g s services/ollama
CREATE src/app/services/ollama.service.spec.ts (373 bytes)
CREATE src/app/services/ollama.service.ts (144 bytes)
```

Open ollama.service.ts. Because we want to make the list of messages available to the chat, we'll use a signal to expose an array of messages that starts with a default greeting.

Yes, we embrace signals! Learn more about signals.

```typescript
import { Injectable, signal } from '@angular/core';
import { Message } from '@progress/kendo-angular-conversational-ui';
// entities.ts lives in src/app/entities, one level up from src/app/services
import { gemmaIA } from '../entities/entities';

...

messages = signal<Message[]>([{
  author: gemmaIA,
  timestamp: new Date(),
  text: 'Hi! How can I help you?'
}]);
```

Next, create the generate method, which takes the user's text as a parameter. It creates two objects: userMessage (containing the text from the user) and gemmaMessage (a placeholder that tells the user the AI is typing). Finally, it updates the messages signal with both new messages.

```typescript
import { gemmaIA, user } from '../entities/entities';

...

async generate(text: string) {
  const gemmaMessage = {
    timestamp: new Date(),
    author: gemmaIA,
    typing: true, // shows the typing indicator until the answer arrives
  };

  const userMessage = {
    timestamp: new Date(),
    author: user,
    text,
  };

  this.messages.update((msg) => [...msg, userMessage, gemmaMessage]);
}
```
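The update call above returns a brand-new array instead of pushing into the existing one. That matters because Angular signals compare the old and new value with Object.is by default, so mutating the array and returning the same reference would not notify the template. The sketch below pulls that logic into a pure function; appendExchange and the simplified ChatUser/ChatMessage types are hypothetical stand-ins for the Kendo types, not part of the original service.

```typescript
// Simplified stand-ins for the Kendo types; only the fields this sketch touches.
interface ChatUser {
  id: string;
  name: string;
}

interface ChatMessage {
  author: ChatUser;
  timestamp: Date;
  text?: string;
  typing?: boolean;
}

// Returns a NEW array with the user's message plus a typing placeholder for the AI.
// The input array is left untouched, so a signal sees a new reference and notifies.
function appendExchange(
  messages: ChatMessage[],
  text: string,
  me: ChatUser,
  ai: ChatUser,
  now: Date = new Date(),
): ChatMessage[] {
  const userMessage: ChatMessage = { author: me, timestamp: now, text };
  const placeholder: ChatMessage = { author: ai, timestamp: now, typing: true };
  return [...messages, userMessage, placeholder];
}
```

Inside the service, the call would read this.messages.update((msg) => appendExchange(msg, text, user, gemmaIA)), which behaves the same as the inline version.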

Save the changes; the service is now ready to publish messages. Let's move on to integrating the service and using the Conversational UI Chat.

Building Chat

Next, we need a few changes in app.component.ts to use kendo-chat and the OllamaService:

- Import the ChatModule to get access to kendo-chat.
- Inject the OllamaService using the inject function: private readonly _ollamaService = inject(OllamaService);
- Declare a $messages property that points to the service's messages signal: $messages = this._ollamaService.messages;
- Store the user declared in the entities file.

The code looks like this:

```typescript
import { Component, inject } from '@angular/core';
import { ChatModule } from '@progress/kendo-angular-conversational-ui';
import { user } from './entities/entities';
import { OllamaService } from './services/ollama.service';

@Component({
  selector: 'app-root',
  standalone: true,
  imports: [ChatModule],
  templateUrl: './app.component.html',
  // the project was generated with SCSS, so the stylesheet is app.component.scss
  styleUrl: './app.component.scss',
})
export class AppComponent {
  private readonly _ollamaService = inject(OllamaService);
  $messages = this._ollamaService.messages;
  readonly user = user;
}
```

The next step is to use the generate method from our service. So add a new method generate that will take the event from the kendo-chat and call generate from ollamaService.

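```typescript
// SendMessageEvent comes from '@progress/kendo-angular-conversational-ui';
// add it to the existing import next to ChatModule.
async generate($event: SendMessageEvent): Promise<void> {
  const { text } = $event.message;
  if (!text) {
    return; // nothing to send
  }
  await this._ollamaService.generate(text);
}
```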

Open app.component.html, remove all the HTML markup, and add the kendo-chat component. Bind its user input to user and its messages input to the $messages() signal. kendo-chat emits a sendMessage event when the user sends a message, so we bind our generate method to it.

The markup looks like this:

```html
<kendo-chat [user]="user" [messages]="$messages()" (sendMessage)="generate($event)" width="450px">
</kendo-chat>
```

Learn more about Angular Conversational UI.

Save changes and reload the page.

Yeah, we have a chat! KendoIA is not answering yet, but it nicely shows a typing indicator. It's a good start. Now let's interact with the Gemma LLM using the Ollama library.

Interact with Ollama API and Stream Data

Open ollama.service.ts. The ollama object from the Ollama library exposes a chat function. It expects a model name and a list of messages; each message has a role, and the user's text is passed in under the content key (see below). Because we want the same behavior as ChatGPT, we set stream to true.

With streaming enabled, the answer arrives in chunks. ollama.chat returns a promise that resolves to an async iterable of those chunks, so we await it and store the result in a variable I'm calling responseStream.

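```typescript
async getGemmaResponse(userMessage: string): Promise<void> {
  const responseStream = await ollama.chat({
    model: 'gemma',
    messages: [{ role: 'user', content: userMessage }],
    stream: true, // receive the answer chunk by chunk
  });
  // ...the method continues in the next steps
```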

Next, we have two goals: read every chunk from the stream and update the signal with the result. Declare a variable responseContent = '' and use for await to iterate over each chunk in responseStream, appending its message.content to responseContent.

```typescript
let responseContent = '';

for await (const chunk of responseStream) {
  responseContent += chunk.message.content;
}
```
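The loop works on any async iterable, which makes it easy to try without a running model. The sketch below wraps the same accumulation in a function and feeds it a fake stream; collectStream, fakeStream and ChatChunk are hypothetical names, and ChatChunk keeps only the message.content field the loop reads from each streamed chunk.

```typescript
// Minimal shape of one streamed chunk: each part carries the next piece of text.
interface ChatChunk {
  message: { content: string };
}

// Concatenates every chunk's content, the same way the for await loop above does.
async function collectStream(stream: AsyncIterable<ChatChunk>): Promise<string> {
  let responseContent = '';
  for await (const chunk of stream) {
    responseContent += chunk.message.content;
  }
  return responseContent;
}

// A fake stream standing in for Ollama, handy for trying the loop without a model.
async function* fakeStream(parts: string[]): AsyncGenerator<ChatChunk> {
  for (const content of parts) {
    yield { message: { content } };
  }
}
```

For example, collectStream(fakeStream(['Hel', 'lo', '!'])) resolves to 'Hello!'.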

The final step is to update the last message in the chat (the typing placeholder) with the response content. Using the update method of the signal, copy the array, replace the last message with the response text, and set typing to false to hide the typing indicator.

```typescript
this.messages.update((messages) => {
  const updatedMessages = [...messages];
  const lastMessageIndex = updatedMessages.length - 1;

  updatedMessages[lastMessageIndex] = {
    ...updatedMessages[lastMessageIndex],
    text: responseContent,
    typing: false,
  };

  return updatedMessages;
});
```
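The same immutable "replace the last message" step can be written as a small reusable function. The sketch below is a hypothetical helper (patchLast, with a simplified ChatMessage type standing in for Kendo's Message): it copies the array, swaps only the last entry for a patched copy, keeps earlier messages as they are, and leaves an empty list alone.

```typescript
// Simplified stand-in for the Kendo Message type.
interface ChatMessage {
  author: { id: string };
  text?: string;
  typing?: boolean;
}

// Returns a copy of messages where only the last entry is replaced by a patched copy.
// Earlier messages keep their identity; the input array is never mutated.
function patchLast(messages: ChatMessage[], patch: Partial<ChatMessage>): ChatMessage[] {
  if (messages.length === 0) {
    return messages; // nothing to patch
  }
  const updated = [...messages];
  const last = updated.length - 1;
  updated[last] = { ...updated[last], ...patch };
  return updated;
}
```

With it, the update above becomes this.messages.update((m) => patchLast(m, { text: responseContent, typing: false })).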

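Putting it all together, with the signal update moved inside the for await loop so the chat re-renders after every chunk (that is what produces the typing effect), the final getGemmaResponse code looks like this: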

```typescript
import { Injectable, signal } from '@angular/core';
import { Message } from '@progress/kendo-angular-conversational-ui';
import { gemmaIA, user } from '../entities/entities';
import ollama from 'ollama';

...

async getGemmaResponse(userMessage: string): Promise<void> {
  const responseStream = await ollama.chat({
    model: 'gemma',
    messages: [{ role: 'user', content: userMessage }],
    stream: true,
  });

  let responseContent = '';

  for await (const chunk of responseStream) {
    responseContent += chunk.message.content;

    // Refresh the last message (the typing placeholder) after every chunk
    this.messages.update((messages) => {
      const updatedMessages = [...messages];
      const lastMessageIndex = updatedMessages.length - 1;

      updatedMessages[lastMessageIndex] = {
        ...updatedMessages[lastMessageIndex],
        text: responseContent,
        typing: false,
      };

      return updatedMessages;
    });
  }
}
```
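One thing the code above does not handle: if Ollama is not running, ollama.chat rejects and the typing placeholder stays on screen forever. The sketch below is one hypothetical way to cover that while keeping the per-chunk typing effect. streamReply is an illustrative name; openStream stands in for () => ollama.chat({ ..., stream: true }) and onUpdate stands in for the this.messages.update(...) call that patches the last message.

```typescript
// Minimal shape of one streamed chunk.
interface ChatChunk {
  message: { content: string };
}

// Streams a reply and reports the growing text after every chunk (the typing effect).
// If opening or reading the stream fails, an error text is reported instead,
// so the typing placeholder is replaced rather than spinning forever.
async function streamReply(
  openStream: () => Promise<AsyncIterable<ChatChunk>>,
  onUpdate: (text: string) => void,
): Promise<void> {
  let content = '';
  try {
    const stream = await openStream();
    for await (const chunk of stream) {
      content += chunk.message.content;
      onUpdate(content);
    }
  } catch (err) {
    const reason = err instanceof Error ? err.message : String(err);
    onUpdate(`Sorry, I could not reach the model (${reason}).`);
  }
}
```

Inside OllamaService, onUpdate would run the same messages.update call shown earlier, setting text to the value it receives and typing to false.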

Finally, still in our ollama.service file, call the function getGemmaResponse from the generate method.

```typescript
async generate(text: string) {
  const gemmaMessage = {
    timestamp: new Date(),
    author: gemmaIA,
    typing: true,
  };

  const userMessage = {
    timestamp: new Date(),
    author: user,
    text,
  };

  this.messages.update((msg) => [...msg, userMessage, gemmaMessage]);

  // calling getGemmaResponse
  await this.getGemmaResponse(text);
}
```
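A limitation worth knowing: getGemmaResponse sends only the latest text, so Gemma has no memory of earlier turns. Ollama's chat API accepts a whole messages array with user and assistant roles, so one optional extension (not part of the original app) is to convert the visible conversation into that format. toOllamaHistory and the simplified types below are hypothetical; the sketch skips the typing placeholder and any message without text.

```typescript
// Simplified stand-ins for Kendo's Message and Ollama's chat message types.
interface ChatUser {
  id: string;
}

interface ChatMessage {
  author: ChatUser;
  text?: string;
  typing?: boolean;
}

interface OllamaMessage {
  role: 'user' | 'assistant';
  content: string;
}

// Maps the chat history to Ollama's role/content format so the model sees earlier turns.
function toOllamaHistory(messages: ChatMessage[], ai: ChatUser): OllamaMessage[] {
  return messages
    .filter((m) => !m.typing && !!m.text) // drop placeholders and empty messages
    .map((m): OllamaMessage => ({
      role: m.author.id === ai.id ? 'assistant' : 'user',
      content: m.text as string,
    }));
}
```

In the service, the chat call could then pass messages: toOllamaHistory(this.messages(), gemmaIA) instead of the single user message. Longer histories mean longer prompts, so responses may get slower as the conversation grows.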

Save the changes, reload, and tada! We have built a chat with Gemma! We are talking with our local LLM without paying for tokens, and we have a nice interface with just a few lines of code.

Conclusion

It was fun and surprisingly easy to run an LLM on our machine, interact with the Ollama API and, of course, build our chat with a few lines of code. Using Ollama to run models locally and Kendo UI for Angular for the chat interface, we can integrate advanced AI capabilities into our projects without pain.

We learned how to set up and interact with the Gemma model, create an Angular application, and build a real-time chat interface with ease, using the power of Kendo UI for Angular Conversational UI. Open up new possibilities and start building your next AI-driven app today!

- Source code: https://github.com/danywalls/run-local-gemma

Don’t forget: Kendo UI for Angular comes with a free 30-day trial!


Dany Paredes | Sciencx (2024-07-17T15:10:57+00:00) Interact with Local LLMs with Ollama, Gemma and Kendo UI for Angular. Retrieved from https://www.scien.cx/2024/07/17/interact-with-local-llms-with-ollama-gemma-and-kendo-ui-for-angular/
